The evolution of serverless computing has traditionally been characterized by its stateless nature, excelling in scenarios where transient computations were sufficient. However, the increasing complexity of modern applications necessitates persistent state, driving a significant paradigm shift in serverless architecture. This transition presents both opportunities and challenges, demanding innovative solutions to manage data consistency, fault tolerance, and performance in a distributed environment.

This document explores the intricacies of how is serverless evolving for stateful workloads. It delves into the technical hurdles, explores various state management strategies, and examines the role of serverless databases, in-memory caching, and workflow engines. Furthermore, it investigates the impact of event-driven architectures and the utilization of frameworks and tools, concluding with a discussion of future trends and their potential impact on the landscape of stateful serverless applications.

The Shift Towards Stateful Serverless

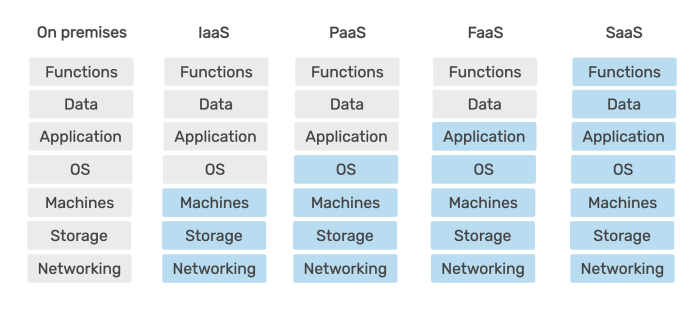

The serverless computing paradigm, traditionally associated with stateless functions, is undergoing a significant transformation. This evolution is driven by the increasing demand for applications that require persistent state, such as those involving databases, session management, and complex data processing. While serverless architectures offer benefits like automatic scaling, reduced operational overhead, and cost optimization, their initial design focused primarily on stateless workloads.

The current landscape witnesses a growing trend toward serverless platforms that effectively handle stateful applications.The limitations of the original stateless serverless model became apparent as developers sought to build more sophisticated applications. Persistent data storage and state management became critical bottlenecks. The lack of native support for stateful operations meant that developers had to rely on external services, increasing complexity, latency, and cost.

This shift is a direct response to the growing needs of modern applications.

Traditional Stateless Serverless Model

The initial serverless model primarily centered around stateless functions, meaning that each function invocation was independent and did not retain any state from previous invocations. This design facilitated horizontal scaling and simplified deployment, as each function instance could handle requests without requiring persistent local storage.

- Function Invocation: Each function execution operates in isolation. It receives input, processes it, and returns an output without storing any information about previous executions.

- Statelessness Advantages: This stateless nature allows for easy scaling. The platform can launch multiple instances of a function to handle a high volume of requests concurrently.

- External Dependency: Any stateful operations, such as data storage or session management, are delegated to external services like databases or caching systems.

- Typical Use Cases: The stateless model excels in scenarios such as processing HTTP requests, image resizing, and event-driven workflows where data is not retained across function invocations.

Limitations of Stateless Serverless for Stateful Applications

The stateless nature of the traditional serverless model poses significant challenges for applications requiring persistent state. The need to interact with external services for state management introduces complexity, performance overhead, and increased costs. These limitations highlight the necessity for serverless platforms to evolve and embrace stateful capabilities.

- Increased Latency: Relying on external services for data storage and retrieval adds latency to operations. Network calls to databases or other services consume time, affecting the overall application performance.

- Complex Architectures: Managing state externally requires developers to design more complex architectures. This often involves handling transactions, data consistency, and service interactions, increasing the development effort.

- Higher Costs: Frequent interactions with external services, such as databases or caching systems, can lead to increased costs, especially for applications with high traffic volumes.

- Operational Overhead: Managing external stateful services adds to the operational burden. This includes tasks like monitoring, scaling, and ensuring data durability.

- Limited Local Context: Without local storage, functions lack a direct context for operations. This limits the ability to perform tasks that require persistent data or session information.

Drivers Behind the Evolution of Serverless to Accommodate Stateful Workloads

The evolution of serverless to support stateful workloads is driven by several factors, including the growing demand for complex applications, the desire to simplify development, and the need to optimize costs and performance. These drivers are shaping the future of serverless computing.

- Growing Application Complexity: Modern applications often require persistent state to manage user sessions, store data, and process complex operations. This trend necessitates serverless platforms that can natively handle stateful workloads.

- Simplified Development: Developers seek to reduce the complexity of building and deploying applications. Serverless platforms that provide built-in state management capabilities simplify development by eliminating the need to manage external services.

- Cost Optimization: While interacting with external services for state management can be costly, native stateful serverless features can reduce costs by optimizing data storage and retrieval.

- Performance Improvement: By integrating state management directly into the serverless platform, applications can achieve improved performance by reducing latency and optimizing data access.

- Increased Adoption: The overall adoption of serverless is growing. The evolution towards stateful capabilities is crucial to expand the range of applications that can benefit from serverless architecture.

Challenges in Stateful Serverless Architectures

Implementing stateful serverless applications presents a complex set of technical hurdles. These challenges stem from the fundamental stateless nature of serverless functions and the distributed, ephemeral environments in which they operate. Successfully navigating these complexities is crucial for building robust, performant, and reliable stateful serverless systems. The following sections detail the key areas of difficulty.

Primary Technical Hurdles in Implementing Stateful Serverless Applications

Several significant technical obstacles hinder the straightforward implementation of stateful serverless architectures. Addressing these challenges requires careful consideration of architectural design and the selection of appropriate technologies.

- State Management Complexity: Serverless functions are inherently stateless, meaning they don’t retain any information about past invocations. Managing state therefore necessitates external storage mechanisms. This introduces complexity in terms of data persistence, retrieval, and consistency. Developers must choose appropriate storage solutions (databases, caches, etc.) and implement strategies for data access and synchronization. For example, choosing a database with strong consistency guarantees, like a relational database, versus a more eventually consistent NoSQL database, has significant implications for the types of applications that can be supported.

- Orchestration and Coordination: Stateful applications often involve multiple functions and services that must interact and coordinate their activities. Orchestrating these interactions, ensuring proper data flow, and managing dependencies can be intricate. Workflow engines or service meshes are frequently employed to handle these complexities, but they add overhead and require careful configuration. The selection of an appropriate orchestration tool depends heavily on the complexity of the application’s workflows; simple state transitions may be handled by basic state machines, while complex business processes may necessitate sophisticated workflow engines.

- Cold Starts: Serverless functions can experience “cold starts,” where the function’s execution environment needs to be initialized, resulting in latency. For stateful applications, this can be especially problematic if the function needs to access state stored in an external service during the cold start. Strategies like function pre-warming or optimizing code for faster initialization are essential to mitigate this issue.

For instance, if a function accesses a large cache, cold starts can be significantly delayed while the cache is loaded into memory.

- Debugging and Monitoring: Debugging and monitoring stateful serverless applications can be more challenging than debugging stateless applications. The distributed nature of the architecture, combined with the ephemeral nature of function instances, makes it difficult to trace the flow of data and identify performance bottlenecks. Robust logging, tracing, and monitoring tools are essential to provide visibility into the application’s behavior. Effective monitoring often involves correlating logs from multiple functions and external services to reconstruct the application’s state and diagnose issues.

Impact of State Management on Latency and Performance

State management significantly impacts the latency and overall performance of serverless applications. The choices made in terms of data storage, access patterns, and consistency models directly influence the speed and responsiveness of the system.

- External Data Access: Accessing state stored in external services (databases, caches, etc.) introduces network latency. The farther the data is from the function’s execution environment, the greater the latency. Developers must carefully consider the geographic location of data centers and choose data storage solutions that minimize network hops. For example, deploying a serverless function and its associated database in the same cloud region can significantly reduce latency compared to accessing a database in a different region.

- Data Serialization and Deserialization: Data must often be serialized before being stored and deserialized when retrieved. The overhead associated with these operations can impact performance, especially when dealing with large or complex data structures. Optimizing the serialization/deserialization process is critical. Choosing efficient data formats like Protocol Buffers or Avro can reduce overhead compared to using JSON.

- Consistency Considerations: Strong consistency guarantees (e.g., ACID properties in relational databases) often come with a performance cost. Transactions, locks, and other mechanisms used to maintain consistency can introduce latency. Developers must carefully weigh the trade-offs between consistency and performance and choose the appropriate consistency model for their application. For example, an application that requires strict ordering of financial transactions will likely need to prioritize strong consistency, even if it means accepting higher latency.

- Caching Strategies: Caching frequently accessed data can significantly improve performance by reducing the number of requests to external storage. However, implementing effective caching strategies requires careful consideration of cache invalidation, cache size, and cache consistency. An improperly configured cache can lead to stale data and inconsistent application behavior. A good example is using a distributed cache like Redis to store session data, reducing the load on a database.

Complexities of Data Consistency and Fault Tolerance in a Stateful Environment

Ensuring data consistency and fault tolerance are critical concerns in stateful serverless architectures. The distributed and ephemeral nature of these systems introduces challenges in maintaining data integrity and handling failures.

- Data Consistency Models: Choosing the right consistency model is crucial. Strong consistency guarantees provide the highest level of data integrity but can impact performance and availability. Eventual consistency offers better performance and availability but may lead to temporary inconsistencies. The choice depends on the application’s requirements. For instance, a shopping cart application might tolerate eventual consistency for item availability, but a financial transaction system requires strong consistency.

- Transaction Management: Transactions are often required to ensure data consistency across multiple operations. Implementing transactions in a serverless environment can be complex, especially when dealing with distributed data stores. Developers need to carefully consider the atomicity, consistency, isolation, and durability (ACID) properties of transactions. Serverless platforms often provide tools or services to facilitate transaction management, such as distributed transaction coordinators.

- Fault Tolerance Strategies: Serverless functions can fail due to various reasons, including infrastructure failures, code errors, and resource limitations. Implementing fault tolerance requires strategies such as retries, circuit breakers, and data replication. Retries can help to overcome transient failures, while circuit breakers can prevent cascading failures by isolating failing services. Data replication ensures data availability in the event of a single point of failure.

For example, replicating data across multiple availability zones within a cloud region can significantly improve fault tolerance.

- Idempotency: Idempotency is the property of an operation that can be executed multiple times without changing the outcome beyond the initial execution. Ensuring idempotency is critical in serverless environments to handle retries and prevent duplicate processing. Developers can implement idempotency using techniques such as unique request identifiers, state tracking, and optimistic locking. For example, a payment processing function should be idempotent to prevent multiple charges if a request is retried due to a network issue.

State Management Solutions for Serverless

Serverless architectures, by their ephemeral nature, present unique challenges for state management. The statelessness of individual function executions necessitates external mechanisms to persist and manage data across invocations. This section explores various strategies employed to address this challenge, analyzing their respective strengths, weaknesses, and practical applications within a serverless context.

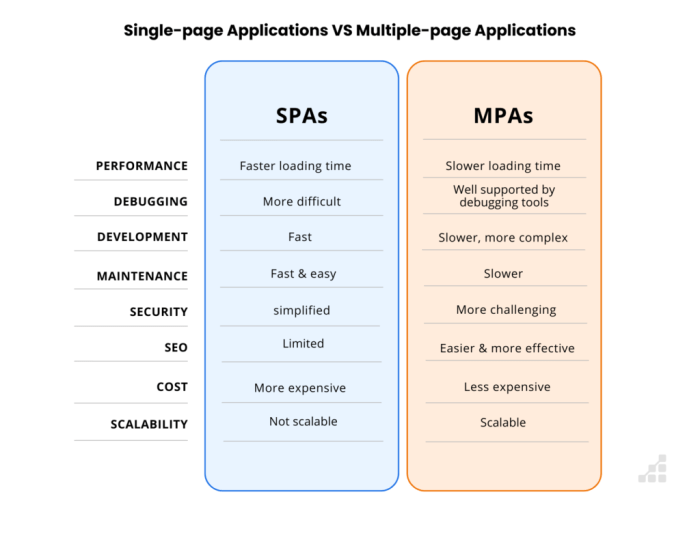

State Management Solutions for Serverless: Comparison

Effective state management is crucial for building complex and reliable serverless applications. Several strategies exist, each with its own trade-offs regarding performance, cost, and complexity. The following table provides a comparative analysis of these approaches.

| Strategy | Pros | Cons | Use Cases |

|---|---|---|---|

| Databases (e.g., Relational, NoSQL) |

|

|

|

| In-Memory Caches (e.g., Redis, Memcached) |

|

|

|

| External Services (e.g., Event Streams, Object Storage) |

|

|

|

Integration of State Management Solutions within Serverless Functions

Serverless functions interact with state management solutions through various mechanisms. These interactions are often facilitated by SDKs (Software Development Kits) provided by cloud providers or third-party services. The integration approach depends on the chosen state management strategy.

- Databases: Functions use database client libraries (e.g., AWS SDK for DynamoDB, PostgreSQL drivers) to connect to and interact with databases. These libraries provide methods for performing CRUD (Create, Read, Update, Delete) operations, executing queries, and managing transactions. For example, a function could use the AWS SDK for DynamoDB to read user profile data from a DynamoDB table.

- In-Memory Caches: Functions utilize cache client libraries (e.g., Redis clients) to connect to and interact with in-memory caches. These libraries offer methods for setting, getting, and deleting data from the cache. A function might cache the results of an expensive database query in Redis to reduce database load and improve response times.

- External Services: Functions interact with external services through their respective APIs or SDKs. For example, a function might use the AWS SDK for S3 to upload a file to Amazon S3 or use a message queue client to publish a message to an event stream. Functions typically interact with these services using RESTful APIs or client libraries.

The choice of state management solution and its integration approach significantly influences the performance, cost, and complexity of a serverless application. Careful consideration of these factors is essential for building robust and scalable serverless systems. For example, consider an e-commerce application. User profile data might be stored in a relational database, frequently accessed product details could be cached in Redis, and order processing events could be handled by an event stream like Amazon Kinesis.

This layered approach balances performance, cost, and data consistency requirements.

Serverless Databases and Stateful Workloads

Serverless databases are fundamentally changing how stateful applications are built and deployed. They offer a compelling alternative to traditional database systems by abstracting away the complexities of infrastructure management, scaling, and operational overhead. This shift is particularly significant for serverless architectures, as it provides a robust and efficient way to manage persistent data, which is essential for most stateful workloads.

Enabling Stateful Applications with Serverless Databases

Serverless databases, such as Amazon DynamoDB, FaunaDB, and Google Cloud Firestore, are designed to seamlessly integrate with serverless compute platforms. These databases enable stateful applications by providing a fully managed data storage solution that automatically scales to handle varying workloads. They offer high availability, fault tolerance, and pay-per-use pricing models, making them an attractive option for a wide range of applications.

For example, a gaming platform could use DynamoDB to store player profiles, game scores, and session data, allowing the application to scale automatically based on the number of active users.

Database Features: Transactions, Consistency, and Indexing

Serverless databases provide crucial features for building reliable and performant stateful applications. These features address the critical requirements for data integrity, access speed, and query efficiency.

- Transactions: Many serverless databases support transactions, allowing developers to ensure data consistency across multiple operations. Transactions guarantee that either all operations within a transaction succeed, or none of them do, preventing data corruption. For example, in an e-commerce application, a transaction might be used to update inventory, create an order, and deduct funds from a customer’s account.

- Consistency Levels: Serverless databases offer various consistency levels, allowing developers to balance read performance with data consistency.

- Strong consistency ensures that all reads reflect the most recent write.

- Eventual consistency allows for faster reads but may return stale data for a short period.

Choosing the appropriate consistency level depends on the application’s requirements. Applications that require immediate data accuracy, such as financial transactions, typically opt for strong consistency, while applications that can tolerate some data lag, such as social media feeds, might use eventual consistency for improved performance.

- Indexing: Efficient indexing is crucial for fast data retrieval in serverless databases. Indexes allow developers to optimize query performance by creating pre-computed data structures that speed up data access. Serverless databases often provide various indexing options, including primary key indexes, secondary indexes, and global secondary indexes. The selection of appropriate indexes depends on the application’s query patterns.

Designing a Serverless Application with a Serverless Database: Steps

Designing a serverless application using a serverless database involves several key steps to ensure efficient data management and application performance. This process typically involves planning, implementation, and continuous optimization.

- Define Data Model: Begin by defining the data model, including the entities, attributes, and relationships. Consider the application’s data access patterns to optimize the schema for query performance. For example, if the application frequently queries users by email address, create an index on the email attribute.

- Choose a Serverless Database: Select a serverless database that aligns with the application’s requirements. Consider factors such as data model support, scalability, consistency levels, and pricing. DynamoDB is a good choice for applications that require high throughput and scalability, while FaunaDB excels in supporting complex relationships and advanced data modeling.

- Design Data Access Patterns: Determine how the application will interact with the database. Consider the read and write patterns, query requirements, and potential performance bottlenecks. Optimize the data access patterns to minimize latency and maximize throughput.

- Implement Data Access Logic: Write the code that interacts with the database. Use the database’s SDK or client library to perform CRUD (Create, Read, Update, Delete) operations. Implement error handling and data validation to ensure data integrity.

- Configure Database Settings: Configure database settings, such as consistency levels, indexing, and scaling parameters. Regularly monitor the database performance and adjust settings as needed to optimize performance and cost.

- Deploy and Test: Deploy the serverless application and test the data access functionality. Perform load testing to ensure that the database can handle the expected workload. Monitor the application’s performance and make adjustments as needed.

- Optimize and Monitor: Continuously monitor the application’s performance and database usage. Identify and address any performance bottlenecks. Optimize the data model, query patterns, and database settings to improve performance and reduce costs.

In-Memory Caching for Serverless State

In-memory caching represents a crucial strategy for optimizing the performance and cost-efficiency of stateful serverless applications. By storing frequently accessed data in fast, volatile memory, caching solutions significantly reduce latency and the load on underlying data stores. This section explores the role of in-memory caching, its benefits, and a typical data flow within a serverless architecture.

Role of In-Memory Caching Solutions

In-memory caching solutions, such as Redis and Memcached, serve as high-speed data stores that sit between the serverless functions and the primary data storage (e.g., databases). These caches hold frequently accessed data in RAM, enabling extremely rapid retrieval. This architecture dramatically improves response times, as accessing data from RAM is orders of magnitude faster than retrieving it from disk-based storage or network-dependent databases.

This approach is particularly beneficial in scenarios with high read-to-write ratios and where data access patterns are predictable.

Benefits of Caching in Terms of Performance and Cost

Caching offers significant advantages in terms of both performance and cost. By reducing the number of requests to the primary data store, caching minimizes the load on the database, leading to:

- Improved Performance: Data retrieval from in-memory caches typically takes milliseconds, significantly reducing latency compared to database queries. This results in faster application response times and a better user experience. For example, a content delivery network (CDN) uses caching to deliver content to users faster by storing it closer to them.

- Reduced Database Load: Fewer database requests translate to lower resource consumption on the database side. This can lead to cost savings, especially in pay-per-use cloud environments where database usage is directly tied to cost. Consider an e-commerce platform. Caching frequently accessed product details, such as prices and descriptions, reduces the number of database queries, leading to cost savings and improved performance during peak shopping seasons.

- Scalability: Caching can help to scale the application more effectively. By offloading read requests from the database, the system can handle a larger volume of traffic without requiring significant database upgrades.

Caching also helps reduce operational costs by:

- Optimizing Resource Utilization: By reducing the load on databases, caching helps to optimize the utilization of underlying resources. This is particularly important in serverless architectures where resources are often dynamically allocated.

- Cost Reduction: Minimizing database queries and resource consumption translates to lower operational costs, especially when utilizing cloud-based database services that charge based on usage.

Design of a Flow Diagram for Caching Data in a Serverless Architecture

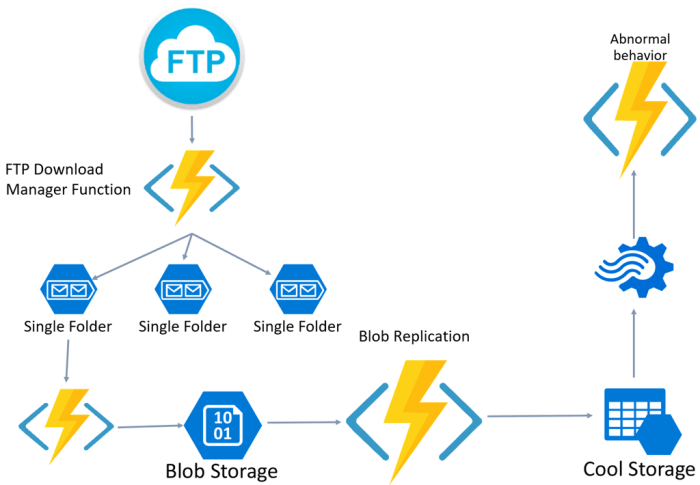

The following Artikels the typical flow of data within a serverless architecture employing in-memory caching:

Data Flow Description:

1. Client Request

A client (e.g., web browser, mobile app) sends a request to the serverless function.

2. Function Invocation

The serverless function is triggered by the request.

3. Cache Check

The function first checks the in-memory cache (e.g., Redis) for the requested data.

- Cache Hit: If the data is found in the cache, it’s retrieved and returned to the function, which then sends it to the client.

- Cache Miss: If the data is not in the cache, the function proceeds to the next step.

4. Data Retrieval from Database

The function queries the database to retrieve the required data.

5. Data Caching

The retrieved data is then stored in the in-memory cache. This ensures that subsequent requests for the same data can be served from the cache.

6. Data Return to Client

The function returns the data to the client.

Serverless Workflow Engines and State

Serverless workflow engines provide a powerful mechanism for orchestrating stateful tasks in serverless architectures. They offer a structured approach to managing complex processes that involve multiple steps, dependencies, and state transitions. By decoupling the orchestration logic from the individual functions, workflow engines simplify development, improve maintainability, and enhance the overall reliability of serverless applications.

Managing State and Orchestrating Stateful Tasks with Serverless Workflow Engines

Serverless workflow engines manage state and orchestrate stateful tasks through a combination of durable execution, state persistence, and event-driven transitions. They typically employ a state machine model, where each step in the workflow represents a state, and transitions between states are triggered by events or conditions.Workflow engines achieve state management through:

- Durable Execution: Workflow engines ensure that the execution of each step is durable. If a function fails, the engine automatically retries it, often with exponential backoff, until successful. This guarantees that the workflow progresses even in the face of transient failures.

- State Persistence: The state of the workflow, including input, output, and intermediate data, is persisted in a durable storage mechanism, such as a database or object storage. This allows the workflow to resume from where it left off, even if the execution environment is interrupted.

- Event-Driven Transitions: Workflow engines use events to trigger transitions between states. These events can be triggered by the successful completion of a function, a timer expiring, or an external event such as a message arriving in a queue.

- State Machine Definition: Workflows are defined as state machines, typically using a declarative language such as JSON or YAML. This definition specifies the states, transitions, and associated functions. For instance, in AWS Step Functions, the Amazon States Language (ASL) is used.

For example, consider an order processing workflow:

- Order Received: The workflow starts when an order is received (e.g., via an API Gateway).

- Payment Processing: A function is invoked to process the payment. The state of this step includes the order details and payment information.

- Inventory Check: A function checks the availability of items in inventory. The state includes the inventory status.

- Shipping: If inventory is available, a function initiates the shipping process. The state includes the shipping details and tracking information.

- Order Complete: Once shipped, the workflow marks the order as complete.

Each step in this workflow is a state, and the transitions between states are triggered by events such as successful payment processing, inventory availability, and successful shipment. The workflow engine manages the state of each step, retries failed functions, and ensures that the entire process completes reliably.

Benefits of Using Workflow Engines for Complex Stateful Processes

Employing workflow engines in serverless architectures offers several advantages for managing complex stateful processes. These benefits include improved reliability, enhanced observability, and simplified development.

- Improved Reliability and Fault Tolerance: Workflow engines provide built-in fault tolerance. They automatically retry failed functions and manage the state of the workflow, ensuring that the process continues even if individual functions fail. This contrasts with manual error handling and retry mechanisms, which can be complex and error-prone.

- Enhanced Observability and Debugging: Workflow engines offer comprehensive monitoring and logging capabilities. They provide a visual representation of the workflow execution, making it easier to track progress, identify bottlenecks, and debug issues. This significantly reduces the time required to troubleshoot and resolve problems.

- Simplified Development and Maintainability: Workflow engines abstract away the complexities of orchestrating stateful tasks. Developers can focus on writing the individual functions, while the workflow engine handles the orchestration, state management, and error handling. This results in cleaner, more maintainable code.

- Scalability and Concurrency: Workflow engines are designed to handle high volumes of concurrent requests. They automatically scale to meet demand, ensuring that the workflow can process a large number of requests without performance degradation.

- Version Control and Rollbacks: Workflow definitions can be version-controlled, enabling easy rollbacks to previous versions if necessary. This is crucial for managing changes to complex processes without disrupting existing operations.

For instance, consider a financial transaction processing system. Using a workflow engine allows for robust handling of transactions. If a payment processing function fails, the engine automatically retries it. If an inventory check fails, the engine can trigger an alert and automatically revert the transaction. This ensures data integrity and minimizes the impact of failures.

Architecture of a Serverless Workflow Engine

The architecture of a serverless workflow engine typically comprises several key components that work together to orchestrate stateful tasks. These components include a state machine engine, a task executor, a state persistence layer, and an event trigger system.

- State Machine Engine: This is the core component that interprets the workflow definition (e.g., the ASL in AWS Step Functions). It manages the state transitions, invokes the appropriate functions based on the current state, and handles error conditions.

- Task Executor: The task executor is responsible for invoking the individual functions defined in the workflow. It receives input from the state machine engine, invokes the function, and passes the output back to the engine.

- State Persistence Layer: This layer stores the state of the workflow, including the input, output, and intermediate data of each step. It provides durable storage and ensures that the workflow can resume from where it left off. Databases, object storage, or other persistent storage services are commonly used.

- Event Trigger System: The event trigger system is responsible for triggering state transitions based on events. This includes receiving events from external sources (e.g., message queues, API calls) and from the completion of functions within the workflow.

- Monitoring and Logging: A comprehensive monitoring and logging system is integrated to track the execution of workflows. This system provides metrics, logs, and visual representations of the workflow’s progress, enabling developers to monitor performance, debug issues, and optimize the workflow.

For example, in AWS Step Functions:

The State Machine Engine is the core component that orchestrates the workflow. The Task Executor invokes Lambda functions or other AWS services. The State Persistence Layer uses services like DynamoDB or S3 to store state. The Event Trigger System integrates with services like SQS, SNS, and API Gateway.

The interaction between these components ensures the reliable and efficient execution of stateful serverless workflows. The state machine engine controls the flow, the task executor runs the functions, the state persistence layer preserves the state, and the event trigger system drives the transitions.

Event-Driven Architectures and Stateful Serverless

The synergy between event-driven architectures and stateful serverless applications is a critical area of development, enabling complex, responsive systems. Event-driven designs excel at reacting to changes in real-time, while stateful serverless functions manage persistent data. This combination offers significant advantages in scalability, resilience, and operational efficiency, providing a robust foundation for modern application development.

Relationship Between Event-Driven Architectures and Stateful Serverless Applications

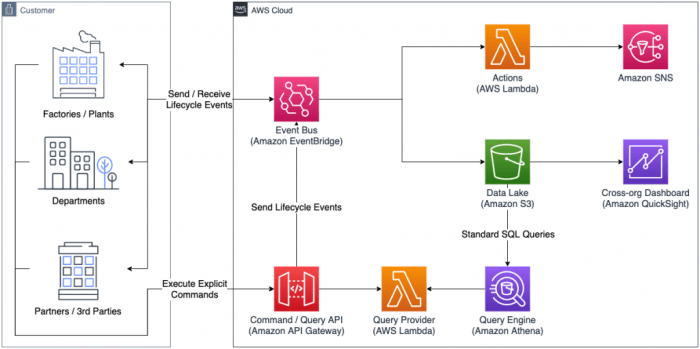

Event-driven architectures and stateful serverless applications share a complementary relationship. Events act as triggers, initiating the execution of serverless functions that manage and update state. The event bus serves as a central communication hub, decoupling components and enabling asynchronous processing. Stateful serverless functions, which maintain state across invocations, can then respond to these events by modifying data stored in databases, caches, or other stateful resources.

This architecture allows for highly scalable and resilient systems, as individual functions can be scaled independently based on event volume, and failures in one function do not necessarily impact others.

How Events Trigger State Changes and Updates

Events initiate state changes and updates within stateful serverless applications through a defined process. When an event occurs, it is published to an event bus, such as AWS EventBridge, Azure Event Grid, or Google Cloud Pub/Sub. Serverless functions are subscribed to these events. Upon receiving an event, a function is triggered, and its execution is governed by the event’s payload and the function’s logic.

The function then accesses and modifies the relevant stateful resources, such as databases or caches. The outcome of this modification is the updated state, reflecting the impact of the event. The entire process, from event generation to state update, must be designed to handle concurrency, ensuring data consistency and integrity, particularly when multiple events may trigger simultaneous updates to the same stateful resources.

Event-Driven Pattern for an E-commerce Shopping Cart

An e-commerce shopping cart serves as a prime example of a stateful use case benefiting from an event-driven architecture. Consider the following scenario:

When a customer adds an item to their cart, an `ItemAddedToCart` event is published.

A serverless function, `UpdateCart`, subscribes to this event.

The `UpdateCart` function retrieves the customer’s cart from a database (e.g., DynamoDB).

It updates the cart with the new item and calculates the new total.

The updated cart is then saved back to the database.

A `CartUpdated` event is then published, triggering other functions, like a `SendCartSummary` function that might send a notification to the user.

This pattern demonstrates how events trigger state changes, allowing the shopping cart to be updated asynchronously and efficiently. This design allows the system to scale independently, with the `UpdateCart` function handling many simultaneous additions without blocking the customer’s interaction. It ensures that all parts of the system remain consistent and responsive.

Frameworks and Tools for Stateful Serverless Development

The evolution of stateful serverless applications has spurred the development of specialized frameworks and tools, designed to streamline the complexities of building, deploying, and managing these applications. These tools offer abstractions over the underlying infrastructure, simplifying tasks such as state management, resource provisioning, and application monitoring. Their adoption significantly accelerates development cycles and reduces operational overhead.

Key Frameworks and Tools for Stateful Serverless Applications

Several key frameworks and tools have emerged as essential components for developing stateful serverless applications. These tools provide various functionalities, from infrastructure-as-code to serverless function management, enhancing developer productivity and operational efficiency.

- Serverless Framework: This is a popular open-source framework that simplifies the deployment and management of serverless applications across multiple cloud providers. It utilizes a YAML-based configuration file (serverless.yml) to define the application’s resources, functions, and event triggers. The Serverless Framework abstracts away the complexities of provisioning infrastructure and allows developers to focus on writing code.

- Terraform: Terraform, developed by HashiCorp, is an infrastructure-as-code (IaC) tool that allows developers to define and manage infrastructure resources using declarative configuration files. While not exclusively for serverless, Terraform can provision and manage the necessary infrastructure components for stateful serverless applications, such as databases, caching services, and message queues. It supports multiple cloud providers, enabling cross-cloud deployments.

- AWS Cloud Development Kit (CDK): The AWS CDK allows developers to define cloud infrastructure using familiar programming languages like TypeScript, Python, and Java. It provides a higher-level abstraction compared to Terraform, enabling developers to write code that represents the desired infrastructure state. The CDK translates this code into CloudFormation templates, which are then used to provision the resources on AWS.

- Pulumi: Pulumi is another infrastructure-as-code tool that supports multiple cloud providers. It allows developers to define infrastructure using general-purpose programming languages like Python, JavaScript, Go, and C#. Pulumi offers similar benefits to Terraform and CDK, enabling developers to manage infrastructure programmatically.

Simplification of Development and Deployment

These frameworks and tools contribute significantly to simplifying the development and deployment processes for stateful serverless applications. They provide several key advantages that enhance developer productivity and reduce operational complexity.

- Infrastructure as Code: IaC tools like Terraform, CDK, and Pulumi allow developers to define infrastructure resources as code. This approach offers benefits such as version control, reusability, and automated deployments. Developers can easily replicate environments and ensure consistency across different stages of the development lifecycle.

- Automated Deployment: These tools automate the deployment process, reducing the manual effort required to provision and configure resources. Developers can define the desired state of the application and let the tool handle the underlying infrastructure management.

- Abstraction of Complexity: Frameworks like the Serverless Framework abstract away the complexities of managing serverless functions, event triggers, and resource provisioning. This allows developers to focus on writing business logic rather than dealing with the underlying infrastructure details.

- Cross-Cloud Compatibility: Tools like Terraform and Pulumi support multiple cloud providers, enabling developers to deploy applications across different cloud environments. This provides flexibility and reduces vendor lock-in.

- Simplified State Management: Some frameworks and tools provide built-in support for state management, such as managing database connections, caching, and session data. This simplifies the development of stateful serverless applications.

Demonstration of Stateful Function Deployment with the Serverless Framework

The Serverless Framework can be employed to deploy a simple stateful function. This example illustrates the basic steps involved in deploying a function that interacts with a simple in-memory store.

First, the `serverless.yml` file is created, defining the function, its event triggers (e.g., an HTTP endpoint), and the necessary permissions. The function code, written in a language such as Node.js, would interact with an in-memory store, perhaps using a library like `node-cache`. The configuration would specify the function’s handler, the event it responds to (e.g., an HTTP GET request), and any environment variables needed by the function.

A simplified `serverless.yml` file might look like this:

service: stateful-function-exampleprovider: name: aws runtime: nodejs18.x region: us-east-1 iam: role: statements: -Effect: "Allow" Action: -"dynamodb:*" # Example permission, not required for in-memory store Resource: "*" # Example resource, not required for in-memory storefunctions: getState: handler: handler.getState events: -http: method: get path: /state setState: handler: handler.setState events: -http: method: post path: /state

The corresponding `handler.js` file (example):

const NodeCache = require( "node-cache" );const cache = new NodeCache();exports.getState = async (event) => const value = cache.get("myKey"); return statusCode: 200, body: JSON.stringify( value: value || null ), ;;exports.setState = async (event) => const body = JSON.parse(event.body); cache.set("myKey", body.value); return statusCode: 200, body: JSON.stringify( message: "State updated" ), ;; To deploy the function, the developer would run the `serverless deploy` command in the terminal.

The Serverless Framework will then handle the provisioning of the necessary AWS resources (e.g., Lambda functions, API Gateway endpoints). Once deployed, the function can be invoked via the HTTP endpoints defined in the `serverless.yml` file. Subsequent calls to the `/state` endpoint would retrieve and modify the state stored in the in-memory cache. While this example uses an in-memory cache, demonstrating a basic stateful pattern, more robust implementations would integrate external state management solutions like databases or caching services, as discussed earlier.

Future Trends and Innovations

The landscape of stateful serverless is poised for significant evolution, driven by emerging technologies and shifting architectural paradigms. These innovations promise to reshape how state is managed, applications are deployed, and resources are utilized. Understanding these trends is crucial for anticipating the future of stateful serverless and capitalizing on its potential.

Emerging Trends in Stateful Serverless

Several key trends are reshaping the future of stateful serverless computing, offering new opportunities and challenges. These trends include edge computing, WebAssembly (Wasm), and the increasing adoption of specialized hardware.Edge computing is pushing the boundaries of serverless by enabling the execution of code closer to the data source or end-user. This proximity reduces latency and enhances responsiveness, particularly for applications requiring real-time processing or geographically distributed data.

This trend is particularly relevant for Internet of Things (IoT) applications, content delivery networks (CDNs), and gaming. For example, consider a smart city application that uses serverless functions to process sensor data collected from traffic lights. By deploying these functions at the edge, the application can react instantaneously to traffic conditions, optimizing traffic flow in real-time.WebAssembly (Wasm) is emerging as a powerful technology for serverless computing, enabling the execution of code written in various languages within a sandboxed environment.

Wasm offers performance advantages, enhanced security, and portability across different platforms. Wasm’s ability to run efficiently in resource-constrained environments makes it well-suited for edge computing. For instance, a video processing application could leverage Wasm to transcode video streams at the edge, reducing bandwidth consumption and improving the user experience.The increasing adoption of specialized hardware, such as GPUs and FPGAs, is also influencing the evolution of stateful serverless.

These hardware accelerators can significantly improve the performance of computationally intensive tasks, such as machine learning and image processing. Serverless platforms are increasingly integrating support for these accelerators, enabling developers to build high-performance applications without the need for complex infrastructure management. For example, a serverless application designed for fraud detection could leverage GPUs to accelerate the analysis of large datasets, improving the accuracy and speed of fraud detection.

Potential Impact of These Trends on State Management

The adoption of edge computing, WebAssembly, and specialized hardware has significant implications for state management in serverless architectures. These trends necessitate new approaches to data storage, synchronization, and consistency.Edge computing introduces challenges related to data locality and consistency. Managing state across distributed edge nodes requires careful consideration of data replication, conflict resolution, and eventual consistency models. For instance, in a gaming application, player data needs to be synchronized across multiple edge locations to ensure a seamless gaming experience.

This requires the use of distributed databases or state management solutions designed for edge environments.WebAssembly’s sandboxed environment necessitates a different approach to state management compared to traditional serverless functions. Wasm modules typically do not have direct access to the underlying operating system or shared memory. Therefore, state management solutions need to provide mechanisms for securely accessing and manipulating state within the Wasm environment.

This may involve the use of dedicated state stores or APIs.Specialized hardware introduces new requirements for state management. Applications utilizing GPUs or FPGAs often require access to large amounts of data. Efficiently managing and transferring data between the serverless function and the hardware accelerator is critical for performance. This necessitates the use of optimized data transfer mechanisms and state management solutions that can handle large datasets.

Future Scenario: Fully Integrated Stateful Serverless Applications

Envision a future where stateful serverless applications are fully integrated with emerging technologies, creating a seamless and responsive user experience. Consider a smart retail environment where the interaction between physical and digital worlds is nearly invisible.The scenario unfolds in a large retail store, equipped with a network of sensors, cameras, and edge devices. Customers interact with the environment using their smartphones and smart carts.* Edge Computing and Real-time Personalization: As a customer enters the store, edge devices located near the entrance recognize their presence via Bluetooth beacons.

A serverless function, deployed on an edge server, retrieves the customer’s profile from a distributed state store. The profile contains preferences, purchase history, and real-time location within the store. This data allows the system to personalize the customer’s shopping experience.

WebAssembly and Dynamic Content Generation

The edge server uses WebAssembly modules to generate dynamic content for the customer’s smartphone. For example, as the customer approaches the grocery aisle, a Wasm module renders a personalized shopping list based on their past purchases and current promotions.

Specialized Hardware and Predictive Analytics

Cameras throughout the store capture video footage, which is processed by serverless functions that leverage GPUs. These functions perform object detection, identify customer behavior, and predict demand. The results are fed back into the state store, which is used to optimize product placement, manage inventory, and adjust pricing in real-time.

Distributed State Management

A distributed state management solution, such as a globally distributed database, ensures data consistency across the entire system. Updates to the customer’s profile, product inventory, and sales data are synchronized across all edge locations and backend services.

Seamless User Experience

The customer navigates the store with the aid of their smartphone, receiving personalized recommendations, real-time promotions, and automated checkout options. The entire experience is powered by stateful serverless applications, seamlessly integrating edge computing, WebAssembly, specialized hardware, and distributed state management.In this future scenario, stateful serverless provides the agility, scalability, and cost-effectiveness needed to support a dynamic and personalized retail experience.

The integration of edge computing, WebAssembly, and specialized hardware enables real-time processing, improved performance, and enhanced user engagement. The distributed state management solution ensures data consistency and reliability across the entire system. This future scenario illustrates the transformative potential of stateful serverless and its ability to create intelligent, responsive, and highly personalized applications.

Final Wrap-Up

In conclusion, the progression of how is serverless evolving for stateful workloads marks a pivotal moment in cloud computing. Addressing the challenges of state management through serverless databases, caching, and workflow engines is paramount. As technologies like edge computing and WebAssembly continue to emerge, the future of stateful serverless applications promises enhanced scalability, performance, and responsiveness, ushering in a new era of sophisticated, event-driven architectures capable of handling complex, data-intensive operations with unprecedented efficiency.

Questions Often Asked

What are the primary cost considerations when implementing stateful serverless applications?

Cost considerations include database usage, in-memory cache size and operation, workflow engine execution time, and the number of function invocations. Efficient resource utilization and careful selection of services are crucial to minimize expenses.

How does the choice of programming language impact stateful serverless application development?

Programming language choice influences performance, available libraries for state management (e.g., database connectors, caching clients), and the ecosystem of frameworks and tools. Languages like Python, Node.js, and Java are commonly used, each with its strengths and weaknesses.

What are the security implications of stateful serverless architectures?

Security concerns include protecting sensitive data stored in databases or caches, securing access to these resources, and preventing unauthorized function invocations. Proper authentication, authorization, and encryption are critical.

How can developers monitor and debug stateful serverless applications?

Monitoring and debugging involve logging function executions, tracking database operations, and analyzing cache performance. Cloud providers offer tools for monitoring, logging, and tracing, enabling developers to identify and resolve issues effectively.