Selecting the appropriate database for your cloud architecture is crucial for application performance, scalability, and cost-effectiveness. This comprehensive guide explores the key factors to consider, from understanding your application’s needs to evaluating cloud providers’ offerings. We’ll delve into various database types, performance benchmarks, security considerations, and migration strategies, ultimately equipping you with the knowledge to make informed decisions.

Choosing the right database is a critical step in building a robust and efficient cloud application. Understanding your data volume, access patterns, and scalability requirements is paramount to avoiding costly overprovisioning or performance bottlenecks. This guide will walk you through the process, helping you select a database that perfectly aligns with your cloud architecture’s needs.

Introduction to Database Selection

Selecting the appropriate database is crucial for the success of any cloud architecture. A poorly chosen database can lead to performance bottlenecks, scalability issues, and increased operational costs. The right database choice directly impacts application performance, data integrity, and the overall efficiency of the cloud-based system. Careful consideration of various factors, including data characteristics, anticipated workload, and budgetary constraints, is paramount in this decision-making process.

The selection process is influenced by numerous factors, including the nature of the data being stored, the anticipated volume and velocity of data, the required query complexity, and the specific needs of the application. Scalability, security, and cost-effectiveness are equally important considerations. Cloud-specific factors, such as deployment models and service-level agreements (SLAs), also play a significant role.

Factors Influencing Database Choice

The choice of a database is multifaceted and hinges on several key factors. Data characteristics, application needs, and cloud deployment model all play a critical role. Understanding these factors allows for a more informed decision regarding the optimal database solution.

- Data Characteristics: The structure and type of data are primary considerations. Structured data, with predefined schemas, often benefits from relational databases, while unstructured or semi-structured data might be better suited for NoSQL databases.

- Application Needs: The application’s query patterns, data manipulation requirements, and transaction needs significantly impact the database selection. For instance, high-volume read operations might favor a different database than those focused on complex queries.

- Cloud Deployment Model: The chosen cloud deployment model (e.g., IaaS, PaaS, SaaS) and associated services influence the database deployment and management strategy. Considerations include vendor lock-in, cost, and the need for managed services.

Key Considerations for Cloud-Based Database Deployments

Cloud-based database deployments demand specific attention to security, scalability, and operational efficiency.

- Security: Protecting sensitive data in a cloud environment requires robust security measures. These include encryption at rest and in transit, access controls, and regular security audits. Data encryption and access control mechanisms should be implemented for both the database and the surrounding infrastructure.

- Scalability: Cloud databases should be able to adapt to fluctuating workloads. The ability to scale horizontally or vertically, to adjust storage capacity and processing power, is crucial for ensuring optimal performance and responsiveness.

- Operational Efficiency: Automated management tools, monitoring, and maintenance procedures are crucial for optimizing database operations in a cloud environment. Simplified management processes, automatic scaling, and built-in monitoring tools are critical.

Types of Databases Suitable for Cloud Environments

Various database types cater to different needs within cloud architectures.

- Relational Databases (SQL): These databases organize data into structured tables with defined relationships. Examples include MySQL, PostgreSQL, and Oracle Database. They excel at handling structured data and complex queries. They are commonly used for applications requiring ACID properties (Atomicity, Consistency, Isolation, Durability).

- NoSQL Databases: These databases offer flexible schemas and can handle unstructured or semi-structured data. Popular choices include MongoDB, Cassandra, and Redis. They are typically easier to scale horizontally than relational databases, which suits large datasets and high read/write throughput, though this often comes with weaker consistency guarantees or more limited query capabilities.

Database Comparison

The table below highlights key differences between relational and NoSQL databases, focusing on use cases, scalability, and costs.

| Feature | Relational Database | NoSQL Database |
|---|---|---|
| Use Cases | Transaction-heavy applications, complex queries, structured data | Big data analytics, high-volume read/write operations, unstructured data |
| Scalability | Primarily vertical scaling (adding resources to a single server); horizontal scaling is possible with read replicas or sharding | Horizontal scaling (adding more servers to the cluster) |
| Costs | Can be higher for large-scale deployments | Often more cost-effective for large-scale deployments due to horizontal scaling |

Evaluating Cloud Database Services

Selecting the appropriate cloud database service is crucial for a robust and scalable cloud architecture. Careful consideration of various factors, including provider-specific characteristics, pricing models, performance benchmarks, and security features, is essential to make an informed decision. This evaluation phase allows for a tailored selection that aligns with specific application needs and budget constraints.

A comprehensive evaluation of cloud database services necessitates a thorough understanding of the unique strengths and limitations of each provider. This includes scrutinizing the performance characteristics, pricing structures, and security measures offered by Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP). Understanding these nuances will enable a more strategic and informed choice, ultimately optimizing the overall cloud architecture.

Key Characteristics of Cloud Database Providers

Different cloud providers offer various database services with unique strengths and features. Understanding these differences is critical in aligning the chosen database with specific application requirements. AWS, Azure, and GCP each boast distinct strengths in their respective offerings. For instance, AWS is known for its extensive ecosystem of services, while Azure emphasizes integration with its broader platform, and GCP excels in its focus on data analytics capabilities.

- Amazon Web Services (AWS): AWS offers a wide array of relational and NoSQL databases, including Amazon Aurora, Amazon RDS, and DynamoDB. AWS’s vast ecosystem often translates to seamless integration with other AWS services.

- Microsoft Azure: Azure provides robust relational databases (SQL Database) and NoSQL databases (Cosmos DB). Azure’s strengths lie in its strong integration with other Microsoft services, making it a suitable choice for enterprises already invested in the Microsoft ecosystem.

- Google Cloud Platform (GCP): GCP’s database offerings include Cloud SQL (for relational databases) and Cloud Spanner (for globally distributed data). GCP’s emphasis on data analytics and machine learning often makes it an attractive option for data-intensive applications.

Pricing Models for Database Services

Understanding the pricing models is paramount for effective budgeting. Different providers employ varying pricing structures, which can significantly impact the total cost of ownership. It’s essential to analyze the pricing components carefully to ensure cost-effectiveness.

- AWS: AWS pricing models often involve per-usage charges for compute, storage, and data transfer, with varying tiers for different service levels. Reserved instances and other commitment options can provide significant cost savings for consistent usage.

- Azure: Azure’s pricing structure is comparable to AWS, emphasizing usage-based charges. Azure also offers reserved instances and other commitment options for cost optimization.

- GCP: GCP’s pricing structure is characterized by a blend of usage-based charges and pre-paid options. GCP’s pricing often includes a focus on granular control over resources to achieve cost efficiency.
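The pricing components described above (compute, storage, data transfer, and commitment discounts) can be combined into a rough monthly estimate. The sketch below is illustrative only: the unit prices, the 30% discount, and the names `DbPricing` and `monthly_cost` are assumptions made for the example, not figures or APIs from any provider.

```python
from dataclasses import dataclass

HOURS_PER_MONTH = 730  # common billing approximation (8760 hours / 12)


@dataclass
class DbPricing:
    """Hypothetical unit prices; substitute figures from your provider's price list."""
    instance_per_hour: float     # compute, USD per instance-hour
    storage_per_gb_month: float  # USD per GB-month
    egress_per_gb: float         # USD per GB transferred out


def monthly_cost(pricing, instances, storage_gb, egress_gb, commitment_discount=0.0):
    """Estimate a monthly bill. Assumes the discount applies to compute only."""
    compute = pricing.instance_per_hour * HOURS_PER_MONTH * instances
    compute *= 1 - commitment_discount
    storage = pricing.storage_per_gb_month * storage_gb
    transfer = pricing.egress_per_gb * egress_gb
    return round(compute + storage + transfer, 2)


# Made-up prices, used only to show the arithmetic.
pricing = DbPricing(instance_per_hour=0.20, storage_per_gb_month=0.10, egress_per_gb=0.09)
on_demand = monthly_cost(pricing, instances=2, storage_gb=500, egress_gb=100)
committed = monthly_cost(pricing, instances=2, storage_gb=500, egress_gb=100,
                         commitment_discount=0.30)
print(f"on-demand: ${on_demand}, with commitment: ${committed}")
```

Running the same function with each provider's published prices gives a like-for-like comparison, provided the workload assumptions (instance count, storage, egress) are held constant.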

Performance Benchmarks for Cloud Database Solutions

Performance benchmarks are crucial for assessing the suitability of a database for specific application needs. Thorough analysis of benchmarks is essential to determine optimal throughput and latency for the chosen application.

- AWS: Performance of AWS databases often depends on factors such as the chosen database engine, instance type, and configuration. Extensive benchmarks and documentation are typically available from AWS for specific solutions.

- Azure: Azure’s database performance is contingent on similar factors as AWS, including the selected database, instance type, and configurations. Detailed performance metrics are often published by Azure for each database solution.

- GCP: GCP’s database performance, like other providers, is dependent on specific configuration choices. GCP’s documentation typically includes performance data and benchmarks for various database instances.

Security Features for Cloud Databases

Security is paramount when selecting a cloud database. A robust security posture is essential for protecting sensitive data. Providers offer various security features to safeguard data integrity and confidentiality.

- AWS: AWS offers a comprehensive suite of security features, including encryption at rest and in transit, access control, and security auditing tools. AWS also emphasizes compliance with various industry standards.

- Azure: Azure provides a broad range of security features, such as encryption, role-based access control (RBAC), and advanced threat protection mechanisms. Azure’s security offerings are designed to align with enterprise-grade security requirements.

- GCP: GCP’s security features include encryption, access control, and audit logging capabilities. GCP’s security architecture is built around industry best practices and compliance with security standards.

Comparative Analysis of Database Services

This table summarizes the key features, pricing, and security aspects of database services from each cloud provider.

| Feature | AWS | Azure | GCP |
|---|---|---|---|
| Database Types | Relational (Aurora, RDS), NoSQL (DynamoDB) | Relational (SQL Database), NoSQL (Cosmos DB) | Relational (Cloud SQL, Cloud Spanner), NoSQL (Firestore, Bigtable) |
| Pricing Model | Usage-based, Reserved Instances | Usage-based, Reserved Instances | Usage-based, Committed Use Discounts |
| Security Features | Encryption, Access Control, Auditing | Encryption, RBAC, Threat Protection | Encryption, Access Control, Audit Logging |

Understanding Database Requirements

Choosing the right database for your cloud architecture hinges on a thorough understanding of your application’s data needs. This involves analyzing data storage requirements, access patterns, and the importance of robust backup and recovery strategies. Careful consideration of these factors ensures optimal performance, scalability, and data integrity.

A well-defined understanding of your application’s data characteristics allows you to select a database system that aligns perfectly with its operational needs. This includes anticipating future growth and scalability demands.

Data Storage Needs

Understanding the diverse ways data is stored is crucial for database selection. Different data types and structures require different storage mechanisms. Structured data, such as customer information in a relational database, requires different storage compared to unstructured data, like user-generated content in a NoSQL database. This understanding guides the choice of a suitable database.

Data Volume and Velocity

The volume and velocity of data are critical performance considerations. A database designed for a small, static dataset will likely struggle with high data volumes and high-velocity inputs. Consider the expected growth of your data to ensure scalability. For example, a social media platform will experience massive data volume and velocity compared to a simple inventory management system.
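Expected growth can be turned into a concrete capacity estimate with simple compound-growth arithmetic. The sketch below assumes a constant monthly growth rate, which is a simplification; the function names and the sample figures are hypothetical.

```python
def projected_storage_gb(current_gb, monthly_growth_rate, months):
    """Compound growth: data size after `months` at a fixed monthly growth rate."""
    return current_gb * (1 + monthly_growth_rate) ** months


def months_until_full(current_gb, monthly_growth_rate, capacity_gb):
    """Count whole months until the projected size exceeds the provisioned capacity."""
    if monthly_growth_rate <= 0:
        raise ValueError("growth rate must be positive for this projection")
    months = 0
    size = current_gb
    while size <= capacity_gb:
        size *= 1 + monthly_growth_rate
        months += 1
    return months


# Example: 100 GB today, growing 10% per month, with 1 TB provisioned.
print(round(projected_storage_gb(100, 0.10, 12), 1))  # size after one year, in GB
print(months_until_full(100, 0.10, 1000))             # months of headroom left
```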

Data Consistency and Integrity

Ensuring data consistency and integrity is paramount for reliable applications. The chosen database must support mechanisms to maintain data accuracy and reliability. This includes ACID properties (Atomicity, Consistency, Isolation, Durability) for transactions in relational databases or other equivalent mechanisms in NoSQL databases. Data validation rules and constraints help maintain integrity.
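Atomicity and constraint-based integrity can be seen in a small, self-contained example. The sketch below uses Python's built-in `sqlite3` module as a stand-in for a cloud relational database; the `accounts` table and the `transfer` helper are hypothetical names chosen for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# The CHECK constraint is an integrity rule: balances may never go negative.
conn.execute(
    "CREATE TABLE accounts ("
    "id TEXT PRIMARY KEY, "
    "balance INTEGER NOT NULL CHECK (balance >= 0))"
)
conn.executemany("INSERT INTO accounts VALUES (?, ?)", [("alice", 100), ("bob", 50)])
conn.commit()


def transfer(conn, src, dst, amount):
    """Move funds atomically. Returns True on commit, False on rollback."""
    try:
        with conn:  # commits if the block succeeds, rolls back if it raises
            conn.execute(
                "UPDATE accounts SET balance = balance + ? WHERE id = ?", (amount, dst)
            )
            conn.execute(
                "UPDATE accounts SET balance = balance - ? WHERE id = ?", (amount, src)
            )
        return True
    except sqlite3.IntegrityError:
        return False


def balances(conn):
    return dict(conn.execute("SELECT id, balance FROM accounts ORDER BY id"))


print(transfer(conn, "alice", "bob", 30), balances(conn))
# The next transfer would overdraw alice, so the credit to bob is rolled back too.
print(transfer(conn, "alice", "bob", 500), balances(conn))
```

The second call fails on the debit after the credit has already been applied, and the rollback undoes both statements, which is the all-or-nothing behavior that atomicity describes.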

Data Access Patterns

Different applications have unique data access patterns. Knowing how data will be accessed—read-heavy, write-heavy, or a mix—helps select a database optimized for these patterns. For instance, a real-time analytics platform might require high-speed read operations, while a transactional system will need fast write operations. Understanding these patterns is crucial to performance optimization. A banking application, for example, needs extremely reliable transaction consistency, demanding a database that prioritizes atomicity and durability.

Data Backup and Recovery Mechanisms

Robust backup and recovery strategies are essential for disaster recovery and data protection. The chosen database should support efficient backup mechanisms and recovery procedures. Regular backups and replication strategies are critical to prevent data loss. The frequency and method of backups should be carefully considered, especially for applications with critical data. For example, financial institutions require extremely reliable data backup and recovery solutions to ensure the continuity of business operations in the face of potential disasters.
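A backup is only useful if it can be restored, so it helps to rehearse both directions. The sketch below uses the online backup API in Python's built-in `sqlite3` module as a local stand-in; managed cloud databases expose their own snapshot and point-in-time recovery features, which this example does not model. The `orders` table and helper names are hypothetical.

```python
import sqlite3


def backup_database(source, target):
    """Copy the whole `source` database into `target` using SQLite's backup API."""
    source.backup(target)


def row_count(conn, table):
    # The table name is interpolated, so only pass trusted identifiers here.
    return conn.execute(f"SELECT COUNT(*) FROM {table}").fetchone()[0]


primary = sqlite3.connect(":memory:")
primary.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, total REAL)")
primary.executemany("INSERT INTO orders (total) VALUES (?)", [(9.99,), (24.50,), (5.00,)])
primary.commit()

# Take a backup copy of the primary.
backup_copy = sqlite3.connect(":memory:")
backup_database(primary, backup_copy)

# Simulate data loss on the primary, then restore it from the backup copy.
primary.execute("DELETE FROM orders")
primary.commit()
rows_after_loss = row_count(primary, "orders")
backup_database(backup_copy, primary)
rows_after_restore = row_count(primary, "orders")

print(rows_after_loss, rows_after_restore)
```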

Scalability and Performance Considerations

Choosing the right database for your cloud architecture hinges significantly on its scalability and performance capabilities. Understanding how a database scales under load and responds to varying data volumes is critical for ensuring the reliability and responsiveness of your applications. Effective performance monitoring and robust scaling strategies are essential for a successful cloud database implementation.

Cloud databases offer various scaling strategies to accommodate fluctuating demands. Efficient scaling techniques are vital to maintaining optimal performance and avoiding bottlenecks, particularly in environments with unpredictable traffic patterns. Measuring and monitoring database performance in the cloud provides crucial insights into resource utilization and potential issues. These insights allow proactive adjustments and prevent performance degradation under pressure.

Different Scaling Strategies for Cloud Databases

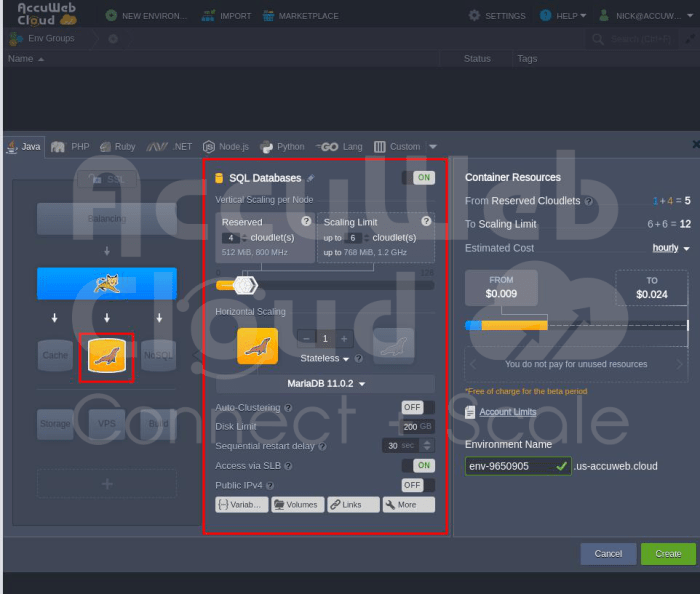

Cloud databases provide various scaling options to adapt to fluctuating workloads. These options include vertical scaling (increasing resources on a single instance) and horizontal scaling (adding more instances). Vertical scaling is often simpler but has limitations in terms of capacity, while horizontal scaling offers more flexibility and scalability. Auto-scaling capabilities in cloud providers allow databases to automatically adjust resources based on real-time demand, optimizing resource utilization.

- Vertical Scaling: This strategy involves increasing the resources of a single database instance, such as RAM, CPU, or storage. It’s generally simpler to implement but has limitations: capacity is capped by the largest instance size available, larger instances can be costly, and resizing may require a restart. It also adds no redundancy, since there is still a single instance. Suitable for small to medium workloads where predictable growth is anticipated.

- Horizontal Scaling: This method involves distributing the workload across multiple database instances. It’s more complex to implement but provides significant scalability and redundancy. It’s suitable for high-volume applications with fluctuating demands and requirements for high availability. This strategy often involves load balancing across instances.

- Auto-Scaling: Cloud providers offer automated scaling solutions. These systems dynamically adjust resources based on monitored metrics, such as CPU usage, query rate, and data volume. Auto-scaling ensures that the database infrastructure adapts to the fluctuating demands of the application.
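The auto-scaling behavior described above can be approximated with a target-tracking rule: keep average utilization near a target by resizing the replica count in proportion to load. This is a minimal sketch of one such rule, not any provider's actual algorithm; the function name, the 60% target, and the replica limits are assumptions.

```python
import math


def desired_replicas(current, cpu_percent, target_percent=60,
                     min_replicas=1, max_replicas=10):
    """Scale the replica count in proportion to observed CPU versus the target.

    `cpu_percent` is the average CPU utilization across current replicas (0-100+).
    """
    wanted = math.ceil(current * cpu_percent / target_percent)
    # Clamp to the configured bounds so a spike cannot scale without limit.
    return max(min_replicas, min(max_replicas, wanted))


print(desired_replicas(current=4, cpu_percent=90))  # overloaded: scale out
print(desired_replicas(current=4, cpu_percent=30))  # underused: scale in
```

Real auto-scalers typically add cooldown periods and average metrics over a window so that short spikes do not cause repeated resizing.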

Measuring and Monitoring Database Performance in the Cloud

Monitoring database performance in the cloud is crucial for identifying bottlenecks and optimizing resource allocation. Tools and dashboards provided by cloud providers offer comprehensive performance metrics, including query response times, connection rates, and resource utilization. Careful analysis of these metrics allows for timely identification and resolution of potential issues.

- Monitoring Tools: Cloud providers offer built-in monitoring tools for database performance. These tools provide detailed metrics on query execution times, resource utilization, and other performance indicators. Effective use of these tools allows for proactive identification of potential issues.

- Performance Metrics: Key performance indicators (KPIs) for database performance include query response time, transaction throughput, resource utilization (CPU, memory, network), and connection rates. Monitoring these metrics allows for real-time analysis and detection of performance issues.

- Alerting Mechanisms: Alerting systems are essential for notifying administrators of performance degradation. These systems trigger alerts based on predefined thresholds for various metrics, ensuring timely intervention and preventing service disruptions.
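Threshold-based alerting on the metrics listed above can be sketched in a few lines. The example computes a nearest-rank percentile over latency samples and compares a metrics snapshot against limits; the metric names and threshold values are hypothetical and would normally come from the provider's monitoring service.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list of numbers."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


# Hypothetical limits; tune them to the application's service-level objectives.
THRESHOLDS = {"p95_latency_ms": 200, "cpu_percent": 80, "connections": 450}


def check_alerts(metrics, thresholds=THRESHOLDS):
    """Return the names of metrics that exceed their thresholds, sorted."""
    return sorted(
        name for name, limit in thresholds.items() if metrics.get(name, 0) > limit
    )


latencies_ms = [12, 15, 18, 20, 22, 25, 30, 35, 40, 480]
snapshot = {
    "p95_latency_ms": percentile(latencies_ms, 95),
    "cpu_percent": 65,
    "connections": 500,
}
print(check_alerts(snapshot))
```

Using a high percentile rather than the mean keeps a single slow query visible: here the mean latency looks acceptable while the 95th percentile does not.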

Handling High-Volume Data Transactions in a Cloud Database

High-volume data transactions can significantly impact database performance. Techniques for handling such transactions include database sharding, caching, and data partitioning. Sharding distributes data across multiple instances, caching reduces the load on the database by storing frequently accessed data, and partitioning divides data into smaller units to optimize queries.

- Sharding: Sharding is a technique for distributing large datasets across multiple database instances. It allows for handling high-volume transactions by distributing the load. Sharding enhances scalability and performance by reducing the workload on individual instances.

- Caching: Caching involves storing frequently accessed data in a faster memory store. This reduces the database load, enabling faster query response times. Caching can significantly improve performance by reducing the frequency of database access.

- Data Partitioning: Partitioning divides data into smaller, manageable units. This strategy allows for efficient data retrieval and reduced query time. Data partitioning can improve performance, particularly for large datasets.
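Two of the techniques above, sharding and caching, can be illustrated together. The sketch below routes keys to shards with a stable hash and places a small cache-aside LRU layer in front of the lookups. The in-memory dictionaries stand in for separate database instances, and all names (`shard_for`, `CacheAside`, `SHARDS`) are hypothetical.

```python
import hashlib
from collections import OrderedDict


def shard_for(key, shard_count):
    """Map a key to a shard index using a stable hash (not Python's salted hash())."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % shard_count


class CacheAside:
    """Tiny LRU cache that falls back to a slower loader on a miss."""

    def __init__(self, loader, capacity=128):
        self.loader = loader
        self.capacity = capacity
        self.entries = OrderedDict()
        self.misses = 0

    def get(self, key):
        if key in self.entries:
            self.entries.move_to_end(key)  # mark as most recently used
            return self.entries[key]
        self.misses += 1
        value = self.loader(key)
        self.entries[key] = value
        if len(self.entries) > self.capacity:
            self.entries.popitem(last=False)  # evict the least recently used entry
        return value


SHARDS = [dict() for _ in range(4)]  # stand-ins for four database instances


def put(key, value):
    SHARDS[shard_for(key, len(SHARDS))][key] = value


def load(key):
    return SHARDS[shard_for(key, len(SHARDS))].get(key)


cache = CacheAside(load, capacity=2)
put("user:1", {"name": "Ada"})
first = cache.get("user:1")   # miss: read from the owning shard
second = cache.get("user:1")  # hit: served from the cache
print(first, second, cache.misses)
```

Simple modulo routing remaps most keys when the shard count changes, which is why production systems often use consistent hashing or a lookup directory instead. A real cache also needs an invalidation or expiry policy so that writes are not masked by stale entries.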

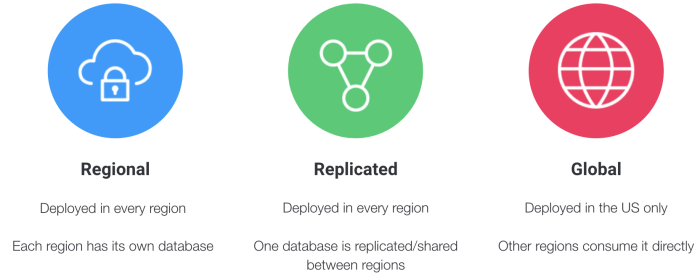

Impact of Data Distribution on Database Performance

Data distribution significantly influences database performance. Efficient data distribution strategies minimize latency and improve query performance. Strategies for data distribution often include geographical considerations, balancing data loads, and optimizing query patterns.

- Data Distribution Strategies: Careful consideration of data distribution strategies, including geographical distribution and data balancing, can optimize database performance. Strategies for data distribution should address query patterns and data access patterns.

- Latency Minimization: Data distribution strategies should aim to minimize latency for data access. Geographic proximity and network infrastructure can influence query response times.

- Query Optimization: Query optimization is crucial for leveraging data distribution effectively. Efficient queries reduce the time needed to retrieve data, improving overall database performance.
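Latency-aware routing is one simple way to act on the distribution strategies above: send each request to the healthy replica region with the lowest measured latency. The region names and latency figures below are invented for the example, and `pick_region` is a hypothetical helper.

```python
def pick_region(latencies_ms, healthy):
    """Return the healthy region with the lowest measured round-trip latency."""
    candidates = {region: ms for region, ms in latencies_ms.items() if region in healthy}
    if not candidates:
        raise LookupError("no healthy region available")
    return min(candidates, key=candidates.get)


# Hypothetical measurements taken from a client located in Europe.
measured = {"eu-west": 18, "us-east": 95, "ap-south": 160}
print(pick_region(measured, healthy={"eu-west", "us-east", "ap-south"}))
print(pick_region(measured, healthy={"us-east", "ap-south"}))  # eu-west is down
```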

Scalability Options for Different Database Types in the Cloud

The scalability options for various database types in the cloud differ based on their architecture and functionality. Choosing the right database type often depends on the specific needs of the application.

| Database Type | Vertical Scaling | Horizontal Scaling | Auto-Scaling |
|---|---|---|---|
| Relational Databases (e.g., MySQL, PostgreSQL) | Limited | Supported (with sharding) | Supported |
| NoSQL Databases (e.g., MongoDB, Cassandra) | Limited | Highly Scalable | Supported |
| Graph Databases (e.g., Neo4j) | Limited | Supported (with sharding) | Supported |

Security and Compliance

Choosing the right database for your cloud architecture involves careful consideration of security and compliance aspects. A secure database is crucial for protecting sensitive data and maintaining the trust of users and stakeholders. Failure to address security risks can lead to significant financial losses, reputational damage, and legal repercussions. This section delves into the critical security implications and compliance requirements inherent in selecting a cloud database.

Cloud databases offer potential security advantages but also present unique challenges. Understanding these nuances is vital to mitigating risks and ensuring a secure and compliant solution. Proper implementation of security measures, from encryption to access control, is essential to protect data from unauthorized access and maintain adherence to regulatory mandates.

Security Implications of Choosing a Specific Cloud Database

Various cloud databases have differing security features and architectures. Some offer built-in encryption and access controls, while others require more extensive configuration. The chosen database’s security posture directly impacts the overall security of the application. For instance, a database lacking robust encryption mechanisms exposes sensitive data to potential breaches if the database is compromised. This is especially crucial for applications handling personally identifiable information (PII) or financial data.

Therefore, evaluating the security features of each database is critical before deployment.

Compliance Requirements for Cloud Databases

Many industries and jurisdictions mandate specific data security and privacy standards. Compliance with these regulations is mandatory for organizations operating within those domains. Examples include HIPAA for protected health information in the United States, GDPR for personal data of individuals in the European Union, and PCI DSS for organizations that handle payment card data. Failure to comply with these regulations can result in substantial fines, legal action, and damage to reputation. Carefully assessing the compliance capabilities of the selected cloud database and integrating necessary controls is essential.

Security Best Practices for Cloud Databases

Implementing robust security practices is vital for securing data stored in cloud databases. These practices often include multi-factor authentication (MFA), regular security audits, and vulnerability scanning. Properly configuring access controls and implementing strong passwords are also critical security best practices. Furthermore, regularly updating the database software and patching known vulnerabilities is essential. These practices reduce the likelihood of unauthorized access and data breaches.

Methods for Securing Data Access in a Cloud Database

Implementing strict access control measures is essential for securing data within a cloud database. Role-based access control (RBAC) and granular permissions help to limit access to only authorized users and applications. Using strong passwords and enforcing password policies, along with multi-factor authentication, enhances the security posture. Data masking techniques can also be employed to protect sensitive data during development and testing.

The implementation of these techniques strengthens the security posture against potential breaches.
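Role-based access control and data masking can be sketched without any particular database. In the example below, permissions attach to roles, users hold roles, and anything not explicitly granted is denied. The role names, users, and the masking format are assumptions for illustration; real deployments would use the database's or cloud provider's own access management.

```python
# Each role grants a set of (resource, action) pairs.
ROLE_PERMISSIONS = {
    "analyst": {("orders", "read")},
    "app_service": {("orders", "read"), ("orders", "write")},
    "dba": {("orders", "read"), ("orders", "write"), ("orders", "admin")},
}

# Hypothetical principals and the roles assigned to them.
USER_ROLES = {"dana": {"analyst"}, "checkout-api": {"app_service"}}


def is_allowed(user, resource, action):
    """Deny by default; allow only if one of the user's roles grants the action."""
    return any(
        (resource, action) in ROLE_PERMISSIONS.get(role, set())
        for role in USER_ROLES.get(user, set())
    )


def mask_email(email):
    """Hide most of the local part so test data does not expose real addresses."""
    local, _, domain = email.partition("@")
    return local[:1] + "***@" + domain


print(is_allowed("dana", "orders", "read"))    # analysts may read
print(is_allowed("dana", "orders", "write"))   # but not write
print(mask_email("dana@example.com"))
```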

The Role of Encryption and Access Control in a Cloud Database

Encryption is a cornerstone of data security in cloud databases. Encrypting data at rest and in transit safeguards it from unauthorized access during storage and transmission. Robust encryption algorithms and key management practices are crucial to maintain data confidentiality. Access control mechanisms, such as RBAC, dictate who can access specific data or perform specific actions on the database.

The combination of encryption and access control provides a layered approach to data security, ensuring data protection against unauthorized access and breaches.

Data Migration Strategies

Selecting the appropriate data migration strategy is crucial for a successful cloud database transition. A well-planned migration minimizes downtime, ensures data integrity, and avoids costly errors. Careful consideration of the source and target database systems, data volume, and desired migration timeline is paramount. Choosing the right approach directly impacts the overall success of the cloud deployment.

Effective data migration strategies are not just about moving data; they are about ensuring a smooth transition that preserves data integrity, minimizes downtime, and maintains business continuity. A thoughtful approach that encompasses the entire process, from initial assessment to post-migration validation, is vital. This involves a deep understanding of the source and target systems, the data itself, and the specific requirements of the application.

Data Migration Approaches

Various approaches exist for migrating data to a cloud database, each with its own strengths and weaknesses. The best approach depends on factors like the size of the data, the complexity of the schema, and the desired timeframe for completion. Common approaches include:

- Full Migration: This approach involves copying all data from the on-premises database to the cloud database in one operation. It’s suitable for smaller datasets or when a complete data refresh is required. However, it can be time-consuming and disruptive if not carefully planned.

- Incremental Migration: This method involves migrating data in batches or smaller portions over time. It’s useful for large datasets, allowing for continuous operation of the application while data is being transferred. Careful scheduling and monitoring are critical to minimize disruptions.

- Data Refresh Migration: This method involves transferring only the updated or changed data, saving significant time and resources compared to a full migration. It is best suited for applications where data updates are frequent. This method requires careful tracking of changes and efficient change data capture (CDC) mechanisms.

- Reverse Engineering Migration: This approach involves creating a new database in the cloud and loading data into it by reconstructing the data structure from the original database. It is appropriate for legacy systems or situations where the existing database structure is not easily portable.
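The incremental approach above can be sketched as a batched copy that checkpoints on the primary key. The example uses two in-memory SQLite databases as stand-ins for the on-premises source and the cloud target; the `customers` table and the `migrate_in_batches` helper are hypothetical, and the sketch assumes positive integer keys that do not change during the copy.

```python
import sqlite3

SCHEMA = "CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT NOT NULL)"


def migrate_in_batches(source, target, batch_size=1000):
    """Copy `customers` rows in primary-key order, committing one batch at a time."""
    last_id = 0  # checkpoint: highest key copied so far
    copied = 0
    while True:
        rows = source.execute(
            "SELECT id, email FROM customers WHERE id > ? ORDER BY id LIMIT ?",
            (last_id, batch_size),
        ).fetchall()
        if not rows:
            return copied
        with target:  # each batch is its own transaction
            # INSERT OR REPLACE keeps the copy idempotent if a batch is retried.
            target.executemany(
                "INSERT OR REPLACE INTO customers (id, email) VALUES (?, ?)", rows
            )
        last_id = rows[-1][0]
        copied += len(rows)


source = sqlite3.connect(":memory:")
source.execute(SCHEMA)
source.executemany(
    "INSERT INTO customers VALUES (?, ?)",
    [(i, f"user{i}@example.com") for i in range(1, 2501)],
)
source.commit()

target = sqlite3.connect(":memory:")
target.execute(SCHEMA)

copied = migrate_in_batches(source, target, batch_size=1000)
print(copied)
```

Persisting `last_id` between runs would let an interrupted migration resume from the last committed batch rather than starting over. Rows updated after they were copied still need a change data capture step, which this sketch does not cover.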

Data Migration Tools

Numerous tools facilitate the migration process. Choosing the right tool depends on the specific needs of the project, including the source and target databases, the volume of data, and the desired level of automation.

- Database Migration Tools (e.g., AWS Database Migration Service, Azure Database Migration Service): Cloud providers offer dedicated tools for migrating data between databases, providing automation and monitoring features. These tools handle complex tasks, often including schema transformations and data type conversions. They are typically robust and well-integrated with cloud environments, streamlining the process.

- ETL (Extract, Transform, Load) Tools (e.g., Informatica, Talend): ETL tools are powerful for data migration, offering flexibility for complex data transformations and cleansing. They can handle a wide range of data formats and are valuable for migrating data between diverse systems. However, they often require more configuration and technical expertise.

- Scripting Languages (e.g., Python, SQL): Scripting languages enable customized data migration processes, often offering more granular control over the migration process. They are suitable for small-scale migrations or scenarios requiring specific data manipulation logic.

Steps in a Successful Data Migration

A systematic approach ensures a smooth transition. A well-defined plan reduces risks and improves the efficiency of the migration process.

- Assessment and Planning: Thoroughly analyze the source and target databases, identifying data volumes, schemas, and dependencies. Establish clear migration goals, timelines, and budget. This phase includes understanding the data, establishing a detailed plan, and estimating the resources required.

- Data Validation and Testing: Validate the integrity of the data before migration and during the process. Develop test cases to ensure data consistency and accuracy across the transition. Thorough testing in a non-production environment is crucial for minimizing errors in the production environment.

- Migration Execution: Implement the chosen migration strategy, monitoring the process closely. Implement appropriate controls and error handling mechanisms during the transfer. This step involves the actual data transfer, continuously monitoring progress, and addressing any issues promptly.

- Post-Migration Validation: Verify that the data in the cloud database is complete, accurate, and consistent with the source database. This involves performing comprehensive checks and reconciliations. Regular monitoring after migration ensures ongoing data integrity.
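Post-migration validation often starts with two cheap checks: matching row counts and matching content checksums. The sketch below fingerprints a table by hashing its rows in key order, again using in-memory SQLite databases as stand-ins. The helper names are hypothetical, and hashing `repr(row)` assumes both sides return the same Python types for each column, which may not hold across different database engines.

```python
import hashlib
import sqlite3


def table_fingerprint(conn, table, key_column):
    """Return (row_count, SHA-256 hex digest) over all rows read in key order."""
    digest = hashlib.sha256()
    count = 0
    # Identifiers are interpolated, so only pass trusted table and column names.
    for row in conn.execute(f"SELECT * FROM {table} ORDER BY {key_column}"):
        digest.update(repr(row).encode("utf-8"))
        count += 1
    return count, digest.hexdigest()


def tables_match(source, target, table, key_column):
    return table_fingerprint(source, table, key_column) == table_fingerprint(
        target, table, key_column
    )


def make_db(rows):
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT)")
    conn.executemany("INSERT INTO customers VALUES (?, ?)", rows)
    conn.commit()
    return conn


rows = [(1, "a@example.com"), (2, "b@example.com")]
source_db = make_db(rows)
target_db = make_db(rows)
matches_before = tables_match(source_db, target_db, "customers", "id")

# Introduce a discrepancy to show that the check detects it.
target_db.execute("UPDATE customers SET email = 'changed@example.com' WHERE id = 2")
matches_after = tables_match(source_db, target_db, "customers", "id")
print(matches_before, matches_after)
```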

Challenges in Data Migration

Data migration is not without its complexities. Careful planning and mitigation strategies are crucial to a successful migration.

- Data Volume and Complexity: Large datasets and complex schemas can significantly impact the migration time and require significant resources. Optimizing the migration process and using appropriate tools is critical to minimize the time and effort involved.

- Data Integrity Issues: Inconsistencies, errors, and missing data in the source database can lead to problems in the cloud database. Robust data validation and cleansing procedures are necessary to mitigate these risks.

- Downtime and Application Impact: The migration process can cause application downtime. Implementing strategies to minimize downtime and maintain business continuity during the transition is critical.

- Security Concerns: Ensuring the security of sensitive data during the migration process is paramount. Employing appropriate security measures and adhering to industry standards is essential.

Mitigation Strategies

Several strategies can mitigate the risks associated with data migration. Planning and thorough testing can significantly reduce the potential for errors.

- Data Validation and Cleansing: Implementing rigorous validation and cleansing procedures before and during the migration process ensures data quality and reduces the likelihood of errors.

- Testing and Prototyping: Performing thorough testing and prototyping in a non-production environment helps identify potential issues and refine the migration strategy. This approach ensures a smooth and accurate transition to the cloud.

- Backup and Recovery Planning: Establishing robust backup and recovery procedures is critical to minimize the impact of potential data loss or system failures during the migration. A well-defined backup strategy allows for rapid recovery if necessary.

- Communication and Collaboration: Open communication and collaboration between stakeholders throughout the migration process ensure everyone is aligned on goals, timelines, and potential roadblocks.

Integration with Cloud Services

Selecting a database for your cloud architecture necessitates careful consideration of its integration capabilities with other cloud services. Effective integration streamlines workflows, enhances data management, and optimizes overall application performance. This section explores the various aspects of database integration within a cloud environment, encompassing methods, tools, potential compatibility issues, and monitoring strategies.

Integration Methods

Proper integration hinges on the chosen method. Cloud providers offer several approaches, each with its own advantages and limitations. API-based integrations exchange data through Application Programming Interfaces, while service-to-service integrations connect cloud services directly, without an intermediate API layer. Direct integration can offer better performance but may be more complex to implement.

Available Integration Tools

Various tools facilitate the seamless integration of cloud databases with other cloud services. These tools typically include APIs, SDKs (Software Development Kits), and management consoles. APIs allow developers to programmatically interact with the database, while SDKs provide pre-built functions and libraries for specific programming languages. Management consoles offer a user-friendly interface for managing database connections and configurations.

Potential Compatibility Issues

Compatibility concerns can arise when integrating a database with other cloud services. Differences in data formats, communication protocols, or service versions can lead to compatibility issues. Careful consideration of the database’s API specifications and the compatibility requirements of the target services is crucial to mitigate such risks. For instance, a database might not support the specific data types required by a particular analytics service, necessitating data transformation.
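As a rough sketch of the data transformation mentioned above, the following converts column values that JSON cannot represent into portable strings before a row is handed to another service. The function names and the choice of JSON as the exchange format are assumptions made for illustration only.

```python
import json
from datetime import date, datetime
from decimal import Decimal


def to_portable(value):
    """Convert values JSON cannot represent natively into portable equivalents."""
    if isinstance(value, (datetime, date)):
        return value.isoformat()
    if isinstance(value, Decimal):
        return str(value)  # keep exact precision instead of casting to float
    if isinstance(value, bytes):
        return value.hex()
    return value


def row_to_json(row):
    """Serialize one database row (a dict of column -> value) to a JSON string."""
    return json.dumps({k: to_portable(v) for k, v in row.items()}, sort_keys=True)
```

Keeping the conversion in one place makes it easier to adjust when the consuming service changes which types it accepts.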

Monitoring the Integration Process

Effective monitoring is essential for ensuring the smooth operation of the database integration. Monitoring tools allow tracking of key metrics, such as query performance, connection latency, and data transfer rates. Real-time monitoring alerts can notify administrators of potential issues, such as high latency or errors in data transfer. Log analysis tools can provide insights into the interactions between the database and other cloud services, helping pinpoint and resolve problems effectively.

Regular performance testing and load testing are critical to maintain optimal performance under various conditions.
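A latency check of the kind described above might look like the following sketch. It times a query repeatedly and raises an alert when the 95th percentile exceeds a threshold. SQLite stands in for the cloud database here, and the function names and threshold are illustrative assumptions, not part of any provider's tooling.

```python
import sqlite3
import statistics
import time


def measure_latency_ms(conn, query, runs=20):
    """Run the query several times and return each duration in milliseconds."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        conn.execute(query).fetchall()
        samples.append((time.perf_counter() - start) * 1000)
    return samples


def latency_alert(samples, threshold_ms):
    """Return an alert message if the 95th percentile exceeds the threshold, else None."""
    # quantiles(..., n=20) yields 19 cut points; the last one is the 95th percentile.
    p95 = statistics.quantiles(samples, n=20)[-1]
    if p95 > threshold_ms:
        return f"p95 latency {p95:.1f} ms exceeds {threshold_ms} ms"
    return None
```

In practice the samples would more likely come from a managed monitoring service than from ad hoc timing, but the same percentile-and-threshold logic applies.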

Example: Cloud Storage Integration

Consider a scenario where a cloud database is used to store customer data. Integration with cloud storage services, such as Amazon S3, allows for efficient storage of large datasets. This integration is typically API-based, allowing seamless transfer of data between the database and storage. Tools like AWS SDKs enable developers to easily interact with both services. Compatibility issues might arise if the database doesn’t support the file formats used by the storage service.

Monitoring involves tracking storage space usage and the time it takes to upload and download data. Tools like AWS CloudWatch can help monitor the health and performance of the integration process.

Cost Optimization Strategies

Optimizing database costs in the cloud is crucial for long-term financial sustainability. Effective strategies leverage cloud provider features, proper database sizing, and smart pricing models. Careful analysis and proactive optimization tools are essential to ensure cost-effectiveness throughout the database lifecycle. Cloud database services offer a spectrum of pricing models, allowing businesses to select options that best align with their usage patterns.

Understanding these models and tailoring the database configuration to minimize costs while maintaining performance is paramount. This section explores various approaches to optimize database costs in the cloud.

Database Sizing Considerations

Proper database sizing is paramount for cost optimization. Over-provisioning leads to unnecessary expenditure, while under-provisioning can compromise performance, potentially impacting application availability and user experience. An appropriate database instance size must balance cost with the expected workload demands. Analyzing historical data and projected future usage patterns aids in selecting the optimal size.
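One way to turn historical usage into a sizing decision is sketched below: take a high percentile of observed CPU demand, add projected growth and headroom, and pick the smallest instance that covers the result. The instance catalog, the headroom and growth defaults, and the function names are all hypothetical, so real provider sizes and your own measurements should be substituted.

```python
import math

# Hypothetical catalog: (name, vCPUs), ordered from smallest to largest.
INSTANCE_SIZES = [("small", 2), ("medium", 4), ("large", 8), ("xlarge", 16)]


def percentile(values, pct):
    """Nearest-rank percentile for an integer pct between 1 and 100."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def recommend_size(cpu_samples, headroom=0.3, growth=0.2):
    """Suggest the smallest instance covering p95 demand plus growth and headroom.

    cpu_samples holds observed vCPUs in use. Returns None when no catalog
    entry is large enough, which suggests scaling out instead of up.
    """
    required = percentile(cpu_samples, 95) * (1 + growth) / (1 - headroom)
    for name, vcpus in INSTANCE_SIZES:
        if vcpus >= required:
            return name
    return None
```

Sizing on the 95th percentile rather than the absolute peak avoids paying for a rare spike, at the cost of brief saturation during that spike.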

Cloud Provider Cost-Saving Features

Cloud providers offer various cost-saving features designed to minimize database expenses. These features include:

- Reserved Instances: Reserved instances provide significant discounts for predictable workloads. Companies with consistent, known usage patterns benefit substantially from locking in these reduced rates.

- Spot Instances: Spot instances leverage unused cloud capacity at significantly reduced prices. However, these instances may be interrupted at short notice, so they suit workloads that can tolerate temporary interruptions or can be scheduled during periods of lower demand, such as batch analytics or test environments, rather than a primary production database.

- Free Tier/Trial Periods: Many providers offer free tiers or trial periods that allow users to test database services and features without incurring immediate costs. This is invaluable for evaluation purposes.

- Pay-as-you-go Pricing: A flexible pricing model, allowing users to pay only for the resources they consume. This approach is best for fluctuating workloads, where demand may change frequently.

- Database Optimization Tools: Cloud providers offer tools and resources for optimizing database performance, which in turn can contribute to cost savings by reducing resource consumption.

Pricing Models for Cost Control

Cloud providers offer various pricing models. Choosing the right model directly impacts costs.

- Pay-as-you-go: This model charges based on actual resource consumption. It offers flexibility for fluctuating workloads but typically costs more than a committed plan when usage is steady and sustained.

- Reserved Instances: This model provides significant discounts for predictable workloads but requires a commitment to the instance type and duration.

- Spot Instances: These instances offer substantial cost savings by utilizing unused capacity, but availability is not guaranteed, and interruption is a possibility.
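The trade-off between pay-as-you-go and reserved pricing comes down to a break-even calculation, sketched below. The prices used in the usage note are made-up figures for illustration, and the function names are hypothetical.

```python
def break_even_hours(on_demand_hourly, reserved_monthly):
    """Hours of monthly usage at which both models cost the same."""
    return reserved_monthly / on_demand_hourly


def cheaper_model(on_demand_hourly, reserved_monthly, expected_hours):
    """Return the cheaper pricing model for the expected monthly usage."""
    on_demand_cost = on_demand_hourly * expected_hours
    if on_demand_cost <= reserved_monthly:
        return "pay-as-you-go"
    return "reserved"
```

With an assumed on-demand rate of 0.25 per hour and a reserved fee of 100 per month, the break-even point is 400 hours. A database running around the clock (roughly 730 hours a month) would favor the reserved plan, while one used 200 hours a month would not.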

Analyzing and Optimizing Database Costs

Analyzing database costs is essential for identifying areas of improvement. Regular monitoring and analysis allow proactive adjustments to pricing models and resource allocation.

- Cost Monitoring Tools: Cloud providers offer built-in tools for monitoring database costs, enabling detailed tracking of resource consumption and expenditure. These tools provide insights into areas where costs can be reduced.

- Performance Tuning: Optimizing database performance directly impacts resource consumption and, subsequently, costs. Tools that assist in query optimization and indexing strategies can significantly reduce resource use.

- Usage Patterns Analysis: Analyzing database usage patterns reveals trends and anomalies that indicate opportunities for cost optimization. Identifying periods of high and low usage can inform decisions about instance sizing and pricing models.

Choosing the Right Database Type

Selecting the appropriate database type is crucial for the success of a cloud application. The chosen database should seamlessly integrate with the application’s functionalities, scale efficiently, and provide adequate performance. Different database types offer varying strengths and weaknesses, making careful consideration essential. A thorough understanding of these characteristics empowers informed decisions and ensures optimal application performance and scalability.

Relational Databases

Relational databases (RDBMS) organize data into tables with defined relationships. They utilize structured query language (SQL) for data manipulation and retrieval. These systems excel at managing structured data and enforcing data integrity through constraints and relationships.

- Advantages: Data integrity is a strong point, enabling reliable data management. SQL’s standardized nature facilitates efficient querying and data manipulation, making it a popular choice for applications requiring complex data relationships. A vast ecosystem of tools and expertise exists, making maintenance and support readily available.

- Disadvantages: Relational databases can be less flexible for unstructured or rapidly changing data. Scaling to accommodate massive datasets can present challenges, and the schema design can be rigid and require significant upfront planning.

- Examples: MySQL, PostgreSQL, Oracle Database, SQL Server.
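To illustrate how constraints and relationships enforce integrity, here is a small sketch using SQLite from the Python standard library. The schema and helper names are invented for the example. Other relational engines express the same constraints with similar SQL, though details such as enabling foreign keys are SQLite-specific.

```python
import sqlite3


def create_shop_schema(conn):
    """Create two related tables with NOT NULL, UNIQUE, CHECK and foreign key rules."""
    conn.execute("PRAGMA foreign_keys = ON")  # SQLite leaves FK enforcement off by default
    conn.executescript(
        """
        CREATE TABLE customers (
            id INTEGER PRIMARY KEY,
            email TEXT NOT NULL UNIQUE
        );
        CREATE TABLE orders (
            id INTEGER PRIMARY KEY,
            customer_id INTEGER NOT NULL REFERENCES customers(id),
            total_cents INTEGER NOT NULL CHECK (total_cents >= 0)
        );
        """
    )


def try_insert_order(conn, customer_id, total_cents):
    """Insert an order and return True, or return False if a constraint rejects it."""
    try:
        with conn:  # commits on success, rolls back on error
            conn.execute(
                "INSERT INTO orders (customer_id, total_cents) VALUES (?, ?)",
                (customer_id, total_cents),
            )
        return True
    except sqlite3.IntegrityError:
        return False
```

An order that points at a missing customer or carries a negative total is rejected by the database itself, so every application that writes to these tables gets the same guarantees.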

NoSQL Databases

NoSQL databases offer flexibility and scalability, accommodating unstructured and semi-structured data. They come in various types, each tailored for specific data models, such as document, key-value, column-family, and graph databases. NoSQL databases are often preferred for applications handling large volumes of data with varying structures.

- Advantages: Scalability is a significant advantage, allowing for handling massive datasets and high traffic loads. The flexible schema design allows for easier adaptation to evolving data models, a key benefit for rapidly changing applications. Faster read and write speeds are often possible compared to relational databases for specific use cases.

- Disadvantages: Data consistency and integrity might be less straightforward to manage compared to relational databases. Complex queries and relationships can sometimes be challenging to implement effectively. The lack of a standardized query language can make development and maintenance more complex.

- Examples: MongoDB, Cassandra, Couchbase, Redis.

Graph Databases

Graph databases store data as nodes and relationships. These structures are exceptionally suited for applications involving complex relationships, such as social networks, recommendation systems, and fraud detection.

- Advantages: Ideal for applications requiring efficient traversal of complex relationships. They provide excellent performance for queries involving relationships between entities, a key advantage for certain use cases. Graph databases excel in scenarios involving network analysis and complex data structures.

- Disadvantages: Not always the optimal choice for simple applications or those needing only basic data retrieval. The query language and database structure might be less familiar to developers with limited experience. Scalability to handle extremely large datasets may pose challenges.

- Examples: Neo4j, Amazon Neptune.
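The relationship traversal that graph databases optimize can be illustrated with a plain breadth-first search over an adjacency map. This sketch is not how any particular graph engine is implemented. It only shows the shape of a "friends within N hops" query, and the function name and sample data are hypothetical.

```python
from collections import deque


def within_hops(graph, start, max_hops):
    """Return {node: hop_count} for nodes reachable within max_hops, excluding start."""
    seen = {start: 0}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if seen[node] == max_hops:
            continue  # do not expand beyond the hop limit
        for neighbor in graph.get(node, ()):
            if neighbor not in seen:
                seen[neighbor] = seen[node] + 1
                queue.append(neighbor)
    del seen[start]
    return seen
```

Expressing the same question in SQL usually takes one self-join per hop or a recursive query, which is why relationship-heavy workloads are often a good fit for a graph model.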

Database Engine Comparison

Different database engines offer various performance characteristics. Factors such as query optimization, concurrency control, and transaction management significantly impact the efficiency of database operations.

| Database Engine | Characteristics |
|---|---|
| MySQL | Robust, widely used, supports various storage engines, relatively easy to use. |
| PostgreSQL | Powerful, open-source, supports complex queries and advanced features, strong ACID properties. |
| MongoDB | Flexible, document-oriented, schema-less, excellent for unstructured data. |
| Cassandra | Highly scalable, distributed, ideal for large datasets and high availability. |

Criteria for Selection

The choice of database type hinges on specific application requirements. Factors such as data volume, data structure, query patterns, scalability needs, and budget should be considered.

- Data Volume: High-volume data necessitates a scalable database solution.

- Data Structure: Structured data favors relational databases, while unstructured data benefits from NoSQL solutions.

- Query Patterns: Complex queries often require a relational database, whereas simple queries can use NoSQL databases effectively.

- Scalability Requirements: Highly scalable applications demand databases capable of horizontal scaling.

- Budget Constraints: Open-source databases often provide cost-effective solutions.
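The criteria above can be condensed into a rough first-pass heuristic, sketched below. The `Workload` fields and the rule order are assumptions made for illustration. A real decision would also weigh budget, team expertise, and measured performance.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    structured: bool              # data fits a fixed, tabular schema
    needs_acid: bool              # multi-row transactions must be atomic and consistent
    relationship_heavy: bool      # queries traverse many links between entities
    needs_horizontal_scale: bool  # volume or traffic calls for scaling out across nodes


def suggest_database_type(workload):
    """Return a starting-point suggestion: 'relational', 'graph', or 'nosql'."""
    if workload.needs_acid:
        return "relational"
    if workload.relationship_heavy:
        return "graph"
    if workload.structured and not workload.needs_horizontal_scale:
        return "relational"
    return "nosql"
```

Transactional requirements are checked first because they are usually the hardest to retrofit. The remaining rules mirror the data structure and scalability criteria in the list.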

Monitoring and Maintenance

Choosing the right database for your cloud architecture involves more than just selecting the type and service. Effective monitoring and maintenance are crucial for ensuring optimal performance, security, and cost-effectiveness. Properly implemented monitoring and maintenance strategies can proactively identify and resolve potential issues before they impact your application or data. Maintaining a healthy cloud database environment requires a proactive approach.

This includes establishing robust monitoring systems, understanding best practices for maintenance, and having clear procedures for resolving issues. Proactive management, coupled with the right tools, enables continuous optimization and minimizes downtime.

Importance of Monitoring Cloud Databases

Continuous monitoring of cloud databases is vital for identifying performance bottlenecks, security threats, and potential issues early. This allows for timely intervention and prevents escalation into significant problems. Real-time monitoring data empowers proactive adjustments to resource allocation and database configuration, leading to enhanced performance and reduced operational costs. Monitoring also facilitates adherence to service-level agreements (SLAs) and guarantees consistent service delivery.

Examples of Monitoring and Maintenance Tools

Various tools are available for monitoring and maintaining cloud databases. These tools offer varying functionalities, ranging from basic performance metrics to advanced issue resolution capabilities. Examples include CloudWatch for AWS, Azure Monitor for Azure, and Datadog, a platform that supports a variety of cloud providers. These tools enable real-time performance tracking, alerting on critical events, and facilitating efficient troubleshooting.

Furthermore, specialized database management systems (DBMS) often include their own monitoring and maintenance features.

Best Practices for Database Maintenance

Proactive maintenance strategies are critical for maintaining database health. Regular backups, including incremental backups, are essential for data recovery in case of failures. Furthermore, scheduled maintenance windows should be implemented for tasks such as applying patches, upgrading software, and conducting performance tuning. Consistent adherence to these best practices helps prevent unexpected downtime and data loss.
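A backup is only useful if it can be restored, so a backup routine is usually paired with a verification step. The sketch below shows that pairing with SQLite's online backup API from the Python standard library. Managed cloud databases provide their own snapshot mechanisms, and the function names here are hypothetical.

```python
import sqlite3


def backup_database(source, dest_path):
    """Copy the source database into a file at dest_path using the online backup API."""
    dest = sqlite3.connect(dest_path)
    try:
        source.backup(dest)
    finally:
        dest.close()


def verify_backup(dest_path, table):
    """Return (integrity_ok, row_count) for a table in the backup file.

    The table name is interpolated into the SQL text, so pass a trusted identifier.
    """
    conn = sqlite3.connect(dest_path)
    try:
        integrity_ok = conn.execute("PRAGMA integrity_check").fetchone()[0] == "ok"
        row_count = conn.execute(f"SELECT COUNT(*) FROM {table}").fetchone()[0]
        return integrity_ok, row_count
    finally:
        conn.close()
```

Comparing the verified row count against the live database after each scheduled backup catches silent failures before a restore is ever needed.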

Importance of Performance Tuning for Cloud Databases

Performance tuning is essential for cloud databases to ensure optimal efficiency. It involves adjusting database configurations, optimizing queries, and managing resource allocation. This optimization process aims to enhance query response times, reduce resource consumption, and improve overall application performance. Understanding query patterns and resource utilization helps in making informed tuning decisions.
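Query optimization usually starts with inspecting the execution plan before and after a change. The following sketch does this with SQLite's `EXPLAIN QUERY PLAN`. Other engines expose similar `EXPLAIN` commands with different output formats, and the helper name `query_plan` is hypothetical.

```python
import sqlite3


def query_plan(conn, sql, params=()):
    """Return SQLite's query plan for a statement as a single readable string."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    # Each row is (id, parent, notused, detail); the detail column describes the step.
    return " | ".join(row[3] for row in rows)


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total INTEGER)")
    lookup = "SELECT * FROM orders WHERE customer_id = ?"

    print("before:", query_plan(conn, lookup, (42,)))  # full table scan
    conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
    print("after: ", query_plan(conn, lookup, (42,)))  # search using the new index
```

A plan that changes from a table scan to an index search means less data read per query, which lowers both response time and resource consumption.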

Identifying and Resolving Issues in a Cloud Database

Troubleshooting database issues requires a systematic approach. First, identify the root cause by analyzing logs, monitoring metrics, and reviewing error messages. Once the cause is identified, appropriate corrective actions can be taken. This may involve adjusting query optimization, scaling resources, or implementing appropriate maintenance strategies. It’s important to document all steps taken to resolve the issue for future reference and prevent recurrence.

Effective issue resolution procedures are vital for maintaining service reliability and minimizing disruptions to applications.

Case Studies and Examples

Selecting the appropriate database for a cloud architecture requires careful consideration of various factors, including scalability, performance, security, and cost. Real-world case studies provide valuable insights into the successful implementation of different database solutions, highlighting best practices and lessons learned. These examples demonstrate how various database types can address specific application needs in a cloud environment.

Successful Database Implementations in Cloud Architectures

Several organizations have successfully deployed databases in their cloud architectures. One example is a retail company that migrated its transactional database to a cloud-based, highly available, and scalable database service. This migration improved transaction processing speed and reduced latency, leading to enhanced customer satisfaction. Another example is a social media platform that utilized a NoSQL database to handle the massive volume of user data and interactions. This approach enabled rapid scalability and efficient data retrieval, supporting a rapidly growing user base.

Challenges and Solutions Encountered

Implementing cloud databases is not without its challenges. One common challenge is data migration, which can be complex and time-consuming. Solutions often involve using cloud-native migration tools and techniques to minimize disruption and data loss. Another challenge is ensuring data security and compliance in the cloud. Solutions include implementing robust access controls, encryption, and adhering to industry-specific regulations.

Lessons Learned from Implementations

Critical lessons learned from successful cloud database implementations include the importance of meticulous planning, rigorous testing, and robust monitoring. Properly defined database requirements, coupled with careful selection of the right database type, are crucial for achieving desired performance and scalability. Thorough data migration strategies are vital for a smooth transition and minimizing potential disruptions.

Use Cases for Different Database Types in the Cloud

Different database types are suitable for various application needs. Relational databases, with their structured data model, are well-suited for transactional applications requiring ACID properties, such as online banking and order processing systems. NoSQL databases, with their flexible schemas, are ideal for applications with high volumes of unstructured or semi-structured data, such as social media platforms and content management systems.

Database Solutions for Specific Application Types

| Application Type | Suitable Database Type | Explanation |
|---|---|---|
| Online Banking | Relational Database (e.g., PostgreSQL, MySQL) | Data integrity and ACID properties are crucial for financial transactions. |
| Social Media Platform | NoSQL Database (e.g., MongoDB, Cassandra) | Handles high volumes of user data and interactions efficiently with a flexible schema. |
| E-commerce Platform | Relational Database (e.g., MySQL, SQL Server) | Supports complex relationships between products, customers, and orders, and ensures ACID properties. |
| Content Management System (CMS) | NoSQL Database (e.g., MongoDB, Redis) | Efficiently stores and retrieves large volumes of unstructured data, such as articles, images, and videos. |
| IoT Data Storage | NoSQL Database (e.g., Cassandra, Document DB) | Handles the massive influx of sensor data with a flexible schema and scalability. |

Conclusion

In conclusion, selecting the right database for your cloud architecture requires careful consideration of various factors, from application requirements to cloud provider services. This guide has provided a framework for evaluating different database types, cloud providers, and cost optimization strategies. By understanding the intricacies of database selection and implementation, you can ensure a smooth transition to the cloud and a scalable, performant application.

Top FAQs

What are some common database access patterns?

Common access patterns include read-heavy operations, write-heavy operations, and mixed read/write operations. The chosen database should efficiently handle the anticipated patterns for optimal performance.

How can I measure database performance in the cloud?

Performance can be measured using metrics like query response time, transaction throughput, and resource utilization. Cloud providers often offer monitoring tools to track these metrics.

What are the key security considerations for cloud databases?

Security considerations include data encryption, access controls, regular security audits, and adherence to relevant industry compliance standards. Properly securing your database is crucial to protecting sensitive information.

What are some common challenges during data migration?

Common challenges include data inconsistencies, compatibility issues with the target database, and downtime during the migration process. Careful planning and testing are vital to mitigating these challenges.