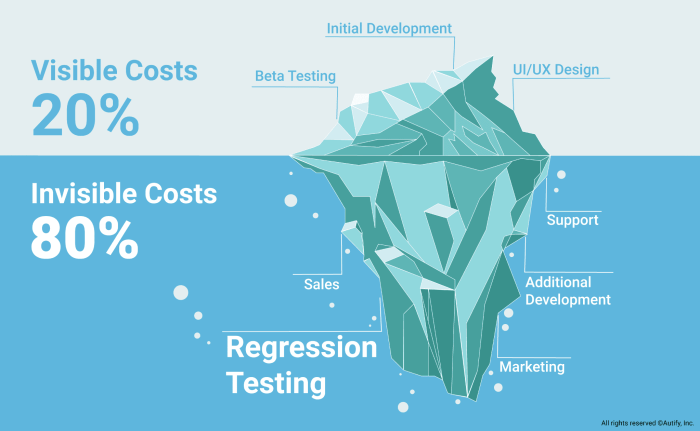

Forecasting cloud costs is a critical part of cloud resource management, essential for both financial planning and operational efficiency. Accurate forecasts depend on understanding provider pricing models, resource consumption, and optimization strategies. This guide covers the methods and tools needed to predict and manage cloud expenses, so that organizations can make informed decisions and maximize their return on investment in their target cloud environment.

This document provides a comprehensive overview of how to forecast costs for the target cloud environment. It examines the various facets of cloud cost forecasting, including identifying cost components, assessing resource consumption, estimating future needs, selecting the right pricing models, utilizing cost management tools, optimizing resource allocation, implementing cost-effective storage strategies, forecasting network costs, building a cost forecast model, and reviewing and refining the forecast.

Each section builds upon the previous one, offering a cohesive and practical approach to cloud cost management.

Understanding the Target Cloud Environment

The foundation of accurate cloud cost forecasting lies in a comprehensive understanding of the target cloud environment. This encompasses a deep dive into the major cloud providers, their pricing models, the services offered, and the factors that influence costs. A thorough analysis of these elements allows for informed decisions and proactive cost management strategies.

Major Cloud Providers and Their Pricing Models

The cloud computing landscape is dominated by a few key players, each with its own pricing structure and service offerings. These pricing models are complex and designed to cater to diverse needs and usage patterns.

- Amazon Web Services (AWS): AWS offers a vast array of services and a highly flexible pricing model. Pricing is primarily based on a pay-as-you-go model, with options for reserved instances, spot instances, and savings plans.

AWS pricing varies depending on the specific service, instance type, and geographic region. For example, EC2 instances (compute) have different prices based on the instance family (e.g., general purpose, compute optimized, memory optimized), the operating system, and the region where the instance is deployed. Storage costs (e.g., S3) are determined by storage class (e.g., Standard, Intelligent-Tiering, Glacier), data transfer volume, and the number of requests. Database services (e.g., RDS) factor in instance size, storage, and database engine type.

- Microsoft Azure: Azure’s pricing model is also based on a pay-as-you-go approach, with options for reserved instances and spot virtual machines. Azure offers a wide range of services, with pricing varying depending on the service, instance size, and geographic location.

Azure pricing for compute services (e.g., Virtual Machines) depends on the VM size, operating system, and region. Storage costs (e.g., Azure Blob Storage) are determined by storage tier (e.g., Hot, Cool, Archive), data transfer volume, and the number of transactions. Database services (e.g., Azure SQL Database) are priced based on the service tier, compute size (vCores), and storage.

- Google Cloud Platform (GCP): GCP utilizes a pay-as-you-go pricing model, with options for committed use discounts and sustained use discounts. GCP’s pricing is generally competitive and offers detailed pricing breakdowns.

GCP pricing for compute services (e.g., Compute Engine) depends on the machine type, operating system, and region. Storage costs (e.g., Cloud Storage) are determined by storage class (e.g., Standard, Nearline, Coldline), data transfer volume, and operations. Database services (e.g., Cloud SQL) are priced based on the instance size, storage, and database engine.

Common Cloud Services and Their Cost Drivers

Understanding the cost drivers for common cloud services is crucial for effective cost forecasting. These services have specific factors that influence their costs, necessitating careful monitoring and analysis.

- Compute: Compute services, such as virtual machines (VMs) and container services, are typically priced based on the instance size, operating system, and the duration of use. Other factors include the number of CPUs, the amount of memory, and the network bandwidth.

For example, a larger VM with more CPU cores and memory will generally cost more than a smaller VM. Long-running instances often benefit from reserved instance pricing or committed use discounts.

- Storage: Storage costs are driven by the storage class, the volume of data stored, and the number of data access requests. Different storage classes offer varying levels of performance and availability, impacting the price.

For instance, frequently accessed data stored in a “hot” storage tier will cost more per gigabyte than data stored in a “cold” or archival storage tier. Data transfer costs, particularly for egress (data leaving the cloud), also contribute significantly to storage expenses.

- Database: Database costs are influenced by the database engine, instance size, storage capacity, and the number of read/write operations. Managed database services often include features like automatic backups and high availability, which can affect the overall cost.

Choosing a larger database instance with more storage and higher performance will incur higher costs. Optimizing database queries and minimizing unnecessary data access can help control costs.

Impact of Geographic Regions on Cloud Costs

Cloud providers operate data centers in various geographic regions around the world. The location of these data centers can significantly impact the cost of cloud services.

- Pricing Variations: Pricing for the same cloud services can vary across different regions. This is often due to factors such as local infrastructure costs, energy prices, and market competition.

For example, compute instances in regions with lower energy costs might be cheaper than in regions with higher energy costs. Similarly, regions with high demand for cloud services might experience higher prices.

- Data Transfer Costs: Data transfer costs, particularly for data transferred between regions, can be substantial. This is especially true for egress traffic (data leaving the cloud provider’s network).

The cost of transferring data from one region to another is generally higher than transferring data within the same region. Selecting the appropriate region for data storage and processing is therefore crucial to minimize these costs.

- Compliance and Latency: The choice of region can also be influenced by compliance requirements and latency considerations. Some organizations are required to store data within specific geographic boundaries.

Choosing a region closer to end-users can reduce latency, improving application performance. Selecting a region that meets data residency requirements is essential for regulatory compliance.

Comparing Pricing Structures for Compute Instances

The following table illustrates a simplified comparison of compute instance pricing across three major cloud providers. This comparison highlights the differences in pricing models, instance types, and potential cost variations.

Note: Prices are illustrative and can vary based on specific instance configurations, operating systems, and region. Always consult the official cloud provider pricing documentation for the most up-to-date and accurate information.

| Cloud Provider | Instance Type (Example) | Pricing Model | Cost Driver Examples |
|---|---|---|---|
| AWS | EC2 t3.medium | Pay-as-you-go, Reserved Instances, Spot Instances, Savings Plans | Instance size, operating system, region, duration, data transfer |
| Azure | Virtual Machines (Standard_B2s) | Pay-as-you-go, Reserved Instances, Spot Virtual Machines | VM size, operating system, region, duration, data transfer |
| GCP | Compute Engine (e2-medium) | Pay-as-you-go, Committed Use Discounts, Sustained Use Discounts | Machine type, operating system, region, duration, data transfer |

Identifying Cost Components

Understanding the granular cost components within a cloud environment is crucial for effective cost forecasting. This involves dissecting the various services consumed and understanding how each contributes to the overall expenditure. Accurate identification allows for informed decisions regarding resource allocation, optimization, and ultimately, cost management. This section details the key cost categories and their influencing factors.

Cost Categories in a Cloud Environment

Cloud environments comprise several distinct cost categories, each representing a different type of resource consumption. Understanding these categories is the foundation for accurate cost forecasting.

- Compute: This category encompasses the cost of virtual machines (VMs), containers, and serverless functions. Compute costs are often the largest expense in a cloud environment, reflecting the resources dedicated to processing workloads.

- Storage: Storage costs cover the expenses associated with storing data, including object storage, block storage, and file storage. The cost varies based on the storage type, volume of data stored, and access frequency.

- Networking: Networking costs relate to the movement of data in and out of the cloud environment, as well as within the cloud itself. This includes data transfer charges, IP address fees, and the cost of using services like load balancers.

- Data Transfer: This is a specific subset of networking costs that focuses on the charges for transferring data into and out of the cloud provider’s network. Data transfer charges can vary significantly based on the origin and destination of the data.

- Database: Database costs involve the expenses related to managed database services, such as relational databases (e.g., MySQL, PostgreSQL) and NoSQL databases (e.g., MongoDB, Cassandra). Costs depend on the database size, performance requirements, and the specific service chosen.

- Monitoring and Logging: Cloud providers offer services for monitoring and logging resource usage and performance. These services come with associated costs based on the volume of data ingested, the retention period, and the complexity of the monitoring setup.

- Other Services: This category encompasses all other services offered by the cloud provider, such as machine learning services, content delivery networks (CDNs), and various platform-as-a-service (PaaS) offerings. Costs are dependent on the specific services used and their consumption patterns.

Factors Influencing Compute Costs

Compute costs are primarily determined by the resources allocated to processing workloads. Several factors significantly influence these costs, impacting the overall cloud expenditure.

- Instance Size: The size of the virtual machine instance (e.g., the number of vCPUs, the amount of RAM) directly impacts the cost. Larger instances, with more resources, are more expensive. For example, a virtual machine with 8 vCPUs and 32 GB of RAM will cost significantly more per hour than a machine with 2 vCPUs and 8 GB of RAM.

- Usage Duration: The length of time an instance is running is a primary cost driver. Cloud providers typically bill compute resources by the hour, minute, or second, so longer usage durations translate to higher costs. For example, running an instance for 720 hours (a full 30-day month) will be more expensive than running it for 10 hours.

- Operating System: The operating system installed on the virtual machine can influence the cost. Some cloud providers charge a premium for specific operating systems, such as Windows Server, due to licensing fees. Linux distributions are often less expensive.

- Region: The geographic region where the compute resources are located can affect the cost. Prices may vary across regions due to factors such as infrastructure costs and market demand. For example, compute instances in a region with high demand might be more expensive than those in a region with lower demand.

- Pricing Model: Cloud providers offer different pricing models, such as on-demand, reserved instances, and spot instances. On-demand instances are the most expensive but offer flexibility. Reserved instances offer significant discounts for committing to a specific instance type for a period. Spot instances are the cheapest but can be terminated if the provider needs the resources back. The choice of pricing model significantly impacts compute costs.
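As a rough illustration of how these factors combine, the sketch below multiplies instance count, running hours, and hourly rate, then applies a discount for the chosen pricing model. The `ComputeLineItem` type, the $0.04 hourly rate, and the 40% discount are placeholder assumptions, not any provider's published figures.

```python
from dataclasses import dataclass


@dataclass
class ComputeLineItem:
    """One group of identical instances (hypothetical structure)."""
    name: str
    hourly_rate: float     # on-demand USD per hour (placeholder value)
    hours: float           # hours each instance runs in the month
    count: int = 1         # number of identical instances
    discount: float = 0.0  # fractional discount, e.g. from a commitment


def monthly_compute_cost(item: ComputeLineItem) -> float:
    """Cost = instances x hours x hourly rate x (1 - discount)."""
    if not 0.0 <= item.discount < 1.0:
        raise ValueError("discount must be in [0, 1)")
    return item.count * item.hours * item.hourly_rate * (1.0 - item.discount)


items = [
    ComputeLineItem("web-on-demand", hourly_rate=0.04, hours=720, count=4),
    ComputeLineItem("web-reserved", hourly_rate=0.04, hours=720, count=4,
                    discount=0.40),
    ComputeLineItem("batch-short", hourly_rate=0.04, hours=10, count=4),
]

for item in items:
    print(f"{item.name}: ${monthly_compute_cost(item):,.2f}")
```

In a real forecast, the hourly rate would come from the provider's price list for the chosen instance size, operating system, and region.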

Calculating Storage Costs

Storage costs are calculated based on the volume of data stored, the storage type selected, and the access frequency. Understanding these factors is essential for accurately predicting storage expenses.

- Storage Type: Different storage types offer varying levels of performance, availability, and cost. For example:

- Object Storage (e.g., Amazon S3, Azure Blob Storage): Typically used for storing large amounts of unstructured data, offering high durability and availability at a lower cost. Cost is calculated per GB stored per month, with additional charges for data transfer and access requests.

- Block Storage (e.g., Amazon EBS, Azure Disks): Provides persistent storage volumes for virtual machines. Cost is based on the volume size, performance characteristics (e.g., IOPS), and the region.

- File Storage (e.g., Amazon EFS, Azure Files): Offers shared file systems for multiple instances. Cost is based on the storage capacity used, the performance tier selected, and the number of file operations.

- Data Volume: The total amount of data stored directly impacts the cost. Cloud providers charge per GB or TB stored per month. For example, storing 1 TB of data will be more expensive than storing 100 GB.

- Access Frequency: The frequency with which data is accessed can influence the cost. Cloud providers offer different storage tiers based on access patterns (e.g., frequent access, infrequent access, archive). Tiers with less frequent access are typically cheaper per GB but have higher retrieval costs. For instance, archiving data in a cold storage tier will be less expensive for storage but will incur charges for data retrieval.

- Data Redundancy: The level of data redundancy (e.g., the number of copies of data stored) affects storage costs. Higher redundancy levels provide greater data durability but increase storage expenses.
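The trade-off between per-GB storage price and retrieval charges can be sketched in a few lines. The tier prices below are invented placeholders chosen only to show the shape of the calculation; real prices depend on provider, region, and tier.

```python
def monthly_storage_cost(gb_stored, price_per_gb_month,
                         gb_retrieved=0.0, retrieval_price_per_gb=0.0):
    """Storage charge plus retrieval charge for one month."""
    return gb_stored * price_per_gb_month + gb_retrieved * retrieval_price_per_gb


# Placeholder prices (assumptions, not published rates).
HOT_PRICE = 0.023        # USD per GB-month, no retrieval fee
COLD_PRICE = 0.004       # USD per GB-month
COLD_RETRIEVAL = 0.02    # USD per GB retrieved

hot = monthly_storage_cost(1000, HOT_PRICE)
cold = monthly_storage_cost(1000, COLD_PRICE,
                            gb_retrieved=100,
                            retrieval_price_per_gb=COLD_RETRIEVAL)

# Retrieval volume at which the cold tier stops being cheaper for 1000 GB.
break_even_gb = 1000 * (HOT_PRICE - COLD_PRICE) / COLD_RETRIEVAL

print(f"hot tier:  ${hot:.2f}")
print(f"cold tier: ${cold:.2f}")
print(f"cold tier is cheaper below {break_even_gb:.0f} GB retrieved per month")
```

Request charges and minimum storage durations, which some tiers impose, are left out of this sketch.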

Common Networking Charges in the Cloud

Networking charges are a significant component of cloud costs, particularly for applications that involve substantial data transfer. Understanding these charges is crucial for cost forecasting.

- Data Transfer Out: Charges for transferring data from the cloud provider’s network to the internet or to other cloud providers. These charges are typically based on the volume of data transferred, with costs varying based on the destination and region.

- Data Transfer In: Generally, data transfer into the cloud provider’s network is free. However, there may be charges for data transfer from certain sources, or for specific services.

- Inter-Region Data Transfer: Charges for transferring data between different regions within the same cloud provider. These charges are often more expensive than data transfer within a single region.

- Elastic IP Addresses/Public IPs: Charges for assigning static public IP addresses to instances.

- Load Balancers: Charges for using load balancing services, which distribute traffic across multiple instances. Costs depend on the type of load balancer, the number of instances it manages, and the volume of traffic it handles.

- VPN Connections: Charges for establishing virtual private network (VPN) connections between the cloud environment and on-premises networks.

- Content Delivery Network (CDN): Charges for using CDN services to cache and deliver content closer to users. Costs depend on the volume of data delivered and the geographic distribution of users.

- Network Firewall: Charges for using network firewall services to secure network traffic.
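A simple way to estimate data transfer charges is to multiply each traffic category's volume by a per-GB rate. The categories follow the list above; the rates and volumes are hypothetical and should be replaced with figures from the provider's pricing pages.

```python
# Placeholder per-GB rates in USD (assumptions for illustration only).
RATES_PER_GB = {
    "ingress": 0.00,          # data transfer in is generally free
    "intra_region": 0.01,
    "inter_region": 0.02,
    "internet_egress": 0.09,
}


def data_transfer_cost(volumes_gb):
    """Sum volume x rate over each transfer category."""
    unknown = set(volumes_gb) - set(RATES_PER_GB)
    if unknown:
        raise KeyError(f"no rate defined for: {sorted(unknown)}")
    return sum(gb * RATES_PER_GB[kind] for kind, gb in volumes_gb.items())


monthly_volumes = {
    "ingress": 5000,
    "intra_region": 2000,
    "inter_region": 500,
    "internet_egress": 1000,
}

total = data_transfer_cost(monthly_volumes)
print(f"estimated data transfer cost: ${total:.2f}")
```

Fixed hourly charges such as load balancers, public IP addresses, and VPN connections would be added as separate line items.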

Assessing Current Resource Consumption

Understanding and accurately assessing current resource consumption is crucial for effective cloud cost forecasting. This process provides a baseline for future cost predictions, allowing for informed decisions regarding resource allocation and optimization. By analyzing existing usage patterns, we can identify trends, bottlenecks, and areas for potential cost savings. This assessment forms the foundation upon which more complex forecasting models are built.

Monitoring Resource Usage within the Target Cloud Environment

Monitoring resource usage involves actively tracking the consumption of CPU, memory, storage, and network bandwidth within the cloud environment. This real-time and historical data provides insights into application performance and resource utilization, enabling proactive management and cost optimization. The specific methods and tools for monitoring vary depending on the cloud provider, but the underlying principles remain consistent.

- Cloud Provider’s Native Monitoring Tools: Most cloud providers offer built-in monitoring services that provide comprehensive data on resource usage. These tools often include dashboards, alerts, and reporting capabilities.

- Amazon Web Services (AWS): AWS CloudWatch provides metrics for various services, including EC2, S3, and RDS. It allows users to create custom dashboards, set up alarms, and analyze historical data.

- Microsoft Azure: Azure Monitor offers similar functionality to CloudWatch, providing metrics, logs, and alerts for Azure resources. It also includes features for application performance monitoring.

- Google Cloud Platform (GCP): Google Cloud Monitoring (formerly Stackdriver) provides monitoring, logging, and alerting services for GCP resources. It integrates with other GCP services and offers a wide range of metrics.

- Third-Party Monitoring Tools: Numerous third-party tools offer advanced monitoring capabilities, including more granular metrics, custom dashboards, and integration with other systems.

- Datadog: A popular monitoring and analytics platform that provides comprehensive visibility into cloud environments.

- New Relic: A performance monitoring platform that offers application performance monitoring, infrastructure monitoring, and real-user monitoring.

- Prometheus and Grafana: Open-source tools for monitoring and visualization. Prometheus collects metrics, and Grafana provides a powerful dashboarding interface.

- Agent-Based Monitoring: Some monitoring solutions require agents to be installed on virtual machines or other resources to collect detailed performance data. This approach provides more in-depth insights but may require additional configuration and management.

Tools and Methods for Tracking CPU, Memory, and Storage Utilization

Tracking CPU, memory, and storage utilization requires the implementation of specific tools and methodologies tailored to the cloud environment and the applications running within it. This involves collecting metrics at regular intervals and analyzing them to understand resource consumption patterns. The granularity of the data collected (e.g., every minute, every five minutes) should be appropriate for the level of detail needed for the forecasting process.

- CPU Utilization: CPU utilization measures the percentage of time the CPU is actively processing instructions. It is a critical metric for understanding application performance and identifying potential bottlenecks.

- Metrics: CPU utilization percentage, CPU credits consumed, CPU credit balance (for burstable instances).

- Tools: Cloud provider monitoring tools (CloudWatch, Azure Monitor, Google Cloud Monitoring), third-party monitoring tools (Datadog, New Relic), operating system-level tools (e.g., `top`, `vmstat` on Linux; Task Manager on Windows).

- Analysis: Analyze trends over time to identify periods of high CPU utilization, which may indicate the need for scaling or optimization.

- Memory Utilization: Memory utilization measures the amount of RAM being used by applications and the operating system. High memory utilization can lead to performance degradation and, in some cases, application crashes.

- Metrics: Memory usage percentage, available memory, swap usage (if applicable).

- Tools: Cloud provider monitoring tools, third-party monitoring tools, operating system-level tools (e.g., `free`, `top` on Linux; Task Manager on Windows).

- Analysis: Monitor memory usage to identify potential memory leaks or inefficient memory allocation. Consider increasing the instance size or optimizing application code if memory usage is consistently high.

- Storage Utilization: Storage utilization measures the amount of storage space being used on disks or other storage devices. Monitoring storage is essential for preventing storage shortages and ensuring sufficient capacity for data storage.

- Metrics: Disk space used, disk space available, I/O operations per second (IOPS), throughput.

- Tools: Cloud provider monitoring tools, third-party monitoring tools, operating system-level tools (e.g., `df` on Linux; Disk Management on Windows).

- Analysis: Track storage usage trends to predict when storage capacity will be exhausted. Consider increasing storage volume size, optimizing data storage practices (e.g., data compression, archiving), or implementing data lifecycle management policies.
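To make the storage analysis concrete, the sketch below fits a straight line to daily disk usage and estimates how many days remain before the volume is full. It assumes roughly linear growth and evenly spaced daily samples; the sample data and the `days_until_full` helper are illustrative only.

```python
def days_until_full(used_gb, capacity_gb):
    """Estimate days from the last sample until capacity is reached.

    used_gb: one usage sample per day, oldest first.
    Returns None when usage is flat or shrinking.
    """
    n = len(used_gb)
    if n < 2:
        raise ValueError("need at least two samples")
    mean_x = (n - 1) / 2
    mean_y = sum(used_gb) / n
    # Ordinary least-squares slope and intercept.
    sxx = sum((x - mean_x) ** 2 for x in range(n))
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(used_gb))
    slope = sxy / sxx
    if slope <= 0:
        return None
    intercept = mean_y - slope * mean_x
    day_full = (capacity_gb - intercept) / slope
    return max(0.0, day_full - (n - 1))


daily_usage_gb = [400, 405, 410, 415, 420, 425, 430]  # sample data
remaining = days_until_full(daily_usage_gb, capacity_gb=500)
print(f"about {remaining:.0f} days until the volume is full")
```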

Collecting Historical Data on Resource Consumption

Collecting historical data is fundamental to effective cost forecasting. This data provides the foundation for understanding past usage patterns and predicting future resource needs. The length of the historical data required depends on the forecasting model being used and the stability of the application workload. Typically, at least several months of historical data is recommended to capture long-term trends, and a year or more where annual seasonal variations matter.

- Data Retention Policies: Implement data retention policies to ensure that historical data is stored for the required duration. This includes defining the retention period and the storage location for the data.

- Data Export and Storage: Export the monitoring data from the cloud provider’s monitoring tools or third-party monitoring solutions. Store the data in a suitable format, such as CSV, JSON, or a time-series database. Consider using a data warehouse or data lake for storing large volumes of historical data.

- CloudWatch Logs Export: AWS CloudWatch allows exporting logs to S3 buckets or other destinations. This enables archiving and analysis of log data.

- Azure Monitor Export: Azure Monitor can export data to Azure Storage, Azure Event Hubs, or other destinations.

- Google Cloud Monitoring Export: Google Cloud Monitoring can export data to BigQuery, enabling advanced analytics and reporting.

- Data Aggregation and Transformation: Aggregate the raw data into meaningful metrics and transform it into a format suitable for analysis and forecasting. This may involve calculating averages, sums, or other statistical measures.

- Data Visualization: Visualize the historical data using dashboards and charts to identify trends, patterns, and anomalies. This can help in understanding resource consumption behavior and identifying areas for optimization.
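The aggregation step can be sketched as follows: raw samples are grouped by calendar day and reduced to an average and a peak. The `(timestamp, value)` layout and the sample values are assumptions; exported monitoring data will have a provider-specific schema.

```python
from collections import defaultdict
from datetime import datetime

# Hypothetical exported samples: (ISO 8601 timestamp, CPU utilization %).
samples = [
    ("2024-01-01T00:00:00", 20.0),
    ("2024-01-01T12:00:00", 40.0),
    ("2024-01-02T00:00:00", 30.0),
    ("2024-01-02T12:00:00", 70.0),
]


def daily_summary(rows):
    """Group samples by day and compute the daily average and maximum."""
    by_day = defaultdict(list)
    for timestamp, value in rows:
        day = datetime.fromisoformat(timestamp).date().isoformat()
        by_day[day].append(value)
    return {
        day: {"avg": sum(values) / len(values), "max": max(values)}
        for day, values in sorted(by_day.items())
    }


summary = daily_summary(samples)
for day, stats in summary.items():
    print(day, stats)
```

Keeping both the average and the peak is useful because averages drive cost trends while peaks drive sizing decisions.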

Designing a Dashboard for Visualizing Resource Consumption Data

Creating a well-designed dashboard is essential for visualizing resource consumption data and gaining actionable insights. The dashboard should provide a clear and concise overview of resource usage, allowing users to quickly identify trends, anomalies, and potential cost-saving opportunities. A dashboard should be designed to be easily understandable and should focus on key metrics relevant to cost forecasting.

| Metric | Visualization Type | Description |
|---|---|---|
| CPU Utilization (per instance) | Line Chart | Displays the percentage of CPU utilization over time for each instance. This chart helps identify instances that are consistently underutilized or overutilized. |
| Memory Utilization (per instance) | Line Chart | Shows the percentage of memory used by each instance over time. This chart can reveal memory leaks or instances that are nearing their memory limits. |
| Storage Utilization (per volume) | Bar Chart | Displays the amount of storage used on each volume. It provides a clear view of storage capacity and potential storage shortages. |

Estimating Future Resource Needs

Forecasting future resource needs is a critical step in cloud cost optimization. Accurate projections allow for proactive scaling, preventing performance bottlenecks and minimizing unnecessary spending. This involves analyzing current consumption, understanding growth patterns, and accounting for seasonal fluctuations to build a realistic and adaptable resource plan.

Projecting Future Resource Requirements Based on Current Trends

Analyzing historical data provides a foundation for projecting future resource needs. This typically involves identifying trends in resource consumption over time.

- Trend Analysis: This technique involves examining the rate of increase or decrease in resource usage over a defined period. Linear regression, a statistical method, can be applied to historical data to establish a trend line. The slope of the line indicates the rate of change, allowing for future predictions. For example, if compute instance usage has grown by a steady 10 instances per month over the past six months, a linear projection extends that line forward. Growth at a constant percentage (such as 10% per month) compounds, so it is better projected by fitting the trend to the logarithm of usage.

- Moving Averages: Moving averages smooth out short-term fluctuations in data, highlighting the underlying trend. Different periods can be used for the moving average (e.g., 3-month, 6-month). This is particularly useful when dealing with data that has some level of noise or variability. A 3-month moving average can provide a more stable estimate of compute resource demand than a simple monthly data point.

- Exponential Smoothing: This method gives more weight to recent data points, making it responsive to changes in the trend. It is suitable for situations where the trend is not entirely stable and might be subject to shifts. Different smoothing parameters are used to control the weight given to recent data.
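The three techniques above can be sketched in plain Python. This is a minimal illustration on made-up monthly usage figures, not a production forecasting library; the function names and the smoothing parameter `alpha` are chosen for this example.

```python
def linear_forecast(values, steps_ahead):
    """Fit a least-squares line to evenly spaced values and extrapolate."""
    n = len(values)
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    sxx = sum((x - mean_x) ** 2 for x in range(n))
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(values))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept + slope * (n - 1 + steps_ahead)


def moving_average(values, window):
    """Average of each run of `window` consecutive values."""
    return [
        sum(values[i - window + 1 : i + 1]) / window
        for i in range(window - 1, len(values))
    ]


def exponential_smoothing(values, alpha):
    """Simple exponential smoothing; higher alpha weights recent data more."""
    smoothed = [values[0]]
    for value in values[1:]:
        smoothed.append(alpha * value + (1 - alpha) * smoothed[-1])
    return smoothed


usage = [100, 110, 120, 130, 140, 150]  # instances per month (sample data)

print(linear_forecast(usage, steps_ahead=1))
print(moving_average(usage, window=3))
print(exponential_smoothing(usage, alpha=0.5))
```

On a steadily rising series like this one, both smoothing methods lag behind the latest value, which is why they are usually paired with an explicit trend term when growth is sustained.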

Forecasting Resource Needs Based on Anticipated Growth

Beyond historical trends, anticipating growth drivers is crucial for forecasting. This requires understanding the business context and projecting how various factors will impact resource demand.

- User Growth Projections: If the application’s usage is directly tied to the number of users, project user growth based on marketing forecasts, sales pipelines, or market research. This will drive the need for compute, storage, and network resources.

- Feature Release Impact: New feature releases often lead to increased resource consumption. Analyze the expected impact of new features on resource usage. This involves estimating the additional compute, storage, and network resources required to support the new features. Consider beta testing data to estimate the impact before the general release.

- Market Expansion: Expansion into new geographic regions or markets will likely increase resource needs, particularly for services that need to be deployed closer to the users. Factor in the projected user base in the new regions and the associated infrastructure costs.

- Data Volume Growth: For data-intensive applications, predict data volume growth based on the rate of data generation, storage retention policies, and expected data ingestion rates. This impacts storage and potentially compute requirements.

Incorporating Seasonality or Cyclical Patterns into the Forecast

Many applications experience seasonal or cyclical patterns in resource usage. Accounting for these patterns improves forecast accuracy.

- Identifying Seasonal Patterns: Analyze historical data to identify recurring patterns over specific periods (e.g., monthly, quarterly, annually). Seasonal patterns can be visualized using time series plots. Peak demand during specific times of the year can necessitate adjustments to resource allocation.

- Seasonal Decomposition: Decompose the time series data into trend, seasonal, and residual components. This helps isolate the seasonal effects, allowing for more accurate forecasts.

- Adjusting Forecasts: Once seasonal patterns are identified, adjust the baseline forecast to account for the expected fluctuations. This could involve adding or subtracting seasonal indices from the forecast. For example, retail websites often experience increased traffic during the holiday shopping season.
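One simple way to derive additive seasonal indices is to average each position in the cycle and subtract the overall mean. The sketch below does this for two years of invented quarterly data and applies the indices to a flat baseline; it assumes the history has no strong trend, which a fuller decomposition would remove first.

```python
def additive_seasonal_indices(values, period):
    """Mean of each seasonal position minus the overall mean."""
    if len(values) % period != 0:
        raise ValueError("history must cover whole cycles")
    overall_mean = sum(values) / len(values)
    indices = []
    for position in range(period):
        season_values = values[position::period]
        indices.append(sum(season_values) / len(season_values) - overall_mean)
    return indices


def apply_seasonality(baseline, indices):
    """Add the matching seasonal index to each baseline forecast value."""
    return [b + indices[i % len(indices)] for i, b in enumerate(baseline)]


# Two years of quarterly VM counts (sample data with a Q4 peak).
history = [100, 120, 110, 170, 100, 120, 110, 170]
indices = additive_seasonal_indices(history, period=4)

baseline_forecast = [130, 130, 130, 130]  # hypothetical trend-only forecast
seasonal_forecast = apply_seasonality(baseline_forecast, indices)

print(indices)
print(seasonal_forecast)
```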

Scenario: Predicting Compute Resource Needs Based on Projected User Growth

A Software-as-a-Service (SaaS) company providing project management tools has 10,000 active users currently. They project a 20% user growth rate per quarter based on their sales pipeline. Their current compute infrastructure utilizes 100 virtual machines (VMs), each supporting 100 concurrent users effectively. To forecast compute needs:

1. Project User Growth

- Quarter 1: 10,000 × 1.20 = 12,000 users

- Quarter 2: 12,000 × 1.20 = 14,400 users

- Quarter 3: 14,400 × 1.20 = 17,280 users

- Quarter 4: 17,280 × 1.20 = 20,736 users

2. Calculate VMs Required

VMs needed = (Projected Users) / (Users per VM)

- Quarter 1: 12,000 / 100 = 120 VMs

- Quarter 2: 14,400 / 100 = 144 VMs

- Quarter 3: 17,280 / 100 = 173 VMs (rounded up)

- Quarter 4: 20,736 / 100 = 208 VMs (rounded up)

This forecast indicates the company will need to scale their compute resources significantly over the next year to accommodate user growth. They would also need to consider the instance types and their cost, as well as the operational overhead of managing more VMs.
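The scenario's arithmetic can be reproduced with a short script. The `forecast_vms` function is a hypothetical helper written for this example; it uses exact fractions so that compounding and rounding up behave predictably.

```python
import math
from fractions import Fraction


def forecast_vms(current_users, growth_percent, users_per_vm, quarters):
    """Compound user growth each quarter and round VM counts up."""
    plan = []
    users = Fraction(current_users)
    for quarter in range(1, quarters + 1):
        users *= Fraction(100 + growth_percent, 100)
        vms = math.ceil(users / users_per_vm)
        plan.append((quarter, round(users), vms))
    return plan


plan = forecast_vms(current_users=10_000, growth_percent=20,
                    users_per_vm=100, quarters=4)

for quarter, users, vms in plan:
    print(f"Quarter {quarter}: {users:,} users -> {vms} VMs")
```

Changing `growth_percent` or `users_per_vm` gives a quick sensitivity check on how strongly the VM count depends on each assumption.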

Selecting the Right Pricing Models

Choosing the optimal pricing model is crucial for cost-effective cloud utilization. Cloud providers offer various pricing structures, each designed to cater to different workload characteristics and usage patterns. A thorough understanding of these models, along with their associated trade-offs, is essential for making informed decisions and minimizing cloud spending. This section delves into the specifics of different pricing models, providing guidance on selecting the most appropriate option for various scenarios.

Pricing Model Overview

Cloud providers typically offer several pricing models to accommodate diverse needs. Each model presents a different balance between cost and flexibility. Understanding these models allows users to align their spending with their specific resource requirements and usage behavior.

- On-Demand Instances: This model allows users to pay for compute capacity by the hour or second, without any upfront commitment. It provides maximum flexibility, allowing users to launch and terminate instances as needed. This model is suitable for unpredictable workloads, short-term tasks, and testing environments.

- Reserved Instances: Reserved Instances (RIs) offer significant discounts compared to on-demand pricing in exchange for a commitment to use a specific instance type for a defined period (typically one or three years). RIs are ideal for steady-state workloads with predictable resource needs, such as databases or web servers.

- Spot Instances: Spot Instances let users run workloads on spare compute capacity, potentially achieving substantial cost savings. Spot instances are ideal for fault-tolerant applications that can withstand interruptions, such as batch processing jobs or data analysis. The price of a spot instance fluctuates based on supply and demand, and the provider can reclaim the capacity on short notice, for example when it needs the capacity back or when the price rises above a maximum the user has set.

- Savings Plans: Savings Plans provide a flexible pricing model that offers discounts on compute usage in exchange for a commitment to a consistent amount of compute usage (measured in dollars per hour) over a one- or three-year term. Unlike RIs, some Savings Plans (for example, AWS Compute Savings Plans) apply across instance families and regions, offering greater flexibility.

- Dedicated Hosts: Dedicated Hosts provide dedicated physical servers for your use. This model is suitable for workloads with specific compliance or regulatory requirements that require the isolation of hardware. Users pay for the entire server, regardless of their actual utilization.

Cost and Flexibility Trade-offs

Each pricing model presents a unique trade-off between cost and flexibility. The optimal choice depends on the workload’s characteristics, including its predictability, duration, and tolerance for interruption.

- On-Demand: Offers the highest flexibility but typically the highest cost. Suitable for unpredictable workloads or those with short lifespans.

- Reserved Instances: Provides significant cost savings compared to on-demand, but requires a commitment. Less flexible than on-demand, as the instance type and region are fixed.

- Spot Instances: Offers the lowest cost but the least flexibility. Instances can be interrupted at short notice if the spot price exceeds the user’s maximum price or the provider reclaims the capacity. Suitable for fault-tolerant and interruptible workloads.

- Savings Plans: Provides a balance between cost savings and flexibility. Offers discounts without requiring rigid instance type commitments.

- Dedicated Hosts: Offers the highest level of control and isolation but comes at a premium cost.
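The trade-off between on-demand and committed pricing can be made concrete with a break-even calculation. The following is a minimal sketch, not a provider formula: the hourly rates are hypothetical placeholders, and it assumes a reserved commitment is billed for every hour of the month whether or not the instance runs.

```python
HOURS_PER_MONTH = 730  # average hours in a month (8760 / 12)


def break_even_utilization(on_demand_rate: float, reserved_rate: float) -> float:
    """Fraction of the month an instance must run before reserving is cheaper.

    reserved_rate is the effective hourly rate of the commitment,
    charged for all hours (assumption for this sketch).
    """
    return reserved_rate / on_demand_rate


def cheaper_option(on_demand_rate: float, reserved_rate: float, hours_used: float) -> str:
    """Compare one month of on-demand usage against a full-month commitment."""
    on_demand_cost = on_demand_rate * hours_used
    reserved_cost = reserved_rate * HOURS_PER_MONTH
    return "reserved" if reserved_cost < on_demand_cost else "on-demand"


if __name__ == "__main__":
    # Hypothetical rates: $0.10/hour on-demand, $0.06/hour effective reserved.
    print(round(break_even_utilization(0.10, 0.06), 2))
    print(cheaper_option(0.10, 0.06, hours_used=365))  # runs half the month
    print(cheaper_option(0.10, 0.06, hours_used=600))  # runs most of the month
```

With these placeholder rates, an instance that runs less than about 60% of the month stays cheaper on on-demand pricing, which is why commitments suit steady-state workloads.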

Pricing Model Application

Selecting the appropriate pricing model requires careful consideration of the workload’s characteristics. The following examples illustrate when to use each model.

- On-Demand: Ideal for development and testing environments, where resource needs are unpredictable. Also suitable for short-lived tasks, such as batch processing jobs that run only occasionally. For example, a development team might use on-demand instances for their testing environment, as the workload is highly variable.

- Reserved Instances: Best suited for steady-state workloads, such as databases, web servers, or application servers that run continuously. For instance, a company running a production web application could purchase RIs for their core application servers to reduce their ongoing operational costs.

- Spot Instances: Perfect for fault-tolerant applications that can withstand interruptions, such as batch processing, data analysis, and machine learning tasks. Consider a data science team using spot instances to train machine learning models. The team can bid on spare capacity, significantly reducing their training costs.

- Savings Plans: Suitable for workloads with predictable compute usage. For instance, a company with consistent web traffic can use Savings Plans to cover the cost of its web servers and other compute resources.

- Dedicated Hosts: Appropriate for workloads that require dedicated hardware for compliance or regulatory reasons. A financial institution might use dedicated hosts for its sensitive data processing to meet specific security requirements.
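The selection guidance above can be expressed as a simple rule-based helper. This is a deliberately simplified sketch: the `Workload` fields and the order of the rules are assumptions made for illustration, and a real decision would also weigh prices, commitment terms, and risk tolerance.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    """Hypothetical description of a workload's characteristics."""
    predictable: bool             # steady, forecastable usage
    interruptible: bool           # tolerates instances being reclaimed
    needs_dedicated_hardware: bool
    fixed_instance_type: bool     # instance type and region will not change


def recommend_pricing_model(w: Workload) -> str:
    """Map workload traits to a pricing model, following the guidance above."""
    if w.needs_dedicated_hardware:
        return "Dedicated Hosts"
    if w.interruptible:
        return "Spot Instances"
    if w.predictable and w.fixed_instance_type:
        return "Reserved Instances"
    if w.predictable:
        return "Savings Plans"
    return "On-Demand"


if __name__ == "__main__":
    batch_job = Workload(predictable=False, interruptible=True,
                         needs_dedicated_hardware=False, fixed_instance_type=False)
    print(recommend_pricing_model(batch_job))
```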

Pricing Model Comparison

The following table provides a comparative analysis of the different cloud pricing models, highlighting their advantages and disadvantages.

| Pricing Model | Advantages | Disadvantages | Typical Use Cases |
|---|---|---|---|
| On-Demand | Highest flexibility, no upfront commitment. | Highest per-unit price; costly for long-running workloads. | Development and testing, short-lived tasks, burst capacity. |
| Reserved Instances | Significant cost savings, predictable costs. | Requires a one- or three-year commitment, less flexible than on-demand. | Steady-state workloads, databases, web servers, application servers. |
| Spot Instances | Lowest cost, potential for significant savings. | Instances can be interrupted; suitable only for fault-tolerant workloads. | Batch processing, data analysis, machine learning, fault-tolerant applications. |
| Savings Plans | Flexible, offers discounts without rigid instance type commitments. | Requires commitment to a consistent amount of compute usage. | Workloads with predictable compute usage, web servers. |
| Dedicated Hosts | Highest level of control and isolation. | Premium cost; the entire server is billed regardless of utilization. | Compliance requirements, regulatory needs. |

Utilizing Cloud Cost Management Tools

Effectively managing cloud costs necessitates leveraging the robust cost management tools offered by cloud providers. These tools provide visibility into spending patterns, enable proactive cost optimization, and facilitate the establishment of budgetary controls. This section delves into the functionalities of these tools, providing practical examples and insights to streamline cloud financial management.

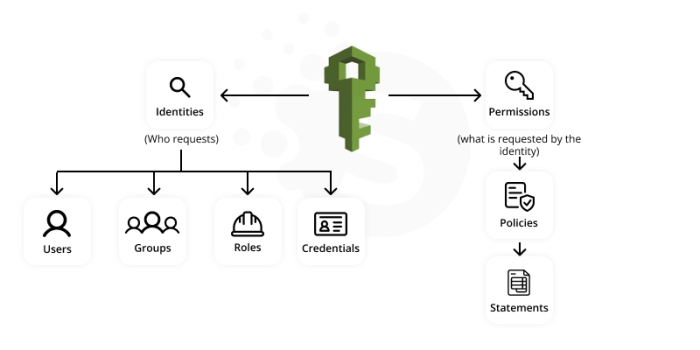

Available Cloud Provider Cost Management Tools

Cloud providers offer a suite of cost management tools, each designed to address specific aspects of cost control and optimization. These tools are typically integrated into the cloud provider’s console and are accessible to account administrators and users with appropriate permissions. Understanding the capabilities of these tools is crucial for informed decision-making.

Monitoring and Optimizing Costs Using Cloud Provider Tools

Cloud cost management tools provide the capability to monitor and optimize cloud spending. This involves analyzing cost trends, identifying areas of overspending, and implementing strategies to reduce costs. The following examples illustrate practical applications of these tools.

- Example 1: AWS Cost Explorer: AWS Cost Explorer allows users to visualize, understand, and manage their AWS costs and usage over time. Users can filter and group data by various dimensions, such as service, region, and tag. For example, a user can identify the specific services contributing the most to their overall spending and then drill down to see which resources within those services are incurring the most cost. This allows for targeted optimization efforts.

- Example 2: Google Cloud Cost Management: Google Cloud provides Cloud Billing, which includes cost reporting, cost breakdowns, and recommendations. Users can create custom dashboards to monitor spending and set up alerts to notify them of unexpected cost increases. For instance, a user might set up an alert to be notified if their daily spending on compute instances exceeds a predefined threshold.

- Example 3: Azure Cost Management + Billing: Azure Cost Management + Billing provides a comprehensive view of Azure spending. It offers features such as cost analysis, budgeting, and recommendations. Users can analyze costs by resource, resource group, and subscription. They can also leverage the recommendations feature to identify potential cost savings, such as rightsizing virtual machines or deleting unused resources.

Setting Up Budgets and Alerts for Spending Control

Setting up budgets and alerts is a critical aspect of cloud cost management, enabling proactive control over spending. This process involves defining spending thresholds and configuring notifications to alert users when those thresholds are approached or exceeded.

- Budgeting: Budgets are set to define spending limits for a specified period. Cloud providers allow users to define budgets based on various criteria, such as service, region, or tag. When a budget is nearing its threshold, alerts are triggered to notify the user.

- Alerting: Alerts are configured to notify users when specific spending thresholds are reached. These alerts can be sent via email, SMS, or other notification channels. Alerts can be set for actual cost, forecasted cost, or both. For instance, if a user sets a budget of $1,000 per month for a specific service and sets an alert at 80% of the budget, they will receive a notification when the spending reaches $800.
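The threshold logic behind such alerts can be sketched in a few lines. This is an illustrative model only, not any provider's API: the function name, the default thresholds, and the tuple format of the result are assumptions.

```python
def budget_alerts(budget: float, actual: float, forecast: float,
                  thresholds=(0.8, 1.0)):
    """Return (kind, threshold) pairs for every threshold that has been crossed.

    kind is "actual" when real spend has reached the threshold, or
    "forecast" when only the projected end-of-period spend has.
    """
    alerts = []
    for fraction in thresholds:
        limit = budget * fraction
        if actual >= limit:
            alerts.append(("actual", fraction))
        elif forecast >= limit:
            alerts.append(("forecast", fraction))
    return alerts


if __name__ == "__main__":
    # The $1,000 budget with an 80% alert from the example above.
    for kind, fraction in budget_alerts(budget=1000, actual=800, forecast=950):
        print(f"{kind} spend reached {fraction:.0%} of budget")
```

Checking forecasted spend as well as actual spend gives earlier warning, at the price of occasional false alarms when the forecast overshoots.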

Features of the Cloud Provider’s Cost Management Dashboard

The cost management dashboard provided by cloud providers is the central hub for monitoring and managing cloud spending. This dashboard provides a comprehensive overview of cost trends, resource usage, and potential savings opportunities. The following features are generally found in cloud provider cost management dashboards.

- Cost Visualization: Displays costs and usage data through interactive charts and graphs, allowing users to visualize spending trends over time. These visualizations often include filtering and grouping capabilities, enabling users to analyze costs by service, region, tag, and other dimensions.

- Cost Breakdown: Provides detailed breakdowns of costs by service, resource, and other relevant categories. This allows users to identify the specific components contributing to their overall spending.

- Budgeting and Forecasting: Enables users to set budgets and forecast future spending based on current usage patterns. Budgeting tools often include the ability to set alerts when spending approaches or exceeds predefined thresholds.

- Recommendations: Offers cost optimization recommendations based on usage patterns and best practices. These recommendations might include suggestions for rightsizing resources, deleting unused resources, or switching to more cost-effective pricing models.

- Reporting: Generates detailed reports on cost and usage data, allowing users to track spending trends and identify areas for improvement. Reports can often be customized and scheduled for automated delivery.

- Alerting and Notifications: Allows users to set up alerts and notifications to be informed of cost changes or anomalies. Alerts can be configured to trigger notifications via email, SMS, or other channels.

- Tagging and Resource Grouping: Supports the use of tags and resource groups to categorize and organize resources. This allows users to track costs associated with specific projects, teams, or applications.

- Cost Allocation: Enables the allocation of costs to specific departments or projects, facilitating cost accounting and chargeback processes.
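Tag-based cost breakdown and allocation amount to grouping billing line items by a tag value. The sketch below assumes a hypothetical, simplified line-item shape (a dict with `cost` and `tags` keys); real billing exports have provider-specific schemas.

```python
from collections import defaultdict


def cost_by_tag(line_items, tag_key: str, untagged: str = "untagged") -> dict:
    """Sum line-item costs per value of the given tag.

    Items missing the tag are grouped under `untagged`, which makes
    gaps in the tagging policy visible in the report.
    """
    totals = defaultdict(float)
    for item in line_items:
        tag_value = item.get("tags", {}).get(tag_key, untagged)
        totals[tag_value] += item["cost"]
    return dict(totals)


if __name__ == "__main__":
    # Hypothetical billing records.
    items = [
        {"service": "compute", "cost": 10.0, "tags": {"team": "web"}},
        {"service": "storage", "cost": 5.0, "tags": {"team": "data"}},
        {"service": "compute", "cost": 2.5, "tags": {}},
    ]
    print(cost_by_tag(items, "team"))
```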

Optimizing Resource Allocation

Optimizing resource allocation is a critical step in cloud cost forecasting and management. It involves efficiently utilizing the cloud resources provisioned to meet workload demands, while minimizing unnecessary expenditure. This process encompasses identifying underutilized resources, right-sizing instances, and implementing automated scaling mechanisms to adapt to fluctuating demands. Effective resource allocation directly impacts the accuracy of cost forecasts and the overall financial efficiency of cloud operations.

Identifying Underutilized Resources and Optimization Opportunities

Identifying underutilized resources is the initial step in optimization. This process involves a comprehensive analysis of resource utilization metrics to pinpoint instances or services that are not being fully leveraged. This can lead to significant cost savings by reducing the resources allocated to non-critical workloads.

Analyzing resource utilization involves several key steps:

- Monitoring CPU Utilization: Monitor the CPU utilization of instances. If the average CPU utilization is consistently low (e.g., below 20-30%) for extended periods, the instance is likely underutilized.

- Assessing Memory Utilization: Evaluate memory usage to identify instances with excessive unused memory. Tools like cloud provider monitoring dashboards or third-party monitoring solutions can provide this data.

- Evaluating Disk I/O: Analyze disk input/output operations per second (IOPS) and throughput. Underutilized disks can indicate inefficient storage allocation.

- Analyzing Network Traffic: Review network bandwidth usage to determine if instances are over-provisioned for their network needs.

- Utilizing Cloud Provider Tools: Cloud providers offer tools to analyze resource utilization and identify optimization opportunities. These tools provide insights into resource usage patterns and recommendations for right-sizing instances. For example, AWS Compute Optimizer, Azure Advisor, and Google Cloud’s recommendations engine can help identify underutilized resources.

- Analyzing Historical Data: Examine historical resource usage data to identify trends and patterns. This analysis can reveal seasonal fluctuations in demand and inform resource allocation decisions. For instance, if an application experiences peak usage during business hours and low usage overnight, the instance can be scaled down during off-peak hours.
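The CPU check described above can be sketched as a filter over collected utilization samples. The input format (a mapping from instance name to a list of CPU percentages) and the 20% default threshold are assumptions for illustration; in practice the samples would come from the provider's monitoring service.

```python
from statistics import mean


def find_underutilized(cpu_samples: dict, threshold_pct: float = 20.0) -> list:
    """Return names of instances whose average CPU is below the threshold.

    Instances with no samples are skipped rather than flagged, since
    missing data is not evidence of low utilization.
    """
    return sorted(
        name
        for name, samples in cpu_samples.items()
        if samples and mean(samples) < threshold_pct
    )


if __name__ == "__main__":
    # Hypothetical hourly CPU utilization percentages per instance.
    samples = {
        "web-1": [55.0, 62.0, 48.0],
        "batch-1": [5.0, 8.0, 4.0],
        "cache-1": [18.0, 15.0, 12.0],
        "new-1": [],
    }
    print(find_underutilized(samples))
```

An average hides short peaks, so a flagged instance is a candidate for review against its peak usage, not an automatic downsizing target.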

Methods for Right-Sizing Instances

Right-sizing instances involves selecting the appropriate instance size to match the actual workload demands. This is a crucial step in optimizing resource allocation and minimizing costs. Over-provisioning results in unnecessary expenses, while under-provisioning can lead to performance issues.

Right-sizing strategies include:

- Analyzing Performance Metrics: Collect and analyze performance metrics such as CPU utilization, memory usage, disk I/O, and network traffic. These metrics provide insights into the resource requirements of the workload.

- Choosing Instance Types: Select the instance type that best matches the workload’s requirements. Consider factors like CPU, memory, storage, and network capabilities. For example, memory-intensive applications should be deployed on instances with sufficient memory.

- Testing and Benchmarking: Conduct performance testing and benchmarking to evaluate the performance of different instance sizes. This helps to determine the optimal instance size for the workload.

- Considering Workload Characteristics: Consider the characteristics of the workload, such as its scalability, elasticity, and burstiness. For example, web servers may benefit from instances with high network bandwidth.

- Utilizing Cloud Provider Recommendations: Leverage the recommendations provided by cloud providers. Tools like AWS Compute Optimizer, Azure Advisor, and Google Cloud’s recommendations engine can suggest optimal instance sizes based on resource usage data.

- Implementing a Continuous Monitoring and Optimization Process: Continuously monitor resource utilization and adjust instance sizes as needed. This ensures that resources are always optimized to meet the workload’s demands.
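One way to turn these strategies into a repeatable step is to pick the cheapest instance type that still covers observed peak demand plus some headroom. The catalog entries, prices, and the 20% headroom below are hypothetical values chosen for this sketch.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class InstanceType:
    name: str
    vcpus: int
    memory_gib: float
    hourly_price: float


def right_size(catalog, peak_vcpus: float, peak_memory_gib: float,
               headroom: float = 0.2) -> Optional[InstanceType]:
    """Return the cheapest type that fits peak usage plus headroom, or None."""
    need_cpu = peak_vcpus * (1 + headroom)
    need_mem = peak_memory_gib * (1 + headroom)
    candidates = [
        t for t in catalog
        if t.vcpus >= need_cpu and t.memory_gib >= need_mem
    ]
    return min(candidates, key=lambda t: t.hourly_price, default=None)


if __name__ == "__main__":
    # Hypothetical catalog; real names and prices come from the provider.
    catalog = [
        InstanceType("small", 2, 4, 0.05),
        InstanceType("medium", 4, 8, 0.10),
        InstanceType("large", 8, 16, 0.20),
    ]
    choice = right_size(catalog, peak_vcpus=2.5, peak_memory_gib=5)
    print(choice.name if choice else "no suitable type")
```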

Implementing Auto-Scaling to Optimize Resource Allocation

Auto-scaling is an automated process that dynamically adjusts the number of instances based on real-time demand. This ensures that resources are allocated efficiently and that the application can handle fluctuating workloads without manual intervention. Auto-scaling can significantly reduce costs by scaling down resources during periods of low demand.

Key aspects of auto-scaling implementation:

- Defining Scaling Policies: Define scaling policies that determine when to scale out (add instances) and scale in (remove instances). These policies are based on metrics like CPU utilization, memory usage, and network traffic. For example, a scaling policy might trigger the addition of instances when CPU utilization exceeds 70% for a sustained period.

- Setting Scaling Triggers: Configure scaling triggers that monitor the specified metrics and initiate scaling actions when the defined thresholds are met.

- Choosing Scaling Groups: Utilize scaling groups to manage the instances. Scaling groups automatically launch and terminate instances based on the scaling policies.

- Implementing Health Checks: Implement health checks to ensure that instances are healthy and responsive. Unhealthy instances are automatically terminated and replaced.

- Using Load Balancers: Use load balancers to distribute traffic across multiple instances. Load balancers ensure that traffic is evenly distributed and that the application remains available even if some instances become unavailable.

- Monitoring and Tuning: Continuously monitor the performance of the auto-scaling configuration and tune the scaling policies and triggers as needed. This ensures that the auto-scaling mechanism is effectively managing resources.
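A threshold-based scaling policy like the one described above reduces to a small decision function. The sketch below is a simplified model, not a provider's auto-scaling API: the 70% scale-out threshold comes from the example in the text, while the 30% scale-in threshold and the instance limits are assumptions.

```python
def desired_instance_count(current: int, cpu_pct: float,
                           scale_out_at: float = 70.0,
                           scale_in_at: float = 30.0,
                           min_instances: int = 1,
                           max_instances: int = 10) -> int:
    """Add one instance above scale_out_at, remove one below scale_in_at.

    The result is clamped to [min_instances, max_instances] so the
    policy can never scale to zero or grow without bound.
    """
    if cpu_pct > scale_out_at:
        target = current + 1
    elif cpu_pct < scale_in_at:
        target = current - 1
    else:
        target = current
    return max(min_instances, min(max_instances, target))


if __name__ == "__main__":
    print(desired_instance_count(current=3, cpu_pct=85.0))
    print(desired_instance_count(current=3, cpu_pct=50.0))
    print(desired_instance_count(current=1, cpu_pct=10.0))
```

Keeping a gap between the two thresholds avoids oscillation, where the group repeatedly adds and removes the same instance.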

Steps for Optimizing Compute Instance Sizes

| Step | Description | Tools/Considerations |
|---|---|---|
| 1. Monitor Resource Utilization | Continuously monitor CPU, memory, disk I/O, and network traffic to understand resource usage patterns. This data forms the foundation for informed optimization decisions. | Cloud provider monitoring tools (e.g., AWS CloudWatch, Azure Monitor, Google Cloud Monitoring), third-party monitoring solutions, application performance monitoring (APM) tools. |
| 2. Analyze Historical Data | Analyze historical resource usage data to identify trends, seasonality, and peak demand periods. This analysis provides context for right-sizing and auto-scaling decisions. | Cloud provider data analysis tools, custom scripting, data visualization tools. |
| 3. Identify Underutilized Resources | Identify instances that are consistently underutilized. Look for instances with low CPU utilization, excess memory, or low disk I/O. | Cloud provider recommendations (e.g., AWS Compute Optimizer, Azure Advisor, Google Cloud recommendations), manual analysis of monitoring data. |
| 4. Right-Size Instances | Adjust instance sizes to match actual workload demands. Consider different instance types and sizes based on the workload’s requirements. | Cloud provider instance type catalogs, performance testing and benchmarking, workload characteristics (CPU, memory, storage, network). |
| 5. Implement Auto-Scaling | Implement auto-scaling to automatically adjust the number of instances based on real-time demand. Define scaling policies and triggers based on resource utilization metrics. | Cloud provider auto-scaling services (e.g., AWS Auto Scaling, Azure Virtual Machine Scale Sets, Google Cloud Managed Instance Groups), load balancers. |
| 6. Test and Validate | Test the right-sized instances and auto-scaling configurations to ensure they meet performance requirements and optimize resource utilization. | Load testing tools, performance monitoring tools. |
| 7. Continuously Monitor and Optimize | Continuously monitor resource utilization, performance, and costs. Regularly review and adjust instance sizes and auto-scaling configurations to maintain optimal resource allocation. | Cloud provider monitoring tools, cost management tools, regular performance reviews. |

Implementing Cost-Effective Storage Strategies

Efficient storage management is critical for controlling cloud costs. Data storage often represents a significant portion of cloud expenses, and optimizing storage strategies directly impacts the overall cost-effectiveness of the cloud environment. By carefully selecting storage tiers, implementing data lifecycle management, and leveraging cost optimization tools, organizations can significantly reduce storage costs without compromising performance or data availability.

Different Storage Tiers and Associated Costs

Cloud providers offer a range of storage tiers, each designed for different data access patterns and performance requirements. Understanding these tiers and their associated costs is the first step toward cost optimization. The pricing structure varies significantly based on factors such as storage capacity, data access frequency, data retrieval speed, and data redundancy.

- Hot Storage: Designed for frequently accessed data, hot storage offers the highest performance and lowest latency. It is typically the most expensive storage tier. Examples include data used by active applications, databases, and content delivery networks (CDNs).

- Warm Storage: Suitable for data that is accessed less frequently than hot storage but still requires relatively fast retrieval. It balances performance and cost. Examples include data backups, older application logs, and infrequently accessed archives.

- Cold Storage: Optimized for infrequently accessed data with lower performance requirements. It offers a lower cost per gigabyte compared to hot and warm storage. Examples include long-term data archives, regulatory compliance data, and disaster recovery backups.

- Archive Storage: Designed for rarely accessed data that requires long-term retention. Archive storage offers the lowest cost per gigabyte but has the longest data retrieval times. Examples include historical records, legal documents, and data used for compliance purposes.

Choosing the Right Storage Tier Based on Data Access Patterns

Selecting the appropriate storage tier depends on the frequency with which data is accessed. Analyzing data access patterns is crucial for making informed decisions and minimizing storage costs. This analysis can be performed using cloud provider tools or third-party monitoring solutions. The goal is to match the storage tier’s characteristics with the data’s access requirements.

Consider these examples:

- High-Frequency Access: For applications requiring real-time data access, such as e-commerce platforms or financial transaction systems, hot storage is the most suitable option due to its high performance.

- Medium-Frequency Access: Applications that access data on a daily or weekly basis, such as data analytics dashboards or operational reporting, might benefit from warm storage, balancing cost and access speed.

- Low-Frequency Access: For infrequently accessed data, such as backups and historical data, cold storage provides a cost-effective solution.

- Rarely Accessed Data: Data that is accessed very infrequently, such as archival data or compliance records, can be stored in archive storage to minimize costs.

Strategies for Optimizing Storage Costs Through Data Lifecycle Management

Data lifecycle management (DLM) automates the process of moving data between different storage tiers based on predefined policies. This strategy helps to reduce storage costs by automatically migrating data to the most cost-effective tier based on its access frequency. DLM policies are typically based on data age, access patterns, and other criteria.

Key aspects of data lifecycle management include:

- Data Classification: Categorizing data based on its importance, access frequency, and retention requirements.

- Policy Definition: Creating rules that define how data is moved between storage tiers based on data characteristics and time-based criteria.

- Automation: Automating the process of data migration based on the defined policies.

- Monitoring and Optimization: Regularly monitoring the effectiveness of DLM policies and adjusting them as needed to optimize costs and performance.

Scenario: A company stores application logs in hot storage. After 30 days, the logs are rarely accessed. Implementing a lifecycle policy that automatically moves logs to warm storage after 30 days and then to cold storage after 90 days would significantly reduce storage costs. This shift to lower-cost tiers, while maintaining data accessibility for audit and analysis, can lead to substantial savings, especially for organizations with large volumes of log data.

Lifecycle policies of this kind lower storage spend directly, which in turn reduces the overall cloud bill.
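The log scenario above can be modeled as an age-based tiering rule plus a cost calculation. This is a sketch under stated assumptions: the 30- and 90-day cut-offs come from the scenario, while the per-GB prices are hypothetical placeholders and retrieval or transition fees are ignored.

```python
# Hypothetical monthly prices per GB; real prices vary by provider and region.
PRICE_PER_GB = {"hot": 0.023, "warm": 0.0125, "cold": 0.004}


def tier_for_age(age_days: int) -> str:
    """Lifecycle rule from the scenario: warm after 30 days, cold after 90."""
    if age_days < 30:
        return "hot"
    if age_days < 90:
        return "warm"
    return "cold"


def monthly_storage_cost(objects, prices=PRICE_PER_GB) -> float:
    """Sum monthly cost for (size_gb, age_days) pairs under the lifecycle rule."""
    return sum(size_gb * prices[tier_for_age(age)] for size_gb, age in objects)


if __name__ == "__main__":
    logs = [(100, 10), (100, 45), (100, 120)]  # (size in GB, age in days)
    with_policy = monthly_storage_cost(logs)
    all_hot = sum(size for size, _ in logs) * PRICE_PER_GB["hot"]
    print(f"with lifecycle policy: ${with_policy:.2f}, all hot: ${all_hot:.2f}")
```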

Forecasting Network Costs

Network costs in a cloud environment represent a significant portion of overall expenses. Accurately forecasting these costs is critical for budget planning and financial control. This section delves into the intricacies of predicting network expenditures, focusing on the influential factors, estimation methodologies, and optimization strategies.

Factors Influencing Network Costs

Network costs are multifaceted, driven by various components that must be understood for effective forecasting. Several factors play a significant role in determining the final network bill.

- Data Transfer: This is often the most significant cost driver. It encompasses the movement of data into (ingress) and out of (egress) the cloud environment. Ingress is usually free or inexpensive with the major providers, while egress is typically charged per gigabyte.

- Bandwidth: Bandwidth costs relate to the capacity of the network connection. Higher bandwidth demands typically translate to higher costs, especially for dedicated connections or high-performance networking services.

- Inter-Region Data Transfer: Transferring data between different geographic regions incurs costs. These costs are often substantial, especially for applications with global reach or disaster recovery requirements.

- Network Services: The utilization of managed network services such as load balancers, content delivery networks (CDNs), and firewalls also contributes to network costs. Pricing for these services is based on usage, features, and performance levels.

- IP Addresses: The allocation and usage of public IP addresses, particularly static IP addresses, can add to the overall network expenses.

- Network Monitoring and Logging: While essential for operational visibility, the storage and analysis of network logs and monitoring data can generate costs, particularly with high data volumes.

Estimating Data Transfer Costs Based on Anticipated Traffic

Data transfer costs scale with the volume of data transmitted, usually at per-gigabyte rates that vary by pricing tier. Accurate estimation necessitates a comprehensive understanding of expected traffic patterns and data volumes.

- Traffic Volume Analysis: Begin by estimating the total data volume expected to be transferred. This involves identifying the sources and destinations of data transfers.

- Traffic Pattern Modeling: Analyze historical traffic data or simulate expected traffic patterns to understand peak and off-peak usage. This helps in predicting the amount of data transferred during different time periods.

- Pricing Model Selection: Cloud providers offer tiered pricing models for data transfer. Select the appropriate pricing tier based on the anticipated data volume.

- Data Transfer Calculation: Apply the selected pricing model to the estimated data volume to calculate the total data transfer cost.

For instance, consider an e-commerce application hosted on a cloud platform. The application anticipates 10,000 users per day, each downloading an average of 2 MB of product images and other content. The monthly data transfer volume would be calculated as follows:

Daily data transfer = 10,000 users × 2 MB/user = 20,000 MB = 20 GB

Monthly data transfer = 20 GB/day × 30 days = 600 GB

Assuming a data transfer rate of $0.08 per GB, the monthly data transfer cost would be: 600 GB × $0.08/GB = $48. This calculation provides a basic estimate, and it must be refined by considering other traffic types, such as API calls and database queries, and the specific pricing tiers offered by the cloud provider.
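The same estimate can be written as a small function so that the inputs are easy to vary. This is a minimal sketch that mirrors the worked example, including its use of 1,000 MB per GB and a flat $0.08 per GB rate; the function names are illustrative.

```python
def monthly_transfer_gb(users_per_day: int, mb_per_user: float,
                        days: int = 30, mb_per_gb: int = 1000) -> float:
    """Estimated monthly data transfer volume in GB."""
    return users_per_day * mb_per_user * days / mb_per_gb


def flat_rate_cost(volume_gb: float, price_per_gb: float) -> float:
    """Transfer cost at a single flat rate (no pricing tiers)."""
    return volume_gb * price_per_gb


if __name__ == "__main__":
    volume = monthly_transfer_gb(users_per_day=10_000, mb_per_user=2)
    cost = flat_rate_cost(volume, price_per_gb=0.08)
    print(f"{volume:.0f} GB per month, about ${cost:.2f}")
```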

Methods for Optimizing Network Configurations to Reduce Costs

Optimizing network configurations is crucial for minimizing network costs. Several strategies can be implemented to reduce data transfer volume and overall network expenses.

- Content Delivery Networks (CDNs): Utilize CDNs to cache static content closer to users, reducing data transfer from the origin servers. CDNs distribute content across multiple geographic locations, improving performance and lowering data transfer costs.

- Data Compression: Employ data compression techniques to reduce the size of data before transmission. This is particularly effective for text-based files, images, and videos.

- Data Caching: Implement caching mechanisms at various levels, such as web server caches and database caches, to reduce the frequency of data retrieval.

- Traffic Shaping and Throttling: Implement traffic shaping and throttling to manage network traffic and prevent unexpected spikes that could lead to higher bandwidth charges.

- Region Selection: Choose cloud regions that are geographically closer to the user base to reduce latency and inter-region data transfer costs.

- Network Monitoring and Optimization: Regularly monitor network traffic patterns and identify areas for optimization. Utilize network monitoring tools to detect and address performance bottlenecks.

Formula for Calculating Data Transfer Costs

The following table demonstrates a formula for calculating data transfer costs, considering data volume and pricing tiers. This is a simplified example and should be adapted to the specific cloud provider’s pricing structure.

| Data Transfer Volume (GB) | Pricing Tier | Price per GB | Total Cost |
|---|---|---|---|
| 0-100 | Tier 1 | $0.10 | Volume in this tier × $0.10 |
| 101-1000 | Tier 2 | $0.08 | Volume in this tier × $0.08 |
| 1001+ | Tier 3 | $0.06 | Volume in this tier × $0.06 |

Example Calculation:

If the estimated data transfer volume is 1500 GB:

- First 100 GB: 100 GB × $0.10/GB = $10.00

- Next 900 GB (1000-100): 900 GB × $0.08/GB = $72.00

- Remaining 500 GB (1500-1000): 500 GB × $0.06/GB = $30.00

- Total Cost: $10.00 + $72.00 + $30.00 = $112.00
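The tiered calculation generalizes to any volume with a short loop over the tiers. The sketch below uses the simplified example tiers from the table, not a real provider's price list, and charges each tier's rate only on the volume that falls inside that tier.

```python
# (upper bound of tier in GB, price per GB); None marks the open-ended last tier.
EXAMPLE_TIERS = [(100, 0.10), (1000, 0.08), (None, 0.06)]


def tiered_transfer_cost(volume_gb: float, tiers=EXAMPLE_TIERS) -> float:
    """Total cost when each tier's rate applies only to the volume inside it."""
    if volume_gb < 0:
        raise ValueError("volume_gb must be non-negative")
    cost = 0.0
    lower = 0.0  # lower bound of the current tier
    for upper, price in tiers:
        if upper is None or volume_gb <= upper:
            # Volume ends inside this tier: charge the remainder and stop.
            cost += (volume_gb - lower) * price
            break
        cost += (upper - lower) * price  # tier is completely filled
        lower = upper
    return cost


if __name__ == "__main__":
    print(f"${tiered_transfer_cost(1500):.2f}")
```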

Building a Cost Forecast Model

Creating a robust cloud cost forecast model is crucial for effective financial planning and resource management in a cloud environment. It allows organizations to anticipate future expenses, make informed decisions about resource allocation, and proactively manage costs. This section details the essential components and methodologies involved in constructing a comprehensive cost forecast model.

Components of a Comprehensive Cloud Cost Forecast Model

A comprehensive cloud cost forecast model integrates various elements to provide an accurate prediction of future cloud spending. The core components include data ingestion, data processing, forecasting algorithms, and reporting mechanisms. The interaction of these components forms the foundation of the model.

- Data Ingestion: This involves collecting relevant data from various sources, including cloud provider billing APIs, resource utilization metrics, and historical cost data. The data should be structured and stored in a format suitable for analysis.

- Data Processing: The collected data undergoes processing steps such as cleaning, transformation, and aggregation. This ensures the data is accurate, consistent, and prepared for use in forecasting algorithms. This might involve removing outliers, converting data types, and aggregating data by time periods (e.g., daily, weekly, monthly).

- Forecasting Algorithms: Selecting appropriate forecasting algorithms is critical for model accuracy. These algorithms use historical data and resource projections to predict future costs. Common techniques include time series analysis (e.g., ARIMA, exponential smoothing), regression analysis, and machine learning models.

- Reporting and Visualization: The model should generate reports and visualizations that effectively communicate the forecast results. This enables stakeholders to understand the projected costs, identify potential cost drivers, and make informed decisions. Reports might include cost breakdowns by service, region, and time period, along with visualizations such as line charts and bar graphs.
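Of the techniques named above, simple exponential smoothing is the easiest to illustrate. The following is a minimal pure-Python sketch, assuming a list of monthly cost totals as input; it captures neither trend nor seasonality, so it is a baseline rather than a production forecaster. The sample figures are invented for illustration.

```python
def exponential_smoothing_forecast(history, alpha: float = 0.5) -> float:
    """One-step-ahead forecast using simple exponential smoothing.

    alpha near 1 follows recent months closely; alpha near 0 changes
    slowly and smooths out noise.
    """
    if not history:
        raise ValueError("history must contain at least one value")
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    level = history[0]
    for value in history[1:]:
        level = alpha * value + (1 - alpha) * level
    return level


if __name__ == "__main__":
    # Hypothetical monthly cloud bills in dollars.
    monthly_costs = [1000.0, 1100.0, 1050.0, 1200.0]
    print(f"${exponential_smoothing_forecast(monthly_costs, alpha=0.5):.2f}")
```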

Incorporating Data and Models into the Forecast

Integrating historical data, resource projections, and pricing models is essential for creating an accurate and reliable cost forecast. Each element contributes to the overall accuracy of the model.

- Historical Data Integration: Analyzing historical cloud spending patterns provides a baseline for future cost predictions. This involves importing and analyzing historical billing data, identifying trends, and understanding cost drivers. Historical data helps to establish seasonality and other recurring patterns. For instance, if a company observes a spike in compute costs during the end-of-year holiday season, the model can account for this pattern.

- Resource Projection Incorporation: Projecting future resource consumption is a critical step in cost forecasting. This involves estimating the demand for resources such as compute instances, storage, and network bandwidth. Resource projections can be based on business growth forecasts, application usage trends, and planned infrastructure changes. For example, if a company plans to launch a new application that requires significant compute resources, the model should incorporate the projected resource consumption of the application.

- Pricing Model Application: Accurate cost forecasting relies on the correct application of cloud provider pricing models. This involves selecting the appropriate pricing options (e.g., on-demand, reserved instances, spot instances) and applying them to the projected resource consumption. Different pricing models can significantly impact costs, so it’s important to model these options accurately. Consider a scenario where a company is using on-demand instances for its compute needs. The cost forecast model should be able to calculate the costs based on the on-demand pricing rates for the specific instance types used. If the company then switches to reserved instances, the model must accurately reflect the change in cost based on the reserved instance pricing.
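The on-demand versus reserved comparison described above can be sketched as follows. The hourly rates are hypothetical placeholders rather than real provider prices, and the 730-hour month is a common approximation (8,760 hours per year divided by 12); upfront reservation fees are left out for brevity.

```python
HOURS_PER_MONTH = 730  # approximation: 8,760 hours per year / 12


def monthly_compute_cost(instance_count, hourly_rate, hours=HOURS_PER_MONTH):
    """Cost of running `instance_count` instances for `hours` at `hourly_rate`."""
    if instance_count < 0 or hourly_rate < 0 or hours < 0:
        raise ValueError("inputs must be non-negative")
    return instance_count * hourly_rate * hours


# Hypothetical rates in USD per instance-hour (not real provider prices).
ON_DEMAND_RATE = 0.10
RESERVED_RATE = 0.06

instances = 10
on_demand = monthly_compute_cost(instances, ON_DEMAND_RATE)
reserved = monthly_compute_cost(instances, RESERVED_RATE)

print(f"on-demand: ${on_demand:,.2f}")
print(f"reserved:  ${reserved:,.2f}")
print(f"savings:   ${on_demand - reserved:,.2f}")
```

A fuller model would also account for partial reservation coverage, since fleets often run a reserved baseline with on-demand capacity on top.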

Updating and Refining the Cost Forecast

Regularly updating and refining the cost forecast model is crucial to ensure its accuracy and relevance over time. Cloud environments are dynamic, and factors like resource utilization, pricing changes, and business needs can shift.

- Regular Data Refresh: The model should be updated regularly with the latest data from cloud providers and resource utilization metrics. This ensures that the forecast reflects the most current trends and patterns. Implement automated data refresh processes to streamline this task.

- Algorithm Adjustment: Evaluate and adjust the forecasting algorithms periodically. This may involve fine-tuning parameters, selecting different algorithms, or incorporating new data features to improve accuracy. Consider conducting a backtesting analysis to evaluate the performance of the model.

- Feedback Loop Implementation: Establish a feedback loop to continuously improve the model. Compare the forecasted costs with the actual costs, analyze any discrepancies, and identify areas for improvement. Use these insights to refine the model and improve its predictive capabilities.

- Scenario Analysis: Conduct scenario analysis to assess the impact of different business decisions and external factors on cloud costs. This involves creating multiple forecast scenarios based on different assumptions, such as changes in resource utilization, pricing models, or business growth.
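One way to run the backtesting analysis mentioned above is a rolling-origin evaluation: forecast each month using only the months before it, then average the percentage errors. The sketch below assumes a naive "repeat last month" forecaster and uses mean absolute percentage error (MAPE) as the score; both choices, and all names, are illustrative assumptions.

```python
def naive_forecast(history):
    """Baseline forecaster: next month equals the most recent month."""
    return history[-1]


def backtest_mape(costs, forecaster, min_history=3):
    """Rolling-origin backtest returning the mean absolute percentage error.

    For each month after the first `min_history` months, the forecaster sees
    only earlier months and its prediction is compared with the actual cost.
    Assumes every actual cost is non-zero.
    """
    errors = []
    for i in range(min_history, len(costs)):
        predicted = forecaster(costs[:i])
        actual = costs[i]
        errors.append(abs(actual - predicted) / actual)
    if not errors:
        raise ValueError("not enough data points to backtest")
    return 100 * sum(errors) / len(errors)


# Hypothetical monthly costs: flat, then a jump in the last month.
costs = [100.0, 100.0, 100.0, 100.0, 200.0]
print(backtest_mape(costs, naive_forecast))  # prints 25.0
```

Any candidate forecaster with the same signature can be passed in, which makes it straightforward to compare algorithms on the same history before switching.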

Key Inputs for a Cloud Cost Forecast Model

A cloud cost forecast model relies on several key inputs to generate accurate predictions. These inputs provide the necessary data for the model to operate effectively.

- Historical Cost Data: This includes past billing data from the cloud provider, broken down by service, region, and time period. The data should cover a sufficient time range to capture trends and seasonality.

- Resource Utilization Metrics: Detailed information on resource usage, such as CPU utilization, memory usage, storage capacity, and network traffic. This data helps understand how resources are being consumed.

- Resource Projections: Estimates of future resource needs, based on factors like business growth, application usage, and planned infrastructure changes. These projections should be specific and detailed.

- Pricing Model Information: Details on the cloud provider’s pricing models, including on-demand rates, reserved instance pricing, spot instance pricing, and any discounts or special offers. This data ensures accurate cost calculations.

- Business Growth Forecasts: Predictions of future business activity, such as user growth, transaction volume, and revenue projections. This information helps estimate the demand for cloud resources.

- Application Architecture Information: Details on the application architecture, including the services used, the resource requirements of each service, and the expected workload patterns. This helps to understand the relationship between applications and cloud costs.

- Cost Allocation Tags: Metadata used to categorize and track cloud costs by department, project, application, or other relevant dimensions. This allows for detailed cost analysis and reporting.

- Contractual Agreements: Information on any negotiated discounts, committed spend agreements, or other contractual arrangements with the cloud provider. These agreements can significantly impact costs.
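A forecast model can bundle a subset of these inputs into one structure and check them before use. The sketch below is one possible shape: the field names are hypothetical, and the 12-month minimum is an assumption chosen so that the history covers one full annual cycle.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ForecastInputs:
    """Hypothetical container for a few of the inputs listed above."""
    monthly_costs: List[float]        # historical billing totals, oldest first
    growth_rate: float                # projected monthly demand growth, e.g. 0.05
    on_demand_hourly_rate: float      # pricing model information
    cost_allocation_tags: Dict[str, str] = field(default_factory=dict)


def validate_inputs(inputs, min_months=12):
    """Return a list of human-readable problems; an empty list means usable."""
    problems = []
    if len(inputs.monthly_costs) < min_months:
        problems.append(
            f"only {len(inputs.monthly_costs)} months of history; "
            f"at least {min_months} are needed to capture annual seasonality"
        )
    if any(cost < 0 for cost in inputs.monthly_costs):
        problems.append("historical costs contain negative values")
    if inputs.on_demand_hourly_rate <= 0:
        problems.append("on-demand hourly rate must be positive")
    if not inputs.cost_allocation_tags:
        problems.append("no cost allocation tags; costs cannot be attributed")
    return problems


inputs = ForecastInputs(
    monthly_costs=[900.0, 950.0, 1000.0],
    growth_rate=0.05,
    on_demand_hourly_rate=0.10,
)
for problem in validate_inputs(inputs):
    print("input problem:", problem)
```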

Reviewing and Refining the Forecast

Monitoring and refining a cloud cost forecast is a crucial, iterative process. It allows for the validation of initial assumptions, the identification of cost-saving opportunities, and the adaptation to evolving business needs and cloud resource consumption patterns. Regularly comparing actual costs with the forecast provides valuable insights into the accuracy of the prediction and highlights areas for improvement in future forecasting efforts.

Monitoring Actual Costs Against the Forecast

Continuous monitoring is essential for effective cost management. It allows for timely detection of deviations from the forecast, enabling proactive adjustments and preventing unexpected cost overruns.

- Establish a robust cost monitoring system: Implement automated systems to track cloud spending in real-time or near real-time. This typically involves integrating cloud provider cost management tools (e.g., AWS Cost Explorer, Azure Cost Management + Billing, Google Cloud Cost Management) with existing monitoring and alerting infrastructure. The monitoring system should provide granular data on resource consumption, allowing for detailed analysis of cost drivers.

- Set up regular reporting: Generate regular reports (e.g., daily, weekly, monthly) comparing actual costs against the forecast. These reports should clearly visualize variances and highlight significant deviations. Dashboards are a particularly effective way to present this information, providing a consolidated view of key metrics.

- Define key performance indicators (KPIs): Identify and track relevant KPIs, such as cost per unit of work, cost per customer, or cost per transaction. These KPIs help to understand the efficiency of cloud resource utilization and the effectiveness of cost optimization efforts. For example, tracking “cost per user” for a SaaS application allows for a direct comparison of spending against user growth.

- Configure alerts and notifications: Set up alerts to notify relevant stakeholders when actual costs exceed predefined thresholds or deviate significantly from the forecast. These alerts should be tailored to different levels of variance and can trigger automated actions, such as the investigation of specific resources or the adjustment of resource allocation.
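The KPI and alerting steps above can be sketched in a few lines. The 10% and 25% thresholds and the function names are assumptions for illustration; real thresholds should reflect how much variance the organization tolerates.

```python
def cost_per_user(total_cost, active_users):
    """Unit-cost KPI: spend divided by the number of active users."""
    if active_users <= 0:
        raise ValueError("active_users must be positive")
    return total_cost / active_users


def alert_level(actual, forecast, warn_pct=10.0, critical_pct=25.0):
    """Classify a cost overrun against the forecast.

    Returns "critical", "warning", or "ok" depending on how far the actual
    cost exceeds the forecast, in percent of the forecast.
    """
    if forecast <= 0:
        raise ValueError("forecast must be positive")
    overrun_pct = (actual - forecast) / forecast * 100
    if overrun_pct >= critical_pct:
        return "critical"
    if overrun_pct >= warn_pct:
        return "warning"
    return "ok"


# Hypothetical month: $5,000 spend, 2,000 active users, $4,000 forecast.
print(cost_per_user(5000.0, 2000))   # prints 2.5
print(alert_level(5000.0, 4000.0))   # prints critical
```

Only overruns raise an alert in this sketch. Spending well below forecast can also deserve attention, since it may point to an outage or a stalled rollout.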

Identifying Variances and Reasons

Identifying the causes of cost variances is critical for informed decision-making and forecast refinement. Analyzing the discrepancies between the forecast and actual spending reveals the underlying drivers of cost fluctuations.

- Analyze cost breakdown: Utilize the cloud provider’s cost management tools to analyze the cost breakdown by service, resource, and region. This granular analysis helps to pinpoint specific resources or services that are contributing to cost variances. For example, if compute costs are higher than forecast, the analysis can identify which virtual machines are consuming the most resources.

- Investigate resource utilization: Examine resource utilization metrics (e.g., CPU utilization, memory utilization, network traffic) to determine whether resources are over-provisioned and therefore underutilized. Underutilized resources represent wasted cloud spending. Over-provisioning can also show up in the cost data, as resources whose cost is high relative to the work they perform.

- Review pricing model changes: Stay informed about any changes to cloud provider pricing models. Pricing updates, such as changes to instance pricing or storage costs, can significantly impact actual costs. These changes can also affect your forecast.

- Evaluate infrastructure changes: Assess any changes to the cloud infrastructure, such as the deployment of new services, the scaling of existing resources, or the modification of resource configurations. These changes can have a direct impact on resource consumption and, consequently, on costs.

- Examine external factors: Consider external factors that may influence cloud costs, such as seasonal demand, changes in customer behavior, or market conditions. For example, a sudden surge in website traffic due to a marketing campaign can increase compute and network costs.
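A per-service breakdown like the one described above can be ranked so that the largest contributors to the variance surface first. The service names and amounts below are made up for illustration.

```python
def variance_by_service(forecast, actual):
    """Return (service, variance) pairs, largest absolute variance first.

    Variance is actual minus forecast. A service missing from one side is
    treated as 0 there, so unforecast services show up as pure overruns.
    """
    services = set(forecast) | set(actual)
    rows = [
        (service, actual.get(service, 0.0) - forecast.get(service, 0.0))
        for service in services
    ]
    # Sort by magnitude, then by name so ties come out in a stable order.
    return sorted(rows, key=lambda row: (-abs(row[1]), row[0]))


# Hypothetical monthly figures in USD.
forecast = {"compute": 500.0, "storage": 200.0, "network": 100.0}
actual = {"compute": 650.0, "storage": 190.0, "network": 100.0, "database": 40.0}

for service, delta in variance_by_service(forecast, actual):
    print(f"{service:<10} {delta:+.2f}")
```

Here the unforecast `database` service ranks second, which is the kind of infrastructure change the analysis is meant to expose.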

Adjusting the Forecast

Adjusting the forecast is an ongoing process that ensures its accuracy and relevance. Regular adjustments based on actual spending and changing requirements are essential for effective cost management.

- Update assumptions: Revise the underlying assumptions used in the forecast based on the analysis of variances. This may involve adjusting estimates for resource consumption, pricing models, or growth rates. For example, if actual storage costs are consistently higher than forecast, the forecast may need to be adjusted to reflect the increased storage requirements.

- Incorporate new data: Integrate the latest actual spending data into the forecast model. This allows the model to learn from past performance and improve its accuracy. Machine learning algorithms can be used to automate the process of updating the forecast based on historical data.

- Adjust for changing requirements: Modify the forecast to accommodate changing business needs and cloud requirements. This may involve adding new resources, scaling existing resources, or adapting to changes in application architecture. For example, if a new feature is launched that requires additional compute resources, the forecast should be updated to reflect the increased cost.

- Implement what-if scenarios: Utilize “what-if” scenarios to assess the potential impact of different cost optimization strategies or business decisions on the forecast. This allows for proactive planning and decision-making. For example, a “what-if” scenario could be used to evaluate the cost savings of migrating to a different instance type.

- Document changes: Maintain a detailed record of all forecast adjustments, including the reasons for the changes and the impact on the forecast. This documentation is essential for tracking the evolution of the forecast and for ensuring its transparency.
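A "what-if" comparison can be modeled as a set of per-service multipliers applied to a baseline forecast. The scenario names, multipliers, and baseline figures in this sketch are hypothetical.

```python
def apply_scenario(baseline, multipliers):
    """Scale each service's baseline cost by its scenario multiplier.

    Services without a multiplier are left unchanged (multiplier 1.0).
    """
    return {
        service: cost * multipliers.get(service, 1.0)
        for service, cost in baseline.items()
    }


# Hypothetical baseline monthly forecast in USD.
baseline = {"compute": 1000.0, "storage": 300.0, "network": 200.0}

# Hypothetical scenarios: each maps a service to a cost multiplier.
scenarios = {
    "baseline": {},
    "rightsize compute": {"compute": 0.8},
    "traffic surge": {"compute": 1.3, "network": 1.5},
}

for name, multipliers in scenarios.items():
    total = sum(apply_scenario(baseline, multipliers).values())
    print(f"{name:<18} ${total:,.2f}")
```

Keeping each scenario as plain data also makes it simple to record the assumptions behind every adjustment.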

Calculating the Variance

Calculating the variance between the forecast and actual costs provides a clear measure of the forecast’s accuracy. The variance analysis helps identify areas where the forecast needs improvement. The table below illustrates how to calculate the variance.

| Metric | Description | Formula/Calculation |
|---|---|---|
| Forecasted Cost | The predicted cost for a specific period (e.g., month, quarter). | Based on the cost forecast model. |
| Actual Cost | The actual spending incurred during the same period. | Retrieved from cloud provider cost management tools. |
| Variance | The difference between the actual cost and the forecasted cost. | Variance = Actual Cost - Forecasted Cost |
| Percentage Variance | The variance expressed as a percentage of the forecasted cost. This allows for easier comparison across different cost levels. | Percentage Variance = (Variance / Forecasted Cost) × 100 |
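As a worked example, the sketch below computes the variance as actual cost minus forecasted cost, and the percentage variance as that difference divided by the forecasted cost and multiplied by 100. The dollar amounts are hypothetical.

```python
def variance(actual_cost, forecasted_cost):
    """Variance = actual cost - forecasted cost (positive means overspend)."""
    return actual_cost - forecasted_cost


def percentage_variance(actual_cost, forecasted_cost):
    """Variance as a percentage of the forecasted cost."""
    if forecasted_cost == 0:
        raise ValueError("forecasted_cost must be non-zero")
    return variance(actual_cost, forecasted_cost) / forecasted_cost * 100


# Hypothetical month: $10,000 forecast, $11,500 actual.
forecasted = 10_000.0
actual = 11_500.0

print(f"variance: ${variance(actual, forecasted):,.2f}")
print(f"percentage variance: {percentage_variance(actual, forecasted):.1f}%")
```

A positive result means spending came in above the forecast, and a negative result means it came in below.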

Conclusion

In conclusion, mastering the art of cloud cost forecasting is not merely about predicting expenses; it’s about strategically aligning resource allocation with business objectives. By leveraging the insights and techniques presented in this guide, organizations can gain greater control over their cloud spending, enhance operational efficiency, and ultimately drive innovation. The ability to accurately forecast and manage cloud costs is a fundamental capability for any organization seeking to thrive in the cloud era, ensuring financial sustainability and enabling long-term success within the target cloud environment.

Popular Questions

What is the difference between reserved instances and on-demand instances?

On-demand instances offer the flexibility of pay-as-you-go pricing, while reserved instances provide significant discounts in exchange for a commitment to use a specific instance type for a set period (typically 1 or 3 years).

How often should I review my cloud cost forecast?

It’s recommended to review and refine your cloud cost forecast at least monthly, or even more frequently if your resource usage patterns are highly dynamic. Adjustments should be made based on actual spending, changing business requirements, and new pricing models.

What are some key metrics to monitor for cloud cost optimization?

Key metrics include CPU utilization, memory utilization, storage capacity, data transfer volume, and the cost per unit of work (e.g., cost per transaction, cost per user).

How can I estimate data transfer costs?

Data transfer costs depend on the volume of data moved, the direction of the transfer, and the regions involved. Most providers charge primarily for data leaving their network (egress) and for traffic between regions, while inbound transfer is often free. You can estimate these costs by analyzing your anticipated traffic patterns, the per-GB transfer rates charged by your provider, and the geographic regions involved.
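Providers commonly price outbound transfer in volume tiers, so an estimate can walk the tiers in order. The tier sizes and per-GB prices below are hypothetical placeholders; substitute the published rates for your provider and region.

```python
def egress_cost(gb_transferred, tiers):
    """Estimate outbound transfer cost under tiered per-GB pricing.

    tiers: ordered list of (tier_size_gb, price_per_gb); a size of None
    marks the final, unbounded tier.
    """
    if gb_transferred < 0:
        raise ValueError("gb_transferred must be non-negative")
    remaining = gb_transferred
    total = 0.0
    for size, price in tiers:
        if remaining <= 0:
            break
        billable = remaining if size is None else min(remaining, size)
        total += billable * price
        remaining -= billable
    return total


# Hypothetical tiers: first 100 GB free, next 10,000 GB at $0.09, rest at $0.07.
TIERS = [(100, 0.0), (10_000, 0.09), (None, 0.07)]

print(f"${egress_cost(5_000, TIERS):,.2f}")  # 100 GB free + 4,900 GB at $0.09
```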