The sunsetting of applications, while a necessary part of software lifecycle management, often leaves behind a wealth of valuable data. Effectively handling data archival for retired applications is crucial for maintaining compliance, supporting business intelligence, and preserving historical context. This process requires a systematic approach, from identifying the scope of data to be archived to ensuring its long-term accessibility and security.

This guide provides a structured framework for navigating the complexities of data archival. It explores key considerations such as data identification, archival strategies, extraction and transformation processes, storage formats, security protocols, and retrieval mechanisms. Furthermore, it addresses the critical aspects of data retention policies, cost management, testing, and long-term maintenance, equipping readers with the knowledge to implement robust archival solutions.

Understanding the Need for Data Archival for Retired Applications

Data archival for retired applications is a critical process that involves securely storing and preserving data from applications that are no longer actively used. This practice ensures that valuable historical information remains accessible for various purposes, ranging from compliance requirements to business intelligence. Effectively managing archived data is crucial for minimizing risks, optimizing resource allocation, and maintaining a comprehensive record of an organization’s operations.

Primary Reasons for Archiving Data from Decommissioned Applications

The decision to archive data from retired applications stems from a confluence of factors, each contributing to the overall necessity of this practice. These reasons are rooted in compliance, operational efficiency, and strategic business goals.

- Compliance Requirements: Many industries are subject to stringent regulations that mandate the retention of specific data for a predetermined period. These regulations are designed to ensure transparency, accountability, and the ability to reconstruct events for auditing or legal purposes. Archiving data enables organizations to meet these requirements and avoid potential penalties.

- Operational Efficiency: Archiving data helps to declutter active systems, improving their performance and reducing storage costs. By moving historical data to a separate archive, the operational burden on current applications is lessened, resulting in faster processing times and improved user experience. This is especially important for large-scale systems that generate significant amounts of data.

- Business Intelligence and Analytics: Historical data provides valuable insights into past trends, patterns, and behaviors. By analyzing archived data, organizations can gain a deeper understanding of their business operations, identify areas for improvement, and make more informed decisions. This data can be used to predict future outcomes, optimize strategies, and gain a competitive edge.

- Legal and Audit Purposes: Archived data often serves as critical evidence in legal proceedings, audits, and investigations. Preserving this data ensures that organizations can provide accurate and complete information when needed, protecting them from potential legal liabilities. This is particularly important in industries with high regulatory scrutiny.

- Data Recovery and Disaster Recovery: Archived data can serve as a valuable backup source in case of data loss or system failures in current operational systems. By having a separate archive, organizations can quickly restore lost data and minimize the impact of unforeseen events.

Compliance Regulations that Necessitate Data Archival

Numerous compliance regulations across various sectors explicitly mandate the retention and archival of specific types of data. Adhering to these regulations is not merely a best practice but a legal requirement. Failure to comply can result in significant financial penalties, legal repercussions, and damage to an organization’s reputation.

- General Data Protection Regulation (GDPR): This regulation, primarily impacting organizations that process data of individuals within the European Union (EU), specifies data retention periods and the right of individuals to access their data. Archiving is critical to meet these requirements. Failure to comply can result in fines of up to 4% of global annual turnover or €20 million, whichever is higher.

- Health Insurance Portability and Accountability Act (HIPAA): HIPAA, relevant to the healthcare industry in the United States, mandates the secure storage and retention of protected health information (PHI). Archiving data in compliance with HIPAA ensures patient privacy and prevents data breaches. Non-compliance can lead to substantial fines and civil and criminal penalties.

- Sarbanes-Oxley Act (SOX): SOX, applicable to publicly traded companies in the United States, requires the retention of financial records and other relevant data for auditing purposes. This is crucial for ensuring financial transparency and accountability. Non-compliance can lead to severe penalties, including fines and imprisonment for executives.

- Payment Card Industry Data Security Standard (PCI DSS): PCI DSS, applicable to organizations that process credit card information, requires the secure storage and retention of transaction data. This helps prevent fraud and protects cardholder data. Non-compliance can result in fines, revocation of the ability to process card payments, and damage to the organization’s reputation.

- Securities and Exchange Commission (SEC) Regulations: The SEC mandates the retention of various financial and transactional records for public companies. These records are essential for ensuring market integrity and investor protection. Violations can result in significant fines and legal action.

Business Benefits of Preserving Historical Application Data

Beyond compliance and operational efficiency, archiving historical application data provides substantial business benefits that contribute to strategic decision-making, risk mitigation, and competitive advantage. The value derived from archived data extends far beyond simply meeting regulatory requirements.

- Improved Decision-Making: Access to historical data enables organizations to make more informed decisions based on past performance and trends. Analyzing archived data can reveal patterns, identify opportunities, and help avoid costly mistakes. For example, by analyzing past sales data, a company can better forecast future demand and optimize inventory management.

- Enhanced Risk Management: Archived data can be used to identify and mitigate risks. By analyzing past incidents, organizations can learn from their mistakes and implement measures to prevent similar occurrences in the future. This includes risks related to fraud, data breaches, and compliance violations.

- Competitive Advantage: Historical data can provide insights into customer behavior, market trends, and competitor activities. This information can be used to develop new products and services, improve marketing strategies, and gain a competitive edge.

- Cost Savings: While archiving involves costs, it can also lead to significant cost savings in the long run. By freeing up storage space on active systems, organizations can reduce their IT infrastructure costs. Moreover, the ability to access historical data can help prevent costly legal battles and fines.

- Business Continuity and Disaster Recovery: Archived data serves as a critical resource for business continuity and disaster recovery planning. In the event of a system failure or data loss, archived data can be used to restore operations quickly and minimize downtime. This ensures business resilience and reduces the impact of unforeseen events.

- Knowledge Management: Archived data represents a valuable repository of institutional knowledge. By preserving historical information, organizations can ensure that knowledge is not lost when employees leave or systems are retired. This knowledge can be used to train new employees, improve processes, and maintain operational efficiency.

Data Identification and Scope Definition

Data archival for retired applications necessitates a meticulous approach to data identification and scope definition. This process ensures that the correct data is archived efficiently and effectively, balancing preservation needs with resource constraints and compliance requirements. The initial steps involve comprehensively understanding the data landscape within the retired applications and then defining the boundaries of the archival effort.

Types of Data Stored in Retired Applications

Retired applications often contain a diverse range of data types, each with its own characteristics and archival considerations. Understanding these data types is crucial for developing an effective archival strategy.

- Transactional Data: This encompasses the core business data, including orders, invoices, payments, and customer interactions. This data is often highly structured and represents the historical record of business operations. Examples include:

- Sales records with customer details, product information, and transaction dates.

- Financial transactions, including debits, credits, and account balances.

- Master Data: This type includes static information about entities, such as customers, products, suppliers, and employees. It serves as a reference for transactional data. Examples include:

- Customer profiles containing contact information, purchase history, and preferences.

- Product catalogs with descriptions, pricing, and inventory levels.

- Metadata: Metadata provides context and describes the data itself. It includes information about data structure, data quality, and data lineage. Examples include:

- Database schema definitions, detailing table structures, data types, and relationships.

- Data validation rules, defining constraints and acceptable values for data fields.

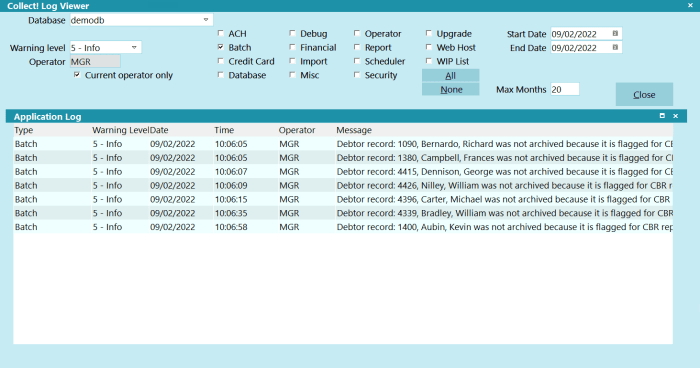

- Log Data: Log files capture system events, user activities, and application errors. They are invaluable for auditing, troubleshooting, and security analysis. Examples include:

- Audit logs, tracking user logins, data modifications, and system access.

- Error logs, documenting application errors, stack traces, and error timestamps.

- Document and Media Data: This includes unstructured data such as documents, images, audio files, and videos. The volume and format of this data can vary significantly. Examples include:

- Scanned documents, such as contracts, invoices, and correspondence.

- Images, such as product photos, marketing materials, and user-generated content.

- Configuration Data: This data defines the application’s settings and behavior. It includes parameters, environment variables, and application code settings. Examples include:

- Database connection strings, defining the location and access credentials for data storage.

- Application server configurations, specifying resource allocation and performance settings.

Process for Determining the Scope of Data to be Archived

Defining the scope of data archival involves a systematic process that considers various factors, including data criticality, legal and regulatory requirements, and business value. A well-defined scope ensures that the archival effort is focused and cost-effective.

The scope determination typically involves the following steps:

- Data Inventory and Analysis: This involves identifying all data sources within the retired application, documenting data types, and understanding data relationships. Data profiling techniques can be employed to analyze data characteristics, such as data volume, data quality, and data distribution.

- Business Requirements Assessment: This step involves interviewing stakeholders to understand their data needs, including legal, compliance, and business requirements. Determine how long the data needs to be retained and the frequency of access.

- Legal and Regulatory Compliance Review: Identify applicable legal and regulatory requirements, such as data retention mandates and privacy regulations. For example, the General Data Protection Regulation (GDPR) mandates specific data retention periods for personal data.

- Data Classification and Prioritization: Categorize data based on sensitivity, importance, and legal requirements. Prioritize data for archival based on these classifications.

- Archival Strategy Development: Define the archival strategy, including storage location, archival format, and access methods. Consider factors like data volume, access frequency, and data security requirements.

- Cost-Benefit Analysis: Evaluate the costs associated with archiving different data sets against the potential benefits, such as reduced legal risk and improved business intelligence.

- Documentation: Document the scope of the archival effort, including the data included, the archival strategy, and the rationale for the decisions made.

Data Categorization Based on Sensitivity and Importance

Categorizing data based on sensitivity and importance provides a framework for prioritizing archival efforts and applying appropriate security measures. This classification helps in determining the level of protection and the access controls required for archived data.

- Critical Data: This data is essential for legal, regulatory, or business continuity purposes. It requires the highest level of protection and long-term retention. Examples include:

- Financial records, required for tax audits and financial reporting.

- Personal data, subject to privacy regulations like GDPR.

- Intellectual property, such as patents and trade secrets.

- Important Data: This data supports business operations and decision-making. It requires moderate protection and a defined retention period. Examples include:

- Customer relationship management (CRM) data, providing insights into customer behavior.

- Sales and marketing data, supporting sales forecasting and marketing campaigns.

- Sensitive Data: This data contains confidential information that requires specific security measures to prevent unauthorized access or disclosure. Examples include:

- Protected health information (PHI), subject to HIPAA regulations.

- Employee records, containing sensitive personal information.

- Operational Data: This data supports day-to-day operations but may not be critical for long-term retention. It can be retained for a shorter period. Examples include:

- System logs, used for troubleshooting and performance monitoring.

- Temporary files, generated during application processing.

- Public Data: This data is publicly available and requires minimal protection. It may be retained for a short period or archived for historical purposes. Examples include:

- Marketing materials, such as brochures and presentations.

- Website content, providing information about products and services.

Choosing Archival Strategies

Selecting the appropriate archival strategy is critical for balancing data accessibility, cost, and security. The optimal approach depends heavily on the data’s nature, the required level of access, and the organization’s budgetary constraints. A poorly chosen strategy can lead to unnecessary expenses, compromised data integrity, or an inability to retrieve vital information when needed. This section explores several archival methods, comparing their strengths and weaknesses.

Comparing Archival Methods

Various methods exist for archiving data, each with distinct characteristics. The selection process must consider several factors, including the data’s sensitivity, the frequency of access, and the organization’s risk tolerance. The following table provides a comparative overview of several common archival strategies.

| Method | Cost | Accessibility | Security |

|---|---|---|---|

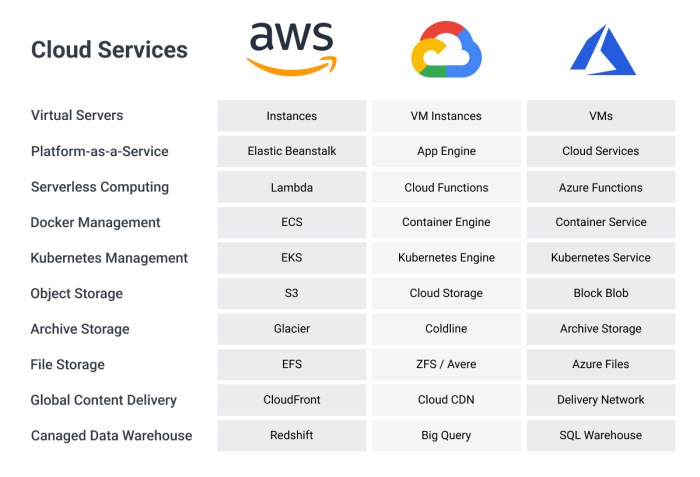

| Cold Storage (e.g., Tape) | Low upfront cost; potentially high long-term maintenance and retrieval costs. | Slow retrieval times; requires physical access to the storage media. Typically not suitable for frequent access. | High physical security required to protect the storage media. Data encryption can be implemented. Vulnerable to physical damage or loss. |

| Cloud Archiving (e.g., AWS Glacier, Azure Archive Storage, Google Cloud Storage Coldline/Archive) | Low storage cost; retrieval costs can vary based on access frequency and data volume. | Variable access times depending on the service tier (e.g., nearline, coldline, archive). Retrieval can take from minutes to hours. | Generally high security provided by the cloud provider, including data encryption at rest and in transit. Data is typically replicated across multiple geographic locations for redundancy. Security is reliant on the provider’s security practices. |

| On-Premise Storage (e.g., NAS, SAN) | High upfront cost; ongoing costs for hardware maintenance, power, and cooling. | High accessibility depending on the storage system’s configuration. Access times can be very fast. | Security depends on the organization’s security practices, including physical security, access controls, and data encryption. Data loss risk is higher than cloud archiving. |

| Hybrid Approach (Combination of the above) | Variable cost, depending on the combination of strategies used. | Variable accessibility, offering a balance between fast and slow access options. | Security varies depending on the components used. This allows tailoring the security to the needs of different data sets. |

Data Extraction and Transformation

The successful archival of data from retired applications hinges on a robust data extraction and transformation (ETL) process. This phase is critical for retrieving data from often complex and outdated systems, adapting it to a modern archival platform, and ensuring data integrity throughout the transition. A well-defined ETL strategy minimizes data loss, maintains data consistency, and facilitates future data retrieval and analysis.

Procedures for Extracting Data from Retired Applications

Data extraction involves retrieving data from the source systems. The specific procedures depend on the application’s architecture, data storage format, and available access methods.

- Database Extraction: If the retired application utilizes a relational database, extraction often involves using SQL queries or database-specific tools. The choice of tool depends on the database management system (DBMS) in use (e.g., Oracle, MySQL, PostgreSQL, SQL Server).

- File-Based Extraction: Applications that store data in files (e.g., CSV, TXT, XML) require parsing the files to extract relevant information. This process might involve scripting languages (e.g., Python, Perl) or specialized data integration tools.

- API Extraction: Some applications offer APIs for data access. Utilizing these APIs can be an effective method for extracting data, especially when dealing with web-based applications or services.

- Legacy System Extraction: Legacy systems might require more complex extraction techniques, such as screen scraping or direct access to data storage. These methods are often less reliable and more prone to errors.

- Data Profiling: Before extraction, data profiling is essential. This involves examining the data to understand its structure, quality, and potential issues. Data profiling helps identify data types, missing values, and potential inconsistencies.

- Metadata Extraction: Alongside the data itself, metadata (data about data) is crucial. This includes information about data definitions, data relationships, and data lineage. Metadata aids in understanding the data’s context and facilitates its proper interpretation in the archival system.

Designing a Data Transformation Process for Archival System Compatibility

Data transformation is the process of converting extracted data into a format compatible with the archival system. This involves cleaning, mapping, and restructuring the data to ensure its usability and consistency.

- Data Mapping: Data mapping defines the relationships between source and target data elements. It involves identifying corresponding fields and determining how data values should be transformed.

- Data Type Conversion: Data types may need to be converted to align with the archival system’s requirements. For example, dates might need to be standardized to a specific format (e.g., YYYY-MM-DD), and numeric values might need to be converted to a compatible data type.

- Data Cleansing: Data cleansing involves correcting or removing errors, inconsistencies, and redundancies in the data. This includes handling missing values, correcting invalid data, and removing duplicate records.

- Data Enrichment: Data enrichment involves adding extra information to the data, such as geocoding addresses or adding customer demographics. This can improve the value of the archived data.

- Data Aggregation and Summarization: Depending on the archival requirements, data might need to be aggregated or summarized. For example, daily transaction data might be aggregated into monthly summaries.

- Data Structuring: The data may need to be restructured to fit the target schema. This could involve changing table structures, creating new tables, or denormalizing data to improve query performance.

- Transformation Tools: Data transformation can be performed using various tools, including ETL tools (e.g., Informatica, Talend, Apache NiFi), scripting languages (e.g., Python, R), or custom-built programs.

Steps to Handle Data Cleansing and Validation During the Extraction Process

Data cleansing and validation are crucial steps to ensure data quality and reliability in the archival system. These steps should be integrated into the ETL process.

- Data Profiling for Quality Assessment: Thorough data profiling is the initial step. This helps in identifying data quality issues such as missing values, incorrect formats, and inconsistencies.

- Defining Validation Rules: Validation rules define the criteria that data must meet to be considered valid. These rules should be based on business requirements and data constraints.

- Handling Missing Values: Strategies for handling missing values include replacing them with default values, using statistical imputation techniques (e.g., mean, median), or removing records with missing values.

- Correcting Data Errors: Data errors can be corrected using various techniques, such as looking up values in reference tables, applying business rules, or manually correcting the data.

- Removing Duplicate Records: Duplicate records should be identified and removed to ensure data accuracy. This might involve using deduplication algorithms or matching rules.

- Data Validation Techniques: Data validation techniques include range checks (ensuring values fall within a specified range), format checks (verifying that data conforms to a specific format), and referential integrity checks (ensuring that foreign keys reference valid primary keys).

- Auditing and Logging: Implement auditing and logging to track data quality issues and the actions taken to resolve them. This helps in identifying data quality trends and improving the ETL process.

- Automated Validation: Implement automated validation checks within the ETL process to ensure data quality throughout the extraction and transformation stages.

Data Storage and Format Considerations

Choosing the right data storage formats and strategies is crucial for the long-term preservation and accessibility of archived data from retired applications. This section delves into the intricacies of selecting appropriate formats, ensuring data integrity, and leveraging metadata for effective retrieval. The goal is to provide a comprehensive guide to making informed decisions that support data preservation and future usability.

Choosing Appropriate Data Storage Formats

The selection of data storage formats directly impacts data accessibility, efficiency, and longevity. Different formats are suited to various data types and use cases.

- CSV (Comma Separated Values): CSV files are a simple, widely compatible format, ideal for tabular data. They are easily readable by humans and can be processed by a wide range of software tools. However, CSV files lack support for complex data structures and metadata. For example, consider a retired application that tracked customer orders. The core order details (order ID, date, customer ID, total amount) could be efficiently stored in a CSV file.

This format’s simplicity makes it easy to import the data into reporting tools or other systems for analysis.

- JSON (JavaScript Object Notation): JSON is a lightweight, human-readable format suitable for storing structured data, including nested objects and arrays. It is commonly used for data exchange between applications and is easily parsed by most programming languages. JSON supports metadata and can represent more complex data relationships than CSV. If the customer order data included items, discounts, and shipping information, JSON would be a better choice, allowing for the representation of nested data structures related to each order.

- XML (Extensible Markup Language): XML provides a flexible, extensible format for storing structured data. It supports complex data structures and metadata through the use of tags. XML is particularly useful when data requires extensive formatting and validation. However, XML files can be larger and more complex than JSON files, potentially impacting storage and processing efficiency.

- Proprietary Formats: Data may have originally been stored in a proprietary format specific to the retired application. These formats can present challenges due to their dependence on specific software or hardware. In such cases, the archival strategy must include converting the data to a more open and accessible format. This might involve reverse engineering the format or utilizing available documentation to create a conversion tool.

Considerations for Long-Term Data Integrity and Accessibility

Ensuring the long-term integrity and accessibility of archived data requires careful planning and implementation of specific strategies. This includes selecting storage media, implementing data validation techniques, and planning for technology obsolescence.

- Storage Media Selection: The choice of storage media directly affects data longevity. Optical media (CDs, DVDs) have a limited lifespan and are susceptible to physical damage. Magnetic media (tapes, hard drives) are also prone to degradation over time and require regular maintenance. Solid-state drives (SSDs) offer improved durability and access speed, but they also have a finite lifespan. Cloud storage provides scalability, redundancy, and disaster recovery capabilities, but it relies on the availability and stability of the cloud provider.

For instance, a data archive might use a combination of cloud storage for primary access and offline tape storage for long-term preservation.

- Data Validation: Implementing data validation techniques is crucial for ensuring data integrity. This involves verifying the accuracy and consistency of the data throughout the archival process. Techniques include checksums, data type validation, and referential integrity checks. For example, after extracting and transforming data, checksums can be calculated for each file and verified periodically to detect any corruption during storage or retrieval.

- Data Redundancy: Employing data redundancy mechanisms is essential to protect against data loss due to hardware failures or other unforeseen events. This can be achieved through techniques such as RAID (Redundant Array of Independent Disks) configurations, data replication, and geographically dispersed storage. A RAID 5 configuration, for example, distributes data and parity information across multiple disks, allowing the system to continue operating even if one disk fails.

- Technology Obsolescence Planning: Data formats, storage media, and software tools become obsolete over time. Planning for technology obsolescence involves regularly migrating data to newer formats and storage media to maintain accessibility. This process might involve converting data from older formats (e.g., older versions of Microsoft Word) to more current formats (e.g., DOCX) or migrating data from magnetic tapes to cloud storage.

- Regular Audits: Conducting regular audits of the archived data is crucial to ensure its ongoing integrity and accessibility. These audits should include data validation checks, media integrity checks, and accessibility tests. For example, a quarterly audit might involve verifying checksums, testing data retrieval from various storage locations, and assessing the usability of the data with current software tools.

Demonstrating the Importance of Metadata and Its Role in Data Retrieval

Metadata provides critical context for archived data, enabling effective retrieval and understanding of the data’s meaning and purpose. The inclusion of comprehensive and well-structured metadata is a key component of any successful data archival strategy.

- Types of Metadata: Metadata can be categorized into descriptive, structural, administrative, and preservation metadata. Descriptive metadata describes the content of the data (e.g., title, author, date created). Structural metadata describes the organization of the data (e.g., file structure, relationships between data elements). Administrative metadata provides information about the data’s management (e.g., access rights, storage location). Preservation metadata documents the history of the data and any transformations applied to it (e.g., format conversions, data migrations).

- Metadata Standards: Using standardized metadata schemas ensures consistency and interoperability. Examples include Dublin Core for general descriptive metadata, MODS (Metadata Object Description Schema) for library and archival resources, and PREMIS (Preservation Metadata: Implementation Strategies) for preservation metadata. Employing these standards enables the archived data to be easily integrated with other systems and allows for more efficient data discovery and retrieval.

- Metadata Implementation: Metadata can be stored within the data files themselves (e.g., in JSON or XML files) or in a separate metadata repository. A metadata repository provides a centralized location for storing and managing metadata. This repository should include features for searching, indexing, and version control. The design of the metadata repository should consider the specific needs of the archived data and the expected users.

For example, a museum archiving digital photographs might store descriptive metadata (artist, date taken, subject) in a separate database linked to the image files.

- Metadata for Data Retrieval: Effective metadata is essential for data retrieval. Without adequate metadata, it is difficult or impossible to find and understand archived data. Metadata allows users to search and filter data based on various criteria, such as date, author, subject, and file type. For instance, consider a company archiving financial records. Comprehensive metadata (e.g., account number, transaction date, amount, description) allows auditors to quickly locate specific transactions or identify trends.

- Example: Imagine a company archiving customer support tickets. The metadata could include ticket ID, customer ID, date opened, date closed, subject, description, assigned agent, and resolution. With this metadata, a user could easily search for all tickets related to a specific product, opened within a certain time frame, and resolved by a particular agent. This facilitates efficient retrieval of the information needed for analysis or reporting.

Security and Access Control

Data archival for retired applications necessitates robust security and access control measures to safeguard sensitive information and ensure compliance with regulatory requirements. Implementing these measures is crucial for maintaining data integrity, confidentiality, and availability throughout the archival lifecycle. This section Artikels the security considerations and access control strategies essential for protecting archived data.

Security Measures for Archived Data Protection

Protecting archived data involves implementing a multi-layered security approach to mitigate risks. This approach encompasses encryption, access controls, and regular security audits.

- Encryption: Encryption is a fundamental security measure to protect data at rest and in transit. Archived data should be encrypted using strong encryption algorithms such as Advanced Encryption Standard (AES) with a key length of 256 bits. Encryption protects data from unauthorized access, even if the storage media is compromised. For example, a financial institution archiving customer transaction data would encrypt the data before storing it in an archive repository.

This ensures that even if the storage system is breached, the data remains unreadable without the decryption key.

- Access Controls: Implementing strict access controls is critical. Only authorized personnel should have access to archived data. Role-Based Access Control (RBAC) should be used to define and manage access permissions based on job roles and responsibilities. For example, auditors may need read-only access to archived financial records, while data administrators may require broader access for data management tasks.

- Regular Security Audits and Monitoring: Periodic security audits and continuous monitoring are essential for detecting and responding to security threats. Security audits should assess the effectiveness of security controls, identify vulnerabilities, and ensure compliance with security policies and regulations. Monitoring systems should track access attempts, detect unusual activity, and generate alerts for security incidents. For instance, a healthcare organization should conduct regular audits of its archived patient data to ensure compliance with HIPAA regulations, and monitor access logs to detect any unauthorized access attempts.

- Data Integrity Checks: Implementing data integrity checks, such as checksums or cryptographic hashes, ensures that archived data remains unaltered over time. These checks verify the data’s authenticity and detect any accidental or malicious modifications. For example, a legal firm archiving client documents might use SHA-256 hashing to verify the integrity of each document.

- Physical Security: Physical security measures, such as secure data centers with restricted access and environmental controls, are vital to protect the physical storage infrastructure. This includes measures like biometric authentication, surveillance, and intrusion detection systems. Consider the example of a government agency archiving classified information, where the physical security of the archive location is of paramount importance.

- Data Masking and Redaction: For data containing sensitive personal information (PII), data masking or redaction techniques can be used to anonymize or pseudonymize the data before archiving. This helps reduce the risk of data breaches and complies with privacy regulations such as GDPR. For instance, a marketing company archiving customer data might mask the customer’s full social security number while retaining the last four digits for identification purposes.

Implementing Access Controls

Access control implementation involves defining roles, assigning permissions, and enforcing policies to regulate who can access archived data and what actions they can perform. The goal is to minimize the attack surface and prevent unauthorized data access.

- Role-Based Access Control (RBAC): RBAC is a widely used approach for managing access controls. It assigns permissions to roles, and users are assigned to roles based on their job functions. This simplifies access management and ensures consistent application of security policies.

- Defining Roles: Roles should be defined based on the principle of least privilege, granting users only the minimum necessary access to perform their tasks. Examples of roles might include “Data Administrator,” “Auditor,” “Legal Counsel,” and “End User.” Each role should have a specific set of permissions associated with it.

- Assigning Permissions: Permissions define the actions that a user can perform on archived data. Examples include “Read,” “Write,” “Delete,” “Export,” and “Modify Metadata.” Permissions should be carefully assigned to each role based on the requirements of that role. For instance, the “Auditor” role might only have “Read” access to archived data, while the “Data Administrator” role might have full access.

- Authentication and Authorization: Strong authentication mechanisms, such as multi-factor authentication (MFA), should be used to verify user identities. Authorization mechanisms then determine whether a user is permitted to access a specific data object or perform a particular action.

- Regular Reviews and Audits: Access controls should be regularly reviewed and audited to ensure their effectiveness and compliance with security policies. Access rights should be reviewed periodically, especially when employees change roles or leave the organization.

Security Architecture Diagram

The security architecture for archived data should incorporate all the security measures discussed above, integrated within a cohesive framework. This diagram provides a visual representation of the key components and their interactions.

A diagram depicting the security architecture for archived data. The diagram is structured as follows:

At the center is the “Archived Data Repository,” which is the primary storage location.

It is enclosed within a security perimeter, represented by a dashed line, indicating the overall security boundary.

Around the repository are several interconnected components, each contributing to the overall security:

1. User Access Layer

Includes “Users” (e.g., Auditors, Data Administrators) who interact with the archived data.

Uses “Authentication & Authorization” (e.g., MFA, RBAC) to control user access.

2. Data Security Layer

“Encryption” is applied to the data at rest and in transit.

“Data Integrity Checks” are performed to ensure data authenticity.

“Data Masking/Redaction” is used to protect sensitive data.

3. Monitoring and Audit Layer

“Security Information and Event Management (SIEM)” system monitors logs and detects anomalies.

“Audit Logs” track all access and changes to the data.

“Regular Security Audits” assess the effectiveness of security controls.

4. Infrastructure Security Layer

“Secure Network” protects the network infrastructure.

“Physical Security” protects the physical storage location (e.g., data center).

“Data Backup and Disaster Recovery” ensure data availability.

All the layers interact to provide a comprehensive security framework for archived data. The Users access the data through the user access layer, the data is secured with the data security layer, the system is monitored and audited with the monitoring and audit layer, and the infrastructure is secured with the infrastructure security layer.

Data Retrieval and Search Capabilities

Effective data retrieval is crucial for realizing the value of archived data from retired applications. The ability to quickly and accurately access historical information is essential for compliance, legal discovery, business intelligence, and various other analytical purposes. The design of retrieval mechanisms must consider factors such as data volume, access frequency, and the types of queries anticipated.

Features Needed for Effective Data Retrieval

To ensure efficient and reliable access to archived data, several key features are necessary. These features should be implemented with scalability and performance in mind, allowing for growth in data volume and user access.

- Indexing: Indexing involves creating data structures that facilitate rapid data lookup. Indexing methods can include B-trees, hash indexes, or full-text indexes, depending on the nature of the data and the expected query patterns. For example, an index on a customer ID field allows for quick retrieval of all records associated with a specific customer.

- Metadata Management: Comprehensive metadata management is vital for understanding and navigating archived data. Metadata should include information about data structure, data lineage, and data context. It facilitates data discovery and ensures that users can accurately interpret the archived information.

- User Interface (UI) and API: A user-friendly interface is essential for allowing non-technical users to access archived data. APIs enable programmatic access to the data, which is crucial for integration with other systems and automated reporting. The UI should provide search capabilities, filtering options, and data visualization tools.

- Data Integrity Checks: Mechanisms to verify data integrity are essential to ensure the reliability of the retrieved information. This includes checksums, data validation rules, and regular audits. These checks help to detect and correct data corruption, preserving the accuracy of the archive.

- Access Control: Robust access control mechanisms are needed to restrict data access based on user roles and permissions. This ensures that only authorized users can view sensitive data, complying with data privacy regulations. Access control should be granular, allowing control over specific data elements or data sets.

Methods for Enabling Efficient Search and Filtering of Archived Data

Efficient search and filtering are critical for quickly locating relevant information within the archived data. Various techniques can be employed to optimize search performance and provide users with flexible data exploration capabilities.

- Search: Implementing search functionality allows users to search for specific terms or phrases within the archived data. This can be achieved through full-text indexing techniques, which analyze the text content and create indexes that enable rapid searches.

- Advanced Search Operators: Support for advanced search operators, such as AND, OR, NOT, and proximity searches, enables users to refine their search queries and improve search accuracy.

- Filtering Options: Providing filtering options based on data attributes allows users to narrow down the results. This can include filtering by date ranges, data types, or specific values. For example, users can filter archived sales data to show transactions within a specific quarter.

- Faceting: Faceting involves categorizing search results based on different attributes. This enables users to quickly explore data by drilling down into specific categories. For example, in an archive of customer support tickets, faceting can be used to group tickets by product, issue type, or resolution status.

- Data Visualization: Visualizing data through charts, graphs, and dashboards enhances the user’s ability to understand and interpret the archived information. Visualizations can reveal trends, patterns, and anomalies that might not be apparent through raw data alone.

Query Optimization Techniques for Large Datasets

Optimizing queries is crucial for ensuring acceptable performance when retrieving data from large archives. Several techniques can be employed to improve query execution speed and reduce resource consumption.

- Query Planning: Database systems use query planners to determine the most efficient way to execute a query. Query planners analyze the query and available indexes to create an execution plan. It is essential to analyze and optimize the query plan to ensure optimal performance.

- Index Usage: Properly designed indexes are critical for efficient data retrieval. The query optimizer utilizes indexes to quickly locate relevant data. Regularly reviewing and maintaining indexes, including adding or dropping indexes based on query patterns, is necessary.

- Partitioning: Partitioning involves dividing a large dataset into smaller, more manageable segments. This can improve query performance by reducing the amount of data that needs to be scanned. For example, data can be partitioned by date, region, or other relevant attributes.

- Data Compression: Compressing archived data reduces storage space and can also improve query performance by reducing the amount of data that needs to be read from disk. Compression algorithms can be applied to both structured and unstructured data.

- Caching: Implementing caching mechanisms can significantly improve query performance by storing frequently accessed data in memory. Caching can reduce the need to access the underlying storage system, resulting in faster retrieval times.

- Query Profiling: Query profiling involves analyzing the execution of a query to identify performance bottlenecks. Profiling tools provide insights into the time spent on different operations, such as index lookups, table scans, and data transfers.

Data Retention Policies and Compliance

Data retention policies are a critical component of data archival for retired applications. These policies dictate how long data must be kept, based on legal, regulatory, and business requirements. Failure to adhere to these policies can result in significant legal penalties, reputational damage, and loss of business opportunities. Effective data retention ensures compliance while optimizing storage costs and facilitating efficient data retrieval when needed.

Identifying Legal and Regulatory Requirements for Data Retention

Legal and regulatory requirements for data retention vary significantly depending on the industry, geographic location, and type of data. A thorough understanding of these requirements is paramount for designing and implementing a compliant data archival strategy.

- General Data Protection Regulation (GDPR): The GDPR, applicable across the European Union, sets stringent requirements for the retention of personal data. Organizations must justify the retention period based on a legitimate purpose and adhere to data minimization principles, retaining data only for as long as necessary. Failure to comply can result in substantial fines.

- California Consumer Privacy Act (CCPA): The CCPA, and subsequent California Privacy Rights Act (CPRA), grants California consumers rights regarding their personal data, including the right to know, the right to delete, and the right to opt-out. Data retention practices must align with these consumer rights.

- Health Insurance Portability and Accountability Act (HIPAA): HIPAA regulations in the United States govern the handling of protected health information (PHI). Data retention requirements are specific, often requiring retention for a minimum of six years from the date of creation or the last effective date.

- Sarbanes-Oxley Act (SOX): SOX mandates the retention of financial records and audit trails for publicly traded companies in the United States. This includes data related to financial transactions, internal controls, and audit reports, typically requiring retention for a minimum of seven years.

- Industry-Specific Regulations: Various industries have specific regulations. For example, financial institutions are subject to regulations from agencies like the Securities and Exchange Commission (SEC) and the Financial Industry Regulatory Authority (FINRA), which mandate the retention of specific types of data, such as trade confirmations and communications.

- Country-Specific Laws: Data retention requirements vary across countries. For example, China’s Cybersecurity Law imposes data localization and retention requirements, which can impact how international organizations archive data.

Creating a Sample Data Retention Policy Template

A well-defined data retention policy serves as a roadmap for data archival practices. It should be comprehensive, easy to understand, and tailored to the specific needs of the organization. The following template provides a framework.

Data Retention Policy Template

1. Purpose: To define the guidelines for retaining and disposing of data related to retired applications in compliance with legal, regulatory, and business requirements.

2. Scope: This policy applies to all data associated with retired applications, including but not limited to:

- Database records

- Log files

- Audit trails

- Documents and reports

- Metadata

3. Data Retention Schedule: The following table Artikels the retention periods for different types of data:

| Data Type | Retention Period | Justification | Responsible Department |

|---|---|---|---|

| Financial Records | 7 years | SOX compliance | Finance |

| Customer Personal Data | 5 years after last interaction | GDPR, CCPA compliance | Marketing, Customer Service |

| Health Information | 6 years | HIPAA compliance | Healthcare Services |

| Audit Logs | 3 years | Security incident response | IT Security |

4. Data Storage and Access Control:

- Data will be stored in a secure archival system.

- Access to archived data will be restricted based on the principle of least privilege.

- Regular audits will be conducted to ensure access controls are effective.

5. Data Disposal:

- Data will be securely disposed of after the retention period has expired.

- Disposal methods will comply with industry best practices and data security standards.

- Documentation of data disposal will be maintained.

6. Policy Review and Updates:

- This policy will be reviewed and updated at least annually or as required by changes in legal or regulatory requirements.

- Changes to the policy will be communicated to all relevant stakeholders.

7. Enforcement: Non-compliance with this policy may result in disciplinary action, legal penalties, and reputational damage.

8. Contact Information: For questions or clarifications regarding this policy, please contact [Contact Person/Department].

Discussing the Importance of Regularly Reviewing and Updating Retention Policies

Data retention policies are not static documents; they must be dynamic and responsive to evolving legal landscapes, business needs, and technological advancements. Regular review and updates are essential to ensure continued compliance and effectiveness.

- Legal and Regulatory Changes: Laws and regulations governing data retention are subject to change. For example, the GDPR has been refined through court rulings and guidance from regulatory bodies. Regularly reviewing the policy ensures it reflects the latest legal requirements.

- Business Needs: Changes in business operations, mergers and acquisitions, or the introduction of new products and services can impact data retention requirements. For example, the acquisition of a new company might necessitate the inclusion of its data within the existing retention policy, and potentially the extension of retention periods.

- Technological Advancements: New technologies and data storage methods can impact how data is archived and accessed. For instance, the adoption of cloud-based archival systems may require updates to the policy to reflect the security and access control mechanisms of the new platform.

- Data Security Incidents: Following a data breach or security incident, the retention policy may need to be updated to address the vulnerabilities that led to the incident. This might involve extending the retention period for audit logs or implementing more stringent access controls.

- Industry Best Practices: Regularly reviewing the policy against industry best practices ensures that the organization is adopting the most effective and efficient data retention strategies.

- Audit Findings: Internal or external audits may identify areas for improvement in the data retention policy. The findings of these audits should be used to inform updates to the policy.

Cost Management and Budgeting

Data archival, while essential for retired applications, introduces significant financial considerations. Effective cost management and budgeting are crucial to ensure that archival projects are sustainable and deliver value without excessive expenditure. This section delves into the various factors influencing archival costs, provides guidelines for budgeting, and offers strategies for optimizing costs while maintaining data integrity.

Factors Influencing Archival Costs

Several interconnected factors contribute to the overall cost of data archival. Understanding these drivers is vital for accurate budgeting and informed decision-making.

- Data Volume: The sheer volume of data to be archived is a primary cost determinant. Larger datasets require more storage space, increased processing power for extraction and transformation, and potentially higher bandwidth for data transfer.

- Storage Costs: The choice of storage medium significantly impacts costs. Options range from on-premise storage solutions to cloud-based services. Each option has associated costs, including hardware, software licenses, maintenance, and energy consumption (on-premise), or subscription fees, data transfer charges, and egress fees (cloud).

Example: Consider a company archiving 10 TB of data.

On-premise storage, including hardware, software, and maintenance, might cost $5,000 per year. Cloud storage, with a similar storage capacity, could cost $100 per month plus data transfer and egress fees, which could vary significantly based on access frequency.

- Data Transformation Complexity: The complexity of data transformation processes, which might include data cleansing, deduplication, and format conversion, affects costs. More complex transformations require specialized skills, potentially leading to higher labor costs and increased processing time.

- Data Access Frequency: The anticipated frequency of data retrieval influences storage choices and associated costs. Frequently accessed data might necessitate faster, more expensive storage solutions, whereas infrequently accessed data can be stored on more cost-effective, slower storage tiers.

- Security and Compliance Requirements: Stringent security and compliance mandates, such as those related to GDPR or HIPAA, increase costs. These requirements necessitate robust security measures, data encryption, access controls, and audit trails, adding to the complexity and expense of the archival process.

- Personnel Costs: Skilled personnel are needed for data extraction, transformation, storage, and ongoing maintenance. These costs include salaries, benefits, and training expenses for data engineers, archivists, and security specialists.

- Data Retrieval and Search Capabilities: The sophistication of data retrieval and search mechanisms affects costs. Implementing advanced search features and indexing capabilities requires specialized software and infrastructure, leading to increased expenses.

- Project Duration: The duration of the archival project impacts costs. Longer projects typically involve higher labor costs, increased resource utilization, and potentially higher storage expenses.

Guidelines for Budgeting for Data Archival Projects

Developing a comprehensive budget is essential for managing the financial aspects of data archival. A well-defined budget helps in resource allocation, project tracking, and informed decision-making.

- Define Project Scope and Objectives: Clearly articulate the scope of the archival project, including the data sources, volume, retention period, and access requirements. This provides a foundation for estimating costs accurately.

- Assess Data Volume and Growth: Accurately estimate the current data volume and project future data growth. This is crucial for determining storage capacity requirements and associated costs.

- Evaluate Storage Options and Costs: Research and compare various storage options, including on-premise, cloud-based, and hybrid solutions. Obtain detailed cost estimates from vendors, including storage fees, data transfer charges, and any associated software or hardware costs.

- Estimate Transformation and Processing Costs: Calculate the costs associated with data extraction, transformation, and loading (ETL) processes. Consider labor costs, software licensing fees, and processing infrastructure requirements.

- Factor in Security and Compliance Costs: Include costs related to implementing security measures, access controls, data encryption, and audit trails to meet compliance requirements.

- Allocate for Personnel Costs: Budget for the salaries, benefits, and training of personnel involved in the archival project.

- Consider Data Retrieval and Search Costs: Factor in the costs of implementing data retrieval and search capabilities, including software licensing, infrastructure requirements, and ongoing maintenance.

- Include Contingency Funds: Allocate a contingency fund to address unforeseen costs or project delays. A typical contingency fund ranges from 10% to 20% of the total project budget.

- Develop a Detailed Budget Spreadsheet: Create a detailed budget spreadsheet that itemizes all anticipated costs, including storage, processing, personnel, security, and contingency funds. Regularly update the budget to track actual expenses against planned costs.

- Obtain Multiple Quotes: Obtain quotes from multiple vendors for storage, software, and services to compare pricing and identify cost-effective solutions.

Tips for Optimizing Archival Costs

Optimizing archival costs without compromising data integrity is achievable through strategic planning and the implementation of cost-saving measures.

- Data Tiering: Implement data tiering strategies to store data based on its access frequency. Frequently accessed data can be stored on faster, more expensive storage tiers, while infrequently accessed data can be moved to slower, more cost-effective tiers.

- Data Compression: Utilize data compression techniques to reduce storage space requirements and associated costs. Compression can be applied during data extraction or transformation processes.

- Data Deduplication: Implement data deduplication to eliminate redundant data copies, reducing storage needs and costs.

- Cloud Storage Optimization: When using cloud storage, optimize storage tiering, data transfer, and egress fees. Consider using object storage tiers designed for archival purposes, which typically offer lower storage costs.

- Automation: Automate data archival processes to reduce manual effort and associated labor costs. Automation can be applied to data extraction, transformation, storage, and retrieval tasks.

- Vendor Negotiation: Negotiate with storage and service providers to obtain favorable pricing and contract terms.

- Regular Review and Optimization: Periodically review the archival process and storage costs to identify opportunities for optimization. Evaluate storage utilization, data access patterns, and vendor pricing to identify cost-saving opportunities.

- Data Retention Optimization: Review data retention policies to ensure that data is retained only for the required duration. Reducing the retention period for certain data types can lower storage costs.

- Utilize Open-Source Tools: Leverage open-source tools for data extraction, transformation, and storage to reduce software licensing costs.

- Implement a Data Governance Framework: Establish a data governance framework to manage data quality, metadata, and access controls effectively. This can improve data management efficiency and reduce costs associated with data retrieval and maintenance.

Testing and Validation

Testing and validation are critical phases in the data archival process for retired applications, ensuring data integrity, accessibility, and compliance. Rigorous testing minimizes the risk of data loss or corruption and confirms that archived data can be successfully retrieved and utilized when needed. Data validation, in particular, is crucial for verifying the accuracy and completeness of the archived data, especially considering potential transformations during extraction and storage.

Steps in Testing the Archival Process

The testing phase involves a series of systematic steps to evaluate the effectiveness and reliability of the data archival process. These steps are designed to identify and address potential issues before the full-scale archival of data occurs.

- Planning and Scope Definition: Define the scope of the testing, including which datasets, applications, and archival processes will be tested. Establish clear test objectives and success criteria, such as the percentage of data records that must be successfully archived and the acceptable error rates.

- Test Data Selection: Choose representative test datasets that cover various data types, formats, and sizes. These datasets should include both common and edge-case scenarios to ensure comprehensive testing. Consider creating synthetic data to simulate specific conditions or data volumes.

- Environment Setup: Set up a testing environment that mirrors the production environment as closely as possible. This includes replicating the hardware, software, and network configurations used in production.

- Data Extraction and Transformation Testing: Test the data extraction and transformation processes to ensure that data is accurately extracted from the source system and transformed into the target format. This includes verifying data mapping, data cleansing, and data type conversions. For instance, verify that dates are correctly converted from one format to another.

- Archival Storage Testing: Test the data storage process to confirm that data is stored securely and efficiently. Verify that data can be written to the archival storage and that the storage system can handle the expected data volume and access patterns.

- Data Retrieval Testing: Test the data retrieval process to ensure that archived data can be successfully retrieved and accessed. Verify that data can be retrieved using various search criteria and that the retrieved data is accurate and complete. This includes testing the search functionality and ensuring that search results are consistent.

- Security and Access Control Testing: Test the security and access control mechanisms to ensure that only authorized users can access archived data. Verify that user roles and permissions are correctly implemented and that data is protected from unauthorized access.

- Performance Testing: Measure the performance of the archival process, including data extraction, transformation, storage, and retrieval times. Identify any performance bottlenecks and optimize the process for efficiency.

- Error Handling and Logging Testing: Test the error handling and logging mechanisms to ensure that errors are correctly detected, logged, and handled. Verify that error messages are informative and that logs provide sufficient information for troubleshooting.

- Documentation and Reporting: Document all testing activities, including test cases, test results, and any issues encountered. Generate reports that summarize the testing results and provide recommendations for improvements.

Importance of Data Validation After Archiving

Data validation after archiving is essential to guarantee the integrity and reliability of the archived data. It verifies that the archived data accurately reflects the original data and that no data loss or corruption occurred during the archival process.

- Data Accuracy: Validation ensures that the archived data is accurate and free from errors. This is crucial for maintaining the reliability of the data for future use.

- Data Completeness: Validation confirms that all necessary data has been archived and that no data is missing. Completeness is vital for providing a comprehensive view of the archived information.

- Data Consistency: Validation verifies that the archived data is consistent with the original data, especially after transformations.

- Compliance: Data validation helps ensure compliance with regulatory requirements, such as data retention policies and data privacy regulations.

- Data Usability: Validated data is more easily accessible and usable for future analysis and reporting.

Procedures for Verifying Data Integrity Over Time

Verifying data integrity over time is an ongoing process that ensures the long-term reliability of archived data. It involves regular checks and maintenance to identify and address any potential issues.

- Checksum Verification: Implement checksums (e.g., MD5, SHA-256) during data archival and regularly verify them against the archived data. If the checksums do not match, it indicates that the data has been altered or corrupted. For example, if the original data has an MD5 checksum of `abc123def`, and the archived data’s checksum changes to `xyz456ghi`, it means the data has been corrupted.

- Periodic Data Audits: Conduct periodic audits of the archived data to ensure its integrity. This can involve sampling the archived data and comparing it to the original data to identify any discrepancies.

- Regular Data Retrieval and Verification: Regularly retrieve a sample of archived data and verify its accuracy and completeness. This helps to identify any issues with the retrieval process or the storage system.

- Storage System Monitoring: Monitor the storage system for any errors or performance issues. This includes monitoring disk space, data transfer rates, and storage system health.

- Data Replication and Backup: Implement data replication and backup strategies to protect against data loss. Replicating data to a secondary storage location provides a redundant copy of the data in case of a primary storage failure. Regular backups are essential for recovering data in case of accidental deletion or corruption.

- Data Migration: Periodically migrate archived data to new storage media or formats to ensure long-term accessibility and compatibility. This can involve migrating data from older storage formats (e.g., tape) to newer formats (e.g., cloud storage) to prevent data loss due to media degradation or obsolescence.

- Implement a Data Integrity Policy: Develop and enforce a data integrity policy that Artikels the procedures for verifying data integrity, including the frequency of checks, the tools to be used, and the responsibilities of data owners and administrators.

Long-Term Data Management and Maintenance

The long-term viability of archived data hinges on robust management and maintenance procedures. This encompasses not only the initial archival process but also the ongoing care and preservation of the data over extended periods. Proactive strategies are essential to mitigate data degradation, ensure accessibility, and adapt to evolving technological landscapes.

Procedures for Ongoing Maintenance of Archived Data

Ongoing maintenance is crucial to safeguard the integrity and accessibility of archived data. This involves a multifaceted approach that includes regular checks, proactive monitoring, and established protocols for addressing potential issues.

- Data Integrity Checks: Regular integrity checks are essential to verify the accuracy and completeness of the archived data. This process often involves:

- Checksum verification: Comparing the checksums of archived files with their original values to detect any corruption. This uses algorithms like SHA-256 or MD5. For example, if a file’s SHA-256 checksum changes, it indicates potential data corruption.

- Data validation: Validating the data against defined schemas or business rules to ensure its consistency and adherence to the intended format. This is especially important for structured data in databases.

- Bit rot detection: Implementing methods to identify and remediate bit rot, a gradual decay of data on storage media. This may involve periodic data refreshing or migration to new storage.

- Storage Media Monitoring: Continuous monitoring of storage media health is critical to prevent data loss. This includes:

- Hardware diagnostics: Utilizing diagnostic tools provided by storage vendors to identify potential hardware failures.

- Performance monitoring: Tracking storage performance metrics, such as read/write speeds and latency, to detect bottlenecks or degradation.

- Capacity management: Monitoring storage capacity utilization to prevent overfilling and ensure sufficient space for future data growth.

- Access Control and Security Audits: Regular audits of access controls and security measures are necessary to maintain data confidentiality and prevent unauthorized access. This involves:

- Reviewing user permissions: Periodically reviewing user access rights to ensure they align with current roles and responsibilities.

- Security vulnerability assessments: Conducting regular vulnerability scans to identify and address potential security weaknesses in the archival system.

- Log analysis: Analyzing security logs to detect any suspicious activity or potential security breaches.

- Data Refreshing: Data refreshing involves periodically copying archived data to new storage media to mitigate the risks of media degradation and bit rot. The frequency of refreshing depends on the storage technology and the criticality of the data. For example, magnetic tape storage may require refreshing every 3-7 years, while solid-state drives (SSDs) may have longer refresh cycles.

- Software and Hardware Updates: Keeping the archival system software and hardware up-to-date is crucial for security, performance, and compatibility. This involves:

- Applying security patches: Regularly applying security patches to address vulnerabilities and protect against potential threats.

- Upgrading software versions: Updating the archival software to the latest versions to benefit from new features, bug fixes, and performance improvements.

- Hardware upgrades: Replacing outdated hardware components to ensure optimal performance and compatibility with current technologies.

Strategies for Migrating Archived Data to New Storage Technologies

As storage technologies evolve, migrating archived data to new platforms becomes necessary to maintain accessibility, performance, and cost-effectiveness. A well-defined migration strategy minimizes downtime, data loss, and disruption to operations.

- Planning and Assessment: Before initiating a migration, a thorough planning and assessment phase is essential. This involves:

- Data inventory: Creating a comprehensive inventory of all archived data, including its size, format, and location.

- Technology evaluation: Evaluating different storage technologies based on factors such as cost, performance, scalability, and data retention requirements.

- Migration strategy development: Developing a detailed migration plan that Artikels the steps involved, the timeline, and the resources required.

- Data Extraction and Transformation: Data extraction and transformation are often necessary to prepare data for the new storage platform. This may involve:

- Data extraction: Extracting the data from the existing storage system.

- Data transformation: Converting the data to a format compatible with the new storage platform. This might involve changes in file formats, database schemas, or data structures.

- Data validation: Validating the transformed data to ensure its accuracy and consistency.

- Data Migration Methods: Various migration methods can be employed, depending on the volume of data, the desired downtime, and the available resources. These include:

- “Big Bang” migration: Transferring all data at once, which minimizes complexity but can result in significant downtime.

- Phased migration: Migrating data in stages, allowing for reduced downtime and easier troubleshooting.

- Online migration: Migrating data while the system remains online, which minimizes downtime but requires more complex planning and execution.

- Data Validation and Verification: After the data has been migrated, thorough validation and verification are crucial to ensure data integrity. This includes:

- Checksum verification: Verifying checksums to ensure data has been transferred without corruption.

- Data comparison: Comparing data in the old and new systems to identify any discrepancies.

- Functional testing: Testing the migrated data to ensure it functions as expected.

- Post-Migration Activities: After the data migration is complete, certain post-migration activities are necessary. This includes:

- Decommissioning the old storage system: Properly decommissioning the old storage system to free up resources and prevent data loss.

- Updating documentation: Updating the archival documentation to reflect the new storage platform and migration procedures.

- Ongoing monitoring: Implementing ongoing monitoring to ensure the performance and availability of the new storage system.

Importance of Documenting the Archival Process and Data Formats

Comprehensive documentation is a cornerstone of effective long-term data management. It provides a detailed record of the archival process, data formats, and related information, which is essential for data recovery, understanding, and future migrations.

- Documenting the Archival Process: Documenting the entire archival process is critical for reproducibility, troubleshooting, and future reference. This should include:

- Data sources: Identifying the sources of the data, including the retired applications and databases.

- Archival procedures: Detailing the steps involved in data extraction, transformation, and storage.

- Data validation processes: Describing the data validation steps performed to ensure data integrity.

- Retention policies: Outlining the data retention policies and their rationale.

- Access control mechanisms: Documenting the access control mechanisms and security measures in place.

- Documenting Data Formats: Detailed documentation of data formats is crucial for data interpretation and retrieval over time. This includes:

- File formats: Specifying the file formats used for storing archived data, such as CSV, XML, or proprietary formats.

- Data schema: Providing the data schema, including the data types, field definitions, and relationships between data elements.

- Data dictionaries: Creating data dictionaries to define the meaning and context of each data element.

- Data transformation rules: Documenting the rules used to transform data from its original format to the archived format.

- Benefits of Comprehensive Documentation: Well-maintained documentation provides numerous benefits:

- Data recovery: Facilitating data recovery in case of data loss or corruption.

- Data understanding: Enabling future users to understand and interpret the archived data.

- Data migration: Simplifying the migration of data to new storage technologies.

- Compliance: Supporting compliance with data retention regulations and industry standards.

- Knowledge transfer: Facilitating knowledge transfer between team members and over time.

- Documentation Maintenance: Documentation must be regularly updated and maintained to reflect changes in the archival process, data formats, and storage technologies. This involves:

- Version control: Using version control systems to track changes to the documentation.

- Regular reviews: Conducting regular reviews of the documentation to ensure its accuracy and completeness.

- Training: Providing training to team members on the documentation and its use.

Closing Notes

In conclusion, successfully handling data archival for retired applications demands a holistic strategy encompassing technical expertise, legal compliance, and strategic planning. By meticulously addressing data identification, implementing appropriate archival methods, and prioritizing security and accessibility, organizations can ensure the long-term preservation of valuable historical data. This approach not only mitigates risks associated with data loss and non-compliance but also unlocks opportunities for informed decision-making and continued business value derived from legacy application data.

Common Queries

What are the primary risks of not archiving data from retired applications?

The primary risks include potential data loss, non-compliance with regulatory requirements, inability to meet legal discovery obligations, and the loss of historical business insights. Furthermore, failure to archive can lead to reputational damage and financial penalties.

How do I determine the appropriate retention period for archived data?

Retention periods are determined by a combination of legal, regulatory, and business requirements. Consult legal counsel and review relevant industry standards and compliance regulations (e.g., GDPR, HIPAA, SOX) to establish appropriate retention schedules tailored to your specific data types and business needs.

What is the difference between data archiving and data backup?

Data archiving is the long-term preservation of data for historical or compliance purposes, often with less frequent access requirements. Data backup, on the other hand, is focused on short-term data recovery and business continuity, with the goal of quickly restoring data in case of a failure or disaster.

What are the cost considerations for data archival?

Cost considerations include storage costs, extraction and transformation expenses, security and access control implementation, data retrieval and search infrastructure, and ongoing maintenance. It is important to consider the volume of data, the archival method, and the desired level of accessibility and security when budgeting for data archival projects.