In a microservices architecture, where agility and independent deployment are the central goals, how services share data is a critical design decision. This article examines data sharing across services: how a shared database can undermine the benefits of microservices, and which strategies help preserve them.

We’ll delve into the core problems, exploring the pitfalls of tightly coupled data, and the advantages of embracing strategies that promote data consistency and efficient resource utilization. From understanding the intricacies of data consistency and transaction management to designing effective database strategies, this discussion provides insights for building resilient and scalable microservice systems.

The Challenge of Shared Databases in Microservices

Microservices architectures offer numerous advantages, including independent deployability, scalability, and technology diversity. However, when teams adopt a microservices approach, the temptation to share a single database across multiple services can arise. This seemingly convenient approach, while offering an initial simplicity, often leads to significant challenges that can undermine the core benefits of a microservices architecture.

The Core Problem of Data Sharing

The central issue with shared databases in a microservices environment is the tight coupling it creates between services. When multiple services directly access the same database schema, any change to the schema can potentially impact all services that rely on it. This interdependence hinders independent development and deployment, making it difficult to iterate quickly and adapt to changing business needs.

Common Issues Arising from Shared Database Architectures

Shared database architectures introduce several problems that complicate development, deployment, and maintenance. These issues directly counteract the advantages of microservices, leading to increased complexity and reduced agility.

- Tight Coupling: Services become tightly coupled to the database schema. A change in the schema, such as adding a new column or modifying a data type, requires careful coordination and potentially coordinated deployments across multiple services. This reduces the independence of services.

- Deployment Challenges: Coordinating deployments becomes complex. Deploying a new version of a service that interacts with a shared database often requires deploying other dependent services simultaneously, increasing the risk of errors and downtime.

- Scalability Bottlenecks: The shared database can become a bottleneck. As the application grows, the database may struggle to handle the increased load from multiple services, potentially impacting performance and availability. Scaling a shared database can be complex and expensive.

- Data Conflicts: Concurrent access to the database by multiple services can lead to data conflicts, requiring careful management of transactions, locking, and conflict resolution strategies. These strategies add complexity and can impact performance.

- Technology Limitations: The choice of database technology can limit the technology choices for individual services. If the shared database is a relational database, for example, services might be constrained to use SQL-based data access, even if a NoSQL database would be a better fit for a specific service’s data requirements.

- Data Integrity Challenges: Ensuring data integrity across multiple services accessing the same database requires careful design and implementation. It becomes more challenging to enforce consistent data models and maintain data consistency across different services.
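
The tight-coupling problem in the list above can be shown with a small sketch. It assumes an in-memory SQLite database standing in for the shared schema. The `orders` table, the two service functions, and the migration are hypothetical and chosen only for illustration.

```python
import sqlite3

# One in-memory database stands in for the shared schema (illustrative assumption).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, total REAL)")
conn.execute("INSERT INTO orders (id, total) VALUES (1, 19.99)")


def reporting_service_total(db):
    """Hypothetical second service that reads the shared table directly."""
    return db.execute("SELECT SUM(total) FROM orders").fetchone()[0]


def order_service_migrate(db):
    """Hypothetical schema change by the owning team: store integer cents."""
    db.execute("ALTER TABLE orders RENAME TO orders_old")
    db.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, total_cents INTEGER)")
    db.execute(
        "INSERT INTO orders (id, total_cents) "
        "SELECT id, CAST(ROUND(total * 100) AS INTEGER) FROM orders_old"
    )
    db.execute("DROP TABLE orders_old")


before = reporting_service_total(conn)   # works against the old schema
order_service_migrate(conn)              # one team changes "its" table
try:
    reporting_service_total(conn)
    broke = False
except sqlite3.OperationalError:         # the other service's query no longer matches
    broke = True
```

The reporting query fails only at runtime, after the migration has shipped, which is why schema changes on a shared database tend to force coordinated releases.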

Benefits of Microservices Undermined by Shared Databases

Microservices are designed to be independent and autonomous. Shared databases directly conflict with these principles, negating many of the benefits that microservices are intended to provide.

- Reduced Agility: Shared databases hinder the ability to quickly adapt to changing business needs. Changes to the database schema require careful planning and coordination across multiple teams, slowing down the development and deployment process.

- Impaired Scalability: The shared database can become a single point of failure and a bottleneck for scaling. Scaling a monolithic database is often more complex and expensive than scaling individual microservices.

- Increased Complexity: Managing a shared database across multiple services adds complexity to the architecture. It requires careful planning, design, and coordination to avoid conflicts and ensure data integrity.

- Reduced Fault Isolation: A failure in one service can potentially impact other services that share the same database. This reduces the fault isolation that is a key benefit of microservices.

- Limited Technology Choices: The choice of database technology can limit the technology choices for individual services, hindering the ability to use the best tool for the job.

Data Consistency and Transactions Across Services

Maintaining data consistency in a microservices architecture is significantly more complex than in a monolithic application. The distributed nature of microservices introduces challenges in ensuring that data remains accurate and reliable across multiple services, especially when a single business operation involves updates to data owned by different services. Addressing these challenges is crucial for the overall integrity and reliability of the system.

Challenges of Maintaining Data Consistency in a Distributed Environment

The distributed nature of microservices introduces several challenges when striving to maintain data consistency. These challenges stem from the inherent characteristics of distributed systems, including network latency, partial failures, and the need for eventual consistency in many scenarios.

- Network Latency: Communication between microservices occurs over a network. This introduces latency, meaning that updates to data in one service may not be immediately visible to other services. This delay can lead to inconsistencies if not managed carefully. For instance, consider an e-commerce system. If a customer places an order, the inventory service needs to update the stock levels. If the network is slow, the order service might confirm the order before the inventory service updates, potentially leading to overselling.

- Partial Failures: In a distributed system, it’s possible for one service to fail while others continue to operate. If a transaction spans multiple services and one fails, the entire transaction may need to be rolled back to maintain consistency. This is especially challenging if the services have different failure rates or are deployed on different infrastructure.

- Data Replication and Synchronization: Microservices often store their data independently. Ensuring data consistency across replicated data stores adds another layer of complexity. This can involve distributed consensus algorithms such as Paxos or Raft, or more relaxed consistency models such as the tunable, quorum-based consistency offered by databases like Apache Cassandra.

- Eventual Consistency: The need to relax consistency guarantees to improve performance and availability can lead to situations where data is temporarily inconsistent. Eventual consistency means that, over time, the data will converge to a consistent state, but there may be a delay before all services see the same data.

- Transaction Management: Coordinating transactions across multiple services is complex. Traditional ACID (Atomicity, Consistency, Isolation, Durability) transactions are difficult to implement in a distributed environment, and alternative strategies are often required.

Transaction Management Strategies

Various strategies exist to manage transactions across microservices, each with its trade-offs in terms of consistency, performance, and complexity. Two prominent approaches are the two-phase commit protocol and the Saga pattern.

- Two-Phase Commit (2PC): The two-phase commit protocol is a distributed transaction protocol designed to ensure atomicity across multiple services. In the first phase (prepare), a transaction coordinator asks all participating services if they are ready to commit the transaction. Each service then indicates its readiness. In the second phase (commit), the coordinator instructs all services to either commit or roll back the transaction based on the responses received in the first phase. Because every participant must hold its locks until the coordinator decides, 2PC buys atomicity at the cost of availability and throughput.

- Saga Pattern: The Saga pattern is an alternative to 2PC, especially suited for long-lived transactions. A Saga is a sequence of local transactions, where each local transaction updates data within a single service. If a local transaction fails, the Saga executes compensating transactions to undo the changes made by the preceding transactions. There are two main Saga coordination strategies:

- Choreography-based Sagas: Each service listens for events and decides when to execute its local transaction and publish events for other services to react to. This approach is more decentralized and easier to implement but can be harder to manage as the system grows.

- Orchestration-based Sagas: A central orchestrator service is responsible for coordinating the sequence of local transactions. This approach offers more control and simplifies error handling but introduces a single point of failure.
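
The prepare and commit phases of 2PC described above can be sketched in a few lines. This is a minimal in-memory illustration, not a production protocol: the `Participant` class and `two_phase_commit` function are hypothetical names, and the sketch ignores timeouts, coordinator crashes, and durable logging.

```python
class Participant:
    """Hypothetical in-memory participant; a real one would hold locks and log durably."""

    def __init__(self, name, can_commit=True):
        self.name = name
        self.can_commit = can_commit
        self.state = "idle"

    def prepare(self):
        # Phase 1: vote yes only if the local work could be committed.
        self.state = "prepared" if self.can_commit else "aborted"
        return self.can_commit

    def commit(self):
        self.state = "committed"

    def rollback(self):
        self.state = "aborted"


def two_phase_commit(participants):
    """Return True if every participant committed, False if all rolled back."""
    votes = [p.prepare() for p in participants]   # phase 1: collect votes
    if all(votes):
        for p in participants:                    # phase 2: unanimous yes, commit
            p.commit()
        return True
    for p in participants:                        # phase 2: any no, roll back
        p.rollback()
    return False


ok_group = [Participant("orders"), Participant("payments")]
ok_result = two_phase_commit(ok_group)

bad_group = [Participant("orders"), Participant("payments", can_commit=False)]
bad_result = two_phase_commit(bad_group)
```

A single "no" vote aborts the whole group, which is what gives 2PC its all-or-nothing behavior.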

Scenarios for Eventual and Strong Consistency

The choice between eventual and strong consistency depends on the specific requirements of the application and the acceptable trade-offs between consistency, availability, and performance.

- Eventual Consistency: Eventual consistency is often acceptable in scenarios where immediate consistency is not critical and where high availability and performance are desired.

- Example: Consider a social media platform. If a user updates their profile picture, it’s acceptable if other users see the new picture a few seconds or minutes later. The system prioritizes availability; users can still browse and interact with the platform even if some data is temporarily out of sync.

- Benefits: High availability, improved performance, and scalability.

- Drawbacks: Data may be inconsistent for a period, which can lead to user confusion or incorrect calculations.

- Strong Consistency: Strong consistency is required in scenarios where data accuracy is paramount and where data integrity is critical.

- Example: In a banking system, when transferring funds between accounts, it’s crucial that the transaction is either fully completed or completely rolled back. The system cannot allow partial updates. The amount must be debited from one account and credited to the other.

- Benefits: Data is always consistent, and the system is easier to reason about.

- Drawbacks: Can lead to reduced availability and performance due to the need for locking and synchronization.
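
As a rough illustration of the banking example, the sketch below performs a transfer inside one local ACID transaction, assuming both accounts live in the same service's database (here an in-memory SQLite database). The `accounts` table and the `transfer` and `balances` helpers are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE accounts ("
    "id TEXT PRIMARY KEY, balance INTEGER NOT NULL CHECK (balance >= 0))"
)
conn.executemany("INSERT INTO accounts VALUES (?, ?)", [("alice", 100), ("bob", 50)])
conn.commit()


def transfer(db, src, dst, amount):
    """Credit and debit in one local transaction; returns False if rolled back."""
    try:
        with db:  # commits on success, rolls back if an exception escapes
            db.execute(
                "UPDATE accounts SET balance = balance + ? WHERE id = ?", (amount, dst)
            )
            # The CHECK constraint rejects an overdraft; the credit above is then undone.
            db.execute(
                "UPDATE accounts SET balance = balance - ? WHERE id = ?", (amount, src)
            )
        return True
    except sqlite3.IntegrityError:
        return False


def balances(db):
    return dict(db.execute("SELECT id, balance FROM accounts"))


first = transfer(conn, "alice", "bob", 30)     # succeeds
after_first = balances(conn)
second = transfer(conn, "alice", "bob", 500)   # would overdraw alice, so it rolls back
after_second = balances(conn)
```

The credit is deliberately issued before the debit so that the failed transfer demonstrates atomicity: the already-applied credit disappears when the transaction rolls back.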

The Saga Pattern

In a microservices architecture, managing transactions that span multiple services is a complex undertaking. Traditional ACID transactions, designed for a single database, are often impractical in this distributed environment: each service owns its data, and a cross-service transaction would require every participant to be available and to hold locks until the transaction completes. The Saga pattern emerges as a solution, offering a way to maintain data consistency across services while acknowledging the inherent challenges of distributed systems.

This pattern breaks down a large transaction into a sequence of smaller, local transactions, each managed by a single service.

Saga Pattern’s Role in Distributed Transactions

The Saga pattern provides a mechanism for achieving eventual consistency across multiple microservices. It addresses the problem of coordinating operations across service boundaries without relying on distributed transactions. Instead of a single, atomic transaction, a Saga comprises a series of steps. Each step updates a single service’s data, and the entire Saga ensures that either all steps succeed (and the overall transaction is considered successful) or that compensating transactions are executed to undo any partial operations (and the overall transaction is considered failed).

This approach allows for greater service autonomy, as each service manages its own data and transactions. There are two primary approaches to implementing the Saga pattern:

- Orchestration: A central orchestrator service coordinates the Saga’s steps. This orchestrator knows the sequence of operations and handles the execution and compensation logic.

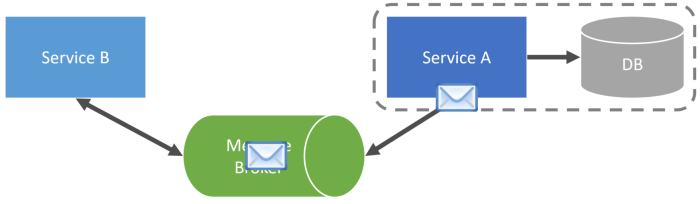

- Choreography: Services themselves communicate and coordinate their actions. Each service listens for events and reacts accordingly, triggering its own local transactions and potentially publishing events for other services to react to.

Orchestration vs. Choreography in the Saga Pattern

The choice between orchestration and choreography depends on factors such as the complexity of the transaction, the level of coupling desired, and the team’s expertise.

Orchestration involves a central component that manages the workflow.

- An orchestrator service dictates the order of operations.

- It sends commands to each service.

- It receives replies and determines the next step or initiates compensation if a failure occurs.

The benefits of orchestration include:

- Centralized control and easier management of the Saga’s logic.

- Simplified error handling and rollback mechanisms.

- Easier debugging and monitoring, as the flow is defined in one place.

However, orchestration also has drawbacks:

- The orchestrator becomes a single point of failure.

- It introduces coupling, as the orchestrator needs to know about all participating services.

- The orchestrator can become a bottleneck as the number of services grows.

Choreography, on the other hand, distributes the decision-making logic across services.

- Each service listens for events related to its own operations.

- When an event occurs, a service performs its local transaction and publishes new events.

- These new events trigger actions in other services.

The benefits of choreography include:

- Decoupling services, as they only need to know about the events they react to.

- Increased resilience, as there is no single point of failure.

- Improved scalability, as the workload is distributed.

The drawbacks of choreography are:

- Increased complexity in understanding and debugging the overall flow.

- Difficulties in managing complex transaction flows with many services.

- Potential for circular dependencies and event storms if not carefully designed.

Implementing a Saga Pattern with Example Service Interactions

Let’s consider a simplified e-commerce scenario involving three services: `Order Service`, `Payment Service`, and `Inventory Service`. A customer places an order, triggering a Saga.

Example Scenario: A customer places an order for a product.

Orchestration Approach Example (Using a Central Orchestrator):

- The `Order Service` receives the order and sends an “OrderCreated” event to the Orchestrator.

- The Orchestrator receives the event.

- The Orchestrator sends a “ProcessPayment” command to the `Payment Service`.

- The `Payment Service` processes the payment and sends a “PaymentProcessed” event to the Orchestrator. If the payment fails, it sends a “PaymentFailed” event.

- If “PaymentProcessed” is received, the Orchestrator sends a “ReserveInventory” command to the `Inventory Service`.

- The `Inventory Service` reserves the inventory and sends an “InventoryReserved” event to the Orchestrator. If inventory is unavailable, it sends an “InventoryNotReserved” event.

- If “InventoryReserved” is received, the Orchestrator marks the order as “Confirmed” in the `Order Service`.

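- If any step fails (e.g., “PaymentFailed” or “InventoryNotReserved”), the Orchestrator triggers compensating transactions for the steps that have already completed. For instance, if the payment fails, no inventory has been reserved yet, so the Orchestrator only sends a “CancelOrder” command to the `Order Service`. If the inventory reservation fails after a successful payment, the Orchestrator also instructs the `Payment Service` to refund the payment before cancelling the order.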
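
The orchestrated flow above can be sketched as a list of steps, each paired with a compensating action that the orchestrator runs in reverse order on failure. This is a simplified, synchronous illustration. “ProcessPayment”, “ReserveInventory”, and “CancelOrder” come from the scenario, while “RefundPayment”, “ReleaseInventory”, “ConfirmOrder”, and the `run_order_saga` function are hypothetical names introduced for the sketch.

```python
def run_order_saga(payment_ok=True, inventory_ok=True):
    """Orchestrate the order saga; returns (final_status, log of commands sent)."""
    log = []

    def process_payment():
        log.append("ProcessPayment")
        return payment_ok

    def refund_payment():
        log.append("RefundPayment")        # hypothetical compensating command

    def reserve_inventory():
        log.append("ReserveInventory")
        return inventory_ok

    def release_inventory():
        log.append("ReleaseInventory")     # hypothetical compensating command

    # Each step is (action, compensation); order matters.
    steps = [(process_payment, refund_payment), (reserve_inventory, release_inventory)]
    completed = []
    for action, compensation in steps:
        if action():
            completed.append(compensation)
            continue
        # A step failed: undo only the steps that completed, newest first.
        for undo in reversed(completed):
            undo()
        log.append("CancelOrder")
        return "Cancelled", log
    log.append("ConfirmOrder")             # hypothetical command name
    return "Confirmed", log


happy = run_order_saga()
no_stock = run_order_saga(inventory_ok=False)
declined = run_order_saga(payment_ok=False)
```

Keeping the compensation next to its action makes it harder to add a step without deciding how to undo it.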

Choreography Approach Example (Using Event-Driven Communication):

- The `Order Service` receives the order and publishes an “OrderCreated” event.

- The `Payment Service` subscribes to “OrderCreated” events. Upon receiving it, it processes the payment and publishes a “PaymentProcessed” or “PaymentFailed” event.

- The `Inventory Service` subscribes to “OrderCreated” events. Upon receiving it, it reserves the inventory and publishes an “InventoryReserved” or “InventoryNotReserved” event.

- The `Order Service` subscribes to “PaymentProcessed” and “InventoryReserved” events. Upon receiving both, it marks the order as “Confirmed”. If it receives a “PaymentFailed” or “InventoryNotReserved” event, it marks the order as “Cancelled”.

- If any step fails (e.g., the payment fails), the corresponding service publishes a failure event, and the other services react to it by running their own compensating actions. For example, if the payment fails, the `Payment Service` publishes a “PaymentFailed” event, which triggers the `Inventory Service` to release the inventory if it had already been reserved, and the `Order Service` to cancel the order.
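
The choreographed flow above might look like the following sketch, which assumes a synchronous in-memory event bus in place of a real message broker. The `EventBus` class and `place_order` function are hypothetical; the event names are the ones used in the scenario.

```python
from collections import defaultdict


class EventBus:
    """Hypothetical synchronous bus; a real system would use a message broker."""

    def __init__(self):
        self.handlers = defaultdict(list)

    def subscribe(self, event, handler):
        self.handlers[event].append(handler)

    def publish(self, event, order_id):
        for handler in list(self.handlers[event]):
            handler(order_id)


def place_order(order_id, payment_ok=True, inventory_ok=True):
    """Wire three services to a fresh bus and place one order.

    Returns (order status, set of order ids that still hold reserved stock).
    """
    bus = EventBus()
    status = {}        # Order Service state
    seen = set()       # success events the Order Service has received
    reserved = set()   # Inventory Service state
    dead = set()       # orders the Inventory Service knows have failed

    def pay(oid):      # Payment Service reacting to OrderCreated
        bus.publish("PaymentProcessed" if payment_ok else "PaymentFailed", oid)

    def reserve(oid):  # Inventory Service reacting to OrderCreated
        if oid in dead:
            return     # payment already failed, so do not reserve
        if inventory_ok:
            reserved.add(oid)
            bus.publish("InventoryReserved", oid)
        else:
            bus.publish("InventoryNotReserved", oid)

    def release(oid):  # Inventory Service compensating on PaymentFailed
        dead.add(oid)
        reserved.discard(oid)

    def succeeded(event):  # Order Service: confirm once both successes arrive
        def handler(oid):
            seen.add(event)
            if seen == {"PaymentProcessed", "InventoryReserved"}:
                status[oid] = "Confirmed"
        return handler

    def cancel(oid):   # Order Service: cancel on any failure event
        status[oid] = "Cancelled"

    bus.subscribe("OrderCreated", pay)
    bus.subscribe("OrderCreated", reserve)
    bus.subscribe("PaymentFailed", release)
    bus.subscribe("PaymentFailed", cancel)
    bus.subscribe("InventoryNotReserved", cancel)
    bus.subscribe("PaymentProcessed", succeeded("PaymentProcessed"))
    bus.subscribe("InventoryReserved", succeeded("InventoryReserved"))

    status[order_id] = "Pending"
    bus.publish("OrderCreated", order_id)
    return status[order_id], reserved
```

For brevity the sketch omits one reaction a real system would need: the `Payment Service` refunding a payment when “InventoryNotReserved” arrives. The `dead` set shows why choreography is harder to reason about, since each service has to cope with events arriving in any order.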

In both approaches, the key is to ensure that the Saga maintains data consistency across the services. This example demonstrates how the Saga pattern facilitates distributed transactions, allowing services to operate independently while maintaining data integrity.

Eventual Consistency in Microservices

Eventual consistency is a crucial concept in microservices architecture, particularly when dealing with data distributed across multiple services. It acknowledges that achieving immediate consistency across all services after a data modification is often impractical or introduces unacceptable performance penalties. Instead, it focuses on ensuring that data will eventually become consistent across all services, even if there’s a delay. This approach prioritizes availability and responsiveness, accepting a temporary state of inconsistency.

Understanding the Essence of Eventual Consistency

Eventual consistency means that if no new updates are made to a piece of data, all accesses to that data will eventually return the same value. This doesn’t guarantee when the data will converge, but it does promise that it will happen. It’s a trade-off between immediate consistency and performance/availability. In a microservices environment, this is often a necessary compromise to allow services to operate independently and scale efficiently.

Key Characteristics:

- Relaxed Consistency: Unlike strong consistency, which requires all replicas to be updated before a read can occur, eventual consistency allows reads to happen even while updates are propagating.

- High Availability: Services remain available even during temporary inconsistencies. This is because they don’t need to wait for all other services to be updated before responding to requests.

- Partition Tolerance: Eventual consistency is well-suited for distributed systems where network partitions are possible. Services can continue to function independently even if they can’t communicate with each other momentarily.

Real-World Analogy: Consider a bank account. When you deposit money, the transaction might not immediately reflect in all systems (e.g., online banking, ATM, mobile app). However, eventually, all systems will show the updated balance. This delay is acceptable for the benefit of faster transaction processing and system availability.
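
The convergence behavior described in this section can be illustrated with a toy model. It assumes three in-memory replicas, a single writer, and a last-writer-wins rule based on a version counter. The `Replica` and `Store` classes are hypothetical and ignore concurrent writers and clock issues.

```python
class Replica:
    """Hypothetical replica holding one value tagged with a version number."""

    def __init__(self):
        self.version = 0
        self.value = None

    def apply(self, version, value):
        # Last-writer-wins: ignore updates older than what we already hold.
        if version > self.version:
            self.version, self.value = version, value


class Store:
    def __init__(self, n):
        self.replicas = [Replica() for _ in range(n)]
        self.pending = []   # (replica index, version, value) not yet delivered
        self.clock = 0

    def write(self, value):
        """Apply to replica 0 immediately; queue the update for the others."""
        self.clock += 1
        self.replicas[0].apply(self.clock, value)
        for i in range(1, len(self.replicas)):
            self.pending.append((i, self.clock, value))

    def read(self, i):
        return self.replicas[i].value

    def propagate(self):
        """Deliver queued updates newest first, to show that order does not matter."""
        while self.pending:
            i, version, value = self.pending.pop()
            self.replicas[i].apply(version, value)


store = Store(3)
store.write(100)
store.write(150)
stale_read = store.read(1)     # replica 1 has not received anything yet
store.propagate()
converged = [store.read(i) for i in range(3)]
```

Reads remain available throughout; they are simply stale until propagation finishes, which mirrors the bank balance analogy.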

Implementing Eventual Consistency: Strategies and Techniques

Several strategies can be employed to achieve eventual consistency in a microservices environment. The choice of strategy depends on the specific requirements of the application, the nature of the data, and the acceptable level of inconsistency.

Database Technologies and Solutions

Choosing the right database technology is a critical decision in a microservices architecture. The selection impacts data consistency, performance, scalability, and the overall success of each service. This section explores various database technologies suitable for microservices, their advantages, disadvantages, and strategies for making informed choices.

Relational Databases in Microservices

Relational databases, such as PostgreSQL, MySQL, and SQL Server, are traditional choices for managing structured data. Their ACID properties (Atomicity, Consistency, Isolation, Durability) provide strong data consistency guarantees.

- Advantages:

- ACID Transactions: Relational databases ensure data integrity through ACID properties, which is crucial for services requiring strong consistency.

- Mature Technology: Relational databases are well-established, with extensive tooling, community support, and a deep understanding of their operational characteristics.

- Data Integrity: Features like foreign keys and constraints help enforce data integrity, ensuring data quality.

- SQL Proficiency: Developers generally have a strong understanding of SQL, the standard language for interacting with relational databases.

- Disadvantages:

- Tight Coupling: Using a shared relational database can lead to tight coupling between services, making independent deployments challenging.

- Scalability Challenges: Scaling relational databases horizontally can be complex and may require sharding, which adds operational overhead.

- Schema Rigidity: Changes to the database schema can be time-consuming and potentially disruptive to multiple services.

- Performance Bottlenecks: Complex queries and joins can lead to performance bottlenecks, especially under heavy load.

- Use Cases:

- Services that require strong data consistency and ACID transactions, such as financial transactions.

- Services with complex data relationships and the need for data integrity enforcement.

- Services that benefit from the maturity and tooling of relational database systems.

NoSQL Databases in Microservices

NoSQL databases offer flexible data models and are designed for scalability and performance. They come in various types, including document stores, key-value stores, and graph databases.

- Advantages:

- Scalability: NoSQL databases are generally designed for horizontal scalability, allowing for easier scaling as data volume and traffic increase.

- Flexible Data Models: NoSQL databases often support flexible data models, allowing for schema-less designs and easier evolution of data structures.

- High Performance: NoSQL databases are often optimized for specific data access patterns, leading to improved performance for certain workloads.

- Loose Coupling: Each microservice can manage its own data store, leading to loose coupling between services.

- Disadvantages:

- Eventual Consistency: Many NoSQL databases offer eventual consistency, which may not be suitable for services requiring strong consistency.

- Limited ACID Support: While some NoSQL databases offer ACID properties, the level of support may vary, and may be more limited than relational databases.

- Operational Complexity: Managing and monitoring NoSQL databases can be complex, requiring specialized knowledge.

- Query Limitations: NoSQL databases may have limitations in terms of complex querying capabilities compared to SQL.

- Use Cases:

- Services that require high scalability and performance, such as content delivery and session management.

- Services that benefit from flexible data models, such as product catalogs and user profiles.

- Services that can tolerate eventual consistency, such as recommendation engines and analytics platforms.

Choosing the Right Database Technology

Selecting the appropriate database technology involves considering several factors, including the service’s data requirements, consistency needs, performance requirements, and scalability goals.

- Data Model:

- If the data is highly structured with complex relationships, a relational database might be a good choice.

- If the data is unstructured or semi-structured, or if the data model is likely to evolve frequently, a NoSQL database might be more suitable.

- Consistency Requirements:

- For services requiring strong consistency, a relational database with ACID properties is generally preferred.

- For services that can tolerate eventual consistency, a NoSQL database might be a better fit.

- Performance and Scalability:

- If the service requires high read throughput and horizontal scalability, a NoSQL database can be a good choice.

- If the service requires complex queries and joins, a relational database might be more suitable, although careful design is needed for scalability.

- Team Expertise:

- Consider the team’s existing knowledge and experience with different database technologies.

- Choosing a database that the team is familiar with can speed up development and reduce operational overhead.

- Cost:

- Consider the cost of the database technology, including licensing, infrastructure, and operational costs.

- Open-source options are often a cost-effective choice.

Choosing the right database technology is a crucial step in designing a microservices architecture. A well-chosen database can enhance the performance, scalability, and maintainability of individual services, while an inappropriate choice can lead to performance bottlenecks, data consistency issues, and increased operational complexity.

Data Migration and Schema Evolution

As individual services evolve, their data models and underlying schemas must change with them, so data migration and schema evolution are central to continuous delivery in a microservices system. These changes need careful planning and execution to minimize downtime, preserve data integrity, and avoid disrupting service functionality.

Strategies for Migrating Data Between Database Schemas

Migrating data between schemas involves transferring data from an old schema to a new one. Several strategies can be employed, each with its advantages and disadvantages, depending on the complexity of the changes and the acceptable downtime.

- Big Bang Migration: This approach involves shutting down the application, migrating all the data, and then bringing the application back online. It is simple to implement but results in significant downtime, making it unsuitable for production environments with high availability requirements. This is generally only acceptable for smaller datasets or during off-peak hours.

- Blue/Green Deployment: This strategy uses two identical environments: the “blue” environment (current production) and the “green” environment (new deployment with the updated schema). Data is migrated to the green environment, and once validated, traffic is switched to the green environment, minimizing downtime. This approach requires infrastructure to support two complete environments.

- Canary Release with Data Migration: A small percentage of traffic is directed to the new version of the service with the new schema. Data is migrated incrementally, and the new service is monitored for issues. If the canary deployment is successful, the data migration is scaled, and more traffic is directed to the new service. This strategy allows for phased rollouts and minimizes risk.

- Parallel Run/Dual Write: Both the old and new schemas are updated simultaneously. The application writes data to both schemas, allowing for verification of the new schema’s data integrity before switching over. This approach requires careful coordination to ensure data consistency and avoid conflicts. After the data in the new schema is validated, the application can be switched to reading from the new schema and the old schema can be decommissioned.

- Incremental Migration: Data is migrated in smaller batches over time, reducing the impact of any single migration operation. This can be combined with a “strangler fig” pattern, where new features are built on the new schema, and existing features are gradually migrated. This is useful when the schema changes are extensive and complex.
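The dual-write strategy described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a production implementation: the two in-memory maps stand in for the old and new schemas, and the class name `DualWriteUserStore`, the "full name" versus "first/last" layout, and all method names are hypothetical.

```java
import java.util.HashMap;
import java.util.Map;

/** Dual-write sketch: writes go to both schemas, reads switch over only after verification. */
class DualWriteUserStore {
    // Stand-ins for the two schemas; a real service would use two tables or databases.
    private final Map<Long, String> oldStore = new HashMap<>();   // id -> "First Last"
    private final Map<Long, String[]> newStore = new HashMap<>(); // id -> {first, last}
    private boolean readFromNew = false;

    /** Simulates a row written before dual writes were enabled. */
    void saveLegacy(long id, String fullName) {
        oldStore.put(id, fullName);
    }

    /** Dual write: every new write lands in both schemas. */
    void save(long id, String first, String last) {
        String[] parts = {first, last};
        oldStore.put(id, join(parts));
        newStore.put(id, parts);
    }

    /** Copies rows that exist only in the old schema into the new one. */
    void backfill() {
        for (Map.Entry<Long, String> e : oldStore.entrySet()) {
            newStore.computeIfAbsent(e.getKey(), id -> split(e.getValue()));
        }
    }

    /** Returns true when every old row has an equivalent row in the new schema. */
    boolean verify() {
        if (oldStore.size() != newStore.size()) {
            return false;
        }
        for (Map.Entry<Long, String> e : oldStore.entrySet()) {
            String[] parts = newStore.get(e.getKey());
            if (parts == null || !e.getValue().equals(join(parts))) {
                return false;
            }
        }
        return true;
    }

    /** Switches reads to the new schema only if verification passes. */
    boolean switchReads() {
        readFromNew = verify();
        return readFromNew;
    }

    String displayName(long id) {
        if (readFromNew) {
            String[] parts = newStore.get(id);
            return parts == null ? null : join(parts);
        }
        return oldStore.get(id);
    }

    private static String[] split(String fullName) {
        int space = fullName.indexOf(' ');
        return space < 0
                ? new String[] {fullName, ""}
                : new String[] {fullName.substring(0, space), fullName.substring(space + 1)};
    }

    private static String join(String[] parts) {
        return parts[1].isEmpty() ? parts[0] : parts[0] + " " + parts[1];
    }
}
```

Keeping the read switch behind `verify()` mirrors the order given in the text: validate the new schema first, then move reads, and only afterwards decommission the old schema.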

Handling Schema Changes and Data Migrations with Zero Downtime

Achieving zero downtime during schema changes and data migrations is a challenging but achievable goal. Several techniques can be employed to minimize or eliminate downtime.

- Backward Compatibility: Design new schema changes to be backward-compatible with the existing application code. This allows the old version of the service to continue operating while the data migration happens in the background. This often involves adding new columns or tables without removing or altering existing ones.

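- Forward Compatibility: Make the currently deployed application code tolerant of the schema it will meet next, for example by ignoring columns it does not recognize. This lets the old version of the service keep running after the schema has been expanded, and lets old and new versions run side by side while the data migration is in progress.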

- Online Schema Migrations: Utilize database-specific tools and features that allow for schema changes without locking tables or interrupting service availability. For example, tools like pt-online-schema-change for MySQL can be used.

- Versioned Schemas: Implement a system for managing different versions of the database schema. This can involve using schema migration tools that track and apply changes in a controlled and repeatable manner.

- Feature Flags: Use feature flags to control the rollout of new features that depend on the new schema. This allows for incremental releases and the ability to quickly roll back changes if necessary.

- Data Transformation Pipelines: Implement data transformation pipelines to transform data from the old schema to the new schema. This can involve using tools like Apache Kafka or Apache Beam to process and transform data in real-time.
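As a rough illustration of how backward compatibility and feature flags work together, the sketch below reads rows written before or after a column rename and only starts writing the new column when a flag is on. The column names `email` and `contact_email`, the flag, and the class `CompatibleCustomerMapper` are all assumptions made for the example; rows are modeled as plain maps rather than real database rows.

```java
import java.util.HashMap;
import java.util.Map;

/** Sketch of an additive schema change guarded by a feature flag. */
class CompatibleCustomerMapper {
    // Hypothetical feature flag: when true, writes also populate the new column.
    private final boolean writeNewColumn;

    CompatibleCustomerMapper(boolean writeNewColumn) {
        this.writeNewColumn = writeNewColumn;
    }

    /** Reads a row that may have been written before or after the schema change. */
    static String readEmail(Map<String, Object> row) {
        Object value = row.get("contact_email"); // new column, added by the schema change
        if (value == null) {
            value = row.get("email");            // old column, kept until every reader has moved
        }
        return value == null ? null : value.toString();
    }

    /** Builds the row to persist; the old column is always written so older versions keep working. */
    Map<String, Object> toRow(long id, String email) {
        Map<String, Object> row = new HashMap<>();
        row.put("id", id);
        row.put("email", email);
        if (writeNewColumn) {
            row.put("contact_email", email);
        }
        return row;
    }
}
```

Turning the flag off is the rollback path: readers fall back to the old column, so no schema change has to be reverted.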

Tools and Techniques for Managing Database Schema Changes

Managing database schema changes effectively requires a combination of tools and techniques. These tools help automate the process, track changes, and ensure data integrity.

- Schema Migration Tools: These tools automate the process of applying schema changes. Examples include:

  - Flyway: A popular tool for managing database migrations. It supports a wide range of databases and uses SQL scripts to define changes.

  - Liquibase: Another widely used tool that allows for defining schema changes using SQL, XML, YAML, or JSON. It supports database refactoring and version control.

  - Rails Migrations (for Ruby on Rails): A framework-specific tool for managing database schema changes.

- Database Version Control: Use version control systems (e.g., Git) to track schema changes alongside application code. This allows for easy rollback and collaboration.

- Data Validation and Testing: Implement data validation and testing to ensure data integrity during and after migrations. This includes:

  - Unit tests: To validate individual data transformation steps.

  - Integration tests: To validate the entire migration process.

- Automated Deployment Pipelines: Integrate schema migration into the automated deployment pipeline to ensure changes are applied consistently across all environments.

- Monitoring and Alerting: Monitor the migration process and set up alerts to detect and respond to any issues promptly. This involves monitoring database performance, data integrity, and application behavior.

- Database-Specific Tools: Utilize database-specific tools for online schema changes, such as `pt-online-schema-change` for MySQL or similar features offered by other database vendors.
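The core idea behind versioned migration tools, applying pending changes in version order and recording what has already run, can be sketched as follows. This is not the API of Flyway, Liquibase, or any other tool: `MigrationRunner`, its methods, and the in-memory history set (standing in for a schema history table) are hypothetical names used only for illustration.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;
import java.util.TreeSet;
import java.util.function.Consumer;

/** Sketch of a versioned migration runner; not modeled on any specific tool's API. */
class MigrationRunner {
    private final TreeMap<Integer, String> migrations = new TreeMap<>(); // version -> SQL script
    private final Set<Integer> applied = new TreeSet<>(); // stand-in for a schema history table

    void register(int version, String sql) {
        if (migrations.putIfAbsent(version, sql) != null) {
            throw new IllegalArgumentException("duplicate migration version " + version);
        }
    }

    /**
     * Applies every migration that has not run yet, in ascending version order,
     * and returns the versions that were executed by this call.
     */
    List<Integer> migrate(Consumer<String> executor) {
        List<Integer> executed = new ArrayList<>();
        for (Map.Entry<Integer, String> migration : migrations.entrySet()) {
            if (applied.contains(migration.getKey())) {
                continue; // already recorded in the history, so it is skipped
            }
            executor.accept(migration.getValue()); // a real runner would execute this in a transaction
            applied.add(migration.getKey());
            executed.add(migration.getKey());
        }
        return executed;
    }
}
```

Because the history is consulted on every run, calling `migrate` from each deployment is repeatable: environments that are behind catch up, and environments that are current do nothing.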

Database Connection Pooling and Resource Management

In a microservices architecture, where numerous services may need to interact with databases, efficient database connection management becomes critical. Database connection pooling and effective resource management are essential for maintaining performance, scalability, and stability. Without these, applications can experience significant slowdowns, resource exhaustion, and ultimately, failures.

Importance of Database Connection Pooling in Microservices

Database connection pooling plays a vital role in optimizing database interactions within a microservices environment. It provides a mechanism to reuse database connections, which significantly reduces the overhead associated with establishing new connections for each request.

- Reduced Connection Overhead: Establishing a new database connection involves a handshake process that consumes time and resources. Connection pooling pre-establishes a pool of connections, ready for immediate use. This eliminates the need to create a new connection for every database request, thereby reducing latency.

- Improved Performance: By reusing existing connections, connection pooling minimizes the time spent on connection setup and teardown. This leads to faster response times and improved overall application performance. Imagine 100 microservices, each receiving 100 requests per second and opening a new database connection for every request: the database server would face roughly 10,000 connection attempts per second. Connection pooling mitigates this by maintaining a limited number of persistent connections.

- Enhanced Scalability: Connection pooling enables applications to handle a larger volume of requests. The pool manages the available connections, allowing services to scale more effectively without exhausting database resources. A well-configured pool can efficiently distribute the workload across available connections.

- Resource Optimization: Connection pooling reduces the load on the database server by limiting the number of active connections. This prevents resource exhaustion and improves the database server’s ability to handle concurrent requests from multiple microservices.

- Simplified Connection Management: Connection pooling libraries handle the complexities of connection creation, management, and closing. Developers do not need to manually manage these aspects, simplifying code and reducing the risk of connection leaks.
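To make the reuse mechanism concrete, here is a deliberately small pool built on a blocking queue. It is a sketch under simplifying assumptions, not a substitute for a real pooling library: `FakeConnection` stands in for a database connection, there is no validation or eviction, and the names `TinyPool` and `Lease` are invented for the example.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

/** Minimal fixed-size pool: connections are created once and then reused. */
class TinyPool {
    /** Stand-in for an expensive-to-create database connection. */
    static final class FakeConnection {
        final int id;
        FakeConnection(int id) { this.id = id; }
    }

    /** Handle given to callers; closing it returns the connection to the pool. */
    final class Lease implements AutoCloseable {
        final FakeConnection connection;
        private boolean released;

        Lease(FakeConnection connection) { this.connection = connection; }

        @Override
        public void close() {
            if (!released) {        // guard against returning the same connection twice
                released = true;
                idle.offer(connection);
            }
        }
    }

    private final BlockingQueue<FakeConnection> idle;
    private final AtomicInteger created = new AtomicInteger();

    TinyPool(int size) {
        idle = new ArrayBlockingQueue<>(size);
        for (int i = 0; i < size; i++) {
            idle.add(new FakeConnection(created.incrementAndGet())); // pre-establish connections
        }
    }

    /** Waits up to timeoutMillis for a free connection, then fails instead of blocking forever. */
    Lease borrow(long timeoutMillis) {
        try {
            FakeConnection connection = idle.poll(timeoutMillis, TimeUnit.MILLISECONDS);
            if (connection == null) {
                throw new IllegalStateException("no connection available within " + timeoutMillis + " ms");
            }
            return new Lease(connection);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting for a connection", e);
        }
    }

    int createdCount() { return created.get(); }

    int idleCount() { return idle.size(); }
}
```

Callers would typically write `try (TinyPool.Lease lease = pool.borrow(50)) { ... }`, so the connection goes back to the pool even when the body throws, which is the leak protection a pooling library is expected to make easy.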

Comparison of Connection Pooling Libraries and Configurations

Various connection pooling libraries are available, each offering different features and configurations. Choosing the right library and configuring it appropriately is crucial for optimal performance. The selection depends on the programming language and database used.

- HikariCP (Java): HikariCP is a popular and highly optimized connection pooling library for Java. It is known for its speed and efficiency.

- c3p0 (Java): c3p0 is another well-established Java connection pooling library. It provides a range of features, including connection testing and statement caching.

- PgBouncer (PostgreSQL): PgBouncer is a lightweight connection pooler built specifically for PostgreSQL. It runs as a separate process between the services and the database and offers session, transaction, and statement pooling modes.

- SQLAlchemy (Python): SQLAlchemy is a popular Python SQL toolkit and Object-Relational Mapper (ORM) that includes built-in connection pooling capabilities.

- Node.js Libraries (e.g., pg, mysql2): Node.js developers can use libraries like `pg` (for PostgreSQL) and `mysql2` (for MySQL) which often include connection pooling or support for it.

Key configuration parameters that influence performance:

- Minimum Idle Connections: The minimum number of connections maintained in the pool, even when idle. This ensures connections are readily available when needed.

- Maximum Pool Size: The maximum number of connections the pool can create. This parameter is critical for controlling the resource usage on the database server. Setting it too high can lead to resource exhaustion; too low, and it might limit performance.

- Connection Timeout: The maximum time a client will wait for a connection to become available.

- Idle Timeout: The maximum time a connection can remain idle in the pool before being closed. This helps prevent the accumulation of unused connections.

- Max Lifetime: The maximum time a connection can live, after which it is closed and re-established. This can help prevent issues with long-lived connections that might become stale.

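- Validation Query: A SQL query used to check that a connection is still usable before it is handed to the client. Some libraries can rely on the driver's own validity check instead; HikariCP, for example, recommends leaving its test query unset when the driver supports JDBC4 `Connection.isValid()`.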
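Because every service instance brings its own pool, the maximum pool size has to be judged against the database server's total connection limit. The arithmetic below is a back-of-the-envelope sketch; the class `ConnectionBudget`, the instance counts, and the reserved headroom are illustrative assumptions, and the limit of 100 is used because it is PostgreSQL's usual default for `max_connections`.

```java
/** Back-of-the-envelope sizing for per-instance pools sharing one database server. */
class ConnectionBudget {

    /** Worst case: every instance has filled its pool at the same time. */
    static int worstCaseConnections(int serviceInstances, int maxPoolSizePerInstance) {
        return Math.multiplyExact(serviceInstances, maxPoolSizePerInstance);
    }

    /** Largest per-instance pool size that stays within the server limit minus reserved headroom. */
    static int maxPoolSizeFor(int serverConnectionLimit, int reservedConnections, int serviceInstances) {
        if (serviceInstances <= 0) {
            throw new IllegalArgumentException("serviceInstances must be positive");
        }
        int usable = serverConnectionLimit - reservedConnections;
        return Math.max(0, usable / serviceInstances);
    }

    public static void main(String[] args) {
        // 12 instances with a maximum pool size of 20 can open 240 connections in the worst case.
        System.out.println(worstCaseConnections(12, 20));  // prints 240
        // With a server limit of 100 and 10 connections kept for admin tools, each instance gets 7.
        System.out.println(maxPoolSizeFor(100, 10, 12));   // prints 7
    }
}
```

When the resulting per-instance size is too small for the workload, the usual options are fewer instances per database, a server-side pooler in front of the database, or a higher server limit backed by enough memory.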

Example (conceptual: illustrative of configuration parameters, not a complete program; the URL and credentials are placeholders):

    import com.zaxxer.hikari.HikariConfig;
    import com.zaxxer.hikari.HikariDataSource;

    // Example (conceptual - HikariCP Java configuration)
    HikariConfig config = new HikariConfig();
    config.setJdbcUrl("jdbc:postgresql://localhost:5432/mydatabase");
    config.setUsername("user");
    config.setPassword("password");
    config.setMinimumIdle(5);            // Keep at least 5 connections idle
    config.setMaximumPoolSize(20);       // Allow a maximum of 20 connections
    config.setConnectionTimeout(30000);  // Wait up to 30 seconds for a connection
    config.setIdleTimeout(600000);       // Close idle connections after 10 minutes
    config.setMaxLifetime(1800000);      // Retire connections after 30 minutes
    HikariDataSource ds = new HikariDataSource(config);

Strategies for Optimizing Database Resource Usage

Optimizing database resource usage involves a combination of techniques, including efficient query design, appropriate connection pooling configuration, and monitoring.

- Efficient Query Design: Well-written SQL queries are fundamental for performance. Avoid using `SELECT *`, use indexes effectively, and optimize join operations. Analyze query execution plans to identify and address performance bottlenecks.

- Proper Connection Pooling Configuration: As discussed above, the correct configuration of the connection pool is critical. Monitor connection pool metrics to ensure that the pool size is appropriate for the workload and that connections are being used efficiently.

- Connection Leaks Prevention: Connection leaks occur when connections are not properly released back to the pool. Use `try-with-resources` blocks (Java) or similar mechanisms to ensure connections are closed in all cases. Implement connection timeout mechanisms to prevent applications from holding connections indefinitely.

- Monitoring and Alerting: Implement comprehensive monitoring to track database performance, connection pool metrics (e.g., active connections, idle connections, connection wait times), and query execution times. Set up alerts to proactively identify and address performance issues.

- Caching: Implement caching mechanisms (e.g., using Redis or Memcached) to reduce the load on the database. Cache frequently accessed data to avoid repeated database queries. A server-side query cache can also help where the database still provides one; MySQL, for example, removed its query cache in version 8.0.

- Read Replicas: For read-heavy workloads, use read replicas to distribute the read load across multiple database instances. This reduces the load on the primary database and improves read performance.

- Database Schema Optimization: Optimize the database schema to improve query performance. This includes using appropriate data types, indexing frequently queried columns, and normalizing the data.

- Resource Limits and Throttling: Implement resource limits and throttling mechanisms to prevent individual microservices from consuming excessive database resources and impacting other services. This can involve setting limits on the number of concurrent connections or the rate of database requests.

- Database-Specific Tuning: Tune the database server’s configuration parameters (e.g., buffer pool size, maximum connections, per-connection memory limits) to optimize performance for the specific workload. This may require careful analysis and testing.

- Regular Database Maintenance: Perform regular database maintenance tasks, such as index maintenance, statistics updates, and table optimization, to maintain optimal performance.
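The caching item above is often implemented as a cache-aside lookup: check the cache, fall back to the database on a miss, and store the result. The sketch below uses an in-process map rather than Redis or Memcached, and the class `CacheAside`, its loader function, and the load counter are assumptions added to make the effect measurable.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Function;

/** Cache-aside sketch: the loader (standing in for a database query) runs only on a cache miss. */
class CacheAside<K, V> {
    private final Map<K, V> cache = new ConcurrentHashMap<>();
    private final Function<K, V> loader;
    private final AtomicInteger loads = new AtomicInteger();

    CacheAside(Function<K, V> loader) {
        this.loader = loader;
    }

    /** Returns the cached value, loading and caching it first if it is absent. */
    V get(K key) {
        return cache.computeIfAbsent(key, k -> {
            loads.incrementAndGet();   // counts how often the database would be hit
            return loader.apply(k);
        });
    }

    /** Call after the underlying row changes so the next read reloads it. */
    void invalidate(K key) {
        cache.remove(key);
    }

    int databaseLoads() {
        return loads.get();
    }
}
```

Invalidation is the hard part in practice: a service that caches another service's data needs an event or a time-to-live to learn that the data has changed, which this sketch leaves out.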

Summary

In conclusion, managing shared databases in a microservices world requires careful planning and execution. By understanding the challenges, embracing appropriate design patterns, and selecting the right technologies, developers can unlock the full potential of microservices while ensuring data integrity and system performance. The journey towards a well-architected microservices system demands a focus on data consistency, efficient resource management, and a commitment to adapting to evolving business needs.

Answers to Common Questions

What are the main drawbacks of using a shared database in a microservices architecture?

Shared databases can lead to tight coupling between services, making independent deployments difficult. They also increase the risk of cascading failures, hinder scalability, and complicate data consistency management.

What is the Saga pattern, and why is it relevant to microservices?

The Saga pattern manages a business transaction that spans multiple services as a sequence of local transactions, each paired with a compensating action that undoes it if a later step fails. It is relevant because it allows you to maintain data consistency without relying on distributed transactions (e.g., two-phase commit), which can be problematic in microservices.

When is eventual consistency acceptable in a microservices architecture?

Eventual consistency is acceptable when the business requirements can tolerate a short delay in data propagation. This is common in scenarios like user analytics, recommendation engines, or non-critical data updates where immediate consistency is not essential.

What database technologies are commonly used in microservices?

Both relational databases (e.g., PostgreSQL, MySQL) and NoSQL databases (e.g., MongoDB, Cassandra) are used in microservices. The choice depends on the specific needs of each service, including data structure, read/write patterns, and scalability requirements.