Understanding the environmental impact of your cloud architecture is crucial in today’s world. This guide, How to Measure the Carbon Footprint of Your Cloud Architecture, walks you through a comprehensive process, from defining the footprint to implementing mitigation strategies. It will help you make informed decisions, optimize your cloud resources, and contribute to a more sustainable future.

This exploration delves into the multifaceted nature of cloud carbon footprints, covering diverse aspects such as infrastructure choices, data center operations, network traffic, and data storage. We’ll investigate how different cloud service models contribute to the overall footprint, providing insights into the energy consumption patterns of each. Ultimately, this guide equips you with the knowledge to not only calculate but also effectively reduce your cloud’s environmental impact.

Defining Cloud Architecture Carbon Footprint

The carbon footprint of a cloud architecture encompasses the environmental impact of utilizing cloud services. This impact stems from the energy consumed throughout the entire lifecycle of the cloud infrastructure, from manufacturing and deployment of hardware to its operation and eventual disposal. Understanding this footprint is crucial for informed decision-making, enabling organizations to adopt sustainable practices and reduce their overall environmental impact.

A comprehensive analysis considers various factors, including the energy used in data centers to power servers, the energy required for network transmission of data, and the emissions associated with manufacturing and disposal of the underlying hardware components. This multifaceted approach ensures a holistic assessment of the environmental burden of cloud computing.

Components of Cloud Architecture Carbon Footprint

The carbon footprint of a cloud architecture is a complex metric encompassing several interconnected components. Understanding these elements allows for a more granular assessment of environmental impact.

- Server Hardware: The energy consumption of servers is a primary contributor to the overall footprint. Factors such as processor type, cooling systems, and power efficiency of the hardware directly influence the energy demands. Modern server designs often prioritize energy efficiency through technologies like optimized chipsets and advanced cooling techniques. For instance, a server utilizing highly efficient processors and liquid cooling systems will have a lower carbon footprint compared to one using older, less energy-efficient technology.

- Data Centers: Data centers are the physical infrastructure housing the servers and supporting systems. Their energy consumption is substantial, driven by factors like climate control, cooling systems, and the power demands of supporting infrastructure like networking equipment. The geographic location of the data center significantly impacts the carbon footprint, as regional energy sources and environmental conditions influence the energy mix utilized.

- Network Transmission: Data transmission across networks consumes energy. Factors such as the distance data travels, the transmission protocols used, and the efficiency of the network infrastructure all play a role in the carbon footprint. Optimized network protocols and strategically located data centers minimize energy consumption during data transfer. For example, using fiber optic cables over copper wire significantly reduces energy consumption during transmission.

- Manufacturing and Disposal: The production of server hardware and the subsequent disposal of obsolete equipment both contribute to the overall carbon footprint. Manufacturing processes involve energy consumption, material extraction, and potential emissions. Proper disposal methods, including recycling and responsible material management, are crucial for minimizing the environmental impact associated with the lifecycle of the hardware.

Metrics for Measuring Energy Consumption in Data Centers

Various metrics are employed to measure energy consumption within data centers. These metrics provide crucial insights into energy efficiency and environmental impact.

- Power Usage Effectiveness (PUE): PUE is a key metric used to assess the energy efficiency of a data center. It’s calculated as the ratio of total energy consumed by the data center to the energy consumed by the IT equipment. A lower PUE indicates higher energy efficiency. A PUE of 1.5 signifies that for every unit of energy used by the IT equipment, 0.5 units are consumed by the supporting infrastructure.

- Energy Star Ratings: Energy Star ratings provide standardized assessments of energy efficiency for various equipment and technologies, including server hardware and cooling systems. Products with higher Energy Star ratings generally consume less energy and contribute less to the overall carbon footprint.

- Carbon Intensity: Carbon intensity measures the amount of greenhouse gas emissions per unit of energy consumed. By considering carbon intensity alongside energy consumption, organizations can assess the overall environmental impact of their cloud architecture. This provides a more complete picture beyond simply the energy used.
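
The PUE and carbon intensity metrics above combine naturally into a single estimate. The following is a minimal sketch, assuming you already have metered energy figures; the function names and all numbers are illustrative assumptions, not values from any real facility.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy divided by IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return total_facility_kwh / it_equipment_kwh


def emissions_kg(it_equipment_kwh: float, pue_value: float,
                 carbon_intensity_kg_per_kwh: float) -> float:
    """Scale IT energy by PUE to get facility energy, then apply carbon intensity."""
    return it_equipment_kwh * pue_value * carbon_intensity_kg_per_kwh


# Illustrative numbers only (assumed, not measured).
facility_pue = pue(total_facility_kwh=1500.0, it_equipment_kwh=1000.0)
overhead_kwh = 1500.0 - 1000.0  # energy used by cooling, power distribution, etc.
footprint = emissions_kg(1000.0, facility_pue, carbon_intensity_kg_per_kwh=0.4)

print(f"PUE = {facility_pue:.2f}")
print(f"Overhead = {overhead_kwh:.0f} kWh")
print(f"Estimated emissions = {footprint:.0f} kg CO2e")
```

With these inputs the facility has a PUE of 1.5, matching the example in the PUE bullet: every unit of IT energy carries half a unit of supporting-infrastructure energy.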

Key Factors Influencing Cloud Architecture Environmental Impact

Several factors directly influence the environmental impact of a cloud architecture. A thorough understanding of these factors is essential for developing sustainable cloud strategies.

| Factor | Description |
|---|---|
| Energy Mix | The proportion of renewable energy sources in the electricity mix used to power data centers. |
| Data Center Location | The geographic location of data centers influences the energy mix available and the energy consumed during data transmission. |
| Server Hardware Efficiency | The energy efficiency of server hardware directly impacts the total energy consumption. |
| Network Infrastructure | Optimized network infrastructure minimizes energy consumption during data transmission. |
| Data Center Design | Efficient data center design minimizes energy consumption for cooling and other support systems. |

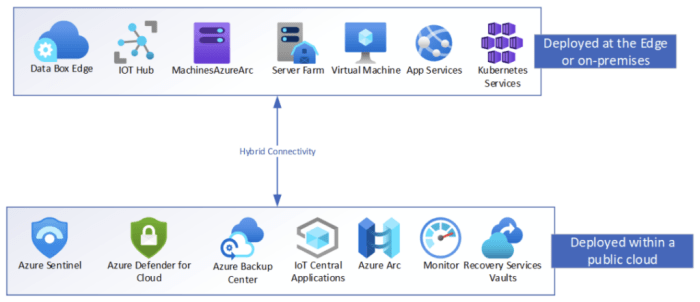

Identifying Cloud Service Models and Their Footprint

Understanding the carbon footprint of cloud services is crucial for making informed decisions about sustainability. Different cloud service models – Infrastructure as a Service (IaaS), Platform as a Service (PaaS), and Software as a Service (SaaS) – exhibit varying levels of energy consumption and environmental impact. This section delves into the specifics of each model, comparing their energy consumption patterns and the factors influencing their respective footprints.

Comparing Carbon Footprint Differences Across Models

The energy consumption and environmental impact of cloud service models are not uniform. Factors such as virtualization, server density, and data transfer significantly influence the carbon footprint of each model. IaaS, PaaS, and SaaS models differ in their level of abstraction and control, which directly affects the energy consumed and the overall environmental impact.

Energy Consumption Patterns of Each Model

IaaS offers the most granular control, allowing users to manage virtual machines (VMs), storage, and networks. This granular control, while beneficial for customization, often translates to higher energy consumption if not optimized properly. PaaS abstracts away the underlying infrastructure, allowing developers to focus on application logic. This reduces the direct control users have over hardware, potentially leading to more efficient resource allocation.

SaaS provides the highest level of abstraction, where the user interacts with the application through a front-end interface, typically with the least direct control over the underlying infrastructure. This model often relies on economies of scale, enabling efficient resource utilization across numerous users. Each model presents a unique set of energy consumption characteristics that contribute to its overall environmental impact.

Factors Influencing Carbon Footprint of Each Service Model

Several key factors significantly influence the carbon footprint of each service model. Virtualization, the process of creating multiple virtual machines on a single physical server, plays a critical role in IaaS. Efficient virtualization can lead to reduced energy consumption compared to running individual physical servers. However, the efficiency of virtualization depends on the specific virtualization technology and the management of virtual resources.

Server density, the number of servers per unit area, is another important factor. Higher server density, in theory, reduces the energy consumption per unit of computing power, but this depends on factors such as cooling systems and the efficiency of the hardware. Data transfer, the movement of data between different components of the cloud infrastructure, also impacts the carbon footprint.

The energy consumed in transmitting data across networks, and the location of data centers, are significant contributors to the overall footprint.

Environmental Impact Comparison Table

| Cloud Service Model | Energy Consumption Pattern | Factors Influencing Footprint | Environmental Impact |
|---|---|---|---|
| IaaS | High, dependent on resource utilization and optimization | Virtualization efficiency, server density, data transfer, configuration | Moderate to High |
| PaaS | Moderate, influenced by application complexity and resource allocation | Server density, virtualization, data transfer, application design | Moderate |
| SaaS | Low, leveraging economies of scale | Data center efficiency, server density, network infrastructure | Low |

The environmental impact of each cloud service model is a complex interplay of various factors. A thorough assessment requires considering not only the energy consumption of the hardware but also the energy used for cooling, data transmission, and the lifecycle of the infrastructure.

Evaluating Infrastructure Impact

Understanding the energy consumption and carbon emissions associated with cloud infrastructure is crucial for accurate carbon footprint calculations. This section delves into the impact of server hardware choices, the role of virtualization, and various energy-efficient technologies, providing insights into reducing the environmental footprint of cloud architectures.

The selection of server hardware directly influences the energy consumption and subsequent carbon emissions of a cloud infrastructure. Factors such as processing power, memory capacity, and cooling requirements significantly impact energy usage. Optimizing these choices with energy-efficient hardware and virtualization techniques is essential for minimizing the overall environmental impact.

Impact of Server Hardware Choices

Server hardware choices play a pivotal role in the energy footprint of a cloud infrastructure. Different server types have varying energy efficiency ratings, influencing the overall carbon emissions. Understanding these differences is crucial for making informed decisions regarding server selection.

- Different server types exhibit diverse energy consumption profiles. For example, high-performance computing (HPC) servers often demand significantly more energy than general-purpose servers, leading to a larger carbon footprint. Conversely, servers optimized for specific tasks, such as database processing, might exhibit lower energy consumption than general-purpose servers. Careful consideration of the workload and specific needs is essential for selecting the most appropriate server type.

- Energy efficiency at the facility level is often expressed in terms of power usage effectiveness (PUE). Note that PUE describes the data center hosting the servers rather than an individual server. A lower PUE indicates less overhead. For instance, a facility with a PUE of 1.2 draws 20% more energy than its IT equipment alone, whereas a facility with a PUE of 1.5 draws 50% more. Running servers in facilities with lower PUE values therefore reduces the total energy consumed for the same computing work.

Role of Virtualization in Reducing Energy Consumption

Virtualization technology plays a vital role in reducing energy consumption within cloud infrastructure. By consolidating multiple virtual machines (VMs) onto a single physical server, virtualization reduces the overall energy demand for hardware.

- Virtualization significantly enhances server utilization. A single physical server can host multiple virtual servers, allowing for resource sharing and optimized utilization. This reduced need for additional physical servers translates directly into decreased energy consumption and lower carbon emissions.

- Virtualization technology enables dynamic resource allocation. This allows the system to adapt to fluctuating demands, preventing unnecessary energy consumption during periods of low activity. By adjusting the resources allocated to virtual machines based on real-time demands, the system optimizes energy utilization and minimizes waste.
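
To make the consolidation effect concrete, here is a minimal sketch that packs virtual machines onto as few hosts as possible using a first-fit decreasing heuristic. This is one simple placement strategy chosen for illustration, not how any particular hypervisor or cloud scheduler works; the vCPU demands and the host capacity are assumed values.

```python
def consolidate(vm_cpu_demands, host_capacity):
    """Place VM CPU demands onto hosts using first-fit decreasing.

    Returns a list of hosts, each a list of the VM demands placed on it.
    """
    hosts = []
    # Placing the largest VMs first tends to leave fewer unusable gaps.
    for demand in sorted(vm_cpu_demands, reverse=True):
        if demand > host_capacity:
            raise ValueError("a VM demand exceeds the capacity of one host")
        for host in hosts:
            if sum(host) + demand <= host_capacity:
                host.append(demand)
                break
        else:
            # No existing host has room, so power on another one.
            hosts.append([demand])
    return hosts


vm_demands = [4, 2, 2, 8, 1, 6, 3, 2]  # vCPUs per VM (hypothetical workload)
placement = consolidate(vm_demands, host_capacity=16)

print(f"Dedicated servers needed without virtualization: {len(vm_demands)}")
print(f"Hosts needed after consolidation: {len(placement)}")
for index, host in enumerate(placement, start=1):
    print(f"  host {index}: {host} ({sum(host)}/16 vCPUs)")
```

Fewer powered-on hosts means less idle power draw, which is where most of the saving from consolidation comes from. Real schedulers also weigh memory, I/O, and failure domains, which this sketch ignores.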

Energy-Efficient Server Technologies

Numerous energy-efficient server technologies are emerging, significantly reducing the carbon footprint of cloud computing. These technologies incorporate innovative approaches to power management and cooling.

Examples of energy-efficient server technologies include:

- High-efficiency processors with low power consumption, like Intel’s Xeon Scalable processors designed for lower power consumption while maintaining high performance. These processors contribute significantly to the overall energy efficiency of servers.

- Advanced cooling systems, such as liquid cooling, which significantly reduce the energy required for server cooling compared to traditional air cooling. Liquid cooling solutions have been implemented in large data centers, achieving substantial energy savings.

- Server hardware with optimized power supply units (PSUs), which are designed to deliver power efficiently and reduce energy loss. Efficient PSUs are integral to minimizing energy consumption in servers.

These advancements in server technology lead to reduced energy consumption and, consequently, lower carbon emissions associated with cloud infrastructure operations. For instance, a data center implementing liquid cooling could achieve a significant reduction in energy usage and associated emissions, compared to traditional air cooling methods. This translates to a substantial improvement in the overall environmental impact of cloud computing.

Assessing Data Center Operations

Data centers are the physical infrastructure underpinning cloud computing. Understanding their operational energy consumption is crucial to accurately assessing the carbon footprint of a cloud architecture. This section delves into the energy demands of data center cooling and power distribution, examines sustainable design strategies, and explores the impact of renewable energy sources. Optimizing data center layout for energy efficiency is also addressed.

Data center operations significantly contribute to the overall carbon footprint of cloud services. A comprehensive analysis requires a thorough understanding of the energy consumption associated with various components within these facilities. This involves considering the energy requirements for cooling, power distribution, and the overall facility infrastructure.

Energy Consumption in Data Centers

Data centers require substantial energy to maintain optimal operating temperatures for their servers and other equipment. Power distribution systems, including transformers and cabling, also consume energy. Cooling systems, often relying on large air conditioning units or liquid cooling technologies, are significant energy consumers. The specific energy consumption varies greatly depending on the scale, design, and technology used within each data center.

Sustainable Data Center Design Strategies

Implementing sustainable design strategies is crucial to minimizing the environmental impact of data centers. These strategies often focus on minimizing energy consumption through efficient equipment, optimized layouts, and environmentally friendly materials.

- Efficient Equipment Selection: Choosing servers and other equipment with high energy efficiency ratings (e.g., Energy Star certifications) is a primary step. Modern servers are designed with improved power management capabilities. Employing specialized hardware for tasks like virtualization or caching can also reduce overall energy demands.

- Optimized Data Center Layout: Strategically positioning servers and other equipment can significantly improve energy efficiency. Optimizing the layout involves minimizing distances between equipment and optimizing airflow patterns to ensure efficient cooling. For instance, arranging servers in rows or clusters can enhance cooling efficiency and reduce energy consumption compared to scattered configurations.

- Renewable Energy Integration: Leveraging renewable energy sources, such as solar or wind power, to power data centers can significantly reduce their carbon footprint. This reduces reliance on traditional energy sources and aligns with environmental sustainability goals.

Impact of Renewable Energy Sources

Integrating renewable energy sources into data center operations is a critical step towards minimizing the carbon footprint. The use of solar, wind, or hydro power reduces reliance on fossil fuels and lowers the carbon intensity of the electricity consumed, even when the amount of energy used stays the same. This transition not only reduces carbon emissions but also enhances the data center’s environmental profile.

- Case Study: Many data centers are now incorporating solar panels on their roofs, wind turbines, or partnerships with renewable energy providers. This reduces their reliance on the grid and aligns with sustainability goals. For example, Google’s data centers are increasingly utilizing on-site renewable energy sources to power their operations.

Optimizing Data Center Layout for Energy Efficiency

Data center layout significantly impacts energy efficiency. A well-designed layout minimizes the distances between equipment, optimizes airflow, and facilitates efficient cooling.

- Airflow Management: Strategic placement of servers and equipment ensures proper airflow, reducing the need for excessive cooling. Proper ventilation and heat dissipation systems play a crucial role in maintaining optimal temperatures. For instance, server racks can be arranged in alternating hot and cold aisles so that warm exhaust air does not mix with cool intake air, which improves cooling efficiency.

- Cooling Strategies: Data centers can implement various cooling strategies, including free cooling, which utilizes outside air when temperatures are suitable, or liquid cooling, which offers a higher efficiency than traditional air cooling. Implementing these strategies can drastically reduce energy consumption compared to traditional air-cooling systems.

Measuring Network Traffic

Network traffic, a crucial component of cloud architectures, significantly contributes to the overall carbon footprint. Understanding the energy consumption associated with data transmission is essential for effective carbon footprint reduction strategies. This section delves into the energy implications of network traffic and presents optimization techniques.

Energy Consumption of Data Transmission

Data transmission over networks consumes substantial energy. The energy expenditure varies based on factors such as the distance data travels, the network protocols used, and the amount of data being transferred. Each hop in a network’s path, from origin to destination, involves energy consumption for routing, processing, and signal amplification. This energy consumption is often overlooked in assessing the total carbon footprint of cloud architectures.

Understanding these intricacies is paramount for informed decisions.

Impact of Network Protocols on Energy Usage

Different network protocols have varying energy implications. Protocols like TCP (Transmission Control Protocol) offer reliable data transfer but often involve more overhead compared to UDP (User Datagram Protocol), which is less reliable but more efficient in terms of energy consumption for simple data packets. The choice of protocol directly influences the energy expenditure of network operations. For instance, TCP’s acknowledgement mechanisms and retransmission procedures can lead to increased energy use compared to UDP’s less rigorous approach.

The appropriate protocol selection for specific data types is a critical aspect of optimizing energy usage.
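
One part of the TCP versus UDP difference is easy to quantify: per-packet header overhead. The sketch below compares the share of each packet taken up by headers, using the minimum header sizes (20 bytes for IPv4, 20 bytes for TCP, 8 bytes for UDP). Treating bytes on the wire as a rough proxy for transmission energy is an assumption, and the sketch deliberately leaves out TCP handshakes, acknowledgements, and retransmissions, which add further traffic.

```python
IPV4_HEADER = 20  # bytes, minimum size without options
TCP_HEADER = 20   # bytes, minimum size without options
UDP_HEADER = 8    # bytes, fixed size


def overhead_fraction(payload_bytes: int, transport_header: int) -> float:
    """Fraction of an IPv4 packet occupied by IP and transport headers."""
    if payload_bytes <= 0:
        raise ValueError("payload must be positive")
    total = payload_bytes + transport_header + IPV4_HEADER
    return (transport_header + IPV4_HEADER) / total


for payload in (64, 512, 1400):
    tcp = overhead_fraction(payload, TCP_HEADER)
    udp = overhead_fraction(payload, UDP_HEADER)
    print(f"{payload:>5} B payload: TCP {tcp:.1%} headers, UDP {udp:.1%} headers")
```

The gap matters most for small payloads. For large transfers the header share shrinks for both protocols, and reliability requirements usually decide the choice.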

Network Traffic Optimization Techniques

Optimizing network traffic is vital for reducing the carbon footprint associated with cloud architecture. Techniques for optimizing network traffic include minimizing data transfer volume, employing efficient compression algorithms, and utilizing content delivery networks (CDNs).

Table of Network Optimization Techniques and Impact

| Optimization Technique | Description | Impact on Carbon Footprint | Example |
|---|---|---|---|
| Data Compression | Reducing the size of data packets before transmission. | Significant reduction in transmission energy. | Compressing images or videos before uploading to a cloud storage service. |
| Content Delivery Networks (CDNs) | Distributing content across multiple servers geographically closer to users. | Reduces data transmission distance and latency. | Using a CDN to deliver static website content. This reduces the need for data to travel long distances. |
| Network Caching | Storing frequently accessed data closer to users. | Reduces the need to retrieve data from remote locations. | Caching frequently accessed files in a cloud storage system, reducing network requests. |
| Efficient Routing Protocols | Optimizing the path data takes through the network. | Reduces the number of hops and energy consumption. | Employing routing protocols that consider factors like network congestion and available bandwidth. |
| Traffic Shaping | Prioritizing and managing network traffic. | Directs high-priority data to shorter paths. | Prioritizing critical application data over less important data, optimizing network efficiency. |
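
As an illustration of the data compression technique, the following sketch compresses a payload with Python's standard `zlib` module and reports how much smaller the transmitted data would be. The sample payload is made up and highly repetitive, so the ratio shown is not representative of real traffic; already-compressed formats such as JPEG or most video gain little.

```python
import zlib


def compress_payload(data: bytes, level: int = 6):
    """Compress data with zlib and return (compressed_bytes, size_ratio)."""
    if not data:
        raise ValueError("nothing to compress")
    compressed = zlib.compress(data, level)
    return compressed, len(compressed) / len(data)


# Hypothetical log-like payload; repetition makes it compress very well.
sample = b'{"region": "eu-west", "cpu": 0.42, "status": "ok"}\n' * 200
compressed, ratio = compress_payload(sample)

# The receiver must be able to restore the original bytes exactly.
restored = zlib.decompress(compressed)

print(f"original:   {len(sample)} bytes")
print(f"compressed: {len(compressed)} bytes ({ratio:.1%} of original)")
print(f"round trip intact: {restored == sample}")
```

Compression costs CPU time on both ends, so it pays off mainly when the data is compressible and travels far or often.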

Analyzing Data Storage and Management

Data storage plays a crucial role in the carbon footprint of a cloud architecture. The energy consumed by data storage devices, coupled with the impact of data redundancy strategies, significantly contributes to the overall environmental impact. Understanding these factors is essential for designing energy-efficient cloud solutions.

Data storage technologies, ranging from traditional hard disk drives (HDDs) to more advanced solid-state drives (SSDs), exhibit varying energy consumption profiles. The choice of storage technology significantly influences the overall carbon footprint of the cloud infrastructure. Optimizing data storage strategies and implementing energy-efficient solutions are paramount to mitigating this impact.

Energy Consumption of Storage Technologies

Different data storage technologies have different energy consumption characteristics. Hard disk drives (HDDs) typically consume more energy than solid-state drives (SSDs) during both read and write operations. The difference stems from the fundamental operational principles of each technology. HDDs use mechanical components, spinning platters and moving heads, that require more power for movement and data retrieval, while SSDs rely on electronic components that are generally more energy-efficient.

The energy consumption also varies significantly with the storage capacity and the access patterns. While SSDs offer lower power consumption per unit of data, they can still consume substantial amounts of energy at high-throughput rates.

Impact of Data Redundancy

Data redundancy, a crucial aspect of data availability and fault tolerance, also has a direct impact on energy consumption. Redundant data copies require additional storage space and, consequently, higher energy consumption. This increase in energy consumption stems from the need to maintain multiple copies of the data, which translates into higher power requirements for both storage and potential data replication processes.

For example, a cloud provider implementing a three-node architecture with replicated data across these nodes increases the total energy consumption compared to a single-node system.
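
The effect of replication can be approximated with simple arithmetic. The sketch below assumes storage energy scales linearly with the volume of data held, and the power figure of 5 W per terabyte is a hypothetical placeholder rather than a measured value for any device or provider.

```python
def storage_energy_kwh(data_tb: float, replication_factor: int,
                       watts_per_tb: float, hours: float) -> float:
    """Energy to keep data online, counting every replica as stored data."""
    if replication_factor < 1:
        raise ValueError("replication factor must be at least 1")
    stored_tb = data_tb * replication_factor
    return stored_tb * watts_per_tb * hours / 1000.0  # Wh -> kWh


HOURS_PER_MONTH = 24 * 30
WATTS_PER_TB = 5  # hypothetical average draw, including drive and enclosure

single = storage_energy_kwh(100, 1, WATTS_PER_TB, HOURS_PER_MONTH)
triple = storage_energy_kwh(100, 3, WATTS_PER_TB, HOURS_PER_MONTH)

print(f"single copy:  {single:.0f} kWh per month")
print(f"three copies: {triple:.0f} kWh per month")
print(f"extra energy for redundancy: {triple - single:.0f} kWh per month")
```

The estimate ignores the network and CPU cost of keeping replicas in sync, so the real overhead of a three-node design is likely somewhat higher than a plain factor of three on storage.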

Energy-Efficient Data Storage Solutions

Several solutions can significantly reduce the energy footprint of data storage in cloud architectures. One key strategy involves leveraging SSDs where appropriate. Furthermore, implementing efficient data compression algorithms reduces the amount of data stored, thus minimizing the energy consumption of the storage devices.

Another approach is employing flash memory with NVMe interfaces. Flash memory, a type of non-volatile memory, is generally more energy-efficient than traditional HDDs, and NVMe allows high-speed data access, which can shorten the time devices spend active for a given workload.

Optimizing Data Storage Strategies

Optimizing data storage strategies is critical to minimizing the carbon footprint. Strategies include:

- Data Compression: Employing data compression algorithms can significantly reduce the amount of data stored, thus minimizing energy consumption. The compression process reduces the overall storage capacity needed, leading to lower power requirements for the storage infrastructure.

- Data Deduplication: Data deduplication techniques identify and eliminate redundant data blocks, significantly reducing storage requirements and lowering energy consumption.

- Thin Provisioning: Thin provisioning allocates only the necessary storage space, preventing over-provisioning and reducing energy consumption by optimizing storage capacity utilization.

- Storage Tiers: Implementing a tiered storage system allows for the efficient movement of data to different storage tiers (e.g., cold storage, hot storage) based on access frequency. This strategy minimizes energy consumption by storing frequently accessed data in high-performance storage tiers, and less frequently accessed data in lower-cost and lower-power storage tiers.
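
Of the strategies above, deduplication is the easiest to show in a few lines. The following is a minimal sketch of fixed-size block deduplication using SHA-256 digests as block identifiers. Production systems typically use variable-size chunking and persistent indexes; the 4 KiB block size and the sample data here are assumptions chosen for illustration.

```python
import hashlib


def deduplicate(data: bytes, block_size: int = 4096):
    """Split data into fixed-size blocks and store each unique block once.

    Returns (store, recipe): store maps digest -> block, recipe lists the
    digests in order so the original data can be rebuilt.
    """
    store = {}
    recipe = []
    for offset in range(0, len(data), block_size):
        block = data[offset:offset + block_size]
        digest = hashlib.sha256(block).hexdigest()
        store.setdefault(digest, block)  # keep only the first copy
        recipe.append(digest)
    return store, recipe


def rebuild(store, recipe) -> bytes:
    """Reassemble the original data from the block store and recipe."""
    return b"".join(store[digest] for digest in recipe)


# Hypothetical data with many repeated blocks.
data = (b"A" * 4096) * 6 + (b"B" * 4096) * 3 + b"C" * 100
store, recipe = deduplicate(data)
stored_bytes = sum(len(block) for block in store.values())

print(f"logical size: {len(data)} bytes in {len(recipe)} blocks")
print(f"stored size:  {stored_bytes} bytes in {len(store)} unique blocks")
print(f"rebuild matches original: {rebuild(store, recipe) == data}")
```

Less stored data means fewer drives kept powered, at the cost of hashing work and an index that must itself be stored.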

Calculating Carbon Emissions

Accurate calculation of carbon emissions is crucial for understanding the environmental impact of cloud architectures. Precise estimations empower informed decisions regarding resource optimization and sustainable practices within cloud deployments. A holistic approach, considering various factors, is essential to derive meaningful results.

Understanding the methodologies for calculating carbon emissions allows businesses to make informed choices about their cloud infrastructure and minimize their environmental footprint. This includes selecting appropriate cloud providers and deploying resource-efficient strategies.

Methods for Calculating Carbon Emissions

Several methods exist for calculating carbon emissions associated with cloud architectures. These methods often rely on energy consumption data, emission factors, and estimations of workload intensity. A common approach involves aggregating data across various stages of the cloud lifecycle, including provisioning, operation, and disposal.

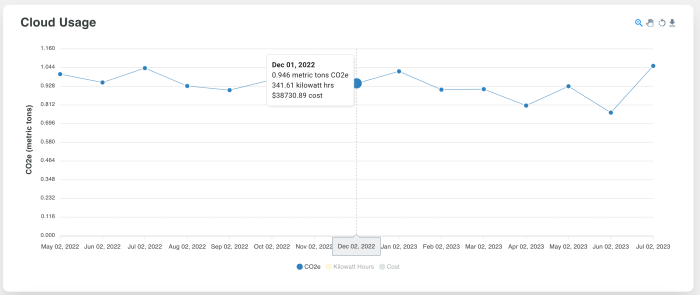

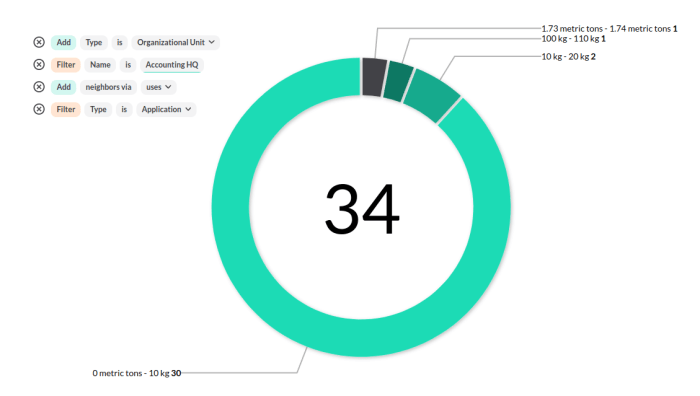

Carbon Footprint Calculators and Tools

Numerous tools and calculators are available to assist in estimating the carbon footprint of cloud architectures. These tools typically require input data on resource utilization, infrastructure specifications, and energy mix characteristics. Examples include those offered by cloud providers, third-party assessment platforms, and specialized software solutions. These tools often employ predefined emission factors specific to various regions and power sources.

Estimating Energy Consumption

Estimating energy consumption is a vital step in calculating carbon emissions. This involves analyzing workload patterns and resource utilization. For instance, a computationally intensive application running on a virtual machine (VM) will consume more energy compared to a less demanding task. Factors such as the type of processor, memory capacity, and network traffic significantly impact energy consumption.

Detailed monitoring tools and historical usage data can provide insights into workload-related energy consumption.
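
One common way to turn utilization data into an energy estimate is a linear power model, in which a server draws a fixed idle power plus an amount proportional to CPU utilization. The sketch below uses that model as a simplifying assumption; the idle and peak wattages and the utilization samples are hypothetical, and real hardware is not perfectly linear.

```python
def server_power_watts(cpu_utilization: float, idle_watts: float,
                       max_watts: float) -> float:
    """Linear power model: idle draw plus a share of the idle-to-peak range."""
    if not 0.0 <= cpu_utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    return idle_watts + (max_watts - idle_watts) * cpu_utilization


def energy_kwh(utilization_samples, idle_watts: float, max_watts: float,
               sample_hours: float = 1.0) -> float:
    """Sum energy over evenly spaced utilization samples."""
    watt_hours = sum(
        server_power_watts(u, idle_watts, max_watts) * sample_hours
        for u in utilization_samples
    )
    return watt_hours / 1000.0


# Hypothetical hourly CPU utilization for a light task and a heavy one.
light_task = [0.1, 0.1, 0.2, 0.1]
heavy_task = [0.8, 0.9, 0.9, 0.8]

light_kwh = energy_kwh(light_task, idle_watts=100.0, max_watts=300.0)
heavy_kwh = energy_kwh(heavy_task, idle_watts=100.0, max_watts=300.0)

print(f"light workload: {light_kwh:.2f} kWh over {len(light_task)} hours")
print(f"heavy workload: {heavy_kwh:.2f} kWh over {len(heavy_task)} hours")
```

Note how much of the light workload's energy is idle draw. This is why low utilization is costly and why consolidation helps.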

Steps for Calculating Carbon Footprint

A structured approach simplifies the process of calculating a cloud architecture’s carbon footprint. This involves gathering necessary data, applying appropriate emission factors, and performing calculations. The following table outlines the crucial steps.

| Step | Description |
|---|---|
| 1. Data Collection | Gather information about resource utilization (CPU, memory, storage), infrastructure specifications (servers, network components), and energy mix details. |
| 2. Emission Factor Determination | Identify appropriate emission factors based on the region and energy mix of the cloud provider’s data centers. |
| 3. Energy Consumption Estimation | Estimate energy consumption based on workload and resource utilization, potentially using historical data and performance metrics. Consider factors like server type, operating system, and software configurations. |
| 4. Carbon Emission Calculation | Multiply the estimated energy consumption by the corresponding emission factor to determine the carbon emissions. For example, 1 kWh of electricity from a coal-fired power plant has a different emission factor than 1 kWh from a renewable source. |
| 5. Reporting and Analysis | Summarize the results, identify areas for improvement, and create reports detailing the carbon footprint of the cloud architecture. |
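
The steps in the table can be sketched as a small pipeline that takes per-service energy estimates, applies a regional emission factor, and ranks services by footprint. Everything here is a hedged illustration: the `UsageRecord` type, the service and region names, and the emission factors are hypothetical, and real factors should come from your provider or a published grid dataset.

```python
from dataclasses import dataclass


@dataclass
class UsageRecord:
    service: str       # step 1: which workload the usage belongs to
    region: str        # step 1: where it runs
    energy_kwh: float  # step 3: estimated energy for the reporting period


# Step 2: hypothetical emission factors in kg CO2e per kWh (not real grid data).
EMISSION_FACTORS = {"region-a": 0.7, "region-b": 0.1}


def footprint_by_service(records, factors):
    """Steps 4 and 5: compute emissions per service, largest first."""
    totals = {}
    for record in records:
        if record.region not in factors:
            raise KeyError(f"no emission factor for region {record.region!r}")
        kg = record.energy_kwh * factors[record.region]  # energy x factor
        totals[record.service] = totals.get(record.service, 0.0) + kg
    return dict(sorted(totals.items(), key=lambda item: item[1], reverse=True))


records = [
    UsageRecord("api", "region-a", 100.0),
    UsageRecord("api", "region-b", 100.0),
    UsageRecord("batch", "region-a", 50.0),
    UsageRecord("storage", "region-b", 200.0),
]

report = footprint_by_service(records, EMISSION_FACTORS)
for service, kg in report.items():
    print(f"{service:<8} {kg:7.1f} kg CO2e")
print(f"{'total':<8} {sum(report.values()):7.1f} kg CO2e")
```

In this made-up data the storage service uses the most energy but has the smallest footprint, because it runs in the lower-carbon region. Ranking by emissions rather than by energy is what surfaces that kind of difference.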

Implementing Mitigation Strategies

Reducing the carbon footprint of a cloud architecture involves a multifaceted approach encompassing various strategies. Effective mitigation requires a commitment to sustainable practices throughout the entire cloud lifecycle, from infrastructure selection to data management. By understanding and implementing these strategies, organizations can significantly decrease their environmental impact while achieving cost savings and enhancing their brand image.

Implementing sustainable practices is not just an environmental imperative; it’s also a strategic business decision. By minimizing energy consumption and optimizing resource utilization, organizations can improve their operational efficiency and reduce costs associated with energy expenditures. This, in turn, can lead to a more resilient and competitive position in the market.

Energy-Efficient Configurations

Optimizing cloud infrastructure for energy efficiency involves careful consideration of hardware specifications, virtualization techniques, and resource allocation strategies. Utilizing server virtualization can significantly reduce energy consumption by consolidating multiple virtual machines onto a single physical server. Selecting hardware with high energy efficiency ratings is crucial. This includes processors and cooling systems designed for reduced power consumption.

Sustainable Practices in Cloud Computing

Sustainable practices in cloud computing extend beyond energy efficiency. Employing renewable energy sources for data center operations is a significant step towards a more environmentally friendly footprint. Organizations can partner with renewable energy providers or invest in on-site renewable energy generation. Another sustainable practice is optimizing data center cooling systems to minimize energy waste. This includes employing advanced cooling technologies and optimizing the layout of the data center to maximize airflow.

Renewable Energy Integration

Leveraging renewable energy sources, such as solar, wind, or hydro power, for data center operations is a crucial step towards sustainability. Many data centers are now exploring partnerships with renewable energy providers, or are investing in on-site renewable energy generation. This not only reduces reliance on fossil fuels but also contributes to a more environmentally friendly image for the organization. Examples of successful renewable energy integrations include data centers powered by solar farms or wind turbines.

Data Center Optimization

Efficient data center design and operation are critical to minimizing energy consumption. Implementing advanced cooling systems, such as free cooling or liquid cooling, can significantly reduce energy needs. Optimizing server placement and airflow within the data center can also lead to significant energy savings. Properly maintaining and monitoring the cooling systems is equally important to prevent unexpected energy spikes.

Network Optimization

Optimizing network traffic is essential for minimizing energy consumption. Employing techniques like content delivery networks (CDNs) and reducing unnecessary data transfers can significantly reduce energy usage in data transmission. Furthermore, using efficient routing protocols and optimizing network bandwidth utilization can reduce network energy consumption.
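One concrete way to reduce the volume of data transferred is to compress payloads before sending them. The sketch below uses Python's standard `gzip` module on a made-up JSON payload to show how the byte count can be compared before and after. The payload is hypothetical, and actual savings depend on the data and on the CPU cost of compressing it.

```python
import gzip
import json

# Hypothetical payload: repetitive records, as often seen in API responses or logs.
records = [{"service": "api", "region": "example-region", "status": "ok"}] * 500
raw = json.dumps(records).encode("utf-8")

compressed = gzip.compress(raw)
restored = gzip.decompress(compressed)

# Compression is lossless: the receiver recovers the exact original bytes.
assert restored == raw

saved = 1 - len(compressed) / len(raw)
print(f"{len(raw)} bytes -> {len(compressed)} bytes ({saved:.0%} fewer bytes sent)")
```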

Mitigation Strategies Effectiveness

| Mitigation Strategy | Effectiveness (High/Medium/Low) | Description |
|---|---|---|
| Server Virtualization | High | Consolidating multiple virtual machines onto a single physical server, reducing the overall energy consumption. |
| Energy-Efficient Hardware | High | Using processors and cooling systems designed for reduced power consumption. |
| Renewable Energy Integration | High | Using renewable energy sources like solar, wind, or hydro for data center operations. |
| Data Center Optimization | Medium | Implementing advanced cooling systems and optimizing server placement and airflow within the data center. |
| Network Optimization | Medium | Employing techniques like CDNs and optimizing network bandwidth utilization. |
| Data Storage Optimization | Medium | Employing techniques like data compression and deduplication to reduce storage space requirements. |
| Cloud Service Selection | Medium | Choosing cloud services with better energy efficiency ratings. |

Benchmarking and Reporting

Accurate assessment of a cloud architecture’s carbon footprint necessitates a robust benchmarking and reporting framework. This allows organizations to track progress in reducing emissions, identify areas for improvement, and demonstrate their commitment to sustainability. Effective benchmarking provides a baseline for comparison, facilitating the establishment of realistic targets and enabling continuous improvement in environmental performance.

A comprehensive approach to benchmarking and reporting involves not only quantifying emissions but also establishing clear reporting procedures. Transparency in disclosing carbon footprint data is crucial for building trust with stakeholders, including investors, customers, and the public.

Importance of Benchmarking

Benchmarking provides a crucial baseline for measuring progress in reducing cloud architecture’s environmental impact. By comparing current emissions to established benchmarks, organizations can identify areas requiring improvement and track the effectiveness of implemented mitigation strategies. This data-driven approach allows for informed decision-making, prioritizing actions with the highest potential for emission reduction. For instance, a cloud provider might benchmark its energy consumption per gigabyte of data stored against industry averages to pinpoint areas for optimization.
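The energy-per-gigabyte comparison mentioned above can be sketched as follows. The storage tiers, their figures, and the benchmark value are placeholders chosen for illustration only; a real comparison would use metered energy data and a published reference figure.

```python
def energy_intensity(energy_kwh, data_stored_gb):
    """Energy used per gigabyte stored, in kWh/GB."""
    if data_stored_gb <= 0:
        raise ValueError("data_stored_gb must be positive")
    return energy_kwh / data_stored_gb


def gap_to_benchmark(value, benchmark):
    """Relative gap to the benchmark; positive means more energy per GB."""
    return (value - benchmark) / benchmark


# Hypothetical monthly figures per storage tier: (energy in kWh, data stored in GB).
tiers = {
    "hot": (900.0, 5_000.0),
    "warm": (400.0, 8_000.0),
    "archive": (100.0, 20_000.0),
}
BENCHMARK_KWH_PER_GB = 0.08  # placeholder, not a real industry average

gaps = {
    name: gap_to_benchmark(energy_intensity(kwh, gb), BENCHMARK_KWH_PER_GB)
    for name, (kwh, gb) in tiers.items()
}

# Worst performers first, so they can be prioritized for optimization.
for name, gap in sorted(gaps.items(), key=lambda item: item[1], reverse=True):
    print(f"{name}: {gap:+.0%} relative to benchmark")
```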

Setting Targets for Emission Reduction

Establishing realistic and measurable targets for emission reduction is essential for effective climate action. Targets should be specific, measurable, achievable, relevant, and time-bound (SMART). For example, a target might be to reduce energy consumption per unit of computing capacity by 15% within the next three years. These targets should be aligned with broader organizational sustainability goals and be periodically reviewed and updated to reflect evolving technologies and best practices.

Organizations should also consider setting targets for the entire lifecycle of their cloud services, from manufacturing to decommissioning.
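To make the 15% over three years example trackable, the total can be broken into yearly milestones. The sketch below assumes a constant yearly percentage reduction that compounds to the overall target; this is one possible schedule, and a straight-line schedule would be equally valid. The baseline value of 100 is a hypothetical index, not real data.

```python
def required_annual_reduction(total_reduction, years):
    """Constant yearly reduction rate that compounds to the total reduction."""
    if not 0 <= total_reduction < 1 or years <= 0:
        raise ValueError("expected 0 <= total_reduction < 1 and years > 0")
    return 1 - (1 - total_reduction) ** (1 / years)


def target_for_year(baseline, total_reduction, years, year):
    """Value the metric should reach by the end of the given year."""
    rate = required_annual_reduction(total_reduction, years)
    return baseline * (1 - rate) ** year


rate = required_annual_reduction(0.15, 3)
print(f"required yearly reduction: {rate:.2%}")

# Hypothetical baseline of 100 units of energy per unit of computing capacity.
for year in range(1, 4):
    print(f"year {year}: {target_for_year(100.0, 0.15, 3, year):.1f}")
```

Comparing each year's measured value against its milestone shows early whether the target is still within reach.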

Reporting and Transparency Practices

Transparent reporting on cloud architecture carbon footprints is crucial for building trust and demonstrating environmental responsibility. This includes providing clear and concise data on emissions, outlining methodologies used for calculation, and highlighting mitigation strategies. Cloud providers should disclose their carbon footprint data on a regular basis, such as annually, in a standardized format. Furthermore, they should proactively engage with stakeholders to address concerns and promote transparency.

Continuous Monitoring and Improvement

Continuous monitoring and improvement are essential components of a successful cloud sustainability strategy. Regular monitoring of key metrics, such as energy consumption and network traffic, allows for the identification of inefficiencies and opportunities for optimization. Analyzing this data enables the proactive implementation of adjustments to minimize environmental impact. This proactive approach to monitoring and improvement is vital for adapting to changing technologies and evolving environmental regulations.

Feedback loops are critical to ensure continuous improvement and adaptation to new technologies.
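A minimal sketch of such a monitoring check is shown below: each energy reading is compared with the average of the readings just before it, and readings that exceed that baseline by more than a chosen margin are flagged for review. The window size, the 20% margin, and the sample readings are assumptions for illustration; production monitoring would normally rely on the provider's metrics and alerting tools.

```python
from statistics import mean


def flag_spikes(readings, window=3, threshold=0.2):
    """Return indices of readings that exceed the trailing average by the threshold.

    readings: energy readings in a consistent unit, ordered by time
    window: number of preceding readings used as the baseline
    threshold: allowed relative excess over the baseline (0.2 means 20%)
    """
    flagged = []
    for i in range(window, len(readings)):
        baseline = mean(readings[i - window:i])
        if readings[i] > baseline * (1 + threshold):
            flagged.append(i)
    return flagged


# Hypothetical daily energy readings in kWh.
daily_kwh = [100, 102, 98, 101, 150, 103, 99]
for index in flag_spikes(daily_kwh):
    print(f"day {index}: {daily_kwh[index]} kWh is above the recent baseline")
```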

Outcome Summary

In conclusion, measuring and mitigating the carbon footprint of your cloud architecture is a multifaceted process requiring a deep understanding of the various contributing factors. By meticulously evaluating infrastructure choices, data center operations, network traffic, and data storage, you can accurately assess your cloud’s environmental impact. The guide highlights strategies for reduction and emphasizes the importance of ongoing benchmarking and reporting for continuous improvement.

This comprehensive approach empowers organizations to embrace sustainable cloud practices and contribute to a greener digital future.

FAQ Insights

What are the key factors influencing a cloud architecture’s environmental impact?

Several factors influence a cloud architecture’s environmental impact, including server hardware choices, data center design, network traffic, and data storage methods. The type of server, virtualization techniques, cooling systems, and the use of renewable energy all play a role.

How do different cloud service models (IaaS, PaaS, SaaS) compare in terms of carbon footprint?

The carbon footprint varies among service models. With IaaS, customers provision and size their own virtual machines, so over-provisioned or idle capacity tends to raise the footprint per user. SaaS runs many customers on shared, provider-managed resources and often has a lower footprint per user. PaaS falls somewhere in between.

What are some examples of energy-efficient server technologies?

Energy-efficient server technologies are those optimized for lower power consumption, such as servers with improved cooling systems and power management features. The choice of server type and its energy efficiency rating significantly affects the carbon footprint.

What are the steps for calculating a cloud architecture’s carbon footprint?

Calculating a cloud architecture’s carbon footprint involves measuring energy consumption across different components, including servers, data centers, and network transmission. Tools and calculators can help estimate the carbon emissions based on resource utilization, workload, and other relevant data.
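As a rough sketch of the calculation, operational emissions are commonly estimated as the energy used by the IT equipment, scaled by the data center's power usage effectiveness (PUE) to include cooling and other overhead, and multiplied by the carbon intensity of the electricity grid. The function name and all input values below are hypothetical. The estimate covers operation only and leaves out the emissions from manufacturing and disposing of hardware.

```python
def estimate_emissions_kg(it_energy_kwh, pue, grid_intensity_g_per_kwh):
    """Estimate operational emissions in kg CO2e.

    it_energy_kwh: mapping of component name to IT energy used, in kWh
    pue: power usage effectiveness (total facility energy / IT energy)
    grid_intensity_g_per_kwh: grid carbon intensity in g CO2e per kWh
    """
    if pue < 1:
        raise ValueError("PUE cannot be below 1")
    facility_kwh = sum(it_energy_kwh.values()) * pue
    # Convert grams to kilograms.
    return facility_kwh * grid_intensity_g_per_kwh / 1000


# Hypothetical monthly energy per component, PUE, and grid intensity.
usage = {"compute": 1200.0, "storage": 300.0, "network": 100.0}
total_kg = estimate_emissions_kg(usage, pue=1.5, grid_intensity_g_per_kwh=400.0)
print(f"estimated operational emissions: {total_kg:.0f} kg CO2e")
```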