The evolution of software architecture has ushered in the era of serverless computing, promising unparalleled scalability, cost efficiency, and agility. Refactoring an existing application to embrace this paradigm shift presents a significant undertaking, requiring a strategic understanding of serverless principles, architectural redesign, and meticulous implementation. This guide delves into the intricacies of transforming traditional applications into serverless ecosystems, providing a roadmap for navigating the challenges and harnessing the benefits of this transformative technology.

This exploration systematically dissects the process of serverless refactoring, from initial assessment and service selection to deployment and ongoing maintenance. It addresses critical aspects such as data migration, API design, event-driven architecture, testing, monitoring, and security, providing practical insights and actionable strategies. The aim is to equip developers and architects with the knowledge and tools necessary to successfully migrate their applications to a serverless environment, unlocking the full potential of cloud computing.

Understanding Serverless Architecture

Refactoring an application to a serverless architecture represents a significant shift in how applications are designed, deployed, and managed. This approach moves away from the traditional model of managing and scaling servers, allowing developers to focus primarily on writing code. The core principle is “pay-as-you-go” compute, meaning resources are only consumed when code is actively running.

Serverless Computing Explanation

Serverless computing is a cloud computing execution model where the cloud provider dynamically manages the allocation of machine resources. Developers deploy code (typically functions) without managing servers. The cloud provider handles all the infrastructure management, including server provisioning, scaling, and patching. This abstraction allows developers to concentrate on building and running applications without the operational overhead of server management. The code is triggered by events (e.g., HTTP requests, database updates, file uploads), and the cloud provider automatically scales the resources to handle the workload.

Billing is based on the actual resources consumed, such as the number of function invocations and the execution time.
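
As a rough illustration of how this billing model adds up, the sketch below estimates a monthly bill from the invocation count, average duration, and memory size. The function name and both rates are hypothetical placeholders, not any provider's actual prices; substitute the figures from your provider's pricing page.

```python
def estimate_monthly_cost(
    invocations: int,
    avg_duration_ms: float,
    memory_mb: int,
    price_per_million_requests: float,  # hypothetical rate, supply your own
    price_per_gb_second: float,         # hypothetical rate, supply your own
) -> float:
    """Estimate a monthly bill from request count and compute time."""
    request_cost = invocations / 1_000_000 * price_per_million_requests
    # Compute is metered as memory (GB) multiplied by execution time (seconds).
    gb_seconds = invocations * (avg_duration_ms / 1000) * (memory_mb / 1024)
    compute_cost = gb_seconds * price_per_gb_second
    return request_cost + compute_cost


# Example: 2 million invocations, 100 ms each, 512 MB, with made-up rates.
cost = estimate_monthly_cost(2_000_000, 100, 512, 0.25, 0.00002)
print(f"{cost:.2f}")  # prints 2.50
```

Because idle time does not appear in the formula, a workload with long quiet periods costs nothing while it is quiet, which is the main contrast with paying for always-on servers.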

Benefits of Serverless Architecture in Application Refactoring

Adopting a serverless architecture during application refactoring provides several key advantages. These benefits often lead to improved agility, reduced operational costs, and increased scalability.

- Reduced Operational Overhead: The elimination of server management significantly reduces the operational burden. Developers no longer need to provision, configure, or maintain servers, freeing up time to focus on application development.

- Cost Optimization: Serverless models typically offer a “pay-per-use” pricing structure. This can result in significant cost savings compared to traditional server-based architectures, especially for applications with variable or spiky workloads.

- Improved Scalability and Availability: Serverless platforms automatically scale resources based on demand. This ensures high availability and responsiveness, as the system can quickly adapt to fluctuating traffic levels.

- Faster Development Cycles: Serverless platforms often offer features such as built-in monitoring, logging, and CI/CD integration, which can accelerate the development process. Developers can deploy and iterate on code more quickly.

- Enhanced Agility: Serverless architectures enable faster experimentation and deployment of new features. The modular nature of serverless functions allows for easier updates and modifications without impacting the entire application.

Serverless vs. Traditional Server-Based Architectures Comparison

A comparison of serverless and traditional server-based architectures highlights the fundamental differences in their approaches. The following table illustrates these distinctions:

| Feature | Serverless | Traditional |
|---|---|---|
| Infrastructure Management | Cloud provider manages all infrastructure (servers, scaling, patching). | Developers manage infrastructure (provisioning, scaling, patching servers). |
| Scaling | Automatic scaling based on demand; typically scales up and down automatically. | Manual scaling or auto-scaling configured by the developer. |
| Billing | Pay-per-use; charged for the actual resources consumed (e.g., function invocations, execution time). | Fixed or variable cost based on server instances, often including idle time. |
| Deployment | Deploy individual functions or containers; often simpler and faster deployment process. | Deploy entire applications or components to servers. |
| Development Focus | Focus on writing code and business logic. | Focus on both code and server management. |
| Availability | High availability, often with built-in redundancy and fault tolerance. | Availability depends on the configuration and management of the servers. |
| Use Cases | Web applications, APIs, event processing, background tasks, IoT backends. | Web applications, databases, enterprise applications. |

Assessing the Current Application

Evaluating an existing application is a crucial first step in determining its suitability for serverless refactoring. This assessment involves understanding the application’s architecture, identifying its components, and analyzing their interactions to pinpoint areas where serverless technologies can provide benefits. A thorough analysis minimizes risks, maximizes efficiency, and ensures a smooth transition.

Identifying Suitable Applications

Certain characteristics make an application particularly well suited to serverless architectures. Identifying these characteristics early on allows for a more focused and successful refactoring strategy.

- Event-Driven Architecture: Applications that are inherently event-driven, reacting to triggers and asynchronous events, are prime candidates. Serverless functions can be triggered by various events, such as file uploads, database changes, or scheduled tasks. For example, an image processing application that automatically resizes images uploaded to a storage bucket aligns perfectly with this pattern.

- Stateless Operations: Serverless functions excel in handling stateless operations. If an application’s core logic doesn’t rely on maintaining session state or complex configurations, it can be easily adapted to a serverless model. Consider a web application that generates reports; each report generation can be a stateless operation.

- Microservices Architecture: Applications built on a microservices architecture, where functionalities are modularized into independent services, are naturally suited for serverless. Each microservice can be deployed as a serverless function, improving scalability and maintainability. An e-commerce platform with separate services for product catalog, shopping cart, and payment processing is a strong example.

- Variable Workload: Applications experiencing fluctuating traffic and unpredictable demand benefit greatly from serverless. Serverless platforms automatically scale resources based on the workload, providing cost-effectiveness and improved performance. A social media platform, with its peak times and off-peak times, can leverage this.

- API-Driven Applications: Applications exposing APIs for various functionalities can be easily adapted to serverless. API gateways can be used to route requests to serverless functions, handling authentication, authorization, and request management. Consider a weather application that exposes an API to provide weather data to other applications.

Analyzing Application Architecture

A comprehensive analysis of the existing application architecture is necessary to understand its components, their interactions, and potential serverless migration paths. This analysis includes several key steps.

- Component Identification: Begin by identifying all the major components of the application. These might include web servers, databases, message queues, background processing services, and API gateways. Create a comprehensive list of all the elements that constitute the application.

- Dependency Mapping: Document the dependencies between the components. This involves understanding how components interact with each other, what data they exchange, and what services they rely on. This will help identify potential bottlenecks and areas for optimization.

- Traffic Analysis: Analyze the application’s traffic patterns to understand its usage profile. Identify peak loads, average loads, and any seasonal variations. This data is crucial for determining the scalability requirements of the serverless functions. Consider using monitoring tools to track metrics such as request rates, response times, and error rates.

- Performance Evaluation: Assess the current performance of the application. Identify any performance bottlenecks or areas where the application is slow or inefficient. This will help to prioritize the components that would benefit most from serverless refactoring. Utilize profiling tools to pinpoint slow code sections and database queries.

- Cost Analysis: Evaluate the current infrastructure costs. Determine the cost of running the existing application and compare it with the potential cost savings that serverless can provide. This will help justify the investment in serverless refactoring. Consider the cost of servers, databases, and other resources.
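
The traffic analysis step above can be sketched as follows, assuming request timestamps (epoch seconds) have already been exported from access logs or a monitoring tool. The function name and return keys are illustrative choices, not part of any particular tool.

```python
from collections import Counter


def requests_per_minute(timestamps: list[float]) -> dict[str, float]:
    """Summarize request timestamps (epoch seconds) into per-minute load."""
    if not timestamps:
        return {"peak": 0, "average": 0.0}
    # Group requests into one-minute buckets.
    buckets = Counter(int(ts // 60) for ts in timestamps)
    # Count idle minutes between the first and last request in the average.
    span = max(buckets) - min(buckets) + 1
    return {"peak": max(buckets.values()), "average": len(timestamps) / span}


profile = requests_per_minute([0, 10, 20, 70, 190])
print(profile)  # prints {'peak': 3, 'average': 1.25}
```

A high peak-to-average ratio suggests a spiky workload, which is where per-use billing and automatic scaling tend to pay off most.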

Documenting Components and Interactions

Detailed documentation of the application’s components and their interactions is critical for planning the serverless refactoring. This documentation provides a clear understanding of the current architecture and helps in designing the new serverless architecture.

- Component Diagram: Create a visual representation of the application’s architecture. This diagram should include all the components and their relationships. Use a standardized notation such as UML or a simplified diagram to represent the interactions.

- Component Descriptions: Provide detailed descriptions of each component, including its purpose, functionality, and technologies used. Document the specific tasks performed by each component.

- Dependency Matrix: Create a matrix that shows the dependencies between components. This matrix should identify which components depend on which other components and the type of interactions (e.g., API calls, database queries, message passing).

- API Documentation: Document all the APIs exposed by the application, including their endpoints, request and response formats, and authentication mechanisms. This documentation is crucial for understanding how the application interacts with external systems.

- Database Schema: Document the database schema, including tables, columns, and relationships. This information is important for migrating the database to a serverless-compatible solution.

- Security Considerations: Document the security aspects of the application, including authentication, authorization, and data encryption. This is crucial for ensuring the security of the refactored application.
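
A dependency matrix can be generated from a simple mapping rather than maintained by hand. The sketch below is one possible shape; the component names and interaction labels are invented for illustration.

```python
def build_dependency_matrix(deps: dict[str, dict[str, str]]) -> list[list[str]]:
    """Turn {source: {target: interaction}} into a row/column matrix."""
    # Collect every component, whether it appears as a source or a target.
    components = sorted(set(deps) | {t for targets in deps.values() for t in targets})
    rows = [[""] + components]
    for source in components:
        # "-" marks pairs with no direct dependency.
        rows.append(
            [source] + [deps.get(source, {}).get(target, "-") for target in components]
        )
    return rows


# Hypothetical application: a web tier, an orders API, a database, and a queue.
deps = {
    "web": {"orders-api": "API call"},
    "orders-api": {"orders-db": "DB query", "email-queue": "message"},
}
matrix = build_dependency_matrix(deps)
for row in matrix:
    print(" | ".join(row))
```

Rows with many entries are components that depend on a lot; columns with many entries are components that many others rely on, and both are worth migrating with extra care.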

Identifying Serverless Services

The selection of appropriate serverless services is a critical step in refactoring an application. This process involves analyzing the existing application’s functionality, identifying potential serverless components, and evaluating the available serverless offerings from cloud providers. A systematic approach to service selection ensures that the chosen services align with the application’s requirements, performance goals, and cost constraints. This section will detail a structured approach to identifying and selecting the most suitable serverless services.

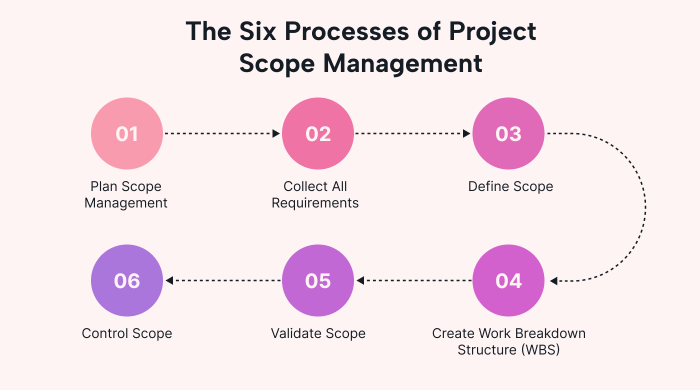

Designing a Process for Selecting Serverless Services

A well-defined process is crucial for navigating the landscape of serverless offerings and making informed decisions. The process should encompass several key stages, starting with a thorough understanding of the application’s current state and culminating in the selection of the most appropriate serverless services. The recommended process includes the following stages:

- Functional Decomposition: Break down the existing application into its core functionalities. This involves identifying individual features, modules, and services that can potentially be migrated to a serverless architecture. This process allows for a granular analysis of each component.

- Service Identification: For each identified functionality, determine which serverless services can best fulfill its requirements. Consider services like AWS Lambda, Azure Functions, Google Cloud Functions for compute, and services for data storage, API management, and messaging.

- Provider Selection: Evaluate different cloud providers (AWS, Azure, Google Cloud) based on factors like pricing, available services, and existing infrastructure. If a multi-cloud strategy is not the primary objective, the existing cloud provider for the application might be the best starting point.

- Proof of Concept (POC): Develop small-scale prototypes or POCs for key functionalities to validate the chosen services and assess their performance and integration capabilities. This allows for hands-on experience and early identification of potential challenges.

- Decision Matrix Application: Use a decision matrix to compare and contrast the different serverless options based on pre-defined criteria, as described in the next section.

- Iterative Refinement: The selection process is not necessarily a one-time event. Refine the selection based on the results of the POCs, performance testing, and evolving application requirements. This ensures that the serverless architecture remains optimal over time.

Creating a Decision Matrix for Choosing Serverless Compute Options

A decision matrix provides a structured framework for evaluating and comparing different serverless compute options, such as AWS Lambda, Azure Functions, and Google Cloud Functions. The matrix should include a set of criteria relevant to the application’s needs, allowing for a systematic assessment of each option. The decision matrix should consider the following factors:

- Pricing: Analyze the pricing models of each service, including invocation costs, execution time, and memory allocation. Consider the expected workload and choose the option that offers the most cost-effective solution. For instance, AWS Lambda has a pay-per-use model, charging based on the number of invocations and the duration of execution. Azure Functions also has a pay-as-you-go model. Google Cloud Functions offers a similar pricing structure.

- Language Support: Evaluate the programming languages supported by each service. Ensure that the service supports the languages used in the existing application or the languages that are preferred for development. AWS Lambda supports languages like Python, Node.js, Java, Go, and .NET. Azure Functions supports languages such as C#, JavaScript, Python, Java, and PowerShell. Google Cloud Functions supports Node.js, Python, Go, Java, .NET, and Ruby.

- Integration Capabilities: Assess the integration capabilities of each service with other cloud services, such as databases, storage, and message queues. A seamless integration with existing infrastructure is crucial for a smooth migration.

- Performance: Evaluate the performance characteristics of each service, including cold start times, execution speed, and scalability. The performance requirements of the application should be a key consideration.

- Monitoring and Logging: Examine the monitoring and logging features offered by each service. Robust monitoring and logging capabilities are essential for troubleshooting and optimizing the application’s performance.

- Developer Experience: Consider the developer experience, including the ease of deployment, debugging, and local testing. A positive developer experience can accelerate the development process.

- Community and Support: Evaluate the community support, documentation, and availability of third-party tools and libraries. A strong community and readily available resources can simplify the development process.

- Vendor Lock-in: Assess the level of vendor lock-in associated with each service. Consider the portability of the code and the ease of migrating to another platform if needed.

A sample decision matrix can be structured as a table:

| Criteria | AWS Lambda | Azure Functions | Google Cloud Functions |
|---|---|---|---|
| Pricing | Pay-per-use, granular | Pay-as-you-go, flexible | Pay-per-use, competitive |
| Language Support | Python, Node.js, Java, Go, .NET | C#, JavaScript, Python, Java, PowerShell | Node.js, Python, Go, Java, .NET, Ruby |
| Integration Capabilities | Excellent with AWS services | Excellent with Azure services | Excellent with Google Cloud services |
| Performance | Fast execution, variable cold start | Good performance, cold start can vary | Good performance, improving cold start |
| Monitoring and Logging | CloudWatch | Application Insights | Cloud Logging, Cloud Monitoring |
| Developer Experience | Mature, extensive tooling | Good tooling, growing ecosystem | Good tooling, evolving ecosystem |
| Community and Support | Large, active community | Strong community, Microsoft support | Growing community, Google support |
| Vendor Lock-in | Moderate | Moderate | Moderate |

Each criterion can be weighted based on its importance to the application. Then, each service can be scored against each criterion, and the weighted scores can be totaled to determine the best-suited option.
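
The weighted scoring just described amounts to a small calculation. In the sketch below, the weights, the 1 to 5 scores, and the option names are all made up to show the arithmetic; they are not an assessment of any real service.

```python
def weighted_scores(
    weights: dict[str, float], scores: dict[str, dict[str, float]]
) -> dict[str, float]:
    """Total each option's scores, weighting every criterion by importance."""
    return {
        option: sum(weights[criterion] * option_scores[criterion] for criterion in weights)
        for option, option_scores in scores.items()
    }


# Hypothetical weights (higher means more important) and scores.
weights = {"pricing": 3, "language_support": 2, "cold_start": 1}
scores = {
    "option_a": {"pricing": 4, "language_support": 5, "cold_start": 3},
    "option_b": {"pricing": 3, "language_support": 4, "cold_start": 5},
}
totals = weighted_scores(weights, scores)
best = max(totals, key=totals.get)
print(totals, best)  # prints {'option_a': 25, 'option_b': 22} option_a
```

Agreeing on the weights before scoring any option helps keep the result from being tuned toward a favorite.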

Leveraging Serverless Services for Common Application Functionalities

Serverless services can be effectively used to implement various common application functionalities, such as API gateways, database integration, and background processing. Leveraging these services can lead to improved scalability, reduced operational overhead, and cost optimization. Examples of leveraging serverless services include:

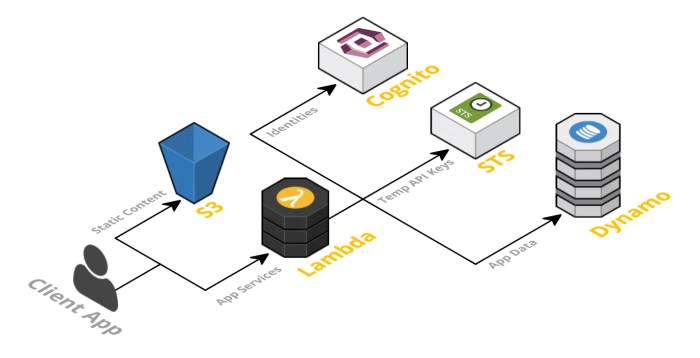

- API Gateway: Implement an API gateway using services like Amazon API Gateway, Azure API Management, or Google Cloud API Gateway. The API gateway acts as a front door for the application’s APIs, handling tasks like request routing, authentication, authorization, and rate limiting. For example, an API gateway can route incoming requests to different serverless functions based on the URL path or HTTP method.

- Database Integration: Use serverless functions to interact with databases. For instance, an AWS Lambda function can be triggered by an event in Amazon DynamoDB to process data updates. Azure Functions can be used to read from and write to Azure Cosmos DB. Google Cloud Functions can be used to interact with Cloud SQL. This approach allows for efficient data processing and avoids the need for managing dedicated database servers.

- Background Processing: Implement background processing tasks using serverless functions and message queues. For example, AWS Lambda can be triggered by messages in Amazon SQS to perform tasks such as image processing or sending email notifications. Azure Functions can be triggered by messages in Azure Service Bus or Azure Queue Storage. Google Cloud Functions can be triggered by messages in Cloud Pub/Sub.

- Webhooks: Serverless functions can be used to handle webhooks from third-party services. The function receives the webhook payload, processes the data, and performs the necessary actions, such as updating a database or sending notifications.

- Scheduled Tasks: Use serverless functions to execute scheduled tasks. For example, an AWS Lambda function can be triggered on a schedule by Amazon EventBridge (formerly CloudWatch Events) to run a daily report generation job. Azure Functions can use a timer trigger to execute scheduled tasks. Google Cloud Functions can be triggered by Cloud Scheduler.
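
To make the background-processing pattern concrete, here is a minimal sketch of a queue-triggered function. It assumes the event shape AWS Lambda receives from Amazon SQS (a `Records` list whose entries carry `messageId` and `body`); `send_email` and the message fields `to` and `subject` are hypothetical stand-ins for a real integration.

```python
import json


def send_email(to: str, subject: str) -> None:
    """Hypothetical stand-in for a real email integration."""
    print(f"sending '{subject}' to {to}")


def handler(event, context=None):
    """Process each queued message and report the ones that failed."""
    failures = []
    for record in event.get("Records", []):
        try:
            message = json.loads(record["body"])
            send_email(message["to"], message["subject"])
        except (KeyError, json.JSONDecodeError):
            # Report only this message so the rest of the batch is not retried.
            failures.append({"itemIdentifier": record["messageId"]})
    return {"batchItemFailures": failures}


sample_event = {
    "Records": [
        {"messageId": "1", "body": json.dumps({"to": "a@example.com", "subject": "Hi"})},
        {"messageId": "2", "body": "not json"},
    ]
}
result = handler(sample_event)
print(result)  # prints {'batchItemFailures': [{'itemIdentifier': '2'}]}
```

The partial-batch response only takes effect when the event source mapping is configured to report batch item failures; without that setting, the return value is ignored and a raised exception causes the whole batch to be retried.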

Decomposition and Module Design

Refactoring a monolithic application to a serverless architecture necessitates a strategic decomposition into smaller, independent units. This process aims to leverage the benefits of serverless, such as scalability, cost efficiency, and increased development velocity. A well-defined decomposition strategy, coupled with effective module design, is crucial for realizing these advantages.

Decomposing a Monolithic Application into Serverless Functions

The decomposition process involves breaking down the monolithic application into a collection of serverless functions, each responsible for a specific task or set of related tasks. This modularization facilitates independent scaling, deployment, and maintenance. The approach typically involves identifying functional boundaries and defining how data flows between these boundaries. One common strategy for decomposition is based on the Single Responsibility Principle. This principle dictates that each function should have only one reason to change.

- Identify Functional Boundaries: Analyze the existing application’s code and identify distinct functionalities or features. These functionalities often represent natural boundaries for serverless functions. Consider user authentication, data processing, image resizing, or order management as examples.

- Define Function Responsibilities: For each identified functionality, define the specific responsibilities of the corresponding serverless function. The function should be self-contained and focused on its designated task. Avoid creating functions that perform multiple, unrelated operations.

- Establish Data Flow: Determine how data will flow between the serverless functions. This includes identifying the data inputs, outputs, and any intermediate data transformations. Consider using event-driven architectures, where functions are triggered by events, such as a file upload or a database update.

- Refactor Code: Rewrite the code related to each function’s responsibility into independent, deployable units. This often involves breaking down large code blocks into smaller, more manageable functions.

- Implement API Gateway: Utilize an API Gateway to manage the routing and access control of serverless functions. This gateway acts as a single entry point for external clients, handling authentication, authorization, and traffic management.
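
The following sketch shows the shape of such a decomposition on a toy order flow. An in-memory dictionary of subscribers stands in for a managed event bus or queue, and every function and event name is hypothetical; the point is that each function does one job and communicates only through events.

```python
from collections import defaultdict
from typing import Callable

# In-memory stand-in for a managed event bus or message queue.
subscribers: dict[str, list[Callable[[dict], None]]] = defaultdict(list)
log: list[str] = []


def subscribe(event_type: str):
    """Register the decorated function as a handler for one event type."""
    def register(fn):
        subscribers[event_type].append(fn)
        return fn
    return register


def publish(event_type: str, payload: dict) -> None:
    for fn in subscribers[event_type]:
        fn(payload)


def place_order(order: dict) -> None:
    """Single responsibility: record the order, then announce it."""
    log.append(f"order {order['id']} stored")
    publish("order.placed", order)


@subscribe("order.placed")
def reserve_inventory(order: dict) -> None:
    log.append(f"inventory reserved for {order['id']}")


@subscribe("order.placed")
def send_confirmation(order: dict) -> None:
    log.append(f"confirmation sent for {order['id']}")


place_order({"id": "A1"})
print(log)
```

Adding a new reaction to an order, such as fraud screening, means registering another subscriber; `place_order` itself does not change.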

Designing Function Boundaries and Responsibilities

Careful design of function boundaries and responsibilities is critical for achieving scalability and maintainability. Poorly designed functions can lead to increased complexity, reduced performance, and difficulty in managing and evolving the application. The goal is to create functions that are loosely coupled, highly cohesive, and easily testable.

- Loose Coupling: Design functions to minimize dependencies on other functions. This means that changes in one function should not require changes in other functions. Data should be passed between functions through well-defined interfaces, such as API endpoints or event queues.

- High Cohesion: Ensure that each function focuses on a specific, well-defined task. All the code within a function should be related to that task. Avoid creating functions that perform multiple, unrelated operations.

- Idempotency: Design functions to be idempotent, meaning that they can be executed multiple times without causing unintended side effects. This is particularly important in serverless environments, where functions may be triggered multiple times due to retries or other factors.

- Error Handling: Implement robust error handling mechanisms within each function. This includes logging errors, handling exceptions, and providing informative error messages. Implement retry mechanisms for transient failures.

- Testing: Write comprehensive unit and integration tests for each function. This ensures that the function behaves as expected and can be easily updated without introducing regressions. Use mocking techniques to isolate functions during testing.
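
Idempotency and retry handling often go together. The sketch below combines them under simplifying assumptions: a dictionary stands in for a durable store, and `TransientError`, `charge_once`, and `flaky_charge` are hypothetical names.

```python
import time

# Stand-in for a durable store keyed by request ID. A real store would need
# an atomic conditional write; this check-then-write is not safe under concurrency.
processed: dict[str, dict] = {}


class TransientError(Exception):
    """Raised for failures that are worth retrying, such as timeouts."""


def with_retries(fn, attempts: int = 3, base_delay: float = 0.01):
    """Call fn, retrying transient failures with exponential backoff."""
    for attempt in range(attempts):
        try:
            return fn()
        except TransientError:
            if attempt == attempts - 1:
                raise
            time.sleep(base_delay * 2 ** attempt)


def charge_once(request_id: str, amount: int, charge) -> dict:
    """Run the charge at most once per request ID, even if invoked again."""
    if request_id in processed:
        return processed[request_id]
    result = with_retries(lambda: charge(amount))
    processed[request_id] = result
    return result


calls = []


def flaky_charge(amount: int) -> dict:
    """Hypothetical payment call that times out on its first attempt."""
    calls.append(amount)
    if len(calls) == 1:
        raise TransientError("timeout")
    return {"charged": amount}


first = charge_once("req-1", 10, flaky_charge)
second = charge_once("req-1", 10, flaky_charge)  # duplicate delivery
print(first, second, len(calls))  # prints {'charged': 10} {'charged': 10} 2
```

The duplicate invocation returns the stored result without calling the payment function again, so a platform-level retry cannot double-charge.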

Creating Reusable Serverless Modules

Reusable serverless modules promote code reuse, reduce development time, and improve the maintainability of the application. These modules encapsulate common functionalities, such as data validation, authentication, or utility functions, which can be used across multiple serverless functions.

- Identify Common Functionality: Analyze the application code to identify common functionalities that can be extracted into reusable modules. These functionalities often involve tasks such as data validation, data transformation, or communication with external services.

- Encapsulate Functionality: Package the common functionality into self-contained modules. This may involve creating libraries, utility functions, or custom components.

- Define Clear Interfaces: Define clear interfaces for the modules, including the input parameters, output formats, and any dependencies. This ensures that the modules can be easily integrated into other functions.

- Version Control: Use version control systems, such as Git, to manage the modules. This allows for tracking changes, collaborating with other developers, and easily rolling back to previous versions.

- Dependency Management: Manage module dependencies using a package manager, such as npm for Node.js or pip for Python. This simplifies the process of installing and updating module dependencies.

- Documentation: Provide clear and comprehensive documentation for the modules, including their purpose, usage, and any dependencies. This documentation should include examples of how to use the modules.
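
As one example of a reusable module with a clear interface, the sketch below packages field validation so that any function can call it the same way. The names `ValidationResult` and `require_fields` are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ValidationResult:
    """Outcome of a validation call: a flag plus human-readable errors."""
    ok: bool
    errors: tuple[str, ...] = ()


def require_fields(payload: dict, required: dict[str, type]) -> ValidationResult:
    """Check that each required field is present and has the expected type."""
    errors = []
    for name, expected in required.items():
        if name not in payload:
            errors.append(f"missing field: {name}")
        elif not isinstance(payload[name], expected):
            errors.append(f"{name} must be {expected.__name__}")
    return ValidationResult(ok=not errors, errors=tuple(errors))


schema = {"sku": str, "qty": int}
good = require_fields({"sku": "X-1", "qty": 2}, schema)
bad = require_fields({"sku": "X-1", "qty": "2"}, schema)
print(good.ok, bad.errors)  # prints True ('qty must be int',)
```

Returning a result object instead of raising keeps the module independent of how each caller reports errors, whether as an HTTP response, a log entry, or a dead-letter message.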

Data Migration and Management

Migrating data to a serverless environment is a critical step in refactoring an application. This process involves transferring data from existing databases to serverless-compatible storage solutions while maintaining data integrity and ensuring minimal downtime. Effective data management is crucial for performance, scalability, and security in a serverless architecture.

Strategies for Data Migration

Migrating data to serverless-compatible storage solutions necessitates a strategic approach. The chosen strategy depends on factors like the size of the dataset, the complexity of the database schema, and the required downtime. Several methods are available, each with its advantages and disadvantages.

- One-Time Migration: This approach involves a single, complete transfer of data. It’s suitable for smaller datasets or when minimal ongoing synchronization is needed. Tools like AWS Database Migration Service (DMS) can facilitate this process. For example, migrating a small e-commerce customer database to Amazon DynamoDB might involve a one-time data transfer.

- Incremental Migration: This strategy transfers data in batches, allowing for continuous operation of the existing application while the migration is in progress. This approach is often used for larger datasets, minimizing downtime. A change data capture (CDC) system can be implemented to capture changes in the source database and replicate them to the serverless storage.

- Hybrid Approach: A hybrid strategy combines aspects of both one-time and incremental migrations. Initially, a bulk data transfer occurs, followed by incremental updates to synchronize the data. This is suitable for situations where the initial data load is large, but ongoing changes are relatively frequent.

- Database-Specific Migration Tools: Many cloud providers offer specific tools and services to facilitate migration. For instance, Google Cloud provides a Database Migration Service for moving MySQL and PostgreSQL databases into Cloud SQL, which can then back serverless compute such as Cloud Run or Cloud Functions. Azure provides similar services for moving data into stores used with Azure Functions, such as Cosmos DB.
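
The hybrid approach can be sketched with plain dictionaries standing in for the source and target stores. The change-record format (`op`, `key`, `value`) is an assumption made for this example, not the output format of any particular CDC tool.

```python
def bulk_load(source: dict, target: dict) -> None:
    """Phase 1: copy a snapshot of every record into the target store."""
    target.update({key: dict(value) for key, value in source.items()})


def apply_changes(changes: list[dict], target: dict) -> None:
    """Phase 2: replay captured changes, in order, on top of the snapshot."""
    for change in changes:
        if change["op"] == "delete":
            target.pop(change["key"], None)
        else:  # treat anything else as an upsert
            target[change["key"]] = change["value"]


snapshot = {"u1": {"name": "Ada"}, "u2": {"name": "Bob"}}
target: dict = {}
bulk_load(snapshot, target)

# Changes captured on the source while the bulk load was running.
changes = [
    {"op": "upsert", "key": "u3", "value": {"name": "Eve"}},
    {"op": "delete", "key": "u2"},
    {"op": "upsert", "key": "u1", "value": {"name": "Ada L."}},
]
apply_changes(changes, target)
print(target)
```

Because upserts and deletes are idempotent here, replaying the same change list twice leaves the target in the same state, which makes resuming after an interrupted sync safer.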

Addressing Data Consistency

Data consistency is a major concern in serverless environments, particularly when dealing with distributed systems. Serverless architectures often rely on eventual consistency models due to the distributed nature of the underlying infrastructure. Addressing data consistency requires careful consideration of data access patterns, transaction management, and error handling.

- Eventual Consistency: Some serverless databases default to eventual consistency. Amazon DynamoDB reads, for example, are eventually consistent unless a strongly consistent read is requested. This means that data changes may not be immediately reflected across all replicas. Applications need to be designed to handle potential inconsistencies.

- Strong Consistency: For critical operations requiring immediate data consistency, strong consistency options are available. DynamoDB offers strongly consistent reads, but these consume twice the read capacity of eventually consistent reads and can have higher latency. Consider using transactions where atomic operations are essential.

- Transactions: Implementing transactions across multiple data stores in a serverless environment is challenging. Where a serverless database provides transactional support within a single store, use it to ensure atomicity, consistency, isolation, and durability (ACID) for the operations that need them. For instance, DynamoDB transactions allow multiple items to be updated in a single, atomic operation.

- Idempotency: Ensuring that operations are idempotent is critical. Idempotent operations can be safely executed multiple times without unintended side effects. This is particularly important in serverless environments where retries and concurrent invocations are common.

- Error Handling and Retries: Implement robust error handling and retry mechanisms to address transient failures. Serverless functions should be designed to gracefully handle errors and retry operations.
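
The all-or-nothing behavior of a transaction can be illustrated without a database. The sketch below is an in-memory, single-threaded stand-in, not the API of DynamoDB or any other store; `transact` and `ConditionFailed` are hypothetical names.

```python
import copy


class ConditionFailed(Exception):
    """Raised when an update's precondition does not hold."""


def transact(store: dict, updates: list[tuple]) -> None:
    """Apply every update or none. Each update is (key, condition, mutate)."""
    staged = copy.deepcopy(store)  # work on a copy so failures leave store untouched
    for key, condition, mutate in updates:
        if not condition(staged.get(key)):
            raise ConditionFailed(key)
        staged[key] = mutate(staged.get(key))
    # Commit only after every condition has passed.
    store.clear()
    store.update(staged)


def transfer(store: dict, src: str, dst: str, amount: int) -> None:
    transact(store, [
        (src, lambda v: v is not None and v >= amount, lambda v: v - amount),
        (dst, lambda v: v is not None, lambda v: v + amount),
    ])


accounts = {"a": 100, "b": 20}
transfer(accounts, "a", "b", 50)  # succeeds
try:
    transfer(accounts, "a", "b", 80)  # insufficient funds, nothing changes
except ConditionFailed as failed:
    rejected_key = str(failed)
print(accounts)  # prints {'a': 50, 'b': 70}
```

A managed database enforces the same guarantee across concurrent callers, which this single-process sketch does not attempt.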

Managing Data Access and Security

Securing data access is paramount in a serverless application. Serverless platforms offer various mechanisms for managing access control and securing data. These mechanisms must be carefully configured to protect sensitive information.

- Identity and Access Management (IAM): Leverage IAM roles and policies to control access to data storage resources. Grant serverless functions only the necessary permissions to access data. Avoid using overly permissive roles.

- Authentication and Authorization: Implement robust authentication and authorization mechanisms. Use API gateways, such as Amazon API Gateway or Google Cloud Endpoints, to authenticate users and authorize access to resources.

- Encryption: Encrypt data at rest and in transit. Serverless storage solutions often provide encryption options. For example, DynamoDB supports encryption at rest using AWS KMS. Utilize HTTPS for secure communication.

- Data Masking and Tokenization: Implement data masking and tokenization techniques to protect sensitive data. Mask sensitive information in logs and audit trails. Tokenize sensitive data elements like credit card numbers to reduce the risk of exposure.

- Auditing and Monitoring: Implement comprehensive auditing and monitoring. Log all data access attempts and monitor for suspicious activity. Utilize cloud-provider specific monitoring tools (e.g., AWS CloudWatch, Google Cloud Monitoring, Azure Monitor) to track application performance and security events.

- Regular Security Audits: Conduct regular security audits to identify and address potential vulnerabilities. Review IAM policies, access controls, and encryption configurations regularly.
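
Masking and tokenization can be sketched in a few lines. The pattern below is a deliberately simple match for 13 to 16 digit sequences and will not catch every card format; the in-memory `vault` is a placeholder for a secured token store, and all names are hypothetical.

```python
import re
import secrets

# Simplistic pattern: 13 to 16 digits, optionally separated by spaces or hyphens.
CARD_PATTERN = re.compile(r"\b(?:\d[ -]?){12,15}\d\b")


def mask_cards(text: str) -> str:
    """Replace all but the last four digits of anything that looks like a card number."""
    def _mask(match: re.Match) -> str:
        digits = re.sub(r"\D", "", match.group())
        return "*" * (len(digits) - 4) + digits[-4:]
    return CARD_PATTERN.sub(_mask, text)


# Placeholder for a secured, access-controlled token store.
vault: dict[str, str] = {}


def tokenize(card_number: str) -> str:
    """Swap a card number for a random token; only the vault can map it back."""
    token = "tok_" + secrets.token_hex(8)
    vault[token] = card_number
    return token


masked = mask_cards("card 4111 1111 1111 1111 declined")
print(masked)  # prints card ************1111 declined
token = tokenize("4111111111111111")
```

Masking is applied where data leaves the system, such as log lines, while tokenization is applied where data enters it, so downstream functions only ever handle the token.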

API Design and Implementation

The design and implementation of an API are crucial for the success of a serverless application. A well-designed API facilitates communication between different components of the application, enabling seamless interaction with clients and other services. This section will delve into the principles of designing and implementing APIs in a serverless context, focusing on API design, integration with API gateways, and versioning strategies.

Designing an API for a Serverless Application

Designing an API for a serverless application requires careful consideration of several factors, including the application’s functionality, the target audience, and the expected traffic. A well-designed API is intuitive, efficient, and scalable, allowing the application to handle increasing workloads without performance degradation.

- RESTful Principles: Adhering to RESTful principles is essential for designing a modern and scalable API. This involves using HTTP methods (GET, POST, PUT, DELETE) to represent actions on resources, using clear and consistent URL structures, and returning appropriate HTTP status codes to indicate the outcome of requests. For example, using `GET /users/{userId}` to retrieve a specific user’s information adheres to REST principles.

- Resource-Oriented Design: The API should be designed around resources, which are the core entities of the application. Each resource should have a unique URL and support a set of operations (methods) that can be performed on it. For example, if the application manages products, the API might expose resources such as `/products` and `/products/{productId}/reviews`.

- Input Validation: Implementing robust input validation is crucial for security and data integrity. The API should validate all incoming data to ensure it conforms to the expected format and constraints. This prevents malicious input and ensures that the application processes valid data. For example, validating that an email address is in the correct format or that a numeric value falls within a specific range.

- Output Formatting: The API should consistently format the output data in a structured and easily parsable format, such as JSON or XML. This allows clients to easily consume the data. The choice of format depends on the application’s requirements and the client’s capabilities. JSON is often preferred for its simplicity and widespread support.

- Rate Limiting and Throttling: Implementing rate limiting and throttling mechanisms is essential for protecting the API from abuse and ensuring its availability. These mechanisms control the number of requests a client can make within a given time period. This helps prevent denial-of-service attacks and ensures fair usage of the API. AWS API Gateway provides built-in features for rate limiting.

- Security Considerations: Security should be a primary concern when designing an API. Implementing authentication and authorization mechanisms is crucial to protect sensitive data and resources. This involves verifying the identity of the client and determining their access rights. Common security mechanisms include API keys, OAuth, and JWT (JSON Web Tokens).
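
As a rough illustration of the input validation, output formatting, and status code points above, the following sketch shows a function that validates a JSON request body and returns a response in the shape used by the AWS Lambda proxy integration (`statusCode`, `headers`, `body`). The field names `email` and `age`, the allowed range, and the handler name `create_user_handler` are assumptions made for the example.

```python
import json
import re

# Deliberately simple email check (assumption); real validation rules vary.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def respond(status_code: int, payload: dict) -> dict:
    """Build a JSON response in the Lambda proxy integration shape."""
    return {
        "statusCode": status_code,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(payload),
    }


def validate_user(data: dict) -> list[str]:
    """Return a list of validation error messages (empty when valid)."""
    errors = []
    email = data.get("email")
    if not isinstance(email, str) or not EMAIL_PATTERN.match(email):
        errors.append("email must be a valid address")
    age = data.get("age")
    # bool is a subclass of int in Python, so reject it explicitly.
    if not isinstance(age, int) or isinstance(age, bool) or not 0 <= age <= 150:
        errors.append("age must be an integer between 0 and 150")
    return errors


def create_user_handler(event: dict, context=None) -> dict:
    """Hypothetical POST /users handler: validate input, then respond."""
    try:
        data = json.loads(event.get("body") or "{}")
    except json.JSONDecodeError:
        return respond(400, {"errors": ["body must be valid JSON"]})
    if not isinstance(data, dict):
        return respond(400, {"errors": ["body must be a JSON object"]})
    errors = validate_user(data)
    if errors:
        return respond(422, {"errors": errors})
    return respond(201, {"email": data["email"], "age": data["age"]})
```

Returning 400 for unparseable bodies and 422 for well-formed but invalid data is one common convention; some APIs use 400 for both.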

Integrating Serverless Functions with an API Gateway

Integrating serverless functions with an API gateway is a core step in building serverless applications. The API gateway acts as a central point of entry for all API requests, routing them to the appropriate serverless functions. This integration process involves configuring the API gateway to trigger serverless functions based on incoming requests.

- Choosing an API Gateway: Several API gateway services are available, including AWS API Gateway, Google Cloud API Gateway, and Azure API Management. The choice depends on the cloud provider used and the specific requirements of the application. AWS API Gateway is a popular choice for serverless applications running on AWS.

- Creating an API: The first step is to create an API within the chosen API gateway. This involves defining the API’s name, description, and endpoint configuration. For example, in AWS API Gateway, you create a new API and select the API type (e.g., REST API, HTTP API).

- Defining API Resources and Methods: Define the API’s resources (e.g., `/users`, `/products`) and the HTTP methods supported for each resource (GET, POST, PUT, DELETE). This defines the structure of the API and the actions clients can perform. For instance, you might define a GET method for the `/users/{userId}` resource to retrieve user details.

- Integrating with Serverless Functions: Configure the API gateway to integrate with the serverless functions. This involves mapping the API methods to the corresponding Lambda functions. When a request arrives at the API gateway, it triggers the appropriate Lambda function. In AWS API Gateway, this is typically done by configuring the integration type as “Lambda Function”.

- Request and Response Mapping: Configure request and response mapping to transform data between the API gateway and the serverless functions. This allows you to adapt the request format to match the function’s input requirements and format the function’s output to match the API’s response format. This can involve transforming headers, query parameters, and body data.

- Testing and Deployment: After configuration, test the API thoroughly to ensure it functions as expected. Deploy the API to a production environment to make it accessible to clients. This process may involve creating stages for development, staging, and production environments.
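
The integration steps above can be sketched from the function’s side. The example below assumes the AWS API Gateway REST API proxy integration, in which the event carries `httpMethod`, `resource`, and `pathParameters`; the `USERS` data, the `ROUTES` table, and the handler names are hypothetical stand-ins for real application code.

```python
import json

# Hypothetical in-memory data standing in for a real data store.
USERS = {"42": {"id": "42", "name": "Ada"}}


def list_users(event: dict) -> dict:
    """Handle GET /users."""
    return {"statusCode": 200, "body": json.dumps(list(USERS.values()))}


def get_user(event: dict) -> dict:
    """Handle GET /users/{userId} using the path parameter from the event."""
    user_id = (event.get("pathParameters") or {}).get("userId")
    user = USERS.get(user_id)
    if user is None:
        return {"statusCode": 404, "body": json.dumps({"message": "user not found"})}
    return {"statusCode": 200, "body": json.dumps(user)}


# Maps (HTTP method, resource template) to the function that serves it.
ROUTES = {
    ("GET", "/users"): list_users,
    ("GET", "/users/{userId}"): get_user,
}


def handler(event: dict, context=None) -> dict:
    """Entry point: dispatch the proxy event to the matching route."""
    route = ROUTES.get((event.get("httpMethod"), event.get("resource")))
    if route is None:
        return {"statusCode": 404, "body": json.dumps({"message": "no matching route"})}
    return route(event)
```

A single dispatching function like this is only one option; mapping each API method to its own function keeps deployments smaller and permissions narrower, at the cost of more configuration.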

API Versioning and Management in a Serverless Architecture

Versioning an API allows for changes and updates without breaking existing client integrations. Effective API management is critical for maintaining the API’s usability, security, and performance over time. A robust versioning strategy, coupled with proper management practices, is essential for the long-term success of a serverless application.

- Versioning Strategies: Several strategies can be employed for API versioning:

- URI-based Versioning: Includes the version number in the URL (e.g., `/v1/users`, `/v2/users`). This is a simple and clear approach, but it can lead to longer URLs.

- Header-based Versioning: Uses a request header to specify the API version, either a custom header or a vendor media type in the standard `Accept` header (e.g., `Accept: application/vnd.example.v1+json`). This keeps the URLs cleaner but requires clients to include the header in their requests.

- Query Parameter-based Versioning: Appends the version number as a query parameter (e.g., `/users?version=1`). This is easy to implement but can make the URLs less readable.

- Implementing Versioning: The specific implementation of API versioning depends on the chosen strategy and the API gateway used. For instance, in AWS API Gateway, you can create multiple API stages (e.g., `v1`, `v2`) that map to different versions of your serverless functions. Each stage would represent a different version of the API.

- Backward Compatibility: Strive for backward compatibility whenever possible. When making changes to the API, try to ensure that existing clients continue to function without requiring code modifications. This can be achieved by adding new features or parameters without removing existing ones.

- API Documentation: Maintain comprehensive and up-to-date API documentation to ensure clients understand how to use the API. The documentation should include information on the API’s resources, methods, request/response formats, authentication, and versioning. Tools like Swagger/OpenAPI can automate the generation of API documentation.

- Deprecation and Sunset: Plan for deprecating and eventually sunsetting older API versions. Provide advance notice to clients before deprecating a version, and offer a migration path to the newer version. This can be done through communication channels like email or within the API documentation. For example, a notice might be given six months before deprecating `v1` and directing users to migrate to `v2`.

- Monitoring and Analytics: Monitor API usage, performance, and errors to identify potential issues and areas for improvement. Use analytics tools to track API calls, response times, and error rates. This data helps you understand how clients are using the API and identify areas where optimization is needed. AWS CloudWatch can be used for monitoring and logging.
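
To make the three versioning strategies concrete, here is a minimal sketch of a resolver that checks the URI, then the `Accept` header, then a query parameter. The precedence order, the `SUPPORTED_VERSIONS` set, the default version, and the `vnd.example` media type are all assumptions for illustration; a real API would normally commit to a single strategy.

```python
import re
from typing import Optional

SUPPORTED_VERSIONS = {"1", "2"}  # hypothetical set of live versions
DEFAULT_VERSION = "1"            # assumed fallback when none is requested

PATH_VERSION = re.compile(r"^/v(\d+)(?:/|$)")
MEDIA_TYPE_VERSION = re.compile(r"application/vnd\.example\.v(\d+)\+json")


def resolve_version(
    path: str,
    headers: Optional[dict] = None,
    query: Optional[dict] = None,
) -> str:
    """Pick the API version from the URI, Accept header, or query string."""
    # HTTP header names are case-insensitive, so normalize them first.
    headers = {k.lower(): v for k, v in (headers or {}).items()}
    query = query or {}

    candidate = None
    match = PATH_VERSION.match(path)
    if match:
        candidate = match.group(1)               # URI-based: /v2/users
    else:
        match = MEDIA_TYPE_VERSION.search(headers.get("accept", ""))
        if match:
            candidate = match.group(1)           # header-based
        elif "version" in query:
            candidate = str(query["version"])    # query-parameter-based

    if candidate is None:
        return DEFAULT_VERSION
    if candidate not in SUPPORTED_VERSIONS:
        raise ValueError(f"unsupported API version: {candidate}")
    return candidate
```

Rejecting unknown versions explicitly, rather than silently falling back, makes it easier to notice clients that still call a sunset version.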

Event-Driven Architecture

Event-driven architecture (EDA) is a paradigm that allows applications to react to events in real-time, fostering loose coupling and scalability. In a serverless context, EDA leverages the capabilities of cloud-based event services to trigger functions based on specific occurrences. This approach significantly enhances responsiveness, reduces operational overhead, and provides a flexible foundation for complex application logic.

Concept of Event-Driven Architecture in Serverless Applications

Event-driven architecture in serverless applications centers on the concept of events, event sources, and event consumers. Events represent significant occurrences within the system, such as a file upload, a database update, or a scheduled task. Event sources generate these events, and event consumers, typically serverless functions, react to them. This design promotes asynchronous communication, where components don’t need to know about each other directly, increasing resilience and allowing independent scaling.

Examples of Event Triggers for Serverless Functions

Various event triggers can initiate serverless functions, facilitating automation and responsiveness. These triggers are often provided by cloud provider services.

- Database Changes: Database triggers activate functions when data is inserted, updated, or deleted. For instance, when a new user record is added to a database, a serverless function can be invoked to send a welcome email. This event-driven approach ensures immediate processing of database changes.

- File Uploads: File storage services, like Amazon S3 or Google Cloud Storage, can trigger functions upon file uploads. A common example is image processing: when an image is uploaded, a function can automatically resize it, generate thumbnails, and store the processed versions.

- Scheduled Tasks: Cloud providers offer services like Amazon EventBridge (formerly CloudWatch Events) or Google Cloud Scheduler that allow scheduling events. These events can trigger serverless functions at specified intervals or at predefined times, enabling tasks like report generation or data cleanup.

- Message Queues: Message queues, such as Amazon SQS or Google Cloud Pub/Sub, can trigger functions when messages are added to the queue. This is useful for decoupling components and handling high-volume workloads. A function can process messages related to order processing, for example.

- API Gateway Events: When a user interacts with the API through an API Gateway, the function is triggered by the API request, facilitating real-time interactions and data processing.
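
As an example of the file upload trigger described above, the sketch below reads the bucket and object key from an Amazon S3 event notification. It relies on the documented `Records[].s3.bucket.name` and `Records[].s3.object.key` fields, where keys arrive URL-encoded; the `thumbnails/` prefix and the handler’s return shape are assumptions, and the actual image processing is left out.

```python
from urllib.parse import unquote_plus


def uploaded_objects(event: dict) -> list[tuple[str, str]]:
    """Extract (bucket, key) pairs from an S3 event notification."""
    objects = []
    for record in event.get("Records", []):
        if record.get("eventSource") != "aws:s3":
            continue  # ignore records from other event sources
        bucket = record["s3"]["bucket"]["name"]
        # Object keys are URL-encoded in the notification (spaces become "+").
        key = unquote_plus(record["s3"]["object"]["key"])
        objects.append((bucket, key))
    return objects


def handler(event: dict, context=None) -> dict:
    """Hypothetical handler: plan a thumbnail location for each upload."""
    planned = [
        {"source": f"{bucket}/{key}", "thumbnail": f"{bucket}/thumbnails/{key}"}
        for bucket, key in uploaded_objects(event)
    ]
    return {"processed": planned}
```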

Designing and Implementing Event-Driven Workflows with Serverless Services

Designing and implementing event-driven workflows requires careful consideration of event sources, function logic, and error handling. The following steps outline a typical approach.

- Identify Events: Determine the key events that drive application logic. These events could be user actions, system updates, or scheduled occurrences. Consider events related to database changes, file uploads, or API requests.

- Choose Event Sources: Select the appropriate cloud services to generate the events. Examples include database services (e.g., Amazon DynamoDB), object storage (e.g., Amazon S3), message queues (e.g., Amazon SQS), or API gateways (e.g., Amazon API Gateway).

- Design Serverless Functions: Develop serverless functions to process events. Each function should be designed to perform a specific task related to the event. Functions should be idempotent to handle potential retries and ensure data consistency.

- Configure Event Triggers: Configure event triggers to connect event sources to serverless functions. This typically involves setting up rules that specify which events should trigger which functions. This could include configuring a database trigger to invoke a function on a new record creation or setting up a file upload trigger to process uploaded images.

- Implement Error Handling: Implement robust error handling to gracefully manage failures. This includes logging errors, implementing retry mechanisms, and using dead-letter queues to handle events that cannot be processed. Proper error handling is crucial for maintaining the reliability of the event-driven system.

- Monitor and Optimize: Continuously monitor the performance and behavior of the event-driven workflow. Use monitoring tools to track function invocations, execution times, and errors. Optimize function code and resource allocation to improve performance and reduce costs.
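
The idempotency, retry, and dead-letter queue steps above can be sketched in a few lines. This example keeps everything in memory: `processed_ids` stands in for a durable store of handled event IDs and `dead_letters` for a real dead-letter queue. The `EventProcessor` class, the `id` field on events, and `MAX_ATTEMPTS` are assumptions for illustration; managed services usually provide retries and dead-letter queues through configuration instead.

```python
from typing import Callable

MAX_ATTEMPTS = 3  # hypothetical retry budget per event


class EventProcessor:
    """Runs a task once per event ID, retrying failures before giving up."""

    def __init__(self, task: Callable[[dict], None]):
        self.task = task
        self.processed_ids: set[str] = set()  # stand-in for a durable store
        self.dead_letters: list[dict] = []    # stand-in for a dead-letter queue

    def handle(self, event: dict) -> str:
        event_id = event["id"]
        # Idempotency: a redelivered event that already succeeded is skipped.
        if event_id in self.processed_ids:
            return "skipped"

        last_error = None
        for _ in range(MAX_ATTEMPTS):
            try:
                self.task(event)
            except Exception as exc:  # broad on purpose: any failure is retried
                last_error = exc
            else:
                self.processed_ids.add(event_id)
                return "processed"

        # All attempts failed: park the event for later inspection.
        self.dead_letters.append({"event": event, "error": str(last_error)})
        return "dead-lettered"
```

Recording the event ID only after the task succeeds means a crash mid-task leads to a retry rather than a lost event, which is why the task itself should also tolerate being run more than once.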

Testing Serverless Applications

Testing is a critical component of the software development lifecycle, and its importance is amplified in the context of serverless applications. Due to the distributed nature and event-driven architecture common in serverless, robust testing strategies are essential to ensure application reliability, maintainability, and scalability. Thorough testing minimizes the risk of unexpected behavior, performance bottlenecks, and security vulnerabilities. This section outlines a comprehensive approach to testing serverless applications, covering unit, integration, and end-to-end testing methodologies, alongside techniques for effective dependency mocking and event simulation.

Importance of Testing Serverless Functions

Serverless functions, being the fundamental building blocks of serverless applications, necessitate rigorous testing. Their ephemeral nature, triggered by events and interacting with various services, demands a testing approach that accounts for these characteristics. Testing ensures that individual functions behave as expected under various conditions, and that the interactions between functions and external services are reliable. Failure to test serverless functions adequately can lead to cascading failures, data inconsistencies, and significant operational overhead.

Unit Testing Serverless Functions

Unit testing focuses on verifying the behavior of individual functions in isolation. It’s a cornerstone of good software development practices and particularly crucial in serverless environments. Unit tests should validate the function’s logic, input/output handling, and interactions with its immediate dependencies.

- Test Function Logic: Verify the core functionality of the function. For example, if a function processes order data, unit tests should validate that it correctly calculates totals, applies discounts, and formats the output. This involves providing different inputs (valid, invalid, edge cases) and asserting that the function returns the expected results.

- Test Input/Output Handling: Ensure the function correctly parses and validates input data, and produces the expected output format. For instance, a function triggered by an API Gateway should be tested to confirm it correctly handles different request methods (GET, POST, PUT, DELETE), headers, and payloads. This includes testing for appropriate error handling when input is malformed or missing.

- Test Dependencies: Because serverless functions often rely on external services (databases, message queues, other APIs), these dependencies must be mocked during unit testing. This allows the tests to run quickly and independently, without the overhead of interacting with real services. Mocking is essential to control the function’s environment and isolate it for testing.
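
A minimal sketch of such a unit test follows, using Python’s standard `unittest` and `unittest.mock` modules. The function under test, `calculate_order_total`, and its `discount_service` dependency with a `get_discount_rate` method are hypothetical; the point is that the dependency is injected so the test can replace it with a mock.

```python
import unittest
from unittest.mock import Mock


def calculate_order_total(order: dict, discount_service) -> float:
    """Hypothetical function under test: subtotal minus the customer's discount."""
    subtotal = sum(item["price"] * item["quantity"] for item in order["items"])
    rate = discount_service.get_discount_rate(order["customer_id"])
    return round(subtotal * (1 - rate), 2)


class CalculateOrderTotalTest(unittest.TestCase):
    def test_applies_discount(self):
        # The mock replaces the real discount service, so no network call is made.
        discounts = Mock()
        discounts.get_discount_rate.return_value = 0.10
        order = {
            "customer_id": "c1",
            "items": [
                {"price": 20.0, "quantity": 2},
                {"price": 5.0, "quantity": 1},
            ],
        }
        self.assertEqual(calculate_order_total(order, discounts), 40.5)
        discounts.get_discount_rate.assert_called_once_with("c1")

    def test_empty_order_totals_zero(self):
        # Edge case: an order with no items.
        discounts = Mock()
        discounts.get_discount_rate.return_value = 0.0
        order = {"customer_id": "c1", "items": []}
        self.assertEqual(calculate_order_total(order, discounts), 0.0)
```

Saved in a test module, these cases can be run with `python -m unittest`.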

Integration Testing Serverless Applications

Integration tests verify the interactions between multiple serverless functions and their dependencies. Unlike unit tests, integration tests focus on the communication and data flow across different components of the application. They confirm that functions work together as intended, ensuring data consistency and correct behavior throughout the application’s architecture.

- Testing Function-to-Function Communication: Verify the interactions between functions, such as a function that processes an event and another that updates a database. This involves simulating event triggers, verifying the data passed between functions, and checking that each function performs its intended task. For example, testing the communication between a function that receives a payment notification and a function that updates the customer’s account balance.

- Testing Function-to-Service Interactions: Ensure that functions correctly interact with external services like databases, message queues, and storage services. This includes validating data storage, retrieval, and processing within these services. For instance, testing that a function correctly writes data to a database and that another function can retrieve and process that data.

- Testing Error Handling and Recovery: Integration tests should also cover error scenarios, such as network failures, service outages, and invalid data. These tests verify that the application gracefully handles errors and recovers appropriately. For example, testing how the application behaves when a database connection fails or when a third-party API is unavailable.

End-to-End Testing Serverless Applications

End-to-end (E2E) tests simulate user interactions with the entire serverless application, from the front-end to the back-end services. These tests provide the highest level of confidence in the application’s functionality and user experience, validating the entire workflow from start to finish. E2E tests are typically more complex and time-consuming than unit or integration tests, but they are crucial for ensuring that the application meets its functional requirements.

- Testing User Workflows: Simulate real-world user interactions, such as creating an account, placing an order, or submitting a form. This involves sending requests to the application’s API endpoints, verifying the responses, and checking that the data is correctly processed and stored. For example, testing the complete process of a user registering, logging in, and making a purchase.

- Testing Data Flow and Consistency: Validate the flow of data through the entire application, from the user interface to the backend services and databases. This ensures that data is correctly processed, stored, and retrieved across all components. For instance, testing that an order placed through the front-end is correctly reflected in the database and visible in the admin panel.

- Testing Performance and Scalability: Evaluate the application’s performance under load, simulating multiple concurrent users and transactions. This helps identify potential bottlenecks and ensure that the application can handle peak traffic. For example, running load tests to determine how the application performs during a flash sale or a surge in user activity.

Mocking Dependencies and Simulating Events

Mocking dependencies and simulating events are essential techniques for effective testing of serverless applications. These techniques allow developers to isolate functions, control the testing environment, and create repeatable tests.

- Mocking Dependencies: Replace external services (databases, APIs, message queues) with mock objects or stubs during testing. This allows the tests to run quickly and independently, without relying on real services. Mocking helps control the function’s behavior and isolate it for testing.

- Database Mocks: Use in-memory databases or mocking libraries to simulate database interactions. This allows you to control the data returned by the database and verify that the function interacts with the database correctly.

- API Mocks: Use mocking libraries to simulate API responses, allowing you to test how your function handles different API responses (success, error, etc.).

- Service Mocks: Mock AWS services like S3, DynamoDB, or SQS using tools such as LocalStack or dedicated mocking libraries. This provides a local environment to simulate interactions with these services.

- Simulating Events: Trigger serverless functions by simulating the events that trigger them. This involves creating mock event payloads that mimic the events that the function is designed to handle.

- API Gateway Events: Simulate API Gateway events by creating mock HTTP requests, including headers, query parameters, and request bodies. This allows you to test how your function handles different API requests.

- SNS/SQS Events: Simulate SNS/SQS events by creating mock messages with the expected payload format. This allows you to test how your function handles messages from these services.

- Scheduled Events: Simulate scheduled events by triggering the function manually with the appropriate event data. This allows you to test the function’s behavior when triggered by a schedule.
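
Event simulation can be as simple as building dictionaries that mimic the payloads a function receives. The helpers below are a sketch that includes only a subset of the fields found in real API Gateway proxy and SQS events; `api_gateway_event`, `sqs_event`, and the `order_queue_handler` function under test are hypothetical names, and real events carry additional metadata.

```python
import json
from typing import Optional


def api_gateway_event(
    method: str,
    path: str,
    body: Optional[dict] = None,
    headers: Optional[dict] = None,
    query: Optional[dict] = None,
) -> dict:
    """Build a reduced mock of an API Gateway proxy event."""
    return {
        "httpMethod": method,
        "path": path,
        "headers": headers or {},
        "queryStringParameters": query,
        # The request body reaches the function as a string, not a dict.
        "body": json.dumps(body) if body is not None else None,
    }


def sqs_event(*messages: dict) -> dict:
    """Build a reduced mock of an SQS event with one record per message."""
    return {
        "Records": [
            {
                "messageId": f"msg-{index}",
                "eventSource": "aws:sqs",
                "body": json.dumps(message),
            }
            for index, message in enumerate(messages)
        ]
    }


def order_queue_handler(event: dict, context=None) -> list[str]:
    """Hypothetical function under test: returns the order IDs it received."""
    return [json.loads(record["body"])["order_id"] for record in event["Records"]]
```

A test can then call `order_queue_handler(sqs_event({"order_id": "o-1"}))` directly, without deploying anything or creating a queue.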

Monitoring and Logging

Effective monitoring and logging are crucial for maintaining the health, performance, and reliability of serverless applications. They provide insights into function execution, identify performance bottlenecks, and facilitate rapid debugging. Implementing these practices ensures that the serverless architecture operates as expected and allows for proactive issue resolution.

Setting Up Monitoring and Logging for Serverless Functions

Setting up monitoring and logging involves integrating tools and services provided by the cloud provider to capture, store, and analyze data related to function invocations and application behavior. This process typically involves configuring logging destinations, setting up monitoring dashboards, and defining alerts based on key metrics. The following steps are generally involved:

- Cloud Provider Specific Configuration: Each cloud provider (e.g., AWS, Azure, Google Cloud) offers its own set of services for monitoring and logging. For example, AWS provides CloudWatch for logging and monitoring, Azure offers Application Insights and Log Analytics, and Google Cloud uses Cloud Logging and Cloud Monitoring. The initial step involves configuring these services within the chosen cloud platform. This often includes enabling logging for the serverless functions themselves.

- Function Code Instrumentation: Instrumenting the function code to generate logs and emit custom metrics is a vital step. This can involve using libraries specific to the cloud provider (e.g., the AWS SDK for JavaScript to send logs to CloudWatch) or plain standard-output calls such as `console.log` (which many cloud providers capture automatically). Custom metrics can be emitted using the cloud provider’s monitoring APIs.

- Log Aggregation and Storage: Logs from serverless functions are typically aggregated and stored in a centralized location. Cloud providers automatically handle this for you, for example, by storing logs in CloudWatch Logs, Azure Log Analytics, or Google Cloud Logging. The aggregation process simplifies analysis and allows for correlation across multiple functions and services.

- Metric Collection and Dashboards: Metrics are automatically collected by cloud providers, or they can be custom defined. These metrics include invocation counts, execution times, error rates, and resource utilization. Monitoring dashboards are then configured to visualize these metrics, providing a real-time view of the application’s health and performance.

- Alerting Configuration: Alerts are configured to trigger notifications based on predefined thresholds or anomalies in the metrics. For example, an alert can be configured to notify the operations team if the error rate of a function exceeds a certain percentage. This allows for proactive response to potential issues.

Key Metrics to Monitor for Performance and Availability

Monitoring specific metrics is crucial for understanding the performance and availability of serverless applications. Tracking these metrics provides insights into the overall health of the system and enables proactive identification of issues. Key metrics to monitor include:

- Invocation Count: The number of times a function is invoked. This metric provides an overview of function usage and traffic volume. A sudden increase in invocation count might indicate a potential performance issue or a surge in user activity.

- Execution Duration: The time it takes for a function to execute, from invocation to completion. Tracking execution duration helps identify performance bottlenecks and inefficiencies. Slow execution times can impact user experience and increase costs.

- Error Rate: The percentage of function invocations that result in errors. A high error rate indicates potential code issues, configuration problems, or dependencies that are failing.

- Cold Start Time: The time it takes for a function to initialize when it hasn’t been recently used (a “cold start”). Cold starts can impact the initial response time for users, especially for functions that are not frequently invoked.

- Concurrent Executions: The number of function instances running simultaneously. Monitoring concurrent executions helps identify resource contention and potential scaling issues.

- Throttling: The number of times a function invocation is throttled because the function has reached its concurrency limit or another resource limit. Sustained throttling indicates that the limits are too low for the workload.

- Memory Utilization: The amount of memory used by the function during execution. Monitoring memory utilization helps identify memory leaks or inefficient code.

- Network I/O: Metrics related to network traffic, such as data transfer in and out of the function. High network I/O might indicate that the function is interacting heavily with external services.

- Cost: Monitoring the cost associated with function invocations, execution time, and resource utilization is essential for optimizing spending and ensuring cost-effectiveness.
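
To show how several of these metrics relate to raw invocation data, the sketch below computes error rate, cold start rate, and 95th-percentile duration from a list of records, then checks an alert threshold. The `Invocation` record, the nearest-rank percentile method, and the 5% default threshold are assumptions; in practice the cloud provider’s monitoring service calculates these values.

```python
import math
from dataclasses import dataclass


@dataclass
class Invocation:
    """Hypothetical record of a single function invocation."""
    duration_ms: float
    error: bool = False
    cold_start: bool = False


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile of a non-empty list."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def summarize(invocations: list[Invocation]) -> dict:
    """Aggregate invocation records into a few headline metrics."""
    count = len(invocations)
    if count == 0:
        return {"invocations": 0}
    return {
        "invocations": count,
        "error_rate": sum(i.error for i in invocations) / count,
        "cold_start_rate": sum(i.cold_start for i in invocations) / count,
        "p95_duration_ms": percentile([i.duration_ms for i in invocations], 95),
    }


def should_alert(summary: dict, max_error_rate: float = 0.05) -> bool:
    """Return True when the error rate exceeds the (assumed) threshold."""
    return summary.get("error_rate", 0.0) > max_error_rate
```

Percentiles are generally more informative than averages for execution duration, because a handful of slow cold starts can hide behind an acceptable mean.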

Using Logging Tools to Troubleshoot and Debug Serverless Applications

Logging tools are essential for troubleshooting and debugging serverless applications. Analyzing logs provides detailed information about function execution, including input parameters, execution paths, and error messages. Effective use of these tools can significantly reduce the time required to identify and resolve issues. The following techniques are commonly used:

- Structured Logging: Using structured logging formats, such as JSON, allows for easier parsing and analysis of logs. This enables efficient searching and filtering of log data based on specific criteria, such as timestamps, log levels (e.g., INFO, WARNING, ERROR), and custom fields.

- Log Filtering and Searching: Cloud providers offer tools for filtering and searching logs based on various criteria, such as function names, timestamps, log levels, and specific keywords. Effective use of these tools allows developers to quickly locate relevant log entries.

- Correlation IDs: Implementing correlation IDs (e.g., a unique request ID) allows developers to trace the execution flow across multiple functions and services. This is especially useful for debugging complex distributed systems.

- Error Tracking and Exception Handling: Integrating error tracking tools, such as Sentry or Rollbar, can automatically capture and report exceptions that occur within serverless functions. These tools provide detailed information about the error, including the stack trace and context.

- Log Level Management: Using different log levels (e.g., DEBUG, INFO, WARNING, ERROR) allows developers to control the verbosity of the logs. DEBUG logs can be used during development and testing, while INFO logs can be used for general monitoring in production. ERROR logs should be used to report critical issues.

- Real-Time Log Streaming: Some cloud providers offer real-time log streaming capabilities, which allows developers to monitor logs as they are generated. This can be helpful for debugging live issues and monitoring the behavior of the application in real-time.

- Log Analysis Tools: Use log analysis tools, such as the cloud provider’s built-in tools or third-party solutions like Splunk or the ELK stack (Elasticsearch, Logstash, Kibana), to perform more advanced analysis. These tools can provide visualizations, identify patterns, and generate reports.

- Reproducing Issues: When a bug is identified, carefully review the logs and attempt to reproduce the issue in a development or staging environment. This process involves examining the input data, function configurations, and other relevant factors that could have contributed to the error.
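
Structured logging, log levels, and correlation IDs can be combined using only Python’s standard `logging` module, as in the sketch below. The JSON field names, the `orders` logger name, and the `x-correlation-id` header are assumptions chosen for the example, not a provider convention.

```python
import json
import logging
import uuid


class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON object."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
            # Present only when supplied through `extra` or a LoggerAdapter.
            "correlation_id": getattr(record, "correlation_id", None),
        }
        return json.dumps(entry)


def build_logger(name: str = "orders", level: int = logging.INFO) -> logging.Logger:
    """Create (or reuse) a logger that writes JSON lines to stderr."""
    logger = logging.getLogger(name)
    logger.setLevel(level)
    if not logger.handlers:  # avoid duplicate handlers on warm invocations
        stream = logging.StreamHandler()
        stream.setFormatter(JsonFormatter())
        logger.addHandler(stream)
    return logger


def handler(event: dict, context=None) -> dict:
    """Hypothetical handler: reuse the caller's correlation ID or create one."""
    headers = event.get("headers") or {}
    correlation_id = headers.get("x-correlation-id") or str(uuid.uuid4())
    log = logging.LoggerAdapter(build_logger(), {"correlation_id": correlation_id})
    log.info("order received")
    # Returning the ID lets the caller pass it on to downstream functions.
    return {"correlation_id": correlation_id}
```

Because every entry is a JSON object carrying the same `correlation_id`, a single log query can reconstruct one request’s path across several functions.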

Security Considerations

Serverless architectures, while offering significant advantages in terms of scalability and cost-effectiveness, introduce unique security challenges. The distributed nature of serverless applications, coupled with the reliance on third-party services and managed infrastructure, expands the attack surface. A thorough understanding of these risks and the implementation of robust security measures are crucial for protecting serverless applications from vulnerabilities and potential breaches.

Identifying Security Risks

Serverless applications are susceptible to various security risks that can compromise data integrity, confidentiality, and availability. Understanding these risks is the first step towards building a secure serverless environment.

- Function Code Vulnerabilities: Malicious code or vulnerabilities within the serverless functions themselves can be exploited. This includes injection flaws (e.g., SQL injection, command injection), cross-site scripting (XSS), and insecure deserialization.

- Dependency Management Issues: Serverless functions often rely on external libraries and dependencies. Vulnerabilities in these dependencies can be exploited if not properly managed and updated. This includes the use of outdated or compromised libraries.

- Authentication and Authorization Flaws: Improperly configured authentication and authorization mechanisms can lead to unauthorized access to resources and data. This includes weak password policies, lack of multi-factor authentication (MFA), and insufficient role-based access control (RBAC).

- Event Injection Attacks: Malicious actors can inject malicious events into the event streams that trigger serverless functions. This can lead to unauthorized execution of code or data manipulation.

- Data Exposure and Leakage: Sensitive data can be exposed through misconfigured storage buckets, inadequate encryption, or improper handling of secrets. This can include API keys, database credentials, and personally identifiable information (PII).

- Denial-of-Service (DoS) Attacks: Serverless functions can be targeted by DoS attacks, where malicious actors attempt to overwhelm the functions with requests, making them unavailable. This can be achieved by exploiting the scaling capabilities of serverless platforms.

- Configuration Errors: Misconfigurations in the serverless platform or related services can create security vulnerabilities. This includes overly permissive IAM roles, open access to storage buckets, and insecure network configurations.

- Supply Chain Attacks: Compromised build processes or third-party integrations can introduce malicious code into the serverless application.
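
As one concrete instance of the function code vulnerabilities listed above, the sketch below contrasts a query built by string interpolation with a parameterized query, using Python’s built-in `sqlite3` module and an in-memory database. The `users` table and the function names are hypothetical; the same principle applies to whichever database driver a function actually uses.

```python
import sqlite3


def demo_connection() -> sqlite3.Connection:
    """Create an in-memory database with two sample users."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])
    return conn


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list:
    """Vulnerable: the input is spliced into the SQL text itself."""
    query = f"SELECT id, name FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str) -> list:
    """Parameterized: the driver treats the input purely as data."""
    return conn.execute(
        "SELECT id, name FROM users WHERE name = ?", (name,)
    ).fetchall()
```

With the input `x' OR '1'='1`, the unsafe version returns every row in the table, while the parameterized version returns nothing because no user has that literal name.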

Best Practices for Securing Serverless Functions

Implementing a layered security approach is essential for protecting serverless applications. This involves a combination of preventative measures, detection mechanisms, and response strategies.

- Authentication: Implement robust authentication mechanisms to verify the identity of users and services accessing serverless functions.

  - Use API Keys: Generate and manage API keys for applications and services to access the API endpoints.

  - Utilize JSON Web Tokens (JWTs): Use JWTs to verify user identities and carry session claims between requests.

  - Integrate with Identity Providers (IdPs): Leverage external identity providers (e.g., Amazon Cognito, Google Identity Platform, Microsoft Entra ID, formerly Azure Active Directory) for user authentication and authorization.

  - Enforce Multi-Factor Authentication (MFA): Enable MFA for all users with access to sensitive resources.

- Authorization: Implement fine-grained authorization controls to limit access to resources based on user roles and permissions.

  - Implement Role-Based Access Control (RBAC): Define roles and permissions to restrict access to specific resources and actions.

  - Utilize IAM Policies: Configure IAM policies with the principle of least privilege to grant only the necessary permissions to serverless functions and related resources.

  - Employ Attribute-Based Access Control (ABAC): Use ABAC for more dynamic and context-aware authorization based on attributes.

- Data Encryption: Protect sensitive data at rest and in transit using encryption.

  - Encrypt Data at Rest: Encrypt data stored in databases, storage buckets, and other data stores using encryption keys managed by a key management service (KMS).

  - Encrypt Data in Transit: Use HTTPS/TLS for all communication between clients and serverless functions, and between serverless functions and other services.

  - Use KMS for Key Management: Leverage a KMS (e.g., AWS KMS) to manage encryption keys securely.

- Code Security: Ensure the security of the function code itself.

  - Perform Static Code Analysis: Use static code analysis tools to identify potential vulnerabilities in the code.

  - Implement Dynamic Application Security Testing (DAST): Use DAST tools to identify vulnerabilities in running applications.

  - Conduct Regular Security Audits: Conduct regular security audits of the code to identify and address vulnerabilities.

  - Use Secure Coding Practices: Follow secure coding practices to prevent common vulnerabilities such as SQL injection and cross-site scripting.

  - Implement Input Validation: Validate all inputs to prevent injection attacks and other vulnerabilities.

- Dependency Management: Secure the dependencies used by the functions.

  - Use a Dependency Management Tool: Employ a dependency management tool (e.g., npm, pip) to manage dependencies.

  - Regularly Update Dependencies: Regularly update dependencies to the latest versions to patch security vulnerabilities.

  - Scan Dependencies for Vulnerabilities: Use vulnerability scanners to identify and remediate vulnerabilities in dependencies.

- Monitoring and Logging: Implement robust monitoring and logging to detect and respond to security incidents.

  - Implement Comprehensive Logging: Implement detailed logging for all function invocations, API requests, and security events.

  - Monitor Logs for Anomalies: Monitor logs for unusual activity and potential security threats.

  - Implement Security Information and Event Management (SIEM): Integrate logs with a SIEM system to centralize security monitoring and alerting.

- Network Security: Secure the network environment in which serverless functions operate.

  - Use VPCs and Subnets: Deploy serverless functions within a Virtual Private Cloud (VPC) and utilize subnets to isolate resources.

  - Implement Network Access Control Lists (ACLs): Use network ACLs to control network traffic and restrict access to serverless functions.

  - Use Web Application Firewalls (WAFs): Deploy a WAF to protect serverless functions from common web application attacks.

- Secrets Management: Securely manage secrets such as API keys, database credentials, and other sensitive information.

  - Use a Secrets Management Service: Use a secrets management service (e.g., AWS Secrets Manager, HashiCorp Vault) to store and manage secrets securely.

  - Avoid Hardcoding Secrets: Avoid hardcoding secrets in the code.

  - Rotate Secrets Regularly: Rotate secrets regularly to minimize the impact of a potential compromise.
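To make the JWT guidance above more concrete, the following is a minimal sketch of what token verification inside a function might look like. It assumes HS256 tokens signed with a shared secret, and the names `sign_hs256` and `verify_hs256` are illustrative rather than part of any library. It uses only the Python standard library so the checks are visible; a real deployment would more likely rely on a maintained JWT library and load the secret from a secrets management service instead of passing it around directly.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional


def _b64url_decode(segment: str) -> bytes:
    # JWT segments are base64url without padding; restore padding before decoding.
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def _b64url_encode(raw: bytes) -> str:
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode("ascii")


def sign_hs256(claims: dict, secret: bytes) -> str:
    """Create a signed token. Included only to produce input for the verifier."""
    header = _b64url_encode(json.dumps({"alg": "HS256", "typ": "JWT"}).encode())
    payload = _b64url_encode(json.dumps(claims).encode())
    signature = hmac.new(secret, f"{header}.{payload}".encode(), hashlib.sha256).digest()
    return f"{header}.{payload}.{_b64url_encode(signature)}"


def verify_hs256(token: str, secret: bytes, now: Optional[float] = None) -> dict:
    """Return the claims if signature and expiry are valid; raise ValueError otherwise."""
    try:
        header_b64, payload_b64, signature_b64 = token.split(".")
        header = json.loads(_b64url_decode(header_b64))
        claims = json.loads(_b64url_decode(payload_b64))
        signature = _b64url_decode(signature_b64)
    except ValueError as exc:  # wrong segment count, bad base64, or bad JSON
        raise ValueError("malformed token") from exc

    if not isinstance(header, dict) or not isinstance(claims, dict):
        raise ValueError("malformed token")
    # Pin the algorithm instead of trusting whatever the token header claims.
    if header.get("alg") != "HS256":
        raise ValueError("unexpected algorithm")

    expected = hmac.new(secret, f"{header_b64}.{payload_b64}".encode(), hashlib.sha256).digest()
    # Constant-time comparison avoids leaking signature bytes through timing.
    if not hmac.compare_digest(signature, expected):
        raise ValueError("invalid signature")

    current_time = time.time() if now is None else now
    expiry = claims.get("exp")
    if not isinstance(expiry, (int, float)) or expiry <= current_time:
        raise ValueError("token expired or missing exp claim")
    return claims
```

A function handler would call `verify_hs256` before doing any work and return an HTTP 401 response when it raises, so that unauthenticated requests never reach business logic.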

Checklist for Implementing Security Measures

A security checklist can help ensure that all necessary security measures are implemented and maintained in a serverless application.

- Authentication and Authorization:

  - [ ] Implement authentication mechanisms (API keys, JWTs, IdPs).

  - [ ] Enforce MFA for all users with access to sensitive resources.

  - [ ] Implement RBAC or ABAC.

  - [ ] Define and enforce least privilege for IAM roles.

- Data Encryption:

  - [ ] Encrypt data at rest using KMS.

  - [ ] Enforce encryption in transit (HTTPS/TLS).

- Code Security:

  - [ ] Perform static code analysis.

  - [ ] Conduct regular security audits.

  - [ ] Implement input validation.

  - [ ] Use secure coding practices.

  - [ ] Implement DAST.

- Dependency Management:

  - [ ] Use a dependency management tool.

  - [ ] Regularly update dependencies.

  - [ ] Scan dependencies for vulnerabilities.

- Monitoring and Logging:

  - [ ] Implement comprehensive logging.

  - [ ] Monitor logs for anomalies.

  - [ ] Integrate with a SIEM system.

- Network Security:

  - [ ] Deploy functions within a VPC and utilize subnets.

  - [ ] Implement network ACLs.

  - [ ] Deploy a WAF.

- Secrets Management:

  - [ ] Use a secrets management service.

  - [ ] Avoid hardcoding secrets.

  - [ ] Rotate secrets regularly.
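Some checklist items lend themselves to automation. As one possible illustration, the sketch below flags overly broad `Allow` statements in an IAM-style policy document, which could support the least-privilege item. The function name `find_wildcards`, the table name, and the account ID are hypothetical, and the check is deliberately simple: it only looks for `*` resources and `*` or `service:*` actions, so it complements rather than replaces a proper policy analysis tool.

```python
from typing import List


def find_wildcards(policy: dict) -> List[str]:
    """Return a human-readable finding for each overly broad Allow entry."""
    findings = []
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # a lone statement may appear without a list
        statements = [statements]

    for index, statement in enumerate(statements):
        if statement.get("Effect") != "Allow":
            continue  # Deny statements with wildcards are restrictive, not risky
        for field in ("Action", "Resource"):
            values = statement.get(field, [])
            if isinstance(values, str):  # both fields accept a string or a list
                values = [values]
            for value in values:
                too_broad = value == "*" or (field == "Action" and value.endswith(":*"))
                if too_broad:
                    findings.append(f"Statement {index}: {field} '{value}' is overly broad")
    return findings


if __name__ == "__main__":
    # Hypothetical policy: the first statement is scoped, the second is not.
    example_policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": "dynamodb:GetItem",
                "Resource": "arn:aws:dynamodb:us-east-1:123456789012:table/orders",
            },
            {"Effect": "Allow", "Action": "s3:*", "Resource": "*"},
        ],
    }
    for finding in find_wildcards(example_policy):
        print(finding)
```

Running a check like this in the CI/CD pipeline would let a build fail before an overly permissive role reaches production.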

Deployment and CI/CD

Deploying serverless applications requires a shift in mindset from traditional infrastructure management. Serverless platforms abstract away the underlying infrastructure, allowing developers to focus on code and functionality. This shift necessitates new approaches to deployment and continuous integration/continuous deployment (CI/CD) to ensure efficient and reliable application delivery.

Deployment Process for Serverless Applications

The deployment process for serverless applications typically involves packaging code, configuring resources, and deploying to the chosen serverless platform (e.g., AWS Lambda, Azure Functions, Google Cloud Functions). This process is streamlined and automated to a significant degree. The steps involved generally include:

- Code Packaging: The application code, along with any dependencies, is packaged into a deployment artifact. This artifact format varies depending on the platform. For instance, AWS Lambda functions are typically packaged as .zip archives or container images, while Azure Functions support various package formats.

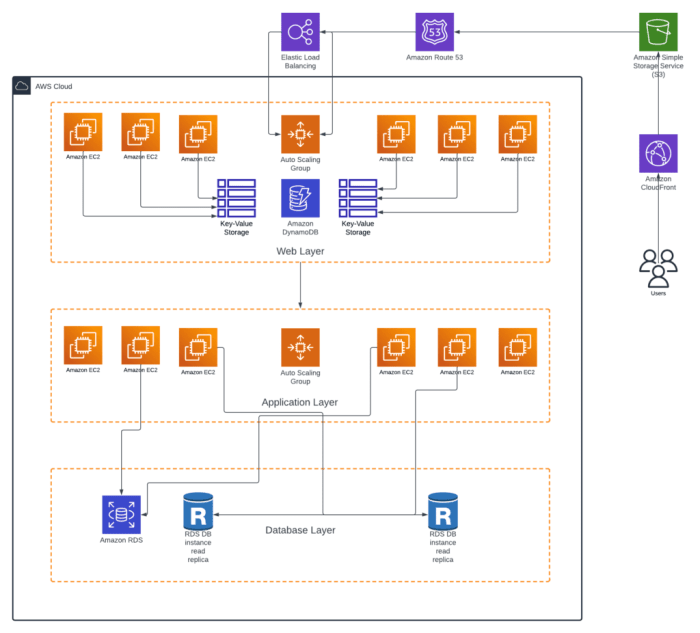

- Resource Configuration: Serverless applications rely on various resources, such as functions, APIs, databases, and storage buckets. Configuration involves defining these resources, their properties (e.g., memory allocation for Lambda functions, API gateway endpoints), and their relationships. This configuration is often managed through Infrastructure as Code (IaC) tools.

- Deployment Execution: The deployment process leverages platform-specific tools or SDKs to upload the deployment artifact and configure the defined resources. This step might involve creating or updating functions, setting up API gateways, and configuring event triggers.

- Testing and Validation: After deployment, it is crucial to validate the application’s functionality. This involves running tests (unit, integration, and end-to-end) to ensure that the deployed code behaves as expected. Validation may also include monitoring and logging to check for errors and performance issues.
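The code packaging step above can be sketched with the standard library alone. This example assumes a Python function whose source and vendored dependencies live in a single directory; `package_function` and the paths are illustrative names, and in practice a framework or IaC tool usually performs this step.

```python
import zipfile
from pathlib import Path
from typing import List


def package_function(source_dir: str, artifact_path: str) -> List[str]:
    """Zip everything under source_dir and return the archive member names."""
    source = Path(source_dir)
    artifact = Path(artifact_path).resolve()
    included = []

    with zipfile.ZipFile(artifact_path, "w", zipfile.ZIP_DEFLATED) as archive:
        for path in sorted(source.rglob("*")):
            relative = path.relative_to(source)
            if path.is_dir():
                continue
            # Skip bytecode caches; they only add size to the artifact.
            if "__pycache__" in relative.parts or path.suffix == ".pyc":
                continue
            # Never add the archive to itself if it is written inside source_dir.
            if path.resolve() == artifact:
                continue
            # Store paths relative to the source root so the handler module
            # ends up at the top level of the archive.
            archive.write(path, relative.as_posix())
            included.append(relative.as_posix())
    return included


# Example call with hypothetical paths:
# package_function("build/my_function", "dist/my_function.zip")
```

Keeping the handler at the archive root matters because the platform locates the entry point by module path, so an extra wrapping directory inside the archive is a common cause of import errors after deployment.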

Setting up a CI/CD Pipeline for Automated Deployments

Automating the deployment process through a CI/CD pipeline is critical for serverless applications. This ensures that code changes are automatically built, tested, and deployed, reducing manual effort and improving release velocity. A typical CI/CD pipeline consists of several stages. These stages are:

- Source Code Management (SCM): The pipeline starts with a code repository (e.g., Git) where the application code is stored. When a code change is pushed to the repository (e.g., a new feature branch is merged), the CI/CD pipeline is triggered.

- Build Stage: In the build stage, the code is compiled (if necessary), dependencies are installed, and the deployment artifact is created. This step ensures that the code is ready for deployment. For example, a Node.js application might use npm to install dependencies and then bundle the code using a tool like Webpack.

- Testing Stage: Automated tests (unit tests, integration tests, and potentially end-to-end tests) are executed to verify the code’s functionality. The testing stage is crucial for catching bugs early in the development cycle. Test results are collected and reported.

- Deployment Stage: If the tests pass, the code is deployed to the serverless platform. This stage uses platform-specific tools or APIs to deploy the code and configure the necessary resources. The deployment stage might involve deploying to a staging environment first, before deploying to production.

- Monitoring and Validation Stage: After deployment, the pipeline monitors the application’s performance and health. This stage might involve running automated health checks, monitoring logs, and sending alerts if any issues are detected.

Services such as AWS CodePipeline, Azure DevOps, and Google Cloud Build provide managed CI/CD that integrates with their respective serverless platforms. They automate the build, test, and deployment steps, allowing developers to focus on writing code.

For instance, a simple pipeline using AWS CodePipeline might trigger on a push to a GitHub repository, build a Lambda function using AWS CodeBuild, run tests, and deploy the function using AWS CodeDeploy.
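The essential behavior of the stages described above is that they run in order and a failure stops everything after it. The sketch below models only that control flow; `run_pipeline` and the stage callables are hypothetical stand-ins, not the API of any CI/CD service.

```python
from typing import Callable, List, Tuple

Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> List[Tuple[str, bool]]:
    """Run stages in order and stop at the first failure.

    Each stage is a (name, callable) pair; the callable returns True on success.
    The result lists every stage that actually ran together with its outcome.
    """
    results = []
    for name, step in stages:
        try:
            passed = bool(step())
        except Exception:
            passed = False  # an unhandled error counts as a failed stage
        results.append((name, passed))
        if not passed:
            break  # later stages, such as deploy, never run after a failure
    return results


if __name__ == "__main__":
    # Stand-in stages: a real pipeline would invoke build tools and test runners.
    outcome = run_pipeline(
        [
            ("build", lambda: True),
            ("test", lambda: False),  # simulate a failing test suite
            ("deploy", lambda: True),
        ]
    )
    print(outcome)
```

Because the deploy stage is only reached when every earlier stage passes, a failing test suite blocks the release without any manual intervention.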

Rolling Back Deployments in Case of Errors

Errors can occur during or after a deployment. A robust rollback strategy is crucial to minimize downtime and ensure application stability. Key rollback strategies include:

- Blue/Green Deployments: Deploy the new version of the application (green) alongside the existing version (blue). After testing the green environment, switch traffic to it. If issues arise, quickly switch traffic back to the blue environment. This approach minimizes downtime.

- Canary Deployments: Gradually introduce the new version of the application to a small subset of users (the “canary”). Monitor the canary’s performance and behavior. If the canary performs as expected, gradually increase the traffic to the new version. If issues arise, redirect the traffic from the canary to the original version.

- Version Control and Rollback: Utilize version control systems (e.g., Git) to track changes and maintain previous versions of the application. If a deployment fails, revert to a previous, stable version of the code and redeploy.

- Automated Rollback Triggers: Configure the CI/CD pipeline to automatically roll back to a previous version if specific errors or performance issues are detected (e.g., increased error rates, performance degradation).

- Immutable Infrastructure: Treat infrastructure as immutable. Instead of updating existing resources, deploy new resources with the updated code and then switch traffic. This approach ensures a clean and reliable deployment.
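Canary deployments and automated rollback triggers combine naturally, and the decision logic can be sketched in a few lines. The example assumes the pipeline observes one error-rate measurement per traffic step; `run_canary`, the 1% threshold, and the 25% step size are illustrative choices rather than platform defaults.

```python
from typing import Iterable, Tuple


def run_canary(
    observed_error_rates: Iterable[float],
    max_error_rate: float = 0.01,
    step: int = 25,
) -> Tuple[str, int]:
    """Shift traffic to the new version in steps, rolling back on high error rates.

    Each observation is the error rate measured at the current traffic weight.
    Returns the final state and the percentage of traffic on the new version.
    """
    weight = step  # start by sending one step of traffic to the canary
    for error_rate in observed_error_rates:
        if error_rate > max_error_rate:
            return ("rolled_back", 0)  # send all traffic back to the old version
        if weight >= 100:
            return ("promoted", 100)  # healthy at full traffic: rollout complete
        weight = min(100, weight + step)
    return ("in_progress", weight)  # ran out of observations before finishing


if __name__ == "__main__":
    print(run_canary([0.0, 0.002, 0.001, 0.0, 0.0]))
    print(run_canary([0.0, 0.05]))
```

In a real pipeline the observations would come from monitoring metrics, and the returned weight would be applied through the platform's traffic-shifting mechanism, such as weighted routing between function versions.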

Final Review

In conclusion, refactoring applications to be serverless is a complex but rewarding endeavor. By carefully analyzing existing architectures, selecting appropriate serverless services, and adopting best practices for design, testing, and deployment, organizations can realize significant improvements in scalability, cost-effectiveness, and operational efficiency. The journey requires a strategic approach, a deep understanding of serverless principles, and a commitment to continuous learning.

Embracing serverless is not just about adopting a new technology; it’s about transforming the way we build, deploy, and manage applications, ultimately paving the way for more innovative and resilient software solutions.

FAQ Corner

What are the primary cost benefits of serverless architecture?

Serverless architectures typically offer cost savings through pay-per-use pricing models, eliminating the need to pay for idle resources. This results in reduced infrastructure costs and improved resource utilization.

How does serverless impact application scalability?

Serverless platforms automatically scale resources based on demand, up to the concurrency limits and quotas the provider enforces. This removes most manual scaling work and lets applications absorb fluctuating workloads, although downstream services such as databases must be able to keep pace.

What are the key considerations when choosing a serverless platform?

Factors to consider include pricing, supported programming languages, available services, integration capabilities, and the platform’s ecosystem and community support.

How do I handle state and session management in a serverless application?

Serverless applications often use external services like databases (e.g., DynamoDB, Cloud Firestore) or caching solutions (e.g., Redis, Memcached) to manage state and session data. Alternatively, JSON Web Tokens (JWTs) can be used for stateless authentication and authorization.

What are the main challenges in debugging serverless applications?

Debugging can be challenging due to the distributed nature of serverless functions. Effective logging, monitoring, and tracing are crucial. Tools like distributed tracing systems and cloud provider-specific debugging tools are helpful.