Serverless computing with AWS Lambda can seem daunting at first, yet it lets you run code that scales automatically without managing servers. This guide walks through building your first Python Lambda function, from setting up your development environment to deploying and managing your code. We’ll explore function structure, event triggers, and interaction with other AWS services, providing a foundation for your serverless work.

This article covers the essential steps, including installing the AWS CLI, configuring your credentials, and writing a simple “Hello, World!” function. We’ll delve into configuring your function in the AWS console, understanding event sources like API Gateway, and handling input and output data. Furthermore, we’ll cover testing and debugging techniques, integrating with services such as S3 and DynamoDB, and best practices for performance, security, and error handling.

This comprehensive approach aims to equip you with the knowledge and skills to confidently build and deploy your first Lambda function.

Setting Up Your Development Environment

To effectively develop and deploy AWS Lambda functions in Python, a well-configured development environment is essential. This involves installing and configuring the necessary tools and libraries, and ensuring secure access to your AWS resources. The following sections detail the steps involved in setting up this environment, focusing on the AWS CLI, Python installation, the `boto3` library, and secure credential management.

Installing the AWS CLI

The AWS Command Line Interface (CLI) is a unified tool to manage your AWS services. It allows you to interact with AWS services from your terminal. To install the AWS CLI:

- Using `pip`: Python’s package installer, `pip`, is a quick way to install version 1 of the AWS CLI (version 2 is distributed through AWS’s platform-specific installers rather than `pip`). Open your terminal and execute the following command:

```bash
pip install awscli --upgrade --user
```

This command installs or upgrades the AWS CLI and places the executable in a user-specific directory, avoiding potential conflicts with system-level installations. The `--user` flag ensures the installation is specific to the current user.

- Verification: After installation, verify the AWS CLI is installed correctly by checking its version:

```bash
aws --version
```

The output should display the installed version of the AWS CLI.

Installing Python and the `boto3` Library

Python is the programming language used for writing Lambda functions in this guide. The `boto3` library provides the Python SDK for interacting with AWS services. To install Python and `boto3`:

- Python Installation: If Python isn’t already installed, download and install the latest stable version from the official Python website (python.org). Ensure that Python and `pip` are added to your system’s PATH environment variable during installation.

- Installing `boto3`: Use `pip` to install the `boto3` library:

```bash
pip install boto3
```

This command installs `boto3` and its dependencies.

- Verification: Verify the installation by importing `boto3` in a Python interpreter or script:

```bash
python -c "import boto3; print(boto3.__version__)"
```

This command imports `boto3` and prints its version if the installation was successful.

Configuring Your AWS Credentials Securely

Securely configuring your AWS credentials is paramount for protecting your AWS resources. Using IAM roles is the recommended best practice for Lambda functions. This avoids storing long-term credentials directly in your code. To configure AWS credentials securely using IAM roles:

- IAM Role Creation: Create an IAM role with the necessary permissions for your Lambda function. This role should have a trust relationship that allows the Lambda service to assume it. The permissions granted to the role define what resources your function can access (e.g., access to an S3 bucket, DynamoDB table, etc.).

- Attaching the Role to the Lambda Function: When creating or updating your Lambda function, specify the IAM role you created.

- Using the `boto3` Library with IAM Roles: The `boto3` library automatically detects and uses the IAM role assigned to your Lambda function. You do not need to explicitly configure credentials within your function code.

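```python
import boto3

# No credentials are passed explicitly; inside Lambda, boto3 picks up
# the temporary credentials of the function's execution role.
s3 = boto3.client('s3')
response = s3.list_buckets()
print(response)
```

This code snippet shows how to use `boto3` to interact with S3. The `boto3.client('s3')` call implicitly uses the IAM role associated with the Lambda function.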

- Avoiding Hardcoded Credentials: Never hardcode AWS access keys or secret keys in your function code, and never supply long-term keys to a production function through environment variables. IAM roles provide a more secure and manageable approach. For local development and testing only, you can configure credentials using the AWS CLI with the `aws configure` command, or by setting the `AWS_ACCESS_KEY_ID` and `AWS_SECRET_ACCESS_KEY` environment variables.

Creating Your First Lambda Function in Python

This section details the core mechanics of constructing a Python-based AWS Lambda function. Understanding the function’s structure, particularly the role of the handler, is crucial for effective serverless application development. The subsequent content provides a “Hello, World!” example and demonstrates the integration of Python libraries.

Code Structure

The fundamental architecture of a Python Lambda function revolves around a specific structure, primarily defined by the handler function. This function acts as the entry point for the Lambda function’s execution.

- Handler Function: The handler function is the designated entry point for your Lambda function; it is the function that Lambda executes when triggered. It receives an event object and a context object as arguments. The event object contains the input data provided to the function, while the context object provides runtime information, such as the function’s name, memory limit, and remaining execution time.

- Function Logic: Within the handler function, the core logic of your application resides. This can include any operations you want the function to perform, such as processing data, interacting with other AWS services (e.g., S3, DynamoDB), or returning a response.

- Imports: Lambda functions can utilize Python libraries to extend their functionality. Imports are placed at the top of your code, making the required modules available to the function. Libraries can be either standard Python libraries or third-party libraries packaged with your deployment.

- Deployment Package: The deployment package encapsulates your function code, any dependencies (libraries), and configuration files. This package is uploaded to AWS Lambda.
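
To make the handler’s two arguments concrete, here is a minimal sketch that reads a few values from the context object. The `FakeContext` class is a hypothetical stand-in used only to run the handler locally; the attribute and method names it mimics (`function_name`, `memory_limit_in_mb`, `aws_request_id`, `get_remaining_time_in_millis()`) are the ones exposed by the Python context object in Lambda.

```python
class FakeContext:
    """Hypothetical stand-in for the Lambda context object, for local runs only."""
    function_name = "demo-function"
    memory_limit_in_mb = 128
    aws_request_id = "local-request-id"

    def get_remaining_time_in_millis(self):
        return 3000  # pretend three seconds remain


def lambda_handler(event, context):
    # Runtime information comes from the context object, input data from the event.
    return {
        "function": context.function_name,
        "memory_mb": context.memory_limit_in_mb,
        "remaining_ms": context.get_remaining_time_in_millis(),
        "event_keys": sorted(event.keys()),
    }


result = lambda_handler({"b": 1, "a": 2}, FakeContext())
print(result)
```

In a deployed function, Lambda supplies the real context object, so the stand-in class would not be part of the deployment package.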

“Hello, World!” Function

A simple “Hello, World!” function demonstrates the core structure of a Lambda function. This basic example clarifies the handler’s role and the fundamental operation of a Lambda function.

```python
def lambda_handler(event, context):
    """
    This is the handler function.
    """
    return {
        'statusCode': 200,
        'body': 'Hello, World!'
    }
```

In this example:

- `lambda_handler` is the handler function. It takes two arguments: `event` and `context`. In this simple example, the event is not used. The context object provides information about the execution environment.

- The function returns a dictionary. The `statusCode` indicates the HTTP status code (200 for success). The `body` contains the response message.

Importing and Using Python Libraries

Lambda functions can incorporate external Python libraries to expand their capabilities. The inclusion of libraries involves importing the necessary modules and using their functions within the handler.

```python
import json
import datetime

def lambda_handler(event, context):
    """
    This function demonstrates importing and using libraries.
    """
    current_time = datetime.datetime.now().isoformat()
    message = {
        'message': 'Hello from Lambda!',
        'time': current_time
    }
    return {
        'statusCode': 200,
        'body': json.dumps(message)
    }
```

In this extended example:

- `import json` and `import datetime` import the `json` and `datetime` modules, respectively.

- The code uses `datetime.datetime.now().isoformat()` to get the current time in ISO format.

- `json.dumps(message)` converts a Python dictionary into a JSON string for the response body. This is a common practice when returning data from a Lambda function.

Configuring the Lambda Function in the AWS Console

Configuring a Lambda function in the AWS Console is a crucial step in deploying and managing your serverless code. This process involves defining the function’s basic attributes, specifying its execution environment, and providing the code that the function will execute. Proper configuration ensures the function operates as intended, receives the necessary resources, and interacts correctly with other AWS services.

Creating a Lambda Function in the AWS Console

The creation of a Lambda function within the AWS Console initiates the deployment process. This involves several sequential steps, each contributing to the function’s operational characteristics.

- Accessing the AWS Lambda Service: Begin by logging into the AWS Management Console and navigating to the Lambda service. This is typically found under the “Compute” category or by searching for “Lambda” in the search bar.

- Initiating Function Creation: Once in the Lambda console, click the “Create function” button. This action prompts the system to present options for function creation.

- Selecting Function Creation Method: You will be presented with several options for creating a function. The primary choices include:

- Author from scratch: This option allows you to create a new function from scratch, defining all configurations manually.

- Use a blueprint: Blueprints offer pre-configured function templates for common use cases, such as processing S3 events or responding to API Gateway requests. This is a good starting point if you want a function with a predefined structure.

- Browse serverless app repository: This option allows you to deploy serverless applications created by the AWS community or third-party providers.

Choose “Author from scratch” to create a function from the ground up for this example.

- Configuring Basic Function Details: Provide the essential information for your function:

- Function name: Assign a unique name to your Lambda function. This name is used to identify and reference the function within AWS. It should be descriptive of the function’s purpose.

- Runtime: Select the programming language runtime for your function (e.g., Python, Node.js, Java). This determines the environment in which your code will execute.

- Architecture: Choose the processor architecture (e.g., x86_64 or arm64). The selection impacts performance and cost. The `arm64` architecture, based on the AWS Graviton processor, often provides better performance and lower cost for suitable workloads.

- Permissions: Configure the execution role, which grants the function permissions to access other AWS resources. You can either create a new role with basic Lambda permissions or choose an existing role with the necessary access rights. The execution role is critical for the function to interact with other AWS services.

- Finalizing Function Creation: After configuring the basic details, click the “Create function” button to initiate the function creation process. AWS will then provision the necessary resources and prepare the function for code upload and configuration.

Configuring Function’s Basic Settings

Configuring the function’s basic settings involves refining the function’s behavior and resource allocation. These settings are fundamental to the function’s performance, security, and operational characteristics.

- Function Overview: The function overview page provides a summary of the function’s configuration, including its name, runtime, and associated resources.

- Configuration Tab: Navigate to the “Configuration” tab to access the function’s settings.

- General configuration: This section allows you to modify the function’s basic settings. These settings include the function name, description, and the amount of memory allocated to the function. Adjusting the memory allocation can impact the function’s performance, and subsequently, its cost.

- Environment variables: You can define environment variables that your function can access during runtime. Environment variables are useful for storing configuration parameters, API keys, or other sensitive information.

- Tags: Apply tags to your function for organization and cost allocation. Tags are key-value pairs that help categorize and manage your AWS resources.

- Code source: In this section, you’ll manage the code that the function will execute.

- Permissions: This section displays the execution role associated with the function and the permissions granted to it.

- Monitoring: The “Monitoring” tab provides metrics and logs for your function. You can monitor metrics such as invocations, duration, errors, and throttles. Logs are essential for troubleshooting and understanding the function’s behavior.

- Concurrency: You can configure the function’s concurrency settings to control the number of concurrent executions. Limiting concurrency can help prevent your function from consuming excessive resources.
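
As an illustration of the environment variables setting described above, the following sketch reads configuration through `os.environ`. The variable names `TABLE_NAME` and `LOG_LEVEL` and their defaults are assumptions chosen for the example, not values that Lambda defines.

```python
import os


def load_settings(environ=os.environ):
    """Read configuration from environment variables, falling back to defaults."""
    return {
        # TABLE_NAME and LOG_LEVEL are illustrative names, not Lambda built-ins.
        "table_name": environ.get("TABLE_NAME", "example-table"),
        "log_level": environ.get("LOG_LEVEL", "INFO").upper(),
    }


# Read once at import time so that warm invocations reuse the parsed settings.
SETTINGS = load_settings()


def lambda_handler(event, context):
    return {"statusCode": 200, "body": SETTINGS["table_name"]}
```

Passing the mapping as a parameter keeps `load_settings` easy to exercise locally with a plain dictionary instead of the real process environment.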

Uploading Python Code to the Lambda Function

Uploading your Python code to the Lambda function is a critical step, enabling the function to execute your desired logic. There are several methods for uploading the code.

- Code Entry Point: Navigate to the “Code” tab within the function configuration. This is where you manage the code source.

- Code Upload Options:

- Inline Code Editor: The AWS Console provides an inline code editor where you can directly write or paste your Python code. This is suitable for small functions or quick edits.

- Upload a .zip file: For more complex projects, you can package your Python code and dependencies into a `.zip` archive and upload it to the function. This method allows for the inclusion of external libraries and a more structured code organization.

- Upload from Amazon S3: You can upload your code to an Amazon S3 bucket and then specify the S3 bucket and object key to associate with your Lambda function. This is suitable for larger codebases or when you need to update the code frequently.

- Handler Configuration: In the “Code” section, you must specify the handler, which is the function within your Python code that AWS Lambda will invoke when the function is triggered. The handler typically follows the format `module_name.function_name`. For example, if your Python file is named `main.py` and the function you want to invoke is named `handler`, the handler would be `main.handler`.

- Saving the Configuration: After uploading the code and configuring the handler, save the changes. The Lambda function is now ready to be invoked.
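
The `.zip` upload option and the handler setting can be sketched together. The example below builds a small archive in memory with the standard `zipfile` module; the file name `main.py` and function name `handler` follow the example above, while the helper `build_deployment_zip` is a hypothetical name. File permissions are set to world-readable on the assumption that Lambda must be able to read every file in the package.

```python
import io
import zipfile

HANDLER_SOURCE = '''\
def handler(event, context):
    return {"statusCode": 200, "body": "Hello, World!"}
'''


def build_deployment_zip(files):
    """Return the bytes of a .zip archive built from a {path: source} mapping."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w") as archive:
        for path, source in files.items():
            info = zipfile.ZipInfo(path)
            info.compress_type = zipfile.ZIP_DEFLATED
            info.external_attr = 0o644 << 16  # readable by the Lambda runtime
            archive.writestr(info, source)
    return buffer.getvalue()


# main.py sits at the archive root, so the handler setting would be "main.handler".
package = build_deployment_zip({"main.py": HANDLER_SOURCE})

with zipfile.ZipFile(io.BytesIO(package)) as archive:
    print(archive.namelist())
```

The resulting bytes could be written to a file and uploaded through the console, or stored in S3 for the third upload option.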

Understanding Lambda Function Triggers and Events

Lambda functions are designed to be event-driven, reacting to specific occurrences within your AWS environment. These events, originating from various AWS services, trigger the execution of your code. Understanding these triggers and the structure of the events they generate is crucial for designing effective and responsive serverless applications. The ability to configure and manage these triggers is a core aspect of utilizing the power and flexibility of AWS Lambda.

Different Types of Event Sources

Lambda functions can be triggered by a wide array of event sources, allowing for diverse application architectures. These event sources represent various AWS services and custom applications that can initiate the execution of your Lambda code.

- API Gateway: API Gateway enables you to create, publish, maintain, monitor, and secure APIs at any scale. When a request is made to an API endpoint, API Gateway can trigger a Lambda function, processing the request and returning a response. This is fundamental for building web applications and backend services.

- Amazon S3: Amazon S3 is an object storage service. When an object is created, updated, or deleted in an S3 bucket, a Lambda function can be triggered. This is useful for tasks such as image processing, data transformation, and file archival.

- Amazon DynamoDB: DynamoDB is a NoSQL database service. Changes to items in a DynamoDB table, such as inserts, updates, or deletions, can trigger a Lambda function. This enables real-time data processing and event-driven architectures.

- Amazon SNS: Amazon SNS (Simple Notification Service) is a fully managed messaging service. When a message is published to an SNS topic, it can trigger a Lambda function. This is suitable for sending notifications, fan-out processing, and integrating with other services.

- Amazon SQS: Amazon SQS (Simple Queue Service) is a fully managed message queuing service. When a message is added to an SQS queue, it can trigger a Lambda function. This is beneficial for decoupling application components, handling asynchronous tasks, and managing workloads.

- Amazon CloudWatch Events (now EventBridge): CloudWatch Events allows you to create rules that match events from various AWS services. When a rule matches an event, it can trigger a Lambda function. This facilitates scheduled tasks, responding to service health changes, and creating event-driven workflows.

- Amazon Kinesis: Amazon Kinesis is a real-time data streaming service. When data is added to a Kinesis stream, it can trigger a Lambda function. This is useful for processing streaming data, such as logs, clickstream data, and sensor data.

- Amazon CloudFront: CloudFront is a content delivery network (CDN) service. You can trigger a Lambda function at the edge locations of CloudFront, for example, to modify HTTP requests or responses. This allows for customization and dynamic content delivery.

- AWS IoT: AWS IoT allows you to connect devices to the cloud. Data from IoT devices can trigger Lambda functions. This is essential for processing IoT data, such as sensor readings, and controlling devices.
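
Because each event source delivers a differently shaped payload, a function that serves several triggers sometimes needs to work out where an event came from. The sketch below is a best-effort heuristic, not an official API: it relies on the `eventSource` field found in S3, SQS, DynamoDB, and Kinesis records, the capitalized `EventSource` field used by SNS, and the presence of `httpMethod` or `detail-type` for API Gateway and EventBridge. The return labels `api-gateway`, `eventbridge`, and `unknown` are made up for this example.

```python
def identify_event_source(event):
    """Best-effort guess of which service produced a Lambda event."""
    records = event.get("Records")
    if records:
        first = records[0]
        # S3, SQS, DynamoDB and Kinesis use "eventSource"; SNS uses "EventSource".
        source = first.get("eventSource") or first.get("EventSource")
        if source:
            return source  # for example "aws:s3", "aws:sqs" or "aws:sns"
    if "httpMethod" in event:
        return "api-gateway"
    if "detail-type" in event and "source" in event:
        return "eventbridge"
    return "unknown"


print(identify_event_source({"Records": [{"eventSource": "aws:s3"}]}))
```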

Configuring the API Gateway Trigger

Configuring an API Gateway trigger for your Lambda function involves several steps, including creating an API Gateway API and configuring the integration with your Lambda function. This process allows your Lambda function to respond to HTTP requests.

- Create an API Gateway API: In the AWS console, navigate to the API Gateway service and create a new API. You can choose between REST API, HTTP API, or WebSocket API, depending on your needs. For basic HTTP requests, REST API or HTTP API are suitable.

- Define API Resources and Methods: Define the resources (e.g., `/hello`) and HTTP methods (e.g., `GET`, `POST`) that your API will support. Each method represents an endpoint that can be accessed.

- Integrate with Lambda Function: For each method, configure an integration with your Lambda function. This involves specifying the Lambda function’s ARN (Amazon Resource Name) and the payload format (e.g., JSON).

- Configure Request and Response Mappings (if needed): In more complex scenarios, you might need to configure request mappings to transform incoming API Gateway requests into a format that your Lambda function understands. Similarly, you might need to configure response mappings to transform the Lambda function’s output into a format that API Gateway can return to the client.

- Deploy the API: After configuring the API, deploy it to a stage (e.g., `prod`, `dev`). This generates an API endpoint URL that can be used to access your Lambda function.

- Test the API: Use a tool like `curl`, Postman, or a web browser to send requests to your API endpoint and verify that your Lambda function is executed and returns the expected response.

Event Payload Structure for API Gateway Trigger

When an API Gateway trigger invokes a Lambda function, it passes an event payload that contains information about the HTTP request. Understanding this payload is critical for processing the request and generating the appropriate response. The structure of the event payload varies depending on the API Gateway configuration, but a common structure includes:

- `resource`: The path of the resource that was requested (e.g., `/hello`).

- `path`: The full path of the requested resource, including any path parameters (e.g., `/hello/world`).

- `httpMethod`: The HTTP method used for the request (e.g., `GET`, `POST`).

- `headers`: A dictionary containing the HTTP request headers.

- `queryStringParameters`: A dictionary containing the query string parameters.

- `pathParameters`: A dictionary containing any path parameters extracted from the path.

- `body`: The request body, typically a string. It might be a JSON object, HTML content, or any other data sent with the request. The body is `null` if there is no body.

- `isBase64Encoded`: A boolean value indicating whether the request body is base64 encoded.

- `requestContext`: An object containing contextual information about the request, such as the API ID, stage, request ID, and client IP address.

For example, consider a `GET` request to the endpoint `/hello?name=John` with the following event payload (simplified):

```json
{
  "resource": "/hello",
  "path": "/hello",
  "httpMethod": "GET",
  "headers": {
    "Host": "your-api-id.execute-api.us-east-1.amazonaws.com",
    "User-Agent": "curl/7.79.1",
    "Accept": "*/*"
  },
  "queryStringParameters": {
    "name": "John"
  },
  "pathParameters": null,
  "body": null,
  "isBase64Encoded": false,
  "requestContext": {
    "accountId": "123456789012",
    "resourceId": "abcdef",
    "stage": "dev",
    "requestId": "c6af9ac6-7b61-11e6-9a41-93b844546556",
    "identity": {
      "cognitoIdentityPoolId": null,
      "accountId": null,
      "cognitoIdentityId": null,
      "caller": null,
      "apiKey": null,
      "sourceIp": "127.0.0.1",
      "cognitoAuthenticationType": null,
      "cognitoAuthenticationProvider": null,
      "userArn": null,
      "userAgent": "curl/7.79.1",
      "user": null
    },
    "resourcePath": "/hello",
    "httpMethod": "GET",
    "apiId": "your-api-id"
  }
}
```

In this scenario, the Lambda function would receive this JSON payload. The function would access `queryStringParameters` to retrieve the `name` value, allowing the function to customize the response based on the input parameter. This example demonstrates how a Lambda function uses the API Gateway trigger’s event payload to process HTTP requests. The exact structure might vary based on API Gateway configurations, especially when using custom authorizers or request/response transformations.
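
A minimal sketch of the handler described in this scenario might look as follows. It assumes the event shape shown above; the `Guest` fallback and the `Content-Type` header are choices made for the example.

```python
import json


def lambda_handler(event, context):
    # queryStringParameters is null (None) when the request has no query string.
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "Guest")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


sample_event = {
    "httpMethod": "GET",
    "path": "/hello",
    "queryStringParameters": {"name": "John"},
}
print(lambda_handler(sample_event, None)["body"])
```

Guarding against a missing query string matters because a request to `/hello` with no parameters would otherwise fail when the code looks up `name` on `None`.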

Handling Input and Output

Lambda functions are designed to be stateless, meaning they do not maintain a persistent state between invocations. Therefore, they rely on input from events to perform their tasks and return output to signal completion or provide results. Understanding how to manage input and output is fundamental to building effective and useful Lambda functions. This section details the mechanisms for accessing input data, processing it, and returning the results in a structured format.

Accessing and Processing Input Data

Lambda functions receive input data through an event payload. The structure and content of this payload depend on the trigger that invokes the function. This could be an API Gateway request, an S3 object creation event, a scheduled CloudWatch event, or any other supported event source. Accessing this data within the function involves parsing the event object, which is a dictionary-like structure in Python.

To illustrate, consider a simple Lambda function triggered by an API Gateway. The API Gateway might send a JSON payload containing data, such as a user’s name and email address. The Python function needs to parse this JSON data to extract the relevant information.

```python
import json

def lambda_handler(event, context):
    """
    Processes input data from an API Gateway event.

    Args:
        event (dict): Event data from the API Gateway.
        context (object): Lambda context object.

    Returns:
        dict: A dictionary containing a greeting with the user's name.
    """
    try:
        body = json.loads(event['body'])  # Parse the JSON body of the request
        name = body.get('name', 'Guest')  # Retrieve the 'name' field or default to 'Guest'
        return {
            'statusCode': 200,
            'body': json.dumps({
                'message': f'Hello, {name}!',
            })
        }
    except Exception as e:
        return {
            'statusCode': 500,
            'body': json.dumps({
                'message': f'Error processing request: {str(e)}'
            })
        }
```

In this example, `event['body']` accesses the request body, and `json.loads()` parses the JSON string into a Python dictionary. The function then retrieves the `name` field and uses it to construct a greeting. The `context` object provides information about the invocation, function, and execution environment.

Returning Output Data

The output from a Lambda function is also crucial, as it is the result that the calling service or application receives. The format of the output depends on the trigger and the requirements of the service consuming the output. For example, an API Gateway expects a specific response format, including a `statusCode` and a `body`.

For an API Gateway trigger, the handler must return a dictionary whose structure API Gateway understands. The `statusCode` field indicates the HTTP status code of the response. The `body` field contains the actual response data and must be a string, so structured data is typically serialized to JSON with `json.dumps()`. Other triggers may expect a different structure.

Consider the example above. The function returns a dictionary with a `statusCode` of 200 and a `body` containing a JSON string with the greeting.

```json
{
  "statusCode": 200,
  "body": "{\"message\": \"Hello, John!\"}"
}
```

If an error occurs during processing, the function should return an appropriate status code (e.g., 500 for an internal server error) and an error message in the body.
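
One way to separate client mistakes from server failures is sketched below. It is an illustration rather than a prescribed pattern: the helper name `make_response`, the required `name` field, and the error messages are assumptions made for the example.

```python
import json


def make_response(status_code, payload):
    """Build the dictionary shape used for API Gateway responses."""
    return {"statusCode": status_code, "body": json.dumps(payload)}


def lambda_handler(event, context):
    try:
        body = json.loads(event.get("body") or "{}")
        if not isinstance(body, dict) or "name" not in body:
            # The request was understood but is incomplete: a 4xx, not a 5xx.
            return make_response(400, {"message": "Missing required field: name"})
        return make_response(200, {"message": f"Hello, {body['name']}!"})
    except json.JSONDecodeError:
        return make_response(400, {"message": "Request body must be valid JSON"})
    except Exception:
        # Unexpected failures map to a generic 500 without leaking details.
        return make_response(500, {"message": "Internal server error"})


print(lambda_handler({"body": '{"name": "John"}'}, None))
```

Returning 400 for malformed input lets callers tell their own errors apart from genuine failures inside the function.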

Input Data Formats and Expected Output

Different event sources provide data in various formats. Understanding these formats and how to process them is key to writing robust Lambda functions. The following table provides examples of input data formats and the expected output for a simple function that echoes the input data. This table is designed to highlight the relationship between input and output for various event types.

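| Event Source | Input Data Format (Example) | Expected Output |
|---|---|---|
| API Gateway | JSON request details such as `httpMethod`, `queryStringParameters`, and `body` | `statusCode` and a `body` message based on the request |
| S3 Object Creation | Details about the bucket and the object | `statusCode` and a `body` message based on the bucket and object |
| CloudWatch Events (Scheduled) | Information about the event’s time | `statusCode` and a `body` message based on the event time |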

This table shows how the structure of the input data varies depending on the event source.

It also demonstrates how the function needs to parse the input to extract the relevant information and then format the output accordingly. For example, the S3 event contains details about the bucket and object, while the CloudWatch event contains information about the event’s time. The expected output consistently includes a `statusCode` and a `body` containing a message based on the input data.

The specific content of the body will change according to the information extracted and processed from the event.
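
To show how the extraction differs per source, here is a short sketch for the S3 and scheduled cases. The sample events in the usage lines are trimmed to the fields the code reads, and the function names are hypothetical. S3 delivers object keys URL-encoded, which is why the key is decoded before use.

```python
from urllib.parse import unquote_plus


def describe_s3_event(event):
    """Return (bucket, key) pairs for every record in an S3 notification."""
    pairs = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded (a space becomes "+"), so decode them.
        key = unquote_plus(record["s3"]["object"]["key"])
        pairs.append((bucket, key))
    return pairs


def describe_scheduled_event(event):
    """Return the timestamp string carried by a scheduled event."""
    return event["time"]


s3_event = {"Records": [{"s3": {"bucket": {"name": "example-bucket"},
                                "object": {"key": "reports/my+file.csv"}}}]}
scheduled_event = {"source": "aws.events", "time": "2024-01-01T12:00:00Z"}

print(describe_s3_event(s3_event))
print(describe_scheduled_event(scheduled_event))
```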

Testing and Debugging Your Lambda Function

Debugging and testing are critical stages in the software development lifecycle, especially within serverless architectures like AWS Lambda. Rigorous testing ensures the function behaves as expected under various conditions, while effective debugging allows for rapid identification and resolution of issues. This section details methods for testing, logging, and troubleshooting Lambda functions.

Testing Lambda Functions Using the AWS Console

The AWS Management Console provides an integrated testing feature for Lambda functions, allowing developers to simulate various event inputs and observe the function’s output without deploying to a production environment. This capability facilitates rapid iteration and validation.

To test a Lambda function within the console:

- Navigate to the Lambda function’s configuration page in the AWS Management Console.

- Select the “Test” tab.

- Click “Configure test event.” The console offers pre-configured test event templates for various AWS services (e.g., S3, API Gateway, DynamoDB). These templates simulate events that trigger the Lambda function. Alternatively, you can create a custom test event by providing a JSON payload that represents the input the function will receive.

- Provide a name for the test event and modify the event payload as needed. This is crucial for testing different scenarios, such as handling valid and invalid inputs.

- Click “Create.” The test event is saved.

- Select the created test event from the dropdown menu.

- Click “Test.” The function is invoked with the selected event.

- The console displays the function’s execution results, including the response, logs, and any error messages. Examine these results to verify the function’s behavior.

The console also allows for repeated testing with different event inputs, enabling comprehensive validation of the function’s logic. Consider creating multiple test events to cover various scenarios, including edge cases and error conditions. This approach aligns with the principles of test-driven development, where tests are written before or alongside the code to guide development and ensure quality.
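
The same set of test events can also be exercised locally before they are pasted into the console. The sketch below uses a toy handler and three JSON events covering a valid input, a missing field, and a type mismatch; the handler, the events, and the expected status codes are all illustrative.

```python
import json


def lambda_handler(event, context):
    """Toy handler under test: adds two numbers taken from the event."""
    try:
        return {"statusCode": 200, "body": str(event["key1"] + event["key2"])}
    except (KeyError, TypeError):
        return {"statusCode": 400, "body": "Invalid input"}


# Each entry pairs a JSON test event with the status code the handler should return.
TEST_EVENTS = [
    ('{"key1": 1, "key2": 2}', 200),      # valid input
    ('{"key1": 1}', 400),                 # missing field
    ('{"key1": 1, "key2": "two"}', 400),  # type mismatch
]


def run_test_events():
    """Invoke the handler with every test event and report pass/fail per event."""
    results = []
    for raw_event, expected_status in TEST_EVENTS:
        response = lambda_handler(json.loads(raw_event), None)
        results.append(response["statusCode"] == expected_status)
    return results


print(run_test_events())
```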

Using Logging for Debugging in Python

Effective logging is indispensable for debugging Lambda functions. By strategically incorporating logging statements within your Python code, you can track the function’s execution flow, inspect variable values, and identify the root cause of errors. AWS Lambda integrates seamlessly with CloudWatch Logs, where all logs generated by the function are stored and can be analyzed.

To implement logging in your Python Lambda function:

- Import the `logging` module: `import logging`

- Configure the logging level. Common levels include `DEBUG`, `INFO`, `WARNING`, `ERROR`, and `CRITICAL`. The level determines the verbosity of the logs. For example, `logging.getLogger().setLevel(logging.INFO)` sets the minimum log level to INFO, meaning only INFO, WARNING, ERROR, and CRITICAL messages will be logged. Inside Lambda, the Python runtime already attaches a handler to the root logger, so `logging.basicConfig(level=logging.INFO)` has no effect there; set the level on the logger directly.

- Use the logging functions to record information:

- `logging.debug(message)`: For detailed information, typically used during development.

- `logging.info(message)`: For general information about the function’s execution.

- `logging.warning(message)`: For potentially problematic situations.

- `logging.error(message)`: For errors that the function can handle.

- `logging.critical(message)`: For severe errors that may cause the function to fail.

Example:

```python
import logging

# Configure logging. The Lambda runtime already attaches a handler to the
# root logger, so set the level on it instead of calling basicConfig().
logging.getLogger().setLevel(logging.INFO)

def lambda_handler(event, context):
    logging.info("Received event: %s", event)
    try:
        # Your function logic here
        result = event['key1'] + event['key2']
        logging.info("Calculation result: %s", result)
        return {
            'statusCode': 200,
            'body': str(result)
        }
    except Exception as e:
        logging.error("An error occurred: %s", str(e))
        return {
            'statusCode': 500,
            'body': 'Error'
        }
```

In this example, `logging.info()` statements record the received event and the calculation result. Error handling is included with a `try…except` block. If an exception occurs, `logging.error()` logs the error message. This allows you to track the flow of execution and identify the source of any problems.
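
Building on the logging approach above, one optional refinement is to emit each log line as a JSON object so that fields such as the request ID can be searched in CloudWatch Logs. This is a sketch of one possible convention, not a Lambda requirement; the logger name `hello_function` and the helper `log_json` are hypothetical.

```python
import json
import logging

logger = logging.getLogger("hello_function")  # hypothetical logger name
logger.setLevel(logging.INFO)


def log_json(level, message, **fields):
    """Emit one JSON object per log line so individual fields can be filtered on."""
    logger.log(level, json.dumps({"message": message, **fields}, default=str))


def lambda_handler(event, context):
    # aws_request_id identifies the invocation; fall back when run without a context.
    request_id = getattr(context, "aws_request_id", "unknown")
    log_json(logging.INFO, "received event", request_id=request_id, keys=sorted(event))
    return {"statusCode": 200, "body": "ok"}
```

Including the request ID in every line makes it easier to follow a single invocation when several run concurrently.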

Troubleshooting Common Lambda Function Errors

Lambda functions can encounter various errors, ranging from simple input validation issues to complex runtime exceptions. Understanding common error scenarios and their potential causes is crucial for effective troubleshooting.

Here are some common error scenarios and their potential causes:

- Invocation Timeout: The Lambda function execution exceeds the configured timeout duration.

- Causes:

- Inefficient code: The function’s logic is slow, for instance, due to inefficient algorithms or slow external API calls.

- Resource exhaustion: The function is consuming too much memory or other resources.

- Infinite loops: The function may be stuck in an infinite loop.

- Troubleshooting:

- Review CloudWatch Logs for execution duration and error messages.

- Optimize the code for performance.

- Increase the function’s timeout (within reasonable limits).

- Review the function’s memory allocation.

- Memory Exhaustion: The Lambda function runs out of allocated memory.

- Causes:

- Memory leaks: The function allocates memory but does not release it.

- Large data processing: The function is processing large datasets that exceed the available memory.

- Inefficient data structures: The use of inefficient data structures can consume excessive memory.

- Troubleshooting:

- Review CloudWatch Logs for memory usage metrics.

- Optimize memory usage in the code.

- Increase the function’s memory allocation.

- Profile the function to identify memory bottlenecks.

- Permissions Errors: The Lambda function does not have the necessary permissions to access AWS resources.

- Causes:

- Incorrect IAM role: The function’s IAM role does not grant the required permissions.

- Missing permissions: The role is missing permissions to access specific resources.

- Invalid resource ARNs: The function is configured with incorrect resource ARNs.

- Troubleshooting:

- Review the function’s IAM role and attached policies.

- Verify that the role has the necessary permissions to access the required AWS resources.

- Check the resource ARNs for accuracy.

- Use the AWS IAM policy simulator to test the role’s permissions.

- Input Validation Errors: The Lambda function receives invalid input data.

- Causes:

- Incorrect event structure: The input event does not match the expected format.

- Data type mismatches: The function receives data of an unexpected type.

- Missing required fields: The input event is missing required fields.

- Troubleshooting:

- Review the function’s input validation logic.

- Examine the event payload to identify data format or type issues.

- Use test events to simulate various input scenarios.

- Implement robust input validation to handle unexpected data.

- Dependency Errors: The Lambda function fails to load required dependencies.

- Causes:

- Missing dependencies: The function is missing required Python packages.

- Incorrect deployment package: The deployment package does not include all required dependencies or includes them incorrectly.

- Incorrect import paths: The function’s code has incorrect import paths.

- Troubleshooting:

- Verify that all required dependencies are included in the deployment package.

- Use a virtual environment to manage dependencies.

- Ensure the deployment package structure is correct.

- Review the function’s import statements.

By understanding these common error scenarios and their potential causes, developers can proactively design their Lambda functions to be more resilient and efficiently troubleshoot any issues that arise. Using the AWS console for testing, coupled with comprehensive logging, facilitates rapid identification and resolution of problems, ultimately improving the reliability and performance of serverless applications.
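
To make the input validation advice above concrete, the following is a minimal sketch of checking an event for required fields and types before running any logic. The field names `key1` and `key2` mirror the earlier logging example; the `REQUIRED_FIELDS` mapping and the `validate_event` helper are hypothetical names introduced here for illustration, not part of any AWS API.

```python
import json

# Hypothetical schema: required field name -> accepted type(s)
REQUIRED_FIELDS = {"key1": (int, float), "key2": (int, float)}


def validate_event(event):
    """Return a list of human-readable problems; an empty list means valid."""
    if not isinstance(event, dict):
        return ["event must be a JSON object"]
    problems = []
    for field, expected in REQUIRED_FIELDS.items():
        if field not in event:
            problems.append(f"missing required field: {field}")
        # bool is a subclass of int in Python, so it is rejected explicitly
        elif isinstance(event[field], bool) or not isinstance(event[field], expected):
            actual = type(event[field]).__name__
            problems.append(f"field {field} has unexpected type {actual}")
    return problems


def lambda_handler(event, context):
    problems = validate_event(event)
    if problems:
        # Reject bad input early with a client-error status
        return {"statusCode": 400, "body": json.dumps({"errors": problems})}
    result = event["key1"] + event["key2"]
    return {"statusCode": 200, "body": json.dumps({"result": result})}
```

Returning a 400 status for bad input, rather than letting a `KeyError` or `TypeError` surface as a 500, makes it easier to tell caller mistakes apart from genuine function failures in the logs.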

Working with AWS Services (e.g., S3, DynamoDB)

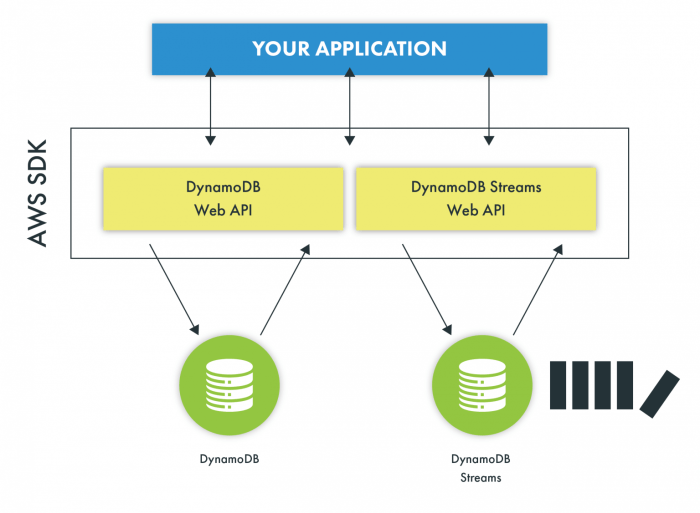

AWS Lambda functions gain significant power through their ability to interact with other AWS services. This interaction allows for complex workflows, data processing, and application logic to be executed in a serverless environment. This section will focus on how to leverage two crucial services: Amazon S3 for object storage and Amazon DynamoDB for NoSQL database operations, demonstrating the use of the `boto3` library, the official AWS SDK for Python.

Interacting with Amazon S3 with `boto3`

Amazon S3 provides scalable object storage, allowing Lambda functions to store, retrieve, and process data. The `boto3` library simplifies these interactions.

To interact with S3, the following steps are typically involved:

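- Importing `boto3`: This makes the AWS SDK for Python available to your code. The library is included in the Lambda Python runtimes, so it does not need to be bundled for basic use.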

- Creating an S3 client: This client object handles all S3 operations.

- Using client methods: Methods like `upload_fileobj`, `download_fileobj`, `put_object`, `get_object`, and `delete_object` are used for various S3 operations.

Here is a code example that demonstrates how to read and write data to an S3 bucket. This example assumes the Lambda function has the necessary IAM permissions to access the S3 bucket. The bucket name is a placeholder and must be replaced with an actual bucket name.

```python
import boto3

# Create the client once, outside the handler, so warm invocations reuse it
s3 = boto3.client('s3')


def lambda_handler(event, context):
    bucket_name = 'your-s3-bucket-name'  # placeholder: replace with your bucket
    file_name = 'example.txt'
    content = "This is an example file content."

    # Write data to S3
    try:
        s3.put_object(Bucket=bucket_name, Key=file_name, Body=content.encode('utf-8'))
        print(f"Successfully wrote to {bucket_name}/{file_name}")
    except Exception as e:
        print(f"Error writing to S3: {e}")
        return {
            'statusCode': 500,
            'body': f'Error writing to S3: {e}'
        }

    # Read data from S3
    try:
        response = s3.get_object(Bucket=bucket_name, Key=file_name)
        file_content = response['Body'].read().decode('utf-8')
        print(f"Successfully read from {bucket_name}/{file_name}")
        print(f"File content: {file_content}")
    except Exception as e:
        print(f"Error reading from S3: {e}")
        return {
            'statusCode': 500,
            'body': f'Error reading from S3: {e}'
        }

    return {
        'statusCode': 200,
        'body': 'Successfully read and wrote to S3'
    }
```

This example first writes a string to a specified S3 bucket and then reads it back. Error handling is included to provide informative feedback in case of failure. The function demonstrates fundamental S3 operations, which can be extended to handle more complex data processing and storage tasks.
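
When a function is triggered by S3 instead of working with a fixed bucket and key, the bucket name and object key arrive in the event. The sketch below shows one way such a handler might start: it pulls both values out of the S3 event notification structure. The helper name `extract_s3_objects` is hypothetical. Object keys in these events are URL-encoded, so they are decoded before use.

```python
from urllib.parse import unquote_plus


def extract_s3_objects(event):
    """Return (bucket, key) pairs from an S3 event notification payload."""
    pairs = []
    for record in event.get("Records", []):
        s3_info = record.get("s3")
        if not s3_info:
            continue  # skip records that did not come from S3
        bucket = s3_info["bucket"]["name"]
        # Keys are URL-encoded in the event (spaces arrive as '+')
        key = unquote_plus(s3_info["object"]["key"])
        pairs.append((bucket, key))
    return pairs


def lambda_handler(event, context):
    objects = extract_s3_objects(event)
    for bucket, key in objects:
        print(f"New object: {bucket}/{key}")
    return {"statusCode": 200, "body": f"Processed {len(objects)} object(s)"}
```

Each pair could then be passed to `get_object` as in the example above.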

Interacting with DynamoDB using `boto3`

DynamoDB is a fully managed NoSQL database service that provides fast and predictable performance. Lambda functions can interact with DynamoDB to store, retrieve, and modify data.

The core operations performed with DynamoDB through `boto3` are:

- Creating an item: Adding a new item to a table.

- Reading an item: Retrieving a specific item from a table based on its primary key.

- Updating an item: Modifying existing attributes of an item.

- Deleting an item: Removing an item from a table.

Here is an example demonstrating these operations:

```python
import boto3

# Create the resource and table handle once, outside the handler
dynamodb = boto3.resource('dynamodb')
table_name = 'your-dynamodb-table-name'  # placeholder: replace with your table
table = dynamodb.Table(table_name)


def lambda_handler(event, context):
    item_id = '123'
    item_data = {
        'id': item_id,
        'name': 'Example Item',
        'value': 100
    }

    # Create item
    try:
        table.put_item(Item=item_data)
        print(f"Item created: {item_data}")
    except Exception as e:
        print(f"Error creating item: {e}")
        return {
            'statusCode': 500,
            'body': f'Error creating item: {e}'
        }

    # Read item
    try:
        response = table.get_item(Key={'id': item_id})
        item = response.get('Item')
        if item:
            print(f"Item retrieved: {item}")
        else:
            print("Item not found")
    except Exception as e:
        print(f"Error reading item: {e}")
        return {
            'statusCode': 500,
            'body': f'Error reading item: {e}'
        }

    # Update item ('value' is aliased as #attr in the update expression)
    try:
        table.update_item(
            Key={'id': item_id},
            UpdateExpression="SET #attr = :val",
            ExpressionAttributeNames={'#attr': 'value'},
            ExpressionAttributeValues={':val': 200}
        )
        print(f"Item updated with id {item_id}")
    except Exception as e:
        print(f"Error updating item: {e}")
        return {
            'statusCode': 500,
            'body': f'Error updating item: {e}'
        }

    # Delete item
    try:
        table.delete_item(Key={'id': item_id})
        print(f"Item deleted with id {item_id}")
    except Exception as e:
        print(f"Error deleting item: {e}")
        return {
            'statusCode': 500,
            'body': f'Error deleting item: {e}'
        }

    return {
        'statusCode': 200,
        'body': 'Successfully performed DynamoDB operations'
    }
```

This code snippet illustrates the basic CRUD (Create, Read, Update, Delete) operations against a DynamoDB table. It creates an item, retrieves it, updates a specific attribute, and then deletes the item. This example assumes a DynamoDB table named ‘your-dynamodb-table-name’ already exists and has a primary key attribute named ‘id’. Error handling is included to provide feedback on the operation’s success or failure.

This example provides a foundational understanding of how to interact with DynamoDB within a Lambda function.
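
One practical detail when returning DynamoDB data from a handler: the `boto3` resource interface returns numeric attributes as `decimal.Decimal`, which `json.dumps` cannot serialize by default. The helper below is a minimal sketch of one way to handle this. The names `decimal_default` and `to_json` are hypothetical, and mapping whole numbers to `int` and everything else to `float` is an assumption that may not suit values needing exact precision.

```python
import json
from decimal import Decimal


def decimal_default(value):
    """Fallback for json.dumps: convert Decimal, reject anything else."""
    if isinstance(value, Decimal):
        # Whole numbers become int; fractional values become float
        return int(value) if value == value.to_integral_value() else float(value)
    raise TypeError(f"Object of type {type(value).__name__} is not JSON serializable")


def to_json(item):
    """Serialize a DynamoDB item (as returned by get_item) to a JSON string."""
    return json.dumps(item, default=decimal_default, sort_keys=True)
```

A handler could then return `to_json(item)` as the response body instead of printing the item.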

Deploying and Managing Lambda Functions

Lambda functions, once created, require effective deployment and management strategies to ensure their availability, scalability, and maintainability. This involves understanding versioning, deployment tools, and update procedures. Proper management minimizes downtime, facilitates rollback capabilities, and streamlines the iterative development lifecycle.

Lambda Function Versions and Aliases

Understanding Lambda function versions and aliases is critical for managing code deployments and promoting stability. This approach allows for controlled releases and facilitates rollback strategies.

Lambda functions support versioning, which allows you to maintain multiple iterations of your code within the same function. Each time you publish a new version of your function, a unique Amazon Resource Name (ARN) is generated for that version. This enables you to:

- Isolate Code Changes: Separate code modifications into distinct versions, allowing you to revert to previous stable states if necessary.

- Controlled Rollouts: Gradually release new versions of your function to a subset of traffic (using aliases) to mitigate the impact of potential issues.

- Immutable Infrastructure: Versions act as immutable snapshots of your code, ensuring consistency and predictability in deployments.

Aliases provide a layer of indirection between your function’s code and its consumers. An alias points to a specific function version, allowing you to:

- Simplify Invocation: Clients invoke the function using the alias ARN, which remains constant even when the underlying function version changes.

- Traffic Management: Direct traffic to different versions of your function using aliases, enabling A/B testing, canary deployments, and phased rollouts.

- Versioning Flexibility: Easily switch between function versions by updating the alias to point to a different version without impacting client invocation logic.

For instance, consider a Lambda function that processes image uploads. It has `$LATEST` (the unpublished code under active development) and two published versions: v1 (current production) and v2 (a release candidate). Lambda numbers published versions sequentially (1, 2, and so on); “v1” and “v2” are shorthand in this guide. You can create an alias named “production” that points to v1 and an alias named “staging” that points to `$LATEST`. To roll out v2 gradually, configure weighted routing on the “production” alias so that a small percentage of invocations goes to v2 while the rest stays on v1, then raise the percentage as confidence in the new version grows.
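
Weighted routing on an alias can also be driven from Python. The sketch below wraps the Lambda `update_alias` API call in two hypothetical helpers, `start_canary` and `promote`. The client is passed in as a parameter, so the helpers assume the caller creates it, for example with `boto3.client("lambda")`. Version identifiers are strings such as `"2"`.

```python
def start_canary(lambda_client, function_name, alias_name, new_version, weight=0.1):
    """Send a fraction `weight` (0.0 to 1.0) of the alias's traffic to new_version."""
    if not 0.0 <= weight <= 1.0:
        raise ValueError("weight must be between 0.0 and 1.0")
    return lambda_client.update_alias(
        FunctionName=function_name,
        Name=alias_name,
        # The alias keeps its primary version; only the extra weight is added
        RoutingConfig={"AdditionalVersionWeights": {new_version: weight}},
    )


def promote(lambda_client, function_name, alias_name, new_version):
    """Point the alias fully at new_version and clear any weighted routing."""
    return lambda_client.update_alias(
        FunctionName=function_name,
        Name=alias_name,
        FunctionVersion=new_version,
        RoutingConfig={"AdditionalVersionWeights": {}},
    )
```

For example, `start_canary(client, "image-processor", "production", "2", 0.05)` would send roughly 5% of invocations to version 2, where `image-processor` is a made-up function name. Rolling back is the same `promote` call with the previous version number.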

Deploying Lambda Functions

Deploying Lambda functions involves packaging your code and dependencies and uploading them to AWS. This can be achieved using various tools, including the AWS CLI, infrastructure-as-code tools (like Terraform or AWS CloudFormation), and CI/CD pipelines.

The AWS CLI provides a command-line interface for interacting with AWS services, including Lambda. It allows you to create, update, and manage your Lambda functions directly. Deployment typically involves the following steps:

- Package Your Code: Bundle your code and any necessary dependencies (e.g., using `pip` for Python) into a deployment package. This package can be a ZIP file or a container image.

- Create the Function (if new): Use the `aws lambda create-function` command to create the function, specifying the function name, runtime, handler, and the deployment package.

- Update the Function (if existing): Use the `aws lambda update-function-code` command to update the function’s code, providing the deployment package. The `aws lambda update-function-configuration` command allows you to update other configuration parameters (e.g., memory, timeout).

- Configure Triggers and Permissions: Configure triggers (e.g., API Gateway, S3) and manage IAM permissions to allow the function to interact with other AWS services.
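
The packaging step above can be scripted. The following sketch uses only the standard library to zip a build directory so that the handler file and dependencies sit at the root of the archive. The helper name `build_deployment_package` is hypothetical, and it assumes dependencies have already been installed into the same directory (for instance with `pip install -r requirements.txt --target <dir>`).

```python
import zipfile
from pathlib import Path


def build_deployment_package(source_dir, zip_path):
    """Zip everything under source_dir, storing paths relative to its root."""
    source_dir = Path(source_dir)
    zip_path = Path(zip_path)
    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as archive:
        for path in sorted(source_dir.rglob("*")):
            if not path.is_file():
                continue
            if "__pycache__" in path.parts:
                continue  # bytecode caches only add size
            if path.resolve() == zip_path.resolve():
                continue  # never add the archive to itself
            archive.write(path, path.relative_to(source_dir).as_posix())
    return zip_path
```

The resulting file could then be uploaded with `aws lambda update-function-code`, passing it through the `--zip-file fileb://function.zip` option.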

Infrastructure-as-code (IaC) tools, such as AWS CloudFormation or Terraform, automate the provisioning and management of cloud resources, including Lambda functions. These tools define your infrastructure as code, allowing you to version, reuse, and consistently deploy your Lambda functions and associated resources.

- Define Infrastructure: Define your Lambda function, its configuration, and related resources (e.g., API Gateway, S3 bucket) in a declarative configuration file (e.g., CloudFormation template or Terraform configuration).

- Automated Deployment: Use the IaC tool to deploy the infrastructure based on the configuration file. The tool automatically handles the creation, updating, and deletion of resources.

- Idempotent Operations: IaC tools ensure that deployments are idempotent, meaning that applying the same configuration multiple times results in the same state, minimizing the risk of configuration drift.

CI/CD (Continuous Integration/Continuous Deployment) pipelines automate the process of building, testing, and deploying your code. These pipelines can integrate with your code repository (e.g., GitHub, GitLab) to automatically trigger deployments when code changes are pushed. A typical CI/CD pipeline for Lambda functions includes:

- Code Commit: Developers commit code changes to the code repository.

- Build: The CI/CD system automatically builds the code, packages it, and prepares it for deployment.

- Test: Automated tests (e.g., unit tests, integration tests) are executed to ensure the code functions as expected.

- Deploy: The packaged code is deployed to AWS Lambda using the AWS CLI, IaC tools, or other deployment mechanisms.

- Monitoring and Rollback: The pipeline can integrate monitoring and alerting to detect issues after deployment, and facilitate automatic rollback if necessary.

Updating and Managing Lambda Functions

Updating and managing Lambda functions involves a systematic approach to code and configuration changes, ensuring minimal disruption and facilitating efficient maintenance.

The following procedure outlines the steps for updating and managing your Lambda function’s code and configuration:

- Develop and Test Code: Make necessary code changes and thoroughly test the updated code locally or in a development environment. Use unit tests and integration tests to validate the changes.

- Package and Deploy Code: Package the updated code and deploy it to AWS Lambda using the AWS CLI, IaC tools, or a CI/CD pipeline. When deploying, consider using a new function version or an alias to minimize the impact on production traffic.

- Update Configuration (if needed): Modify the function’s configuration (e.g., memory, timeout, environment variables) as required using the AWS CLI or through your IaC tool.

- Test in Staging/Canary Environment: If you are using aliases or traffic shifting, test the updated function in a staging or canary environment to validate the changes and ensure they function correctly before deploying to production. This involves routing a small percentage of production traffic to the new version to assess its performance.

- Monitor Function Performance: Monitor the function’s performance metrics (e.g., invocation count, errors, duration, throttles) using CloudWatch metrics. This provides insights into the function’s behavior and helps identify potential issues.

- Promote to Production (if successful): If the testing and monitoring indicate that the updated function is performing well, promote it to production by updating the alias to point to the new version.

- Rollback Strategy: Establish a rollback strategy to revert to the previous function version if issues arise after deployment. This involves updating the alias to point to the previous version.

- Automated Monitoring and Alerting: Implement automated monitoring and alerting to detect issues such as errors, high latency, or unexpected resource consumption. This allows for proactive identification and resolution of problems.

- Version Control and Documentation: Maintain version control of your code and configuration files. Document your Lambda function’s design, configuration, and deployment process.

For example, consider a Lambda function that processes user data. To update the function, you could:

- Modify the code to add a new feature or fix a bug.

- Package the updated code.

- Deploy the updated code to a new version (e.g., v2) of the function.

- Create a “canary” alias and point it to v2.

- Route a small percentage of production traffic to the “canary” alias to test v2.

- Monitor the function’s performance using CloudWatch metrics.

- If v2 performs as expected, update the “production” alias to point to v2.

- If issues arise, update the “production” alias to point back to the previous version (e.g., v1).

Best Practices for Lambda Function Development

Developing effective AWS Lambda functions requires adherence to established best practices to ensure optimal performance, security, and maintainability. These practices address various aspects of the function lifecycle, from initial design and coding to deployment and monitoring. Implementing these guidelines can significantly improve the reliability and efficiency of serverless applications.

Optimizing Lambda Function Performance

Optimizing Lambda function performance is crucial for cost efficiency and responsiveness. Several factors contribute to performance, and understanding them allows for targeted improvements. One critical aspect is the function’s execution time, which directly impacts the cost and the user experience.

- Minimize Function Size: Smaller function packages lead to faster deployment and cold start times. Reducing the size involves including only necessary dependencies, using optimized libraries, and removing unused code. When building the package with `pip`, options such as `--no-cache-dir` and `--only-binary=:all:` can help keep the build clean by skipping pip's local cache and installing prebuilt wheels instead of compiling from source.

- Efficient Code Implementation: Writing efficient code is essential. This includes optimizing algorithms, minimizing I/O operations, and utilizing caching where appropriate. For instance, if a function frequently accesses the same data, caching the data in memory can significantly reduce execution time.

- Cold Start Considerations: Cold starts, the time it takes for a Lambda function to initialize when it’s not already running, can impact latency. Several strategies can mitigate this:

- Provisioned Concurrency: AWS Lambda allows you to pre-initialize a specified number of function instances. This ensures that the function is ready to respond quickly to incoming requests, minimizing cold start delays.

- Keep-Warm Techniques: Implement a periodic pinging mechanism to keep the function warm. This involves invoking the function at regular intervals to prevent it from becoming idle and subject to cold starts.

- Optimize Dependencies: As mentioned earlier, minimizing dependencies reduces the time required to load them during a cold start. Use only the libraries your function requires.

- Resource Allocation: Configure the function’s memory and CPU allocation appropriately. Allocating more memory also increases the CPU power available to the function. Finding the optimal balance between memory allocation and execution time is crucial for cost-effectiveness. Monitor the function’s performance metrics to identify bottlenecks and adjust resource allocation accordingly.
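
The in-memory caching mentioned above relies on the fact that module-level state survives between invocations while an execution environment stays warm. The sketch below illustrates the idea with a small time-limited cache. The names `_CACHE`, `get_cached`, and `load_settings` are hypothetical, and `load_settings` stands in for whatever slow call the function would otherwise repeat.

```python
import time

# Module-level state persists across invocations in a warm environment
_CACHE = {}


def get_cached(key, loader, ttl_seconds=300, now=time.monotonic):
    """Return a cached value, calling loader() only when missing or expired."""
    entry = _CACHE.get(key)
    current = now()
    if entry is not None and current - entry[0] < ttl_seconds:
        return entry[1]
    value = loader()
    _CACHE[key] = (current, value)
    return value


def load_settings():
    # Placeholder for a slow call, e.g. reading configuration from S3 or DynamoDB
    return {"greeting": "Hello"}


def lambda_handler(event, context):
    settings = get_cached("settings", load_settings)
    name = event.get("name", "World")
    return {"statusCode": 200, "body": f"{settings['greeting']}, {name}!"}
```

The cache is per execution environment, so concurrent instances each load their own copy, and a cold start always begins with an empty cache.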

Error Handling and Exception Management

Robust error handling and exception management are critical for creating reliable and resilient Lambda functions. Proper handling of errors ensures that unexpected issues do not crash the function and provides insights for debugging and improvement.

- Implement Comprehensive Error Handling: Implement `try-except` blocks to catch potential exceptions. Log exceptions with detailed information, including the error message, stack trace, and context. This facilitates debugging and troubleshooting. For example:

```python
try:
    # Code that might raise an exception (some_operation is a placeholder)
    result = some_operation()
except Exception as e:
    logging.error(f"An error occurred: {e}", exc_info=True)
    raise  # Re-raise the exception to propagate it
```

- Handle Specific Exceptions: Catch specific exception types to handle different error scenarios appropriately. For instance, handle `FileNotFoundError` differently from `TypeError`. This allows for more precise error responses and targeted troubleshooting.

- Logging and Monitoring: Implement thorough logging to track function execution, including input parameters, output, and any errors encountered. Utilize a monitoring service like Amazon CloudWatch to track metrics such as invocation count, error rate, and duration. This data provides valuable insights into function performance and helps identify potential issues.

- Retry Mechanisms: Implement retry mechanisms for operations that might fail transiently, such as network requests or database queries. Use exponential backoff to avoid overwhelming dependent services. Libraries like `backoff` in Python can simplify this.

- Dead-Letter Queues (DLQs): Configure DLQs for asynchronous invocations. If a function fails repeatedly, the event can be sent to a DLQ for later analysis. This prevents the function from continuously failing and allows for manual intervention to resolve the issue.
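
The retry advice above can be sketched with the standard library alone, as an alternative to pulling in the `backoff` package. The helper name `retry_with_backoff`, its default delays, and the choice of which exceptions count as transient are all assumptions made for illustration.

```python
import random
import time


def retry_with_backoff(operation, max_attempts=4, base_delay=0.2, max_delay=5.0,
                       retry_on=(ConnectionError, TimeoutError), sleep=time.sleep):
    """Call operation(), retrying transient failures with exponential backoff."""
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except retry_on:
            if attempt == max_attempts:
                raise  # out of attempts: let the caller (or Lambda) see the failure
            # The delay ceiling doubles each attempt, capped at max_delay
            ceiling = min(max_delay, base_delay * 2 ** (attempt - 1))
            # Random jitter keeps many clients from retrying in lockstep
            sleep(random.uniform(0, ceiling))
```

Keep the total retry time well below the function's configured timeout. Otherwise the invocation may be cut off mid-retry and never reach the final `raise`.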

Security Best Practices

Securing Lambda functions is paramount to protect sensitive data and ensure the integrity of your serverless applications. Adhering to security best practices helps mitigate risks and prevents unauthorized access.

- Use IAM Roles with Least Privilege: Assign IAM roles to Lambda functions with the principle of least privilege. This means granting the function only the necessary permissions to perform its tasks. Avoid granting overly broad permissions, such as `AdministratorAccess`. Instead, create custom policies with specific permissions. For example, to allow a function to read from an S3 bucket, the IAM role should only have `s3:GetObject` permissions for that specific bucket.

- Avoid Hardcoded Credentials: Never hardcode sensitive information, such as API keys, database passwords, or access keys, directly into the function code. Instead, store these secrets securely using AWS Secrets Manager or AWS Systems Manager Parameter Store. Access the secrets dynamically within the function. This practice protects sensitive data and makes it easier to manage and rotate credentials.

- Encrypt Data at Rest and in Transit: Encrypt sensitive data stored in S3 buckets, DynamoDB tables, and other AWS services. Use HTTPS for all communication between the function and other services. For S3, enable server-side encryption with KMS keys.

- Input Validation and Sanitization: Validate and sanitize all input data to prevent injection attacks, such as SQL injection or cross-site scripting (XSS). Sanitize input by removing or encoding potentially malicious characters. Use regular expressions or other validation techniques to ensure that input data conforms to expected formats.

- Regular Security Audits and Updates: Regularly audit the function’s code and dependencies for vulnerabilities. Update the function’s runtime environment and dependencies to the latest versions to address security patches. Use tools like `pip-audit` or `snyk` to scan for known vulnerabilities in your dependencies.

- Network Security: If the function needs to access resources within a VPC, configure appropriate security groups and network access control lists (ACLs) to control network traffic. Consider using a private subnet to isolate the function from the public internet.
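
As an illustration of avoiding hardcoded credentials, the sketch below reads a secret through the Secrets Manager `get_secret_value` call and caches it for the lifetime of the execution environment. Several details are assumptions: the helper names, the `DB_SECRET_ID` environment variable, and a secret stored as a JSON document with a `password` field. The client is passed in, for example from `boto3.client("secretsmanager")`, and the function's IAM role would need permission to read that specific secret.

```python
import json
import os

# Cached per execution environment to avoid a network call on every invocation
_SECRET_CACHE = {}


def get_secret(secrets_client, secret_id):
    """Fetch a JSON secret once and reuse the parsed result afterwards."""
    if secret_id not in _SECRET_CACHE:
        response = secrets_client.get_secret_value(SecretId=secret_id)
        _SECRET_CACHE[secret_id] = json.loads(response["SecretString"])
    return _SECRET_CACHE[secret_id]


def get_database_password(secrets_client):
    # DB_SECRET_ID is a hypothetical environment variable holding the secret's
    # name or ARN; only the identifier lives in configuration, never the value
    secret_id = os.environ["DB_SECRET_ID"]
    return get_secret(secrets_client, secret_id)["password"]
```

Caching indefinitely means a rotated secret is not picked up until the environment is recycled. A time limit on the cache entry is a common refinement.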

Conclusion

In summary, this guide has provided a structured path to creating and managing your first AWS Lambda function in Python. From the fundamental setup to integration with other AWS services, we’ve covered the key aspects necessary for successful serverless application development. By adhering to the best practices outlined, you can optimize your functions for performance, security, and maintainability, paving the way for more complex serverless solutions.

The journey into AWS Lambda is just beginning; with each function, you expand your capabilities and contribute to the evolution of scalable, event-driven architectures.

Key Questions Answered

What is the maximum execution time for a Lambda function?

The maximum execution time for a Lambda function is 15 minutes (900 seconds). This is a configurable setting, but it has an upper limit.

How are Lambda functions priced?

Lambda functions are priced based on the number of requests and the duration of execution. There’s a free tier available that includes a certain number of requests and compute time per month.

What programming languages are supported by AWS Lambda?

AWS Lambda supports multiple programming languages, including Python, Node.js, Java, Go, C#, and Ruby. You can also provide a custom runtime.

How do I handle dependencies in my Lambda function?

You can include dependencies in your Lambda function by bundling them with your deployment package (for Python, this often involves a `requirements.txt` file and packaging them in a zip file), or by using Lambda layers.

How can I monitor my Lambda function’s performance?

AWS provides monitoring tools such as Amazon CloudWatch, which allows you to track metrics like invocation count, duration, errors, and concurrent executions. You can also set up alarms and create dashboards to monitor your function’s health.