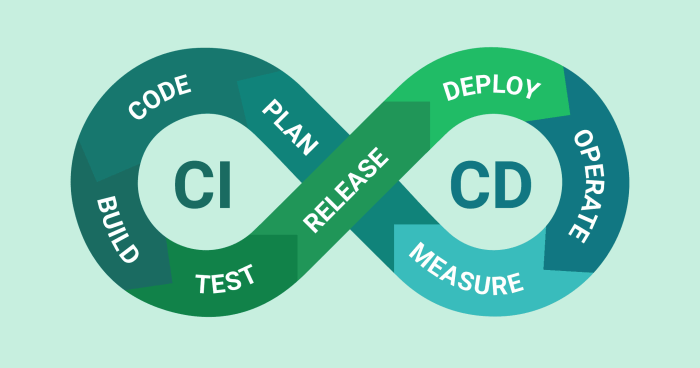

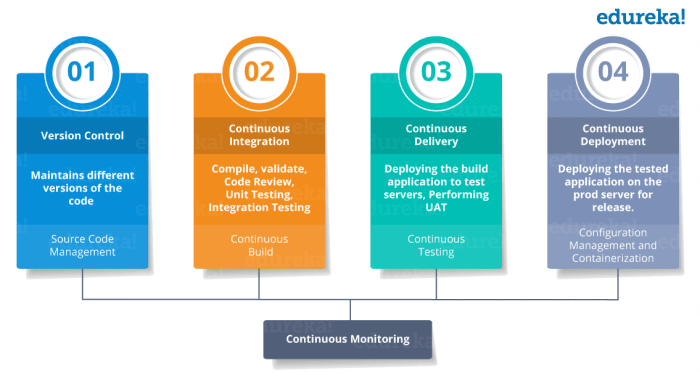

The evolution of software development necessitates agile methodologies and rapid deployment cycles. At the core of this transformation lies Continuous Integration and Continuous Delivery (CI/CD), a practice that automates the build, test, and deployment phases of the software lifecycle. Serverless CI/CD represents a paradigm shift, leveraging cloud-native services to create pipelines that are highly scalable, cost-effective, and inherently resilient.

This exploration of serverless CI/CD will delve into its fundamental components, operational benefits, and the practical considerations for implementation across major cloud platforms. We will dissect the architectural principles, examine best practices, and analyze the implications for security and monitoring, providing a thorough understanding of this transformative technology.

Defining Serverless CI/CD

Serverless CI/CD pipelines represent a significant evolution in software development practices, offering increased efficiency and scalability. This approach leverages the principles of serverless computing to automate and streamline the processes of continuous integration and continuous delivery. By understanding the core concepts and advantages, developers can harness the power of serverless technologies to build and deploy software faster and more reliably.

Core Concept of a Serverless CI/CD Pipeline

A serverless CI/CD pipeline is designed to automate the build, test, and deployment of software applications without the need for managing underlying infrastructure. This means that developers do not need to provision, manage, or scale servers manually. Instead, they rely on cloud providers to handle these tasks automatically. The pipeline is triggered by events, such as code commits to a repository, and then executes a series of automated steps.The fundamental concept revolves around the use of Functions as a Service (FaaS) and other serverless technologies.

These technologies allow developers to write and deploy code that runs in response to specific events. This event-driven architecture is central to serverless CI/CD.

Definition of Serverless Computing in the Context of CI/CD

In the context of CI/CD, serverless computing refers to a cloud computing execution model where the cloud provider dynamically manages the allocation of machine resources. Developers write code, typically in the form of functions, and deploy them to the cloud provider’s infrastructure. The cloud provider then executes these functions in response to triggers, such as code pushes or scheduled events.

This eliminates the need for developers to manage servers, virtual machines, or containers.Serverless CI/CD utilizes various serverless services, including:

- FaaS platforms: Such as AWS Lambda, Google Cloud Functions, and Azure Functions, which execute code in response to events.

- Object storage services: Like Amazon S3, Google Cloud Storage, and Azure Blob Storage, used to store artifacts and configurations.

- API gateways: Such as Amazon API Gateway, used to expose serverless functions as APIs.

- CI/CD services: Like AWS CodePipeline, Google Cloud Build, and Azure DevOps, that orchestrate the entire pipeline.

The cloud provider automatically scales the resources based on the demand. This leads to efficient resource utilization and cost optimization.

Advantages of Using Serverless CI/CD Over Traditional Approaches

Serverless CI/CD offers several advantages over traditional CI/CD approaches that rely on managing dedicated servers or virtual machines. These benefits include:

- Reduced Operational Overhead: Developers are freed from the burden of managing servers, patching, and scaling infrastructure. The cloud provider handles these tasks automatically. This allows development teams to focus on writing code and delivering features.

- Improved Scalability and Elasticity: Serverless platforms automatically scale resources up or down based on demand. This ensures that the CI/CD pipeline can handle varying workloads without manual intervention.

- Cost Optimization: With serverless, you pay only for the resources consumed. This “pay-as-you-go” model can significantly reduce costs compared to traditional approaches, where resources are often provisioned for peak load.

- Faster Deployment Times: Serverless CI/CD pipelines can automate the build, test, and deployment processes more efficiently. This results in faster deployment times and quicker feedback loops.

- Increased Developer Productivity: By removing the complexities of infrastructure management, developers can focus on writing and deploying code. This can lead to increased productivity and faster time-to-market.

These advantages make serverless CI/CD a compelling choice for modern software development, particularly for applications with fluctuating workloads and the need for rapid iteration. For example, consider a scenario where an e-commerce platform uses a serverless CI/CD pipeline. During a major sale event, the pipeline automatically scales to handle increased build and deployment requests, ensuring the platform remains responsive and available to customers.

Key Components of a Serverless Pipeline

A serverless CI/CD pipeline leverages cloud-native services to automate the software development lifecycle. This automation reduces operational overhead and allows developers to focus on writing code. The key components work in concert to streamline the build, test, and deployment processes, ensuring faster release cycles and improved application quality.

Code Repository

The code repository is the central location for storing and managing the source code of an application. It serves as the single source of truth for the codebase, allowing developers to collaborate effectively and track changes over time.

- Role: The code repository stores all versions of the source code, enabling version control and facilitating collaboration among developers. It acts as a trigger for the CI/CD pipeline, initiating the build process whenever changes are pushed to the repository.

- Examples: Popular code repository services include GitHub, GitLab, and AWS CodeCommit. These services provide features like version control, branching, and merging, which are essential for managing complex software projects. For instance, a developer might push a new feature branch to GitHub. This action would then trigger the CI/CD pipeline to build, test, and deploy the changes.

Build Tools

Build tools automate the process of compiling source code, managing dependencies, and packaging the application into a deployable artifact. They ensure that the application is built consistently and reproducibly, regardless of the environment.

- Role: Build tools transform source code into an executable or deployable artifact. They manage dependencies, run unit tests, and perform other necessary tasks to prepare the application for deployment. Serverless pipelines often utilize build tools specifically designed for serverless environments.

- Examples: AWS CodeBuild, Jenkins (although typically not serverless, can be integrated), and CircleCI can be integrated into a serverless pipeline. AWS CodeBuild, for example, can be configured to automatically build code pushed to an AWS CodeCommit repository. The build process might involve installing dependencies using npm or pip, compiling the code, and packaging it into a deployment package.

Testing Frameworks

Testing frameworks are crucial for ensuring the quality and reliability of the software. They allow developers to write automated tests that verify the functionality of the application and catch bugs early in the development cycle.

- Role: Testing frameworks execute automated tests to validate the application’s behavior. They cover various testing levels, including unit tests, integration tests, and end-to-end tests. These frameworks provide feedback on the code’s quality and identify potential issues before deployment.

- Examples: Popular testing frameworks include JUnit (for Java), pytest (for Python), and Jest (for JavaScript). In a serverless pipeline, tests might be executed against deployed serverless functions or API endpoints. For instance, a unit test could verify the logic within a serverless function that processes user data. Integration tests could check the interaction between multiple serverless functions or between a function and a database.

Deployment Platforms

Deployment platforms are responsible for deploying the application artifacts to the cloud infrastructure. They handle the provisioning of resources, configuration of services, and management of the deployment process.

- Role: Deployment platforms automate the process of deploying the application to the cloud infrastructure. They handle the provisioning of resources, such as serverless functions, APIs, and databases. They also configure the necessary settings and manage the deployment process, ensuring that the application is deployed correctly and efficiently.

- Examples: AWS SAM (Serverless Application Model), AWS CloudFormation, and Serverless Framework are commonly used deployment platforms. These tools simplify the deployment process by allowing developers to define the infrastructure as code. For example, a developer could use AWS SAM to define a serverless application consisting of an API Gateway, Lambda functions, and a DynamoDB table. The deployment platform would then automatically provision and configure these resources.

Notification and Monitoring Systems

Notification and monitoring systems provide insights into the performance and health of the CI/CD pipeline and the deployed application. They alert developers to issues and enable them to proactively address problems.

- Role: These systems track the progress of the pipeline and provide real-time feedback on the build, test, and deployment processes. They also monitor the application’s performance in production, alerting developers to errors or performance bottlenecks.

- Examples: AWS CloudWatch, Slack, and email notifications are commonly used. AWS CloudWatch can be used to monitor the performance of serverless functions and other AWS services. Slack and email notifications can be configured to alert developers about build failures, deployment successes, or application errors. For example, if a build fails, the CI/CD pipeline can automatically send a notification to the development team via Slack, enabling them to quickly identify and resolve the issue.

Workflow Orchestration Engine

A workflow orchestration engine coordinates the various stages of the CI/CD pipeline, ensuring that tasks are executed in the correct order and that dependencies are handled appropriately.

- Role: The workflow orchestration engine manages the flow of tasks within the pipeline, automating the entire CI/CD process. It defines the sequence of steps, handles dependencies, and manages the execution of each stage.

- Examples: AWS Step Functions, and other similar services are utilized to orchestrate serverless pipelines. AWS Step Functions, for instance, can be used to define a workflow that starts with a code commit to a repository, triggers a build using AWS CodeBuild, runs tests, and then deploys the application using AWS SAM. The engine ensures that each step is completed successfully before proceeding to the next.

Interactions Between Components

The components interact seamlessly during the CI/CD process. A typical workflow begins with a code commit to the repository. This triggers the build process, which uses build tools to compile and package the code. Automated tests are then executed using testing frameworks. If the tests pass, the deployment platform deploys the application to the cloud infrastructure.

Throughout this process, notification and monitoring systems provide feedback and insights. The workflow orchestration engine coordinates all these steps.

Benefits of Serverless CI/CD

Serverless CI/CD pipelines offer a compelling array of advantages over traditional, infrastructure-managed approaches. These benefits span cost efficiency, scalability, and enhanced security, making serverless a strategically sound choice for modern software development practices. The adoption of serverless CI/CD can significantly streamline development workflows and optimize resource utilization.

Cost Benefits of Implementing a Serverless CI/CD Pipeline

The cost benefits of serverless CI/CD are primarily driven by the “pay-per-use” model. This model eliminates the need for provisioning and maintaining dedicated infrastructure, leading to substantial cost savings.

- Reduced Infrastructure Costs: Serverless CI/CD eliminates the need to provision and manage servers, virtual machines, or containers. Instead, resources are consumed only when tasks are executed, and you are billed only for the actual compute time and resources used. This contrasts sharply with traditional CI/CD systems where you pay for idle resources. For example, a small startup might see a 70% reduction in infrastructure costs by moving to serverless, according to case studies from AWS, Google Cloud, and Azure.

- Optimized Resource Utilization: Serverless platforms automatically scale resources up or down based on demand. This ensures that you are not paying for unused capacity, a common issue with traditional CI/CD systems that often over-provision resources to handle peak loads.

- Elimination of Operational Overhead: Serverless providers handle all the underlying infrastructure management, including server maintenance, patching, and scaling. This frees up DevOps teams to focus on developing and deploying code, rather than managing infrastructure. This leads to increased developer productivity and lower operational costs.

- Predictable Pricing: Serverless pricing models are typically transparent and predictable, making it easier to forecast costs. You pay only for the specific resources you consume, such as the number of function invocations, the amount of memory used, and the duration of execution. This allows for better budget management.

Scalability Aspects of Serverless CI/CD

Scalability is a core strength of serverless CI/CD. The architecture inherently supports automatic scaling, providing unparalleled flexibility and responsiveness to changing workloads. This contrasts sharply with traditional methods, where scaling often involves manual intervention and can be time-consuming.

- Automatic Scaling: Serverless platforms automatically scale compute resources up or down based on demand. This means that the CI/CD pipeline can handle sudden spikes in build requests or test executions without manual intervention. The scaling is typically handled by the cloud provider, ensuring that resources are available when needed.

- High Availability: Serverless architectures are designed for high availability. The cloud provider manages the underlying infrastructure, ensuring that the CI/CD pipeline remains operational even if individual servers or services fail. This minimizes downtime and ensures that builds and deployments can continue uninterrupted.

- Faster Deployment Times: The ability to scale resources quickly allows for faster deployment times. With serverless, the CI/CD pipeline can rapidly provision the resources needed to build, test, and deploy code, reducing the time it takes to get new features and updates to users.

- Improved Developer Productivity: The automatic scaling and high availability of serverless CI/CD frees up developers from having to manage infrastructure. This allows them to focus on writing and testing code, leading to increased productivity and faster release cycles. For example, companies using serverless CI/CD often report a 20-30% reduction in deployment times.

Security Advantages Offered by Serverless Architectures

Serverless architectures provide several security advantages over traditional CI/CD pipelines. These advantages stem from the reduced attack surface, the inherent security features of the cloud provider, and the ability to implement granular access controls.

- Reduced Attack Surface: Serverless architectures have a smaller attack surface compared to traditional systems. Because there are no servers to manage, there are fewer potential points of entry for attackers. The cloud provider manages the underlying infrastructure, including security patching and vulnerability management.

- Enhanced Security Features: Serverless platforms often provide built-in security features, such as identity and access management (IAM), encryption at rest and in transit, and network security controls. These features can be used to protect the CI/CD pipeline and the data it processes.

- Granular Access Controls: Serverless platforms allow for granular access controls, which means that you can restrict access to specific resources and operations based on the principle of least privilege. This helps to prevent unauthorized access and data breaches.

- Automated Security Auditing: Many serverless platforms provide automated security auditing tools that can be used to identify and remediate security vulnerabilities. These tools can help you to ensure that your CI/CD pipeline is secure and compliant with industry regulations. For instance, using serverless CI/CD in conjunction with a managed security service like AWS Security Hub or Azure Security Center can automatically detect and respond to security threats.

Implementing Continuous Integration (CI)

Implementing Continuous Integration (CI) within a serverless CI/CD pipeline necessitates a strategic approach to leverage the scalability, cost-effectiveness, and agility offered by serverless functions. The core objective is to automate the process of integrating code changes frequently, ensuring rapid feedback and early detection of integration issues. This section details the design, organization, and triggering mechanisms inherent in a serverless CI process.

Design of a CI Process Using Serverless Functions

Designing a CI process utilizing serverless functions involves defining the workflow steps, function interactions, and data flow required to build, test, and validate code changes automatically. The design should prioritize modularity, concurrency, and fault tolerance to optimize performance and maintainability.The general architecture comprises the following key components:* Code Repository Integration: The CI process begins with integration with a code repository such as GitHub, GitLab, or Bitbucket.

This integration enables the CI pipeline to be triggered by events like code commits, pull requests, or branch merges.

Triggering Mechanism

An event trigger, typically provided by the code repository or a cloud service, initiates the CI pipeline upon a specified event. For instance, a push event to a designated branch triggers the CI process.

Build Function

This serverless function is responsible for compiling the source code, managing dependencies, and packaging the application. It typically retrieves the code from the repository, executes build commands (e.g., `npm install`, `mvn package`), and produces deployable artifacts.

Test Function(s)

One or more serverless functions execute automated tests, including unit tests, integration tests, and potentially performance tests. These functions run the test suites against the built artifacts and report the results.

Artifact Storage

A serverless object storage service (e.g., Amazon S3, Google Cloud Storage, Azure Blob Storage) stores the built artifacts, test reports, and any other relevant output generated during the CI process.

Notification Function

This function handles notifications, such as sending emails, posting messages to Slack, or updating dashboards, based on the CI process’s outcome. It informs developers about the build and test results.

Pipeline Orchestration

A serverless orchestration service (e.g., AWS Step Functions, Google Cloud Workflows, Azure Logic Apps) coordinates the execution of the serverless functions, manages dependencies, and ensures the correct order of operations. This service defines the workflow and handles error conditions.

Organization of Automated Testing Steps within a Serverless CI Pipeline

Organizing automated testing steps within a serverless CI pipeline requires a structured approach to ensure comprehensive test coverage and efficient execution. This structure involves defining the types of tests, the order of execution, and the reporting mechanisms.The automated testing steps typically include:* Unit Tests: These tests verify the functionality of individual code units (e.g., functions, classes) in isolation.

They are designed to be fast and focused on specific code segments. Unit tests are often the first tests to run in the CI pipeline.

Integration Tests

These tests verify the interaction between different components or modules of the application. They ensure that the components work correctly together. Integration tests often involve testing the communication between different services or systems.

Component Tests

These tests validate the functionality of a specific component, which can be a UI element or a microservice.

End-to-End (E2E) Tests

These tests simulate user interactions with the entire application to ensure that the application functions as expected from the user’s perspective. They test the complete workflow of the application.

Performance Tests

These tests evaluate the application’s performance under different load conditions, such as stress tests and load tests. Performance tests can identify bottlenecks and ensure the application can handle the expected traffic.

Security Tests

These tests verify the application’s security posture, including vulnerability scans, penetration tests, and security audits. Security tests ensure the application is protected against common security threats.The execution order of the tests typically follows a pyramid model, with a large number of unit tests at the base and fewer, more comprehensive tests at the top. This structure prioritizes fast feedback from unit tests and ensures that more complex tests are executed only after the simpler tests have passed.

CI Triggers

CI triggers initiate the serverless CI pipeline based on various events. These triggers ensure that the pipeline runs automatically whenever changes are made to the codebase or according to a predefined schedule.Common CI triggers include:* Code Commits: The most frequent trigger is a code commit to the code repository. Each commit to a specific branch (e.g., `main`, `develop`) can automatically trigger the CI pipeline.

This trigger ensures that every code change is immediately tested.

Pull Requests

When a pull request is created, the CI pipeline can be triggered to build and test the proposed changes. This trigger allows developers to validate their code before merging it into the main branch.

Branch Merges

When a branch is merged into another branch (e.g., `feature` into `main`), the CI pipeline can be triggered. This ensures that merged code changes are tested immediately.

Scheduled Runs

The CI pipeline can be scheduled to run at specific times or intervals (e.g., daily, weekly). Scheduled runs can be used for nightly builds, regression testing, or performance monitoring.

Manual Triggers

Developers can manually trigger the CI pipeline for specific builds or tests. Manual triggers are useful for debugging, re-running failed tests, or triggering tests in a specific environment.

Tag Creation

Creating a tag in the repository can trigger the CI pipeline. This can be useful for building and deploying releases.

Dependency Updates

When dependencies are updated (e.g., a new version of a library is released), the CI pipeline can be triggered to rebuild and test the application with the updated dependencies. This helps ensure compatibility and prevent issues caused by outdated dependencies.

Webhooks

Webhooks from external services (e.g., security scanners, monitoring tools) can trigger the CI pipeline. This can be used to integrate the CI pipeline with other tools and services.

Implementing Continuous Delivery (CD)

Continuous Delivery (CD) automates the process of releasing software changes to production. In a serverless context, CD streamlines the deployment of functions, APIs, and other serverless components. This automation minimizes manual intervention, reduces the risk of errors, and accelerates the release cycle, enabling faster delivery of value to end-users. The core objective is to ensure that code changes, once integrated and tested, can be reliably and frequently released.

Deploying Serverless Applications: The CD Process

The CD process for serverless applications typically involves several stages, automated through a pipeline. This pipeline starts with the successful completion of the Continuous Integration (CI) phase and progresses through automated testing, environment provisioning, and finally, deployment to production.The typical CD process for serverless applications includes:

- Artifact Creation and Storage: Following successful CI, the build artifacts (e.g., packaged code, configuration files) are created and stored in a designated repository, such as an Amazon S3 bucket or a container registry.

- Environment Provisioning: The CD pipeline provisions the necessary infrastructure resources for the deployment environment, such as creating or updating serverless function configurations (e.g., memory allocation, timeout settings), API gateways, and databases. Tools like AWS CloudFormation, Terraform, or Serverless Framework are frequently employed for Infrastructure as Code (IaC).

- Automated Testing: Comprehensive automated testing, including unit tests, integration tests, and potentially end-to-end tests, is executed against the deployed application in a staging or pre-production environment. This stage verifies the functionality and performance of the serverless components.

- Deployment: The serverless application is deployed to the production environment. This involves updating function code, API configurations, and other related resources. Deployment strategies, discussed in detail below, are employed to minimize downtime and risk.

- Verification and Monitoring: Post-deployment, the CD pipeline automatically verifies the application’s functionality in production. This may involve running smoke tests or monitoring key metrics. Continuous monitoring is established to track application health, performance, and error rates.

- Rollback Strategy: A rollback strategy is defined and automated to quickly revert to a previous stable version in case of deployment failures or critical issues. This typically involves deploying a previous artifact version or modifying environment variables to point to a previous version.

Deployment Strategies in Serverless Environments

Different deployment strategies can be used to minimize downtime, reduce risk, and facilitate testing in serverless environments. Choosing the appropriate strategy depends on factors like application complexity, traffic volume, and the desired level of risk tolerance.

- In-Place Deployment: This is the simplest strategy, where the new code directly replaces the existing code. This approach is generally suitable for small, less critical applications or during the initial development phases. However, it can lead to downtime if the deployment process takes a long time or if issues arise during the update.

- Canary Deployment: A small subset of production traffic is routed to the new version of the application (the “canary”). If the canary performs as expected, the traffic is gradually increased until all traffic is routed to the new version. This strategy allows for thorough testing in a production environment and minimizes the impact of potential issues.

- Blue/Green Deployment: Two identical environments are maintained: the “blue” environment (current production) and the “green” environment (new version). Once the green environment is fully tested, traffic is switched from the blue to the green environment, often using a traffic management system like a load balancer or API gateway. This approach provides zero-downtime deployments and allows for easy rollbacks.

- Rolling Deployment: The new version of the application is deployed to a subset of the infrastructure, while the rest continues to serve the old version. As the new version proves stable, more instances are updated until the entire infrastructure is running the new version. This provides a gradual update process and minimizes downtime.

- A/B Testing: Different versions of the application are simultaneously deployed and traffic is split between them based on predefined rules (e.g., user attributes, geographic location). This strategy is primarily used for experimentation and gathering user feedback on different features or designs.

Comparison of CD Methods

The following table compares different Continuous Delivery (CD) methods, considering aspects like downtime, rollback complexity, and testing capabilities.

| Deployment Strategy | Downtime | Rollback Complexity | Testing Capabilities | Suitable Use Cases |

|---|---|---|---|---|

| In-Place Deployment | Potentially High (dependent on deployment time) | Simple (revert to previous version) | Limited (typically after deployment) | Small applications, development environments |

| Canary Deployment | Minimal | Moderate (route traffic back to old version) | Excellent (testing in production with real traffic) | Applications requiring production testing with minimal impact |

| Blue/Green Deployment | Zero | Simple (switch traffic back to blue environment) | Excellent (testing in a separate environment) | Critical applications requiring zero downtime |

| Rolling Deployment | Minimal | Moderate (gradual rollback) | Good (testing during rollout) | Large-scale applications, infrastructure with multiple instances |

Choosing the Right Tools

Selecting the appropriate tools is a critical step in establishing a robust and efficient serverless CI/CD pipeline. The choice of tools significantly impacts the pipeline’s performance, scalability, and maintainability. A well-chosen toolset streamlines the development lifecycle, automates key processes, and ultimately contributes to faster and more reliable software deployments.

Process of Tool Selection

The selection process involves a methodical approach that considers several factors. This ensures the chosen tools align with project needs and organizational goals.The process can be summarized as follows:

- Requirement Analysis: The initial step involves a thorough understanding of project requirements. This includes identifying the specific tasks the CI/CD pipeline needs to automate, the programming languages and frameworks used, the target cloud provider (AWS, Azure, Google Cloud), and the desired level of automation and monitoring. For example, if a project uses Python and targets AWS, the tool selection will differ from a project using Java and targeting Azure.

- Vendor Evaluation: Researching and evaluating available tools is crucial. This involves exploring the features, pricing models, and community support of different options. It is also important to assess the tool’s integration capabilities with other existing systems, such as version control systems (Git), container registries (Docker Hub, Amazon ECR), and monitoring platforms (CloudWatch, Datadog).

- Proof of Concept (PoC): Conducting a PoC is essential to validate the selected tools in a real-world scenario. This involves setting up a small-scale pipeline using the chosen tools and testing their functionalities. The PoC helps identify potential issues, assess performance, and gain hands-on experience with the tools before full-scale implementation.

- Cost Analysis: Consider the total cost of ownership (TCO) for each tool. This includes not only the initial licensing or subscription fees but also the costs associated with infrastructure, maintenance, and training. For serverless CI/CD, pay-per-use pricing models are common, so the cost analysis must consider usage patterns and potential scaling needs.

- Security Considerations: Security is a paramount concern. Evaluate the security features of each tool, including authentication, authorization, encryption, and vulnerability management. Ensure that the tools comply with relevant security standards and best practices.

- Documentation and Support: Assess the availability and quality of documentation and support resources. Comprehensive documentation, active community forums, and responsive vendor support are critical for troubleshooting issues and staying up-to-date with the latest features and updates.

Popular Serverless CI/CD Tools

Several tools are popular for building serverless CI/CD pipelines. These tools provide different functionalities and cater to various needs.

- AWS CodePipeline: AWS CodePipeline is a fully managed CI/CD service provided by Amazon Web Services. It enables the automation of build, test, and deployment processes.

- AWS CodeBuild: AWS CodeBuild is a fully managed build service that compiles source code, runs tests, and produces software packages. It supports a wide range of programming languages and build tools.

- AWS CodeDeploy: AWS CodeDeploy is a deployment service that automates the deployment of applications to various compute services, including AWS Lambda functions.

- Azure DevOps: Azure DevOps is a comprehensive platform that provides a suite of services for managing the entire software development lifecycle, including CI/CD pipelines.

- Azure Pipelines: Azure Pipelines is a CI/CD service within Azure DevOps that allows you to automate the build, test, and deployment of your applications.

- Google Cloud Build: Google Cloud Build is a fully managed CI/CD service on Google Cloud Platform (GCP). It enables the automation of builds, tests, and deployments.

- GitHub Actions: GitHub Actions is a CI/CD platform integrated directly into GitHub repositories. It allows you to automate workflows for building, testing, and deploying code.

- GitLab CI/CD: GitLab CI/CD is a CI/CD solution integrated into the GitLab platform. It provides features for managing the entire software development lifecycle, including version control, issue tracking, and CI/CD pipelines.

- Serverless Framework: The Serverless Framework is an open-source framework for building serverless applications. It provides tools for managing the deployment and configuration of serverless functions and resources.

Criteria for Evaluating Tools

Evaluating tools based on project requirements involves a systematic assessment of various criteria. These criteria help determine the suitability of a tool for a specific project.The evaluation criteria include:

- Integration Capabilities: Assess the tool’s ability to integrate with existing systems, such as version control systems (Git, GitHub), cloud providers (AWS, Azure, GCP), container registries (Docker Hub, ECR, ACR, GCR), and monitoring platforms (CloudWatch, Azure Monitor, Cloud Monitoring).

- Ease of Use: Consider the tool’s user interface, documentation, and learning curve. A user-friendly tool can reduce development time and improve developer productivity.

- Scalability and Performance: Evaluate the tool’s ability to handle increasing workloads and scale efficiently. Serverless CI/CD tools should be able to scale automatically to accommodate changing demands.

- Cost-Effectiveness: Analyze the tool’s pricing model and assess its overall cost-effectiveness. Consider pay-per-use pricing, which is common in serverless environments, and estimate the potential costs based on usage patterns.

- Security Features: Review the tool’s security features, including authentication, authorization, encryption, and vulnerability management. Ensure that the tool complies with relevant security standards and best practices.

- Community Support and Documentation: Evaluate the availability and quality of documentation, community forums, and vendor support. Comprehensive documentation, active community forums, and responsive vendor support are essential for troubleshooting issues and staying up-to-date.

- Customization Options: Assess the tool’s flexibility and customization options. The ability to customize the tool to meet specific project requirements is crucial.

- Support for Serverless Technologies: Ensure that the tool supports the serverless technologies used in the project, such as AWS Lambda, Azure Functions, and Google Cloud Functions.

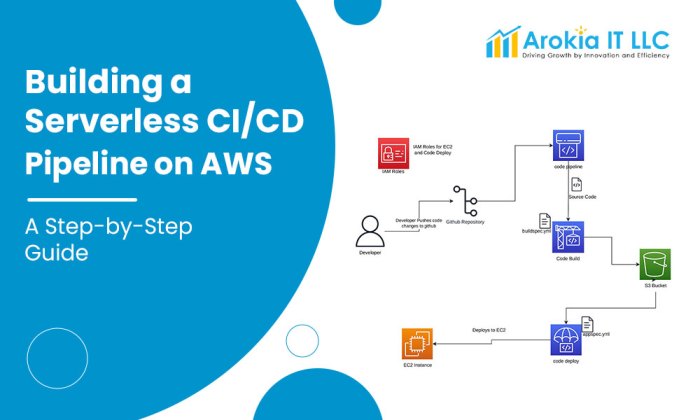

Serverless CI/CD on AWS

Implementing serverless CI/CD on Amazon Web Services (AWS) offers a robust and scalable approach to automate software development lifecycles. Leveraging AWS services, developers can create pipelines that automatically build, test, and deploy code changes with minimal operational overhead. This section details the implementation, best practices, and architectural considerations for serverless CI/CD pipelines on AWS.

Implementing Serverless CI/CD using AWS Services

AWS provides a suite of services designed to facilitate serverless CI/CD pipelines. These services work in concert to automate the various stages of the software development lifecycle. A typical implementation involves the following key components.

- AWS CodePipeline: This is the orchestration service that acts as the central hub for the CI/CD pipeline. It defines the stages, transitions, and actions involved in building, testing, and deploying code. CodePipeline integrates with other AWS services and third-party tools to automate the entire release process.

- AWS CodeCommit/CodeStar: AWS CodeCommit provides a secure, highly scalable, and managed source control service. Alternatively, AWS CodeStar offers a unified interface for managing the entire software development process, including source code management, build, test, and deployment.

- AWS CodeBuild: CodeBuild is a fully managed build service that compiles source code, runs tests, and produces software packages. It supports various programming languages and build tools, eliminating the need to manage build servers.

- AWS CodeDeploy: CodeDeploy automates code deployments to various compute services, such as Amazon EC2, AWS Lambda, and on-premises servers. It handles tasks like application updates, rollback, and traffic management.

- AWS Lambda: Lambda is a serverless compute service that runs code in response to events. It’s frequently used in CI/CD pipelines for tasks such as running automated tests, performing custom transformations, and deploying serverless applications.

- Amazon S3: Amazon Simple Storage Service (S3) serves as a storage location for artifacts, such as build outputs and deployment packages.

- Amazon CloudWatch: CloudWatch monitors the performance and health of the CI/CD pipeline. It provides metrics, logs, and alarms to track the pipeline’s behavior and troubleshoot issues.

Best Practices for Setting Up a Serverless CI/CD Pipeline on AWS

Adhering to best practices is crucial for creating efficient, reliable, and secure serverless CI/CD pipelines on AWS. These practices help optimize performance, reduce costs, and improve the overall development experience.

- Infrastructure as Code (IaC): Implement IaC using tools like AWS CloudFormation or Terraform to define and manage the pipeline’s infrastructure. This approach enables repeatable deployments, version control, and automated updates.

- Automated Testing: Integrate comprehensive automated testing at various stages of the pipeline, including unit tests, integration tests, and end-to-end tests. This ensures code quality and prevents defects from reaching production.

- Immutable Deployments: Employ immutable deployments, where each deployment creates a new version of the application instead of updating the existing one. This approach simplifies rollback and reduces the risk of deployment-related issues.

- Blue/Green Deployments: Implement blue/green deployments to minimize downtime during deployments. This involves running two identical environments (blue and green) and switching traffic between them.

- Security Best Practices: Implement robust security measures, including secure code repositories, access control, and encryption. Use AWS Identity and Access Management (IAM) to manage permissions and restrict access to sensitive resources.

- Monitoring and Logging: Set up comprehensive monitoring and logging to track the pipeline’s performance and identify potential issues. Use CloudWatch to collect metrics, logs, and alarms.

- Version Control: Integrate source code management using AWS CodeCommit, Git, or a similar system. This is crucial for versioning, collaboration, and traceability of code changes.

- Cost Optimization: Optimize costs by leveraging the pay-as-you-go pricing model of serverless services. Monitor resource usage and identify opportunities to reduce costs, such as by optimizing build times and using appropriate instance sizes.

AWS Serverless CI/CD Architecture Diagram

The following diagram illustrates a typical AWS serverless CI/CD architecture, demonstrating the flow of code from source to deployment.

Diagram Description: The diagram depicts a CI/CD pipeline that begins with a developer committing code to a source code repository (e.g., AWS CodeCommit). CodePipeline triggers the pipeline. CodeBuild then compiles the code, runs unit tests, and packages the application. If the build and tests are successful, CodeDeploy deploys the application to a target environment (e.g., AWS Lambda or Amazon EC2). CloudWatch monitors the application and pipeline, providing logs, metrics, and alerts.

S3 is used for storing build artifacts. The pipeline stages are linked with arrows to indicate the flow of data.

This visual representation clearly illustrates the interaction of various AWS services within a serverless CI/CD pipeline.

Serverless CI/CD on Azure

Implementing serverless CI/CD on Azure leverages the platform’s extensive suite of services to automate software builds, tests, and deployments. This approach offers scalability, cost-efficiency, and reduced operational overhead. The process typically involves integrating Azure DevOps for source control and pipeline orchestration with Azure Functions for executing build steps, tests, and deployments. This allows developers to focus on code while the platform handles the underlying infrastructure.

Implementing Serverless CI/CD using Azure Services

Azure provides a comprehensive set of services to build a serverless CI/CD pipeline. The core components include Azure DevOps for source control and pipeline definition, Azure Functions for executing build and deployment steps, and Azure Storage for artifact storage. This integrated approach streamlines the entire software development lifecycle.

- Azure DevOps: Serves as the central hub for the CI/CD pipeline. It provides features for version control (using Azure Repos, which supports Git), build definitions, release pipelines, and test management. Azure DevOps facilitates the creation and management of build and release pipelines, orchestrating the various stages of the CI/CD process.

- Azure Functions: Azure Functions allows developers to execute code without managing servers. It supports various programming languages and triggers, making it ideal for running build scripts, tests, and deployment tasks. Functions are triggered by events, such as code commits to the repository or scheduled tasks.

- Azure Storage: Azure Storage, particularly Azure Blob Storage, provides a scalable and cost-effective solution for storing build artifacts, deployment packages, and other pipeline-related data. Artifacts are typically stored after the build process and retrieved during the deployment phase.

- Azure Container Registry (ACR): For containerized applications, ACR acts as a private Docker registry. It stores and manages container images used during the build and deployment processes, streamlining the deployment of containerized applications.

- Azure App Service or other Deployment Targets: Azure App Service or other Azure services (like Azure Kubernetes Service – AKS) are used to host the deployed applications. App Service provides a platform-as-a-service (PaaS) environment for web applications, APIs, and mobile backends.

Integration of Azure Services for a Seamless CI/CD Process

The integration of Azure services is crucial for creating a seamless CI/CD pipeline. This integration enables automation and efficiency throughout the software development lifecycle. The orchestration of these services leads to streamlined builds, automated testing, and efficient deployments.

- Source Code Integration with Azure DevOps: Developers commit code changes to a repository within Azure DevOps (Azure Repos). This triggers a build pipeline.

- Triggering Builds with Pipelines: When a code change is detected, an Azure DevOps pipeline is triggered. The pipeline can be configured to run automatically on every commit, on a schedule, or manually.

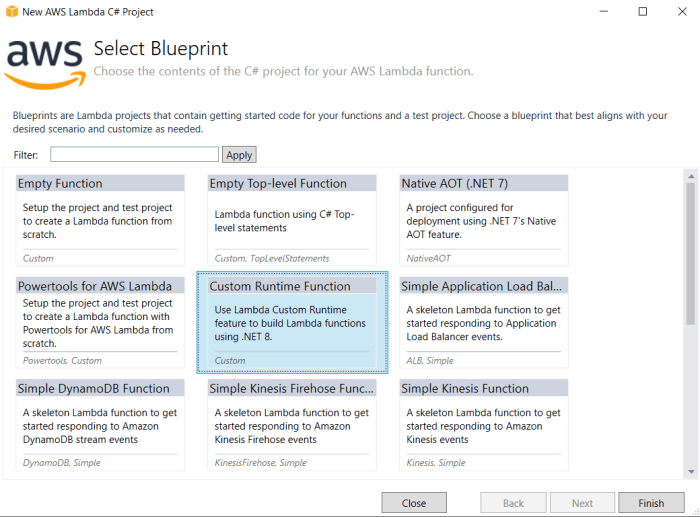

- Build Process with Azure Functions: The build pipeline can utilize Azure Functions to perform tasks like compiling code, running unit tests, and creating deployment packages. Azure Functions can be triggered by the pipeline and execute these tasks serverlessly. For instance, a function might compile a C# application using the .NET SDK.

- Artifact Storage with Azure Storage: Build artifacts (e.g., compiled binaries, packages) are stored in Azure Blob Storage after the build process. This provides a central location for deployment packages.

- Deployment Process with Release Pipelines: Release pipelines in Azure DevOps orchestrate the deployment of artifacts to various environments (e.g., development, staging, production). They leverage Azure Functions for tasks like deploying the application to Azure App Service or AKS.

- Testing Integration: The CI/CD pipeline integrates automated testing, including unit tests, integration tests, and end-to-end tests. Test results are reported and can be used to trigger further actions, such as blocking a deployment if tests fail.

- Monitoring and Logging: Azure Monitor and Application Insights provide monitoring and logging capabilities for the entire pipeline. This allows developers to track the performance of the pipeline, identify issues, and analyze deployment metrics.

Securing a Serverless CI/CD Pipeline on Azure

Securing a serverless CI/CD pipeline is paramount to protect the code, build processes, and deployed applications. Implementing security best practices ensures the confidentiality, integrity, and availability of the entire system.

- Role-Based Access Control (RBAC): Utilize RBAC in Azure to restrict access to resources based on roles and responsibilities. This limits the blast radius if an account is compromised. Grant the least privilege necessary for each user and service principal.

- Service Principals and Managed Identities: Use service principals or managed identities to authenticate Azure Functions and other services. Service principals allow Azure services to authenticate and access other Azure resources without requiring user credentials. Managed identities eliminate the need for developers to manage credentials.

- Secure Storage of Secrets: Store sensitive information like API keys, connection strings, and passwords in Azure Key Vault. Access these secrets securely within Azure Functions using managed identities. This avoids hardcoding sensitive data in the code.

- Network Security: Secure the network by restricting access to Azure resources. Use private endpoints and virtual networks to isolate the CI/CD pipeline and deployed applications. Implement network security groups (NSGs) to control inbound and outbound traffic.

- Pipeline Security: Secure the Azure DevOps pipelines themselves.

- Protect Branches: Enforce branch policies to protect important branches (e.g., `main` or `develop`) from direct pushes. Require code reviews and build validation before merging changes.

- Pipeline Permissions: Restrict pipeline permissions to prevent unauthorized access or modification. Configure pipelines to only run for authorized users or groups.

- Secure Artifacts: Protect build artifacts during storage and deployment. Use encryption and access controls to prevent unauthorized access.

- Regular Security Audits: Conduct regular security audits to identify and address vulnerabilities. Use Azure Security Center to monitor the security posture of the CI/CD pipeline and deployed applications.

- Vulnerability Scanning: Integrate vulnerability scanning into the CI/CD pipeline to identify and remediate security vulnerabilities in the code and dependencies. Use tools like SonarQube or other static analysis tools to scan the code for potential security issues.

- Monitoring and Alerting: Implement monitoring and alerting to detect and respond to security incidents. Use Azure Monitor and security logs to track pipeline activity, access attempts, and potential security breaches. Configure alerts to notify security teams of suspicious activity.

Serverless CI/CD on Google Cloud

Building a serverless CI/CD pipeline on Google Cloud Platform (GCP) offers significant advantages in terms of scalability, cost-effectiveness, and automation. This approach leverages GCP’s serverless services to streamline the software development lifecycle, from code commit to production deployment. The core philosophy centers on eliminating the need for managing infrastructure, allowing developers to focus on writing and deploying code.

Building a Serverless CI/CD Pipeline with Google Cloud Services

Creating a serverless CI/CD pipeline on Google Cloud typically involves integrating several key services. This integration allows for an automated and efficient process, minimizing manual intervention and improving the speed of deployments.The typical process includes:

- Code Repository Integration: The process begins with a code repository, such as Google Cloud Source Repositories, GitHub, or GitLab. These repositories trigger the CI/CD pipeline upon code changes, such as pushes to specific branches.

- Cloud Build: Cloud Build is the core CI/CD service on GCP. It automates the building, testing, and deployment of code. Cloud Build uses build configurations defined in a YAML file (

cloudbuild.yaml) to specify the steps of the build process. - Artifact Registry: After the build process completes, artifacts (e.g., container images, libraries) are stored in Artifact Registry. This provides a centralized location for managing and versioning build artifacts.

- Cloud Functions or Cloud Run Deployment: Depending on the application type, deployment can be handled by Cloud Functions for event-driven, serverless functions or Cloud Run for containerized applications. Cloud Run provides a fully managed platform for running stateless containers.

- Cloud Deploy (Optional): For more complex deployments, especially those involving multiple environments (e.g., staging, production), Cloud Deploy can orchestrate the deployment process, managing rollouts, and providing advanced features like canary deployments.

- Monitoring and Logging: Cloud Monitoring and Cloud Logging are used to track the performance and health of the deployed applications. This includes collecting metrics, generating alerts, and analyzing logs to identify and resolve issues.

Steps for Deploying Applications to Google Cloud Using a Serverless Approach

Deploying applications using a serverless approach on Google Cloud involves a series of well-defined steps to ensure a smooth and automated process. This process is crucial for efficient software delivery.The standard steps include:

- Code Commit: Developers commit code changes to a supported repository (e.g., Cloud Source Repositories, GitHub). This action triggers the CI/CD pipeline.

- Build Trigger: Cloud Build is triggered based on the code commit event. This can be configured to trigger on pushes to specific branches or tags.

- Build Process: Cloud Build executes the steps defined in the

cloudbuild.yamlfile. These steps typically include:- Downloading dependencies.

- Building the application.

- Running tests.

- Creating container images (if applicable).

- Storing artifacts in Artifact Registry.

- Deployment: Depending on the application type and deployment strategy, Cloud Build deploys the application to Cloud Functions or Cloud Run. This step can also involve configuring Cloud Deploy for more complex deployment scenarios.

- Testing and Validation: After deployment, automated tests can be run to validate the application’s functionality. This ensures the application is working correctly in the target environment.

- Monitoring and Logging: Cloud Monitoring and Cloud Logging are configured to collect metrics and logs. This allows for monitoring the application’s performance and identifying any issues.

Example of a Google Cloud Serverless CI/CD Configuration File

A sample cloudbuild.yaml file provides a basic configuration for building and deploying a simple application to Cloud Run. This example showcases the core elements of a Cloud Build configuration.

steps:-name: 'gcr.io/cloud-builders/docker'

args: ['build', '-t', 'gcr.io/$PROJECT_ID/my-app', '.']-name: 'gcr.io/cloud-builders/docker'

args: ['push', 'gcr.io/$PROJECT_ID/my-app']-name: 'gcr.io/google-cloud-sdk/cloud-sdk'

args:-'gcloud'

-'run'

-'deploy'

-'my-app'

-'--image=gcr.io/$PROJECT_ID/my-app'

-'--region=us-central1'

Explanation:

- The first step uses the Docker builder to build a Docker image from the current directory (‘.’) and tags it with the project ID and application name.

- The second step pushes the built image to Google Container Registry (GCR).

- The third step uses the Google Cloud SDK to deploy the image to Cloud Run in the us-central1 region.

Monitoring and Logging in Serverless CI/CD

Monitoring and logging are critical components of a serverless CI/CD pipeline, providing essential insights into the performance, health, and behavior of the system. Without robust monitoring and logging practices, identifying and resolving issues, optimizing performance, and ensuring the reliability of the deployment process becomes significantly more challenging. This section details the importance of these practices and explores methods and tools for their effective implementation.

Importance of Monitoring in a Serverless CI/CD Environment

Monitoring plays a crucial role in the success of a serverless CI/CD pipeline. It provides real-time visibility into the pipeline’s operation, enabling proactive identification and resolution of problems. The ephemeral nature of serverless functions and the distributed architecture necessitate a centralized and comprehensive monitoring strategy.

- Performance Optimization: Monitoring allows for the identification of bottlenecks and inefficiencies in the pipeline. By analyzing metrics such as execution time, memory usage, and error rates, developers can optimize the performance of serverless functions and the overall pipeline. For example, if a function consistently exceeds its allocated memory, monitoring data can trigger alerts and prompt code optimization or resource adjustments.

- Error Detection and Troubleshooting: Monitoring tools provide valuable information for identifying and diagnosing errors. Detailed logs and traces help pinpoint the root cause of issues, allowing for faster resolution. For instance, if a deployment fails, the monitoring system can provide information about which function failed, the specific error message, and the associated logs, facilitating rapid troubleshooting.

- Security Auditing and Compliance: Monitoring helps ensure the security of the CI/CD pipeline and the deployed applications. By tracking access patterns, security events, and potential vulnerabilities, organizations can maintain a secure environment and meet compliance requirements. For example, monitoring can identify unauthorized access attempts or suspicious activity within the pipeline.

- Cost Management: Monitoring helps to understand the resource consumption and cost associated with the CI/CD pipeline. This is particularly important in serverless environments where cost is directly related to resource usage. By monitoring metrics like function invocations and execution time, organizations can optimize resource allocation and minimize costs.

- Automated Alerting and Incident Response: Monitoring systems can be configured to trigger alerts based on predefined thresholds or anomalies. This allows for automated incident response, enabling developers to address issues promptly. For instance, if the error rate of a function exceeds a certain threshold, the monitoring system can automatically trigger an alert, notify the responsible team, and initiate a rollback or other remediation steps.

Methods for Implementing Logging and Tracing in a Serverless Pipeline

Effective logging and tracing are essential for gaining insights into the operation of a serverless CI/CD pipeline. Implementing these practices involves capturing relevant information at various stages of the pipeline and making it accessible for analysis.

- Structured Logging: Implement structured logging to ensure that logs are easily searchable and analyzable. Structured logs use a consistent format (e.g., JSON) to capture key information, such as timestamps, log levels, function names, request IDs, and error messages. This allows for efficient filtering, aggregation, and analysis of log data. For example, a log entry might include the following information:

"timestamp": "2024-10-27T10:00:00Z", "level": "ERROR", "function": "processData", "requestId": "12345", "message": "Failed to process data", "error": "Invalid input" - Centralized Logging: Utilize a centralized logging service to aggregate logs from all components of the serverless pipeline. This provides a single pane of glass for viewing and analyzing log data. Popular centralized logging services include Amazon CloudWatch Logs, Azure Monitor, and Google Cloud Logging.

- Distributed Tracing: Implement distributed tracing to track requests as they flow through the serverless pipeline. Distributed tracing tools, such as AWS X-Ray, Azure Application Insights, and Google Cloud Trace, correlate requests across multiple functions and services, providing end-to-end visibility into the request flow. This enables developers to identify performance bottlenecks and understand the dependencies between different components. The tracing system will assign a unique ID to each request as it enters the pipeline.

As the request flows through different functions, the tracing system propagates this ID and records information about each function’s execution, including start and end times, latency, and any errors encountered. This information is then visualized in a trace diagram, allowing developers to understand the request’s journey and identify areas for optimization.

- Context Propagation: Propagate context, such as request IDs and correlation IDs, throughout the pipeline to ensure that logs and traces can be correlated. This allows for linking related events across different functions and services.

- Logging Best Practices:

- Log at appropriate levels (e.g., DEBUG, INFO, WARNING, ERROR) to capture relevant information without overwhelming the system.

- Include contextual information, such as function names, request IDs, and timestamps, in log messages.

- Avoid logging sensitive information, such as passwords or API keys.

- Regularly review and archive logs to manage storage costs and comply with retention policies.

Tools and Techniques for Monitoring the Performance of Serverless Functions

Monitoring the performance of serverless functions is critical for optimizing the CI/CD pipeline and ensuring the reliability of deployed applications. Several tools and techniques can be used to collect and analyze performance data.

- Cloud Provider Monitoring Services: Utilize the built-in monitoring services provided by cloud providers, such as Amazon CloudWatch, Azure Monitor, and Google Cloud Monitoring. These services automatically collect a wide range of metrics for serverless functions, including invocation count, execution time, memory usage, error rates, and concurrent executions. They also provide dashboards and alerting capabilities.

- Custom Metrics and Dashboards: Define custom metrics to track application-specific performance indicators. Create custom dashboards to visualize these metrics and gain insights into the behavior of serverless functions. For example, if a serverless function processes user orders, you might create a custom metric to track the number of orders processed per minute.

- Profiling Tools: Use profiling tools to identify performance bottlenecks in serverless functions. Profiling tools can provide detailed information about function execution, including CPU usage, memory allocation, and function call graphs. For example, AWS X-Ray can be used to profile AWS Lambda functions.

- Performance Testing: Implement performance testing to evaluate the performance of serverless functions under various load conditions. Performance testing tools can simulate realistic user traffic and measure response times, throughput, and error rates. The results of performance tests can be used to identify performance bottlenecks and optimize the configuration of serverless functions. For instance, using a tool like Apache JMeter or Locust to simulate concurrent requests to a function and analyzing the response times and error rates to identify performance limitations.

- Alerting and Notifications: Configure alerts to be triggered based on predefined thresholds or anomalies in performance metrics. Use notifications to alert the appropriate teams when performance issues arise. For example, set up an alert in CloudWatch to notify the operations team if the average execution time of a Lambda function exceeds a certain threshold.

- Real-time Monitoring: Implement real-time monitoring to provide immediate visibility into the performance of serverless functions. Real-time monitoring dashboards display key metrics in real-time, allowing for quick identification of issues and rapid response. This could involve displaying the current number of invocations, the average execution time, and any errors that are occurring.

Security Considerations

Serverless CI/CD pipelines, while offering significant advantages in terms of scalability and cost-effectiveness, introduce unique security challenges. These challenges stem from the distributed nature of serverless architectures, the reliance on third-party services, and the increased attack surface area. Implementing robust security measures is crucial to protect the pipeline and the applications it deploys.

Security Challenges Specific to Serverless CI/CD Pipelines

The ephemeral nature of serverless functions, the reliance on external services, and the distributed architecture introduce several security challenges. These challenges necessitate a shift in security thinking, focusing on automation, least privilege, and continuous monitoring.

- Increased Attack Surface: Serverless CI/CD pipelines often integrate with numerous services, including code repositories, build tools, container registries, and deployment platforms. Each of these integrations presents a potential entry point for attackers.

- Dependency on Third-Party Services: Serverless architectures frequently rely on third-party services for code management, build processes, and deployments. Vulnerabilities in these services can compromise the entire pipeline. For example, a vulnerability in a container registry could allow attackers to inject malicious code into container images.

- Configuration Management Challenges: The configuration of serverless resources, such as function permissions and API gateways, can be complex and prone to errors. Misconfigurations can lead to unauthorized access or data breaches. For instance, a function granted excessive permissions could be exploited to access sensitive data.

- Ephemeral Nature of Resources: Serverless functions are typically short-lived, making it difficult to perform traditional security audits and forensics. Logs and audit trails are critical for incident response, but they must be carefully managed and retained.

- Automated Deployments and Code Execution: The automation inherent in CI/CD pipelines introduces risks. Compromised build processes or malicious code injections can lead to rapid and widespread deployment of vulnerabilities.

- Lack of Visibility: The distributed nature of serverless applications can make it challenging to gain complete visibility into the security posture of the entire pipeline. Monitoring and logging must be comprehensive and centralized.

Best Practices for Securing Serverless CI/CD Configurations

Implementing a robust security strategy involves a combination of proactive measures, automated checks, and continuous monitoring. These practices help to mitigate risks and ensure the integrity of the pipeline.

- Principle of Least Privilege: Grant only the minimum necessary permissions to service accounts, functions, and other resources. Avoid using broad permissions like “administrator” or “full access.” Instead, define specific roles and permissions based on the principle of least privilege.

- Automated Security Scanning: Integrate security scanning tools into the CI/CD pipeline to automatically identify vulnerabilities in code, dependencies, and container images. This includes static code analysis, dynamic application security testing (DAST), and software composition analysis (SCA).

- Infrastructure as Code (IaC): Use IaC tools like Terraform or CloudFormation to define and manage infrastructure configurations. This enables version control, automated validation, and consistent deployments. IaC also facilitates security audits and compliance checks.

- Secrets Management: Securely store and manage sensitive information, such as API keys, database credentials, and passwords. Use a dedicated secrets management service like AWS Secrets Manager, Azure Key Vault, or Google Cloud Secret Manager. Never hardcode secrets in code or configuration files.

- Input Validation and Sanitization: Implement robust input validation and sanitization to prevent injection attacks, such as SQL injection and cross-site scripting (XSS). Sanitize all user inputs before processing them.

- Regular Security Audits and Penetration Testing: Conduct regular security audits and penetration tests to identify vulnerabilities and assess the effectiveness of security controls. This includes both automated and manual testing.

- Comprehensive Logging and Monitoring: Implement comprehensive logging and monitoring to track all activities within the pipeline. Collect logs from all services and components, and use a centralized logging and monitoring system to analyze and correlate events. Establish alerts for suspicious activities and potential security breaches.

- Network Security: Secure network configurations. This involves using private networks where possible, implementing firewalls, and restricting access to resources based on the principle of least privilege.

- Container Image Security: If using containers, regularly scan container images for vulnerabilities. Use a container registry with built-in scanning capabilities and update base images frequently.

- Automated Patching and Updates: Automate the patching and updating of all software components, including operating systems, libraries, and dependencies. This reduces the risk of exploitation of known vulnerabilities.

Examples of Security Vulnerabilities and Mitigation Strategies

Understanding common vulnerabilities and implementing specific mitigation strategies is critical for securing serverless CI/CD pipelines.

- Vulnerability: Unsecured Secrets Management

- Description: Sensitive credentials like API keys are hardcoded in code or configuration files, or stored insecurely.

- Mitigation: Use a dedicated secrets management service. Never hardcode secrets. Rotate secrets regularly.

- Vulnerability: Insecure Dependencies

- Description: The application uses vulnerable versions of third-party libraries or dependencies.

- Mitigation: Use software composition analysis (SCA) tools to identify and manage dependencies. Regularly update dependencies to the latest secure versions. Implement a dependency firewall.

- Vulnerability: Excessive Permissions

- Description: Functions or service accounts are granted excessive permissions, allowing unauthorized access to resources.

- Mitigation: Apply the principle of least privilege. Define specific roles with only the necessary permissions. Regularly review and audit permissions.

- Vulnerability: Cross-Site Scripting (XSS)

- Description: User-supplied data is not properly sanitized, allowing attackers to inject malicious scripts into web pages.

- Mitigation: Implement input validation and output encoding. Sanitize all user inputs. Use Content Security Policy (CSP) to restrict the sources from which the browser can load resources.

- Vulnerability: SQL Injection

- Description: User-supplied data is not properly validated, allowing attackers to inject malicious SQL code.

- Mitigation: Use parameterized queries or prepared statements. Validate all user inputs. Implement a web application firewall (WAF).

- Vulnerability: Container Image Vulnerabilities

- Description: Container images contain known vulnerabilities.

- Mitigation: Regularly scan container images for vulnerabilities. Use a container registry with built-in scanning capabilities. Update base images frequently.

Epilogue

In conclusion, serverless CI/CD offers a compelling alternative to traditional pipeline architectures, providing significant advantages in terms of cost, scalability, and operational efficiency. By embracing serverless technologies, development teams can accelerate their release cycles, improve application resilience, and focus on delivering value to end-users. The adoption of serverless CI/CD is not merely a technological upgrade, but a strategic shift towards a more agile and responsive approach to software development.

Questions Often Asked

What is the primary advantage of serverless CI/CD over traditional CI/CD?

The primary advantage lies in its scalability and cost-effectiveness. Serverless CI/CD eliminates the need to provision and manage infrastructure, automatically scaling resources based on demand and only charging for the compute time consumed.

How does serverless CI/CD enhance security?

Serverless CI/CD can enhance security through reduced attack surface, automated security patching, and granular access control. The ephemeral nature of serverless functions and the ability to leverage managed security services further contribute to a more secure environment.

What are the key tools used in a serverless CI/CD pipeline?

Popular tools include AWS CodePipeline, Azure DevOps, Google Cloud Build, and third-party solutions like CircleCI and GitLab CI/CD, often integrating with code repositories like GitHub and deployment platforms like Terraform or Serverless Framework.

What are the main challenges when implementing serverless CI/CD?

Challenges include increased complexity in monitoring and debugging, vendor lock-in concerns, and the need for specialized skills in serverless technologies. Effective logging and monitoring are crucial to overcome these challenges.