What is Amazon Athena, and how does it support serverless querying? Athena is a serverless query service from Amazon Web Services (AWS) that lets users analyze data stored in various AWS services, primarily Amazon S3, using standard SQL. Because there is no infrastructure to provision or manage, it avoids much of the operational work associated with traditional data warehousing and offers a more accessible, often more cost-effective way to analyze data.

Athena reflects the evolving demands of big data analysis: it provides a scalable, on-demand way to query data. It was built to simplify data exploration, support business intelligence, and facilitate data-driven decision-making. Because its architecture is serverless, it makes sophisticated querying available to a broader audience, including users without database administration expertise.

Overview of Amazon Athena

Amazon Athena is a serverless query service that allows users to analyze data stored in Amazon Simple Storage Service (S3) using standard SQL. It eliminates the need for complex infrastructure management and provides a cost-effective solution for ad-hoc querying and data exploration. Athena’s design emphasizes ease of use, making it accessible to users with varying levels of technical expertise.

Core Purpose of Amazon Athena

The primary function of Amazon Athena is to provide a simple, on-demand query service. Users query data directly in S3 without setting up or managing servers or a data warehouse; AWS runs all the underlying infrastructure. This lets users extract insights from their datasets quickly.

Brief History and Evolution of Athena

Amazon Athena was launched to address the growing need for cost-effective and scalable data analysis solutions within the AWS ecosystem. The initial release focused on querying data stored in S3 using SQL, targeting use cases such as log analysis and business intelligence. Over time, Athena has evolved with the addition of features like:

- Support for various data formats, including CSV, JSON, Parquet, and ORC. This expansion increased the versatility of the service.

- Integration with AWS Glue, allowing users to define and manage data catalogs. This integration streamlined the process of querying data by providing a central metadata repository.

- Federated query capabilities, which enable users to query data from various sources, including on-premises databases and other cloud services. This broadened the scope of Athena’s data analysis capabilities.

- Performance optimizations, such as predicate pushdown and partition pruning, which improved query execution speed and efficiency.

These enhancements have transformed Athena from a basic querying tool into a powerful and versatile data analysis platform.

Main Benefits of Using Athena for Data Querying

Utilizing Amazon Athena provides several advantages for data analysis and management:

- Cost-Effectiveness: Athena follows a pay-per-query pricing model. Users are charged based on the amount of data scanned by their queries. This eliminates the costs associated with maintaining dedicated infrastructure. For instance, if a user only queries a dataset a few times a month, the cost is significantly lower compared to maintaining a continuously running data warehouse.

- Serverless Architecture: Athena is fully serverless, eliminating the need for infrastructure management. AWS handles all the underlying tasks, including server provisioning, scaling, and patching. This allows users to focus on data analysis rather than infrastructure maintenance.

- Scalability and Performance: Athena automatically scales to handle large datasets and complex queries. Its distributed architecture allows for parallel processing, ensuring fast query execution times. For example, when querying a large dataset, Athena can distribute the workload across multiple nodes, resulting in quicker results compared to single-server solutions.

- Ease of Use: Athena supports standard SQL, making it accessible to users with existing SQL knowledge. Its intuitive interface and integration with other AWS services simplify the data analysis process. The user-friendly design reduces the learning curve and enables quick data exploration.

- Integration with AWS Ecosystem: Athena seamlessly integrates with other AWS services, such as S3, Glue, and QuickSight. This integration allows users to build end-to-end data pipelines and create interactive dashboards. For example, users can easily store their data in S3, define a schema using Glue, query the data with Athena, and visualize the results in QuickSight.

These benefits contribute to Athena’s popularity as a versatile and efficient data querying service.

Serverless Architecture and Athena

Amazon Athena is inherently designed to function within a serverless computing model, offering significant advantages in data analysis and cost management. This section will delve into how Athena embraces serverless principles, contrasting it with traditional database approaches and highlighting the cost efficiencies it provides.

Athena’s Serverless Integration

Athena’s architecture is built upon a serverless foundation, meaning users do not need to provision, manage, or scale any underlying infrastructure. This contrasts sharply with traditional database systems that require significant administrative overhead, including server setup, configuration, patching, and capacity planning. Athena’s serverless nature manifests in several key aspects:

- No Server Management: Users interact with Athena through SQL queries without concern for the underlying hardware or software. AWS manages all of the query infrastructure, including compute resources and the query engine, while the data itself stays in the user's S3 buckets.

- Automatic Scaling: Athena automatically scales its resources to handle query workloads. It dynamically allocates the necessary compute power based on the complexity and size of the data being queried.

- Pay-per-Query Pricing: Users are charged only for the queries they run, based on the amount of data scanned. There are no upfront costs or charges for idle resources. This model ensures cost-effectiveness, especially for infrequent or variable query patterns.

Comparison with Traditional Database Approaches

Traditional database systems, such as those using relational database management systems (RDBMS) like MySQL or PostgreSQL, necessitate a different operational paradigm. These systems require dedicated server infrastructure, whether on-premises or in the cloud. The key differences between Athena and traditional database approaches are:

- Infrastructure Management: Traditional databases demand constant management, including server provisioning, configuration, and maintenance. Athena eliminates this burden by providing a managed service.

- Scalability: Scaling traditional databases often involves complex procedures like vertical scaling (increasing server resources) or horizontal scaling (adding more servers), potentially requiring downtime and expertise. Athena handles scaling automatically.

- Cost Model: Traditional databases often involve upfront costs for hardware, software licenses, and ongoing operational expenses. Athena’s pay-per-query model can be significantly more cost-effective, especially for infrequent or ad-hoc querying.

For example, consider a scenario where a company needs to analyze a large dataset infrequently, such as for quarterly financial reporting. Using a traditional database, the company would need to maintain a database server running 24/7, incurring costs even when the server is idle. With Athena, the company only pays for the queries executed during the reporting period, resulting in substantial cost savings.
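The trade-off in this scenario can be estimated with simple arithmetic. The sketch below compares monthly Athena spend with a fixed monthly server cost and finds the query volume at which the two are equal. All inputs are hypothetical; the default price of $5 per TB scanned is an assumption based on Athena's US East (N. Virginia) list price at the time of writing, and the sketch treats 1 TB as 1,024 GB and ignores per-query minimums, S3 request charges, and data transfer.

```python
"""Rough break-even sketch: Athena pay-per-query vs. an always-on server.

All inputs are hypothetical; per-query minimums and S3 charges are ignored.
"""

PRICE_PER_TB_USD = 5.00  # assumption: us-east-1 list price at time of writing
GB_PER_TB = 1024         # assumption: binary units


def athena_monthly_cost(queries_per_month: int, gb_scanned_per_query: float,
                        price_per_tb: float = PRICE_PER_TB_USD) -> float:
    """Monthly Athena spend for a fixed number of similar queries."""
    return queries_per_month * gb_scanned_per_query / GB_PER_TB * price_per_tb


def break_even_queries(server_monthly_cost: float, gb_scanned_per_query: float,
                       price_per_tb: float = PRICE_PER_TB_USD) -> float:
    """Queries per month at which Athena spend equals the fixed server cost."""
    cost_per_query = gb_scanned_per_query / GB_PER_TB * price_per_tb
    return server_monthly_cost / cost_per_query


# Hypothetical quarterly-reporting month: 20 queries scanning 100 GB each.
print(athena_monthly_cost(20, 100))  # 9.765625
# Hypothetical always-on server costing $500 per month.
print(break_even_queries(500, 100))  # 1024.0
```

With these invented inputs, the reporting month costs under $10 on Athena, and pay-per-query stays cheaper until the workload reaches roughly 1,024 such queries a month.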

Cost Efficiency Advantages

Athena’s serverless design translates directly into cost efficiencies. Under the pay-per-query pricing model, users are charged only for the data their queries scan. The cost efficiency advantages of Athena are:

- Elimination of Idle Resource Costs: Users are not charged for idle servers, unlike traditional database systems where servers incur costs regardless of usage.

- Cost-Effective for Infrequent Queries: Athena is particularly advantageous for infrequent or ad-hoc queries, where the cost of maintaining a dedicated database server would be prohibitive.

- Reduced Operational Overhead: The serverless nature of Athena reduces the need for database administrators and associated operational costs.

- Scalability and Resource Allocation: Athena scales compute automatically and bills each query for the data it scans, so users do not pay for over-provisioned or idle capacity.

The pay-per-query model can be expressed as:

$$\text{Cost} = \text{Data scanned (GB)} \times \text{Cost per GB}$$

Consider a real-world example: a marketing team wants to analyze clickstream data stored in Amazon S3. With Athena, they can query the data on demand, paying only for the data scanned during each query. If the queries are infrequent, the cost is minimal compared to maintaining a dedicated database instance. This cost-effective approach empowers data-driven decision-making without the burden of managing infrastructure.

Data Sources Supported by Athena

Amazon Athena’s versatility stems from its ability to query data residing in various locations and formats. This capability allows users to analyze data across different storage solutions without the need for complex ETL processes or data migration. Athena supports a wide array of data sources, offering flexibility and efficiency in data analysis workflows.

Connecting Athena to Different Data Sources

Connecting Athena to data sources typically involves configuring data catalogs and setting up access permissions. Athena uses the AWS Glue Data Catalog to store metadata about data sources, including schemas, table definitions, and data locations. The process varies slightly by source, but generally works as follows:

- Amazon S3: Data stored in Amazon S3 is the most common data source for Athena. Users point Athena at the S3 location containing the data and define the schema in the AWS Glue Data Catalog; Athena then uses this metadata to interpret and query the data.

- Other AWS Services: Athena can query data held in other AWS services, such as Amazon DynamoDB and Amazon CloudWatch Logs, through AWS-provided data source connectors that map the source's tables or log groups to tables Athena can query. Streaming data, such as records from Amazon Kinesis, is commonly delivered to S3 (for example, by Amazon Kinesis Data Firehose) and queried there.

- External Data Sources (via Connectors): Athena supports querying external data sources such as relational databases (e.g., MySQL, PostgreSQL) and other data stores through Athena data source connectors. A connector acts as a bridge between Athena and the external source: it translates the query into operations the source understands and returns the results. Connectors are deployed as AWS Lambda functions.

- Data Catalog Configuration: For data in S3, the catalog entry is essential. Users define tables, schemas, and data locations in the catalog so that Athena can query the data, which usually means specifying the file format, delimiter, and data type of each column.
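A catalog entry for S3 data boils down to a table name, column names and types, a storage format, and an S3 location. As a hedged illustration, the sketch below renders such an entry as the Hive-style `CREATE EXTERNAL TABLE` DDL that Athena accepts. The `TableDef` type, `to_ddl` helper, and bucket names are hypothetical, and only delimited text files are handled.

```python
from dataclasses import dataclass, field


@dataclass
class TableDef:
    """Hypothetical, simplified stand-in for a Data Catalog table entry."""
    database: str
    name: str
    columns: list[tuple[str, str]]   # (column name, Athena DDL type)
    location: str                    # S3 prefix holding the data files
    delimiter: str = ","
    partition_keys: list[tuple[str, str]] = field(default_factory=list)


def to_ddl(t: TableDef) -> str:
    """Render the entry as CREATE EXTERNAL TABLE DDL for delimited text files."""
    # Backticks quote identifiers in Athena DDL (useful for reserved words).
    cols = ",\n  ".join(f"`{n}` {typ}" for n, typ in t.columns)
    ddl = f"CREATE EXTERNAL TABLE {t.database}.{t.name} (\n  {cols}\n)"
    if t.partition_keys:
        parts = ", ".join(f"`{n}` {typ}" for n, typ in t.partition_keys)
        ddl += f"\nPARTITIONED BY ({parts})"
    ddl += (
        f"\nROW FORMAT DELIMITED FIELDS TERMINATED BY '{t.delimiter}'"
        f"\nSTORED AS TEXTFILE"
        f"\nLOCATION '{t.location}'"
    )
    return ddl + ";"


sales = TableDef("my_database", "sales",
                 [("order_id", "string"), ("amount", "double")],
                 "s3://my-bucket/sales/",
                 partition_keys=[("dt", "string")])
print(to_ddl(sales))
```

Generating DDL from a single definition like this keeps the column list, format, and location in one place; in practice, an AWS Glue crawler or the Athena console can create the same catalog entry.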

File Formats Supported by Athena

Athena’s ability to handle various file formats is a key factor in its flexibility: users can analyze data in the format it is already stored in, without converting it first. Athena supports the following file formats:

- CSV (Comma-Separated Values): A widely used format for storing tabular data. Athena supports various CSV configurations, including custom delimiters, quote characters, and escape characters.

- JSON (JavaScript Object Notation): A popular format for semi-structured data. Athena can query JSON data, including nested structures, by using functions to extract specific elements.

- Parquet: A columnar storage format optimized for analytical queries. Parquet’s columnar storage allows Athena to read only the columns a query needs, improving query performance.

- ORC (Optimized Row Columnar): Another columnar storage format, similar to Parquet. ORC offers features like data compression and predicate pushdown, further enhancing query performance.

- Avro: A row-oriented storage format commonly used in the Hadoop ecosystem. Athena can query Avro data, allowing users to leverage existing Avro datasets.

- Text files (e.g., TSV, Tab-Separated Values): Athena can also query plain text files with other delimiters, offering flexibility for various data storage scenarios.
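A rough way to see why columnar formats help: with a row-oriented text file, a query that needs 3 of 20 columns still reads every byte, while a columnar file lets the engine read only the chunks for those 3 columns. The sketch below models that difference with invented, equal per-column sizes. It ignores compression, file metadata, and row-group statistics, so the numbers are illustrative only.

```python
GB = 1024 ** 3


def bytes_read_row_format(column_bytes: dict[str, int], needed: set[str]) -> int:
    """Row-oriented text (CSV, JSON): whole rows are read, so every column counts."""
    return sum(column_bytes.values())  # `needed` is irrelevant: no column skipping


def bytes_read_columnar(column_bytes: dict[str, int], needed: set[str]) -> int:
    """Columnar (Parquet, ORC): only the chunks for the requested columns are read."""
    missing = needed - set(column_bytes)
    if missing:
        raise KeyError(f"unknown columns: {sorted(missing)}")
    return sum(column_bytes[c] for c in needed)


# Hypothetical table: 20 columns of 1 GB each; the query touches 3 of them.
table = {f"col{i}": 1 * GB for i in range(20)}
query_cols = {"col0", "col5", "col7"}
print(bytes_read_row_format(table, query_cols) // GB)  # 20
print(bytes_read_columnar(table, query_cols) // GB)    # 3
```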

Querying with Athena

Amazon Athena provides a powerful and flexible platform for querying data stored in various data sources. Understanding the query language and leveraging advanced techniques are crucial for extracting meaningful insights efficiently. This section delves into the SQL dialect employed by Athena, presents basic and advanced querying examples, and highlights optimization strategies.

SQL Dialect in Athena

Athena uses a SQL dialect based on Presto, an open-source distributed SQL query engine; Athena engine version 3 is based on Trino, a fork of Presto. The dialect largely follows ANSI SQL and supports a wide range of SQL functions and operators, with extensions tailored to data analysis and to querying data stored in formats like CSV, JSON, Parquet, and ORC. The Athena SQL dialect supports:

- Standard SQL functions: Includes functions for string manipulation (e.g., `SUBSTR`, `CONCAT`), date and time operations (e.g., `DATE_TRUNC`, `DATE_ADD`), mathematical calculations (e.g., `ABS`, `ROUND`), and aggregation (e.g., `SUM`, `AVG`).

- Data type support: Supports a broad spectrum of data types, including `INT`, `BIGINT`, `VARCHAR`, `BOOLEAN`, `DATE`, `TIMESTAMP`, and `ARRAY`.

- Window functions: Enables advanced analytical capabilities such as calculating running totals, rankings, and moving averages.

- JSON functions: Allows for the extraction and manipulation of data stored in JSON format.

- Array functions: Provides functionality to work with array data types.

- User-defined functions (UDFs): Athena lets users write custom functions in Java with the Athena Query Federation SDK and deploy them as AWS Lambda functions, extending its capabilities.

Basic SQL Queries

Basic SQL queries form the foundation for data retrieval in Athena. These queries select data from tables, filter results, and perform basic aggregations. Understanding these fundamentals is essential for more complex data analysis. Here are some examples:

- Selecting all columns from a table:

This query retrieves all data from a specified table.

```sql
SELECT * FROM my_database.my_table;
```

- Selecting specific columns:

This query retrieves only the specified columns.

```sql
SELECT column1, column2 FROM my_database.my_table;
```

- Filtering data with a `WHERE` clause:

This query filters data based on a specified condition.

```sql
SELECT * FROM my_database.my_table WHERE column1 = 'value';
```

- Ordering results with an `ORDER BY` clause:

This query sorts the results based on one or more columns.

```sql
SELECT * FROM my_database.my_table ORDER BY column1 DESC;
```

- Grouping and aggregating data with `GROUP BY` and aggregate functions:

This query groups data and performs calculations (e.g., `SUM`, `AVG`, `COUNT`) on each group.

```sql
SELECT column1, COUNT(*) FROM my_database.my_table GROUP BY column1;
```

These examples showcase the core building blocks of SQL queries in Athena, enabling users to extract and manipulate data effectively.
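Outside the console, the same queries are usually submitted through the Athena API. The sketch below assumes the boto3 Athena client methods `start_query_execution`, `get_query_execution`, and `get_query_results`, plus an S3 results location you own; the bucket name, the `run_athena_query` helper, and the polling interval are placeholders. Only the first page of results is returned (pagination is omitted), and the client is passed in so the function can be exercised with a stub.

```python
import time


def run_athena_query(client, sql: str, database: str, output_location: str,
                     poll_seconds: float = 1.0) -> list[list[str]]:
    """Submit a query, wait for a terminal state, and return the first page of rows."""
    qid = client.start_query_execution(
        QueryString=sql,
        QueryExecutionContext={"Database": database},
        ResultConfiguration={"OutputLocation": output_location},
    )["QueryExecutionId"]

    while True:
        status = client.get_query_execution(QueryExecutionId=qid)["QueryExecution"]["Status"]
        state = status["State"]  # QUEUED | RUNNING | SUCCEEDED | FAILED | CANCELLED
        if state == "SUCCEEDED":
            break
        if state in ("FAILED", "CANCELLED"):
            raise RuntimeError(f"query {qid} {state}: {status.get('StateChangeReason', '')}")
        time.sleep(poll_seconds)

    # First page only; for SELECT queries the first row holds the column names.
    rows = client.get_query_results(QueryExecutionId=qid)["ResultSet"]["Rows"]
    # Each cell looks like {"VarCharValue": "..."}; NULL cells omit the key.
    return [[cell.get("VarCharValue", "") for cell in row["Data"]] for row in rows]


# Usage against a real account (requires boto3 and AWS credentials):
# import boto3
# rows = run_athena_query(
#     boto3.client("athena"),
#     "SELECT column1, COUNT(*) FROM my_database.my_table GROUP BY column1",
#     "my_database",
#     "s3://my-athena-results-bucket/",
# )
```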

Advanced Querying Techniques

Beyond basic SQL, Athena offers advanced techniques for optimizing queries and handling large datasets. These techniques include partitioning and data optimization strategies, which are critical for performance and cost efficiency.

- Partitioning:

Partitioning involves dividing a table into logical segments based on the values of one or more columns (partition keys). This enables Athena to selectively scan only the relevant partitions during a query, significantly reducing the amount of data processed and improving query performance. Common partition keys include date, region, or customer ID.

For example, consider a table of web server logs partitioned by date. A query filtering for logs from a specific date would only need to scan the partition corresponding to that date, dramatically reducing the query time compared to scanning the entire table.

To create a partitioned table:

```sql
CREATE EXTERNAL TABLE my_database.my_table (
  column1 STRING,
  column2 INT
)
PARTITIONED BY (date_partition DATE)
ROW FORMAT DELIMITED FIELDS TERMINATED BY ','
STORED AS TEXTFILE
LOCATION 's3://my-bucket/my-table/';
```

To add partitions:

```sql
ALTER TABLE my_database.my_table ADD PARTITION (date_partition = '2023-01-01')
LOCATION 's3://my-bucket/my-table/date_partition=2023-01-01/';
```

- Data Optimization:

Data optimization involves techniques to improve query performance and reduce costs.

- Data Format: Using columnar storage formats like Parquet or ORC is recommended. These formats store data column-wise, allowing Athena to read only the columns needed for a query, reducing I/O and improving performance.

For example, if you have a table with 20 columns and a query only needs 3 columns, using Parquet format allows Athena to read only those 3 columns, significantly reducing the amount of data scanned.

- Compression: Compressing data using algorithms like GZIP or Snappy reduces storage space and can improve query performance by reducing the amount of data that needs to be read from S3.

- Data Filtering: Efficient use of the `WHERE` clause to filter data early in the query process minimizes the data scanned.

- Query Optimization: Careful query design, including using the appropriate join types and avoiding unnecessary calculations, can significantly impact performance.


These advanced techniques enable users to effectively query large datasets, optimize performance, and manage costs within Athena.
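To make partition pruning concrete, the sketch below models a table laid out under Hive-style `date_partition=YYYY-MM-DD/` prefixes, as in the `ALTER TABLE ... ADD PARTITION` example, and totals the bytes a date-range filter would read with and without pruning. The object keys and sizes are invented; the helper names are hypothetical. Athena performs this pruning itself, using the partition metadata in the catalog.

```python
from datetime import date

MB = 1024 ** 2


def partition_date(key: str) -> date:
    """Extract the partition value from a key like '.../date_partition=2023-01-01/part-0.csv'."""
    for segment in key.split("/"):
        if segment.startswith("date_partition="):
            return date.fromisoformat(segment.split("=", 1)[1])
    raise ValueError(f"no date_partition segment in {key!r}")


def bytes_scanned(objects: dict[str, int], start: date, end: date,
                  prune: bool = True) -> int:
    """Bytes read for WHERE date_partition BETWEEN start AND end.

    Without pruning every object is read; with pruning only objects whose
    partition value falls inside [start, end] are read.
    """
    if not prune:
        return sum(objects.values())
    return sum(size for key, size in objects.items()
               if start <= partition_date(key) <= end)


# Hypothetical layout: one 100 MB file per day for January 2023.
objects = {f"my-table/date_partition=2023-01-{d:02d}/part-0.csv": 100 * MB
           for d in range(1, 32)}
day = date(2023, 1, 1)
print(bytes_scanned(objects, day, day) // MB)               # 100
print(bytes_scanned(objects, day, day, prune=False) // MB)  # 3100
```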

Cost Considerations and Optimization

Understanding the cost implications of utilizing Amazon Athena is crucial for effective resource management and budget planning. Athena’s pricing model and optimization strategies are key to controlling expenses while leveraging its serverless querying capabilities. Careful consideration of query design, data formats, and data partitioning can significantly impact the overall cost.

Pricing Model of Athena

Athena operates on a pay-per-query basis. This means that users are charged for the amount of data scanned by each query. There are no upfront costs, and users only pay for the queries they run. This model aligns with the serverless architecture, offering flexibility and scalability without requiring infrastructure management.

Pricing is based on the amount of data each query scans and is quoted per terabyte (TB); each query is billed for at least 10 MB, rounded up to the nearest megabyte. The price per TB varies by the AWS Region where the Athena queries run. Compressing data, converting it to columnar formats, and partitioning it all reduce the data scanned and therefore the cost.

- Data Scanned: This is the primary factor influencing the cost. Athena scans data from the underlying data sources (e.g., S3) to execute queries.

- AWS Region: The price per unit of data scanned varies by AWS Region.

- Data Formats: Compression (e.g., GZIP, Snappy) and columnar formats (e.g., ORC, Parquet) can dramatically reduce the amount of data scanned, lowering costs.

- Query Execution Time: Execution time is not billed directly under per-query pricing, but the changes that make queries faster (chiefly reading less data) usually lower costs as well.

- Data Transfer: Data transfer charges may apply if the data is stored in a different AWS Region from the one where the Athena query runs.

The general formula for calculating Athena costs is:

$$\text{Cost} = \text{Data scanned (GB)} \times \text{Cost per GB in the AWS Region}$$

For example, Athena charges $5 per TB scanned in the US East (N. Virginia) Region, or roughly $0.005 per GB, so a query that scans 10 GB of data there costs about $0.05.
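Athena's published billing for SQL queries charges per TB scanned, bills at least 10 MB per query, and rounds each query up to the nearest megabyte. The sketch below encodes those rules; the default of $5 per TB is an assumption based on the US East (N. Virginia) price at the time of writing, and the sketch treats 1 TB as 1,048,576 MB. S3 request and data transfer charges are not included.

```python
MB = 1024 ** 2
MIN_BILLED_MB = 10        # minimum billed per query
MB_PER_TB = 1024 * 1024   # assumption: binary units (1 TB = 1,048,576 MB)


def athena_query_cost(bytes_scanned: int, price_per_tb: float = 5.0) -> float:
    """Approximate USD cost of one Athena SQL query under per-TB-scanned pricing."""
    billed_mb = max(MIN_BILLED_MB, (bytes_scanned + MB - 1) // MB)  # round up to whole MB
    return billed_mb / MB_PER_TB * price_per_tb


GB = 1024 ** 3
print(round(athena_query_cost(10 * GB), 4))  # 0.0488
print(athena_query_cost(1024 ** 4))          # 5.0
print(athena_query_cost(1))                  # billed as the 10 MB minimum
```

The minimum matters mostly for many tiny queries: a query that reads a single byte is billed the same as one that reads 10 MB.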

Comparing Costs with Other Data Warehousing Solutions

Athena’s cost-effectiveness can be assessed by comparing it with traditional data warehousing solutions like Amazon Redshift or other cloud-based data warehouses. The choice between these solutions depends on specific requirements, including data volume, query complexity, and the need for real-time analytics.

- Amazon Redshift: Redshift is a fully managed, petabyte-scale data warehouse service that offers high performance for complex analytical queries. However, a provisioned Redshift cluster is billed for as long as it runs, whether or not it is executing queries, and it requires capacity planning and ongoing management. Costs are driven by the size of the cluster, storage, and data transfer. Redshift is typically better suited to workloads requiring complex ETL processes and very large datasets with frequent, complex queries.

- Other Cloud Data Warehouses: Like Redshift, other cloud-based data warehouses (e.g., Snowflake, Google BigQuery) offer robust data warehousing capabilities with varying pricing models, often with separate charges for storage, compute, and data transfer. Depending on the product, they may require more setup and configuration than Athena.

- Athena: Athena’s serverless, pay-per-query model suits ad-hoc queries, exploratory data analysis, and workloads with infrequent or unpredictable query patterns. It eliminates the need for infrastructure management, reducing operational overhead. Because cost is tied primarily to data scanned, it is cost-effective for small to medium datasets and occasional querying.

In general, Athena is often more cost-effective for:

- Small to medium datasets.

- Infrequent or ad-hoc queries.

- Exploratory data analysis.

- Situations where the user wants to avoid managing infrastructure.

Redshift or other data warehouses may be more cost-effective for:

- Very large datasets (petabytes).

- Complex, frequently executed queries.

- Real-time analytics requirements.

- Workloads that require complex ETL processes.

Methods for Optimizing Queries to Reduce Costs

Several strategies can be employed to optimize Athena queries and minimize costs. These techniques focus on reducing the amount of data scanned, improving query performance, and leveraging Athena’s features effectively.

- Data Partitioning: Partitioning data involves organizing data into logical segments based on specific criteria (e.g., date, region, product category). When querying partitioned data, Athena can efficiently filter data by only scanning the relevant partitions, drastically reducing the amount of data processed. For instance, if a dataset is partitioned by date, a query for a specific date range will only scan the data for those dates.

To illustrate this, consider a dataset of sales transactions stored in Amazon S3. If the data is partitioned by date, a query for sales on January 1, 2023, would only scan the data in the partition for that date, significantly reducing the data scanned compared to a query that scans the entire dataset.

- Data Compression: Compressing data with codecs such as GZIP or Snappy, or storing it in formats such as ORC or Parquet (which typically compress data internally), can significantly reduce the storage size and the amount of data Athena needs to scan. Parquet and ORC are particularly efficient columnar storage formats optimized for analytical queries.

For example, compressing a CSV dataset with GZIP reduces its storage size and the data scanned by Athena, resulting in cost savings. Converting the data to Parquet format usually offers even greater compression and performance benefits.

- Query Optimization: Writing efficient SQL queries is crucial. This involves using the correct data types, selecting only the columns you need, and using `WHERE` clauses to filter data early in the query.

For example, instead of `SELECT * FROM table`, list only the needed columns in the `SELECT` clause. Filtering on specific criteria (e.g., `WHERE date = '2023-01-01'`) also reduces the data scanned, particularly when the filter column is a partition key or the data is stored in a columnar format.

- Data Format Selection: Choosing the appropriate data format is essential. Columnar formats like Parquet and ORC are optimized for analytical queries, enabling Athena to read only the required columns and improve query performance.

If the data is stored in a row-based format like CSV, Athena has to scan the entire row to retrieve specific columns. However, if the data is stored in a columnar format like Parquet, Athena can read only the relevant columns, significantly reducing the amount of data scanned.

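- Use of `LIMIT` Clause: When exploring data or retrieving a subset of results, a `LIMIT` clause can reduce the amount of data scanned, because Athena can stop reading once it has returned enough rows. This helps mainly for simple queries; queries that sort or aggregate over the whole table still read all of the relevant data.

For instance, previewing a table with `SELECT * FROM table LIMIT 10` typically reads far less data than scanning the entire table, saving costs.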

- Caching: Athena's query result reuse feature lets a repeated query return results stored from a previous run instead of scanning the underlying data again. Reuse must be enabled, with a maximum age for the stored results, and it applies only when the query matches a previous query.

If a query is run repeatedly with result reuse enabled, later executions within the maximum age retrieve the earlier results, avoiding a new scan of the source data and improving performance.

- Cost Allocation Tags: Athena workgroups can be tagged, and activating those tags as cost allocation tags lets you track and categorize Athena costs, showing which teams or projects consume the most resources.

This information helps in identifying optimization opportunities and controlling costs.

For example, by running each project's or team's queries in its own tagged workgroup, you can analyze the cost associated with each tag and identify areas where costs can be reduced.
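The compression advice above is easy to check locally before changing how data lands in S3. The sketch below gzips a synthetic, repetitive CSV payload with Python's standard `gzip` module and compares sizes. The data and the `synthetic_csv` helper are invented; real compression ratios depend heavily on the data. Since Athena bills for the bytes it reads from S3, a smaller compressed object means less data scanned.

```python
import csv
import gzip
import io


def synthetic_csv(rows: int) -> bytes:
    """Build a hypothetical clickstream-like CSV; repetitive values compress well."""
    buf = io.StringIO()
    writer = csv.writer(buf)
    writer.writerow(["event_date", "page", "status"])
    for i in range(rows):
        writer.writerow(["2023-01-01", f"/products/{i % 50}", "200"])
    return buf.getvalue().encode("utf-8")


raw = synthetic_csv(100_000)
compressed = gzip.compress(raw)
ratio = len(raw) / len(compressed)
print(f"raw={len(raw):,} B  gzip={len(compressed):,} B  ratio~{ratio:.1f}x")
```

GZIP-compressed text files cannot be split across readers, so many large GZIP files may parallelize less well than the same data written as Parquet or ORC.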

Use Cases for Athena

Amazon Athena’s serverless architecture and SQL-based querying capabilities make it suitable for a diverse range of data analysis tasks. Its flexibility allows it to be applied across various industries and use cases, simplifying data access and analysis without the need for complex infrastructure management. This section explores specific real-world applications of Athena, demonstrating its versatility and effectiveness.

Data Analysis and Business Intelligence

Athena empowers users to perform ad-hoc analysis and create business intelligence dashboards by querying data stored in various formats within data lakes or other data repositories. This eliminates the need to move or transform data before analysis, reducing time to insights.

Log Analysis

Athena excels in analyzing log data generated by applications, servers, and other systems. It allows users to extract valuable insights from these logs, such as identifying performance bottlenecks, security threats, and user behavior patterns. This capability is crucial for monitoring, troubleshooting, and optimizing system operations.

To illustrate the process, consider a web server generating access logs in the Common Log Format (CLF). These logs, typically stored in Amazon S3, can be directly queried by Athena. A user could write SQL queries to:

- Identify the most frequently accessed pages.

- Determine the geographic distribution of users based on their IP addresses.

- Detect potential security threats by analyzing HTTP status codes and user agents.

The efficiency of Athena in log analysis stems from its ability to handle large datasets and its integration with other AWS services like CloudWatch Logs and S3.
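Queries like "most frequently accessed pages" or "status code counts" come down to parsing each Common Log Format line and aggregating. Prototyping the parsing locally can help before writing the Athena table definition, where a similar pattern would typically go into a RegexSerDe-based table. The sketch below uses a regular expression for CLF and `collections.Counter`; the sample lines and the `summarize` helper are invented for illustration.

```python
import re
from collections import Counter

# CLF: host ident authuser [timestamp] "method path protocol" status bytes
CLF = re.compile(
    r'(?P<host>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+)[^"]*" (?P<status>\d{3}) (?P<size>\S+)'
)


def summarize(lines):
    """Return (top 3 pages, status-code counts) for the lines that parse as CLF."""
    pages, statuses = Counter(), Counter()
    for line in lines:
        m = CLF.match(line)
        if m is None:
            continue  # skip malformed lines instead of failing the whole batch
        pages[m["path"]] += 1
        statuses[m["status"]] += 1
    return pages.most_common(3), statuses


sample = [
    '203.0.113.5 - - [01/Jan/2023:10:00:00 +0000] "GET /index.html HTTP/1.1" 200 512',
    '198.51.100.7 - - [01/Jan/2023:10:00:01 +0000] "GET /about.html HTTP/1.1" 200 256',
    '203.0.113.5 - - [01/Jan/2023:10:00:02 +0000] "GET /index.html HTTP/1.1" 304 -',
    '192.0.2.9 - - [01/Jan/2023:10:00:03 +0000] "POST /login HTTP/1.1" 401 128',
]
top, status_counts = summarize(sample)
print(top)            # '/index.html' is the most requested page
print(status_counts)  # 401 responses can hint at failed login attempts
```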

Use Case Examples

Below is a table outlining diverse use cases for Athena, demonstrating its adaptability across different domains.

| Use Case | Description | Data Sources | Benefits |
|---|---|---|---|
| Website Analytics | Analyzing web server logs to track website traffic, user behavior, and performance metrics. | S3 (web server logs in formats like CLF or JSON), CloudFront logs | Identifies traffic patterns, optimizes content, and enhances user experience. |
| Application Performance Monitoring (APM) | Monitoring application logs to identify performance bottlenecks, errors, and other issues. | S3 (application logs), CloudWatch Logs, Kinesis Data Firehose | Faster troubleshooting, improved application stability, and optimized resource utilization. |
| Security Analysis | Analyzing security logs to detect and respond to security threats, such as unauthorized access attempts and malicious activities. | S3 (security logs from various sources), VPC Flow Logs, CloudTrail logs | Improved security posture, faster incident response, and reduced risk of data breaches. |
| Business Intelligence Dashboards | Creating interactive dashboards and reports to visualize key business metrics and trends. | S3 (data in various formats), databases (using federated queries) | Data-driven decision making, improved business performance, and better understanding of customer behavior. |

Security and Access Control in Athena

Securing data and controlling access are paramount concerns when utilizing a service like Amazon Athena, especially given its serverless nature and reliance on external data sources. Implementing robust security measures is crucial to protect sensitive information, maintain data integrity, and comply with regulatory requirements. This section will delve into the security features offered by Athena, access management using AWS Identity and Access Management (IAM), and best practices for securing data within the Athena environment.

Security Features Offered by Athena

Athena provides several built-in security features designed to protect data and control access. These features are integral to the service’s design and are essential for maintaining a secure querying environment.

- Encryption: Athena can query data in Amazon S3 that is encrypted at rest, including data encrypted with AWS Key Management Service (KMS) keys, and it can encrypt the query results it writes to S3. Users can choose AWS managed KMS keys or customer managed KMS keys, offering flexibility in managing encryption keys and access control. Customer managed keys provide more granular control over key rotation and access policies.

- Network Isolation: Athena queries run within the AWS infrastructure. Users can connect to Athena from a Virtual Private Cloud (VPC) through an interface VPC endpoint (AWS PrivateLink), and can restrict access to the S3 buckets holding the data with bucket policies and S3 VPC endpoints. This keeps query traffic off the public internet, reducing the attack surface.

- Data Masking and Filtering: While Athena doesn’t offer built-in data masking or filtering capabilities directly within the query execution, it integrates with other AWS services and allows users to implement these features. For example, users can pre-process data in S3 with services like AWS Glue to mask sensitive information before Athena queries are run.

- Audit Logging: Athena integrates with AWS CloudTrail, providing detailed logs of all API calls made to Athena. These logs record information such as the user, the API call, the source IP address, and the request parameters. This audit trail is crucial for monitoring activities, identifying potential security threats, and meeting compliance requirements.
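The pre-processing approach to data masking can be as simple as replacing a sensitive column with a keyed hash before the file is written to S3, so analysts can still count and join on the column without seeing raw values. The sketch below assumes a CSV with a hypothetical `email` column and a secret key managed outside the code (for example, in a secrets store). It illustrates the idea with Python's standard `hmac` and `csv` modules; it is not an AWS Glue job.

```python
import csv
import hashlib
import hmac
import io


def mask_column(csv_text: str, column: str, key: bytes) -> str:
    """Replace `column` values with an HMAC-SHA256 hex digest (a stable pseudonym)."""
    reader = csv.DictReader(io.StringIO(csv_text))
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=reader.fieldnames, lineterminator="\n")
    writer.writeheader()
    for row in reader:
        # Same input + same key -> same digest, so joins and counts still work.
        row[column] = hmac.new(key, row[column].encode("utf-8"), hashlib.sha256).hexdigest()
        writer.writerow(row)
    return out.getvalue()


data = "user_id,email\n1,alice@example.com\n2,bob@example.com\n"
masked = mask_column(data, "email", key=b"demo-key-do-not-use")  # placeholder key
print(masked)
```

A keyed hash (rather than a plain SHA-256) makes it harder to recover values by hashing guessed emails, as long as the key stays secret.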

Managing Access Control Using IAM

AWS IAM is the cornerstone of access control in Athena. IAM policies define the permissions granted to users, groups, or roles, specifying which Athena actions they can perform and on which resources.

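- IAM Policies: IAM policies are JSON documents that define permissions and can be attached to IAM users, groups, or roles. For Athena, policies typically grant actions such as `athena:StartQueryExecution`, `athena:GetQueryExecution`, `athena:ListWorkGroups`, and `athena:GetWorkGroup`. Because Athena looks up tables in the AWS Glue Data Catalog and reads data from S3 on the caller's behalf, a working policy usually also needs the corresponding `glue:` and `s3:` permissions.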

- IAM Roles: IAM roles are a more secure way to grant access to Athena. Users or services assume a role and receive temporary credentials carrying the permissions in the role's policy, which reduces the risk of long-term credentials being compromised. Because Athena reads S3 data with the permissions of the principal that runs the query, a role can, for example, grant read access to one specific S3 bucket for the queries run under it.

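- Workgroup Access Control: Access to a specific workgroup is controlled with identity-based IAM policies that name the workgroup's ARN (`arn:aws:athena:region:account-id:workgroup/workgroup-name`) in their `Resource` element. This makes it possible to restrict which IAM principals (users, roles, etc.) can use a particular workgroup, which is helpful for isolating different teams or projects.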

- Examples of IAM Policies:

  - Allowing a user to query Athena: a policy granting `athena:StartQueryExecution`, `athena:GetQueryExecution`, and `athena:GetQueryResults` on a specific workgroup.

  - Granting access to an S3 bucket: a role policy granting `s3:GetObject` and `s3:ListBucket` on a specific bucket, so that Athena queries run under that role can read its data.
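Policies like these can be combined into a single identity-based policy. The sketch below builds one as a Python dictionary. The function name, Region, account ID, workgroup, and bucket names are hypothetical placeholders. The Glue and results-bucket statements reflect permissions Athena commonly needs rather than a complete list; KMS permissions for encrypted data, for instance, are omitted.

```python
import json
from typing import Any, Dict


def athena_query_policy(region: str, account_id: str, workgroup: str,
                        data_bucket: str, results_bucket: str) -> Dict[str, Any]:
    """Build an identity-based policy for querying through one Athena workgroup."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Submit queries and fetch their status and results in one workgroup only.
                "Sid": "AthenaWorkgroupQueries",
                "Effect": "Allow",
                "Action": [
                    "athena:StartQueryExecution",
                    "athena:GetQueryExecution",
                    "athena:GetQueryResults",
                ],
                "Resource": f"arn:aws:athena:{region}:{account_id}:workgroup/{workgroup}",
            },
            {
                # Read table definitions from the Glue Data Catalog.
                "Sid": "GlueCatalogRead",
                "Effect": "Allow",
                "Action": ["glue:GetDatabase", "glue:GetTable", "glue:GetPartitions"],
                "Resource": [
                    f"arn:aws:glue:{region}:{account_id}:catalog",
                    f"arn:aws:glue:{region}:{account_id}:database/*",
                    f"arn:aws:glue:{region}:{account_id}:table/*/*",
                ],
            },
            {
                # List and read the source data.
                "Sid": "ReadSourceData",
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:ListBucket"],
                "Resource": [f"arn:aws:s3:::{data_bucket}", f"arn:aws:s3:::{data_bucket}/*"],
            },
            {
                # Write query results to, and read them back from, the output location.
                "Sid": "QueryResults",
                "Effect": "Allow",
                "Action": ["s3:GetBucketLocation", "s3:GetObject",
                           "s3:ListBucket", "s3:PutObject"],
                "Resource": [f"arn:aws:s3:::{results_bucket}",
                             f"arn:aws:s3:::{results_bucket}/*"],
            },
        ],
    }


if __name__ == "__main__":
    policy = athena_query_policy("us-east-1", "111122223333", "analytics",
                                 "example-data-bucket", "example-results-bucket")
    print(json.dumps(policy, indent=2))
```

Replacing `database/*` and `table/*/*` with specific database and table names tightens the policy further, in line with the least-privilege principle.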

Best Practices for Securing Data Stored in Athena

Implementing best practices is essential for ensuring the security of data used with Athena. These practices address various aspects, from data storage to query execution.

- Least Privilege Principle: Grant users and roles only the necessary permissions to perform their tasks. Avoid giving broad, overly permissive policies. This reduces the potential impact of a security breach.

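- Encryption: Encrypt data at rest in S3, using KMS keys (SSE-KMS) when you need control over key policies and an audit trail of key usage, and enable automatic rotation for customer managed keys. Also encrypt Athena query results, since they can contain the same sensitive data as the source tables.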

- Network Security: Use an interface VPC endpoint for Athena so that clients within a VPC reach Athena privately rather than over the public internet. Configure the endpoint's security groups to allow traffic only from the sources that need it.

- Data Governance: Implement data governance policies to manage data access and usage. This includes defining data access controls, data masking, and data retention policies. Use AWS Lake Formation to manage data access and security.

- Audit and Monitoring: Regularly review CloudTrail logs to monitor Athena API calls and identify any suspicious activity. Set up alerts for critical events, such as unauthorized access attempts. Use AWS Security Hub to centrally manage security alerts and compliance checks.

- Data Masking and Anonymization: Before storing data in S3 for Athena queries, consider masking or anonymizing sensitive data. This reduces the risk of data breaches and protects privacy. Use services like AWS Glue to implement data masking.

- Regularly Review and Update Policies: Periodically review IAM policies to ensure they align with current security requirements. Update policies as needed to reflect changes in user roles, data access requirements, and security best practices.
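Part of a periodic policy review can be automated. The sketch below is a hypothetical helper (`find_broad_statements` is not an AWS API) that flags `Allow` statements using a bare `*` resource or a wildcard action such as `athena:*`. It deliberately ignores `NotAction`, `NotResource`, and conditions, so it complements rather than replaces tools such as IAM Access Analyzer policy validation.

```python
from typing import Any, Dict, List


def _as_list(value: Any) -> List[str]:
    """IAM allows either a single string or a list for Action and Resource."""
    if value is None:
        return []
    return [value] if isinstance(value, str) else list(value)


def find_broad_statements(policy: Dict[str, Any]) -> List[str]:
    """Return the Sid (or position) of Allow statements with wildcard actions or resources."""
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # a lone statement may be an object, not a list
        statements = [statements]
    findings: List[str] = []
    for index, statement in enumerate(statements):
        if statement.get("Effect") != "Allow":
            continue  # broad Deny statements are not a least-privilege problem
        actions = _as_list(statement.get("Action"))
        resources = _as_list(statement.get("Resource"))
        broad_action = any(a == "*" or a.endswith(":*") for a in actions)
        broad_resource = "*" in resources
        if broad_action or broad_resource:
            findings.append(statement.get("Sid", f"statement[{index}]"))
    return findings


if __name__ == "__main__":
    policy = {"Version": "2012-10-17", "Statement": [
        {"Sid": "TooBroad", "Effect": "Allow", "Action": "athena:*", "Resource": "*"},
    ]}
    print(find_broad_statements(policy))
```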

Integrating Athena with Other AWS Services

Amazon Athena’s power is amplified through its seamless integration with other AWS services, fostering a comprehensive data analysis ecosystem. This integration enables users to build sophisticated data pipelines, automate workflows, and leverage the strengths of various AWS tools for a unified data management and analytics experience. This section explores the key integrations and their implications for efficient data processing.

Integration with Amazon S3 and AWS Glue

Athena’s core functionality hinges on its interaction with Amazon S3 and AWS Glue. Understanding this relationship is crucial for effective data querying and management.

Athena relies on Amazon S3 for data storage. Data residing in S3 buckets serves as the source for Athena queries. When a query is executed, Athena reads the data directly from S3, so no data loading or ETL (Extract, Transform, Load) step is required before analysis. Each table definition specifies the S3 location of the data and its format (e.g., CSV, JSON, Parquet), and Athena reads the files accordingly.

This direct interaction keeps data preparation to a minimum and is cost-effective, since users pay per query based on the amount of data each query scans.

AWS Glue, on the other hand, provides a managed ETL service and a central metadata repository known as the Data Catalog. This is a crucial component for Athena’s functionality. The Glue Data Catalog stores metadata about the data stored in S3, including table schemas, data types, and partitioning information. Athena uses this metadata to understand the structure of the data, enabling users to query the data using standard SQL.

Without crawlers populating the Data Catalog, users would have to define the schema for each dataset manually with DDL statements, which is time-consuming and prone to errors.
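For comparison, a manually defined schema is a `CREATE EXTERNAL TABLE` statement run in Athena, which records the table in the Data Catalog. The sketch below renders one from a column list. The function name and the database, table, and bucket names are hypothetical. Partitions under the table's location still have to be registered afterwards, for example with `MSCK REPAIR TABLE` or `ALTER TABLE ADD PARTITION`.

```python
from typing import List, Tuple

Column = Tuple[str, str]  # (column name, Hive DDL type such as "string" or "int")


def create_external_table_ddl(database: str, table: str, columns: List[Column],
                              partition_columns: List[Column], location: str,
                              stored_as: str = "PARQUET") -> str:
    """Render a CREATE EXTERNAL TABLE statement for data already stored in S3."""
    if not (location.startswith("s3://") and location.endswith("/")):
        raise ValueError("location must be an s3:// prefix ending in '/'")

    body = ",\n  ".join(f"`{name}` {dtype}" for name, dtype in columns)
    ddl = f"CREATE EXTERNAL TABLE IF NOT EXISTS `{database}`.`{table}` (\n  {body}\n)"
    if partition_columns:
        # Partition columns are declared separately and must not repeat regular columns.
        parts = ", ".join(f"`{name}` {dtype}" for name, dtype in partition_columns)
        ddl += f"\nPARTITIONED BY ({parts})"
    ddl += f"\nSTORED AS {stored_as}\nLOCATION '{location}'"
    return ddl


if __name__ == "__main__":
    print(create_external_table_ddl(
        "weblogs", "requests",
        [("request_id", "string"), ("status", "int")],
        [("dt", "string")],
        "s3://example-bucket/requests/",
    ))
```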

The integration allows users to:

- Discover Data: Use AWS Glue crawlers to automatically scan data in S3, infer schemas, and populate the Data Catalog. This eliminates the manual effort of schema definition.

- Catalog Metadata: Store and manage metadata about the data in a centralized location, making it easier to organize and govern the data.

- Query Data: Use Athena to query data based on the metadata stored in the Glue Data Catalog. This provides a user-friendly interface for data analysis.

- Transform Data (indirectly): While Athena itself does not perform extensive data transformations, it can query data that has been pre-processed by AWS Glue ETL jobs, allowing for complex data transformations before querying.

Functionalities of Athena and AWS Glue: A Comparison

While both Athena and AWS Glue are essential for data analysis on AWS, they serve distinct purposes. Understanding their differences helps in choosing the appropriate service for specific tasks.

Athena is primarily a query service. It excels at interactive querying and ad-hoc analysis of data stored in S3. It offers a serverless environment, allowing users to query data without managing infrastructure.

AWS Glue, in contrast, is a managed ETL service and a data catalog. It focuses on data preparation, transformation, and metadata management. Glue provides features for data discovery, schema inference, ETL job creation, and data cataloging.

The following table highlights the key differences:

| Feature | Amazon Athena | AWS Glue |
|---|---|---|
| Primary Function | Interactive Querying and Ad-hoc Analysis | ETL, Data Catalog, and Data Transformation |
| Focus | Query Execution and Data Analysis | Data Preparation and Metadata Management |
| Data Transformation | Limited (primarily through SQL) | Extensive (using ETL jobs) |
| Pricing Model | Pay per query (based on data scanned) | Pay per use (for crawlers, ETL jobs, and Data Catalog storage) |
| Serverless | Yes | Yes (for most components) |

The best approach is often to use them together: Athena for querying and analyzing data, and Glue for preparing and managing that data. For example, one might use Glue to clean and transform raw data, then use Athena to query the transformed data.

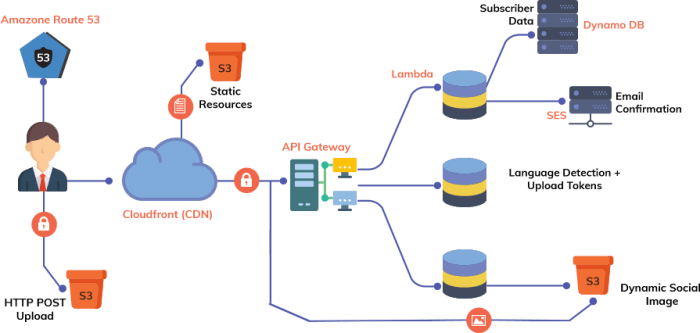

Creating a Data Pipeline Using Athena and Other AWS Services

Building a data pipeline using Athena involves several AWS services to automate the flow of data from its source to analysis. The following example illustrates the process of creating a simple data pipeline using S3, Glue, Athena, and Amazon CloudWatch for monitoring.

Step 1: Data Ingestion and Storage in S3

Data is initially ingested into an Amazon S3 bucket. This can be done through various methods, such as uploading files directly, using AWS CLI commands, or through applications that write data to S3. The data format (e.g., CSV, JSON, Parquet) and the organization of the data within S3 (e.g., partitioning by date) will influence how Athena queries the data.
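Naming folders with the Hive-style `column=value` convention lets Glue crawlers name partition columns and lets Athena's `MSCK REPAIR TABLE` discover partitions. A minimal sketch of generating such keys before upload follows. The function names, prefix, and `dt` column are hypothetical, and the upload itself (for example, with an S3 client's `put_object`) is left out.

```python
from datetime import date
from typing import List, Tuple


def partitioned_key(prefix: str, partitions: List[Tuple[str, str]], filename: str) -> str:
    """Build an S3 key with Hive-style column=value folders, e.g. logs/dt=2024-05-01/f.parquet."""
    for column, value in partitions:
        # A '/' or '=' inside a value would corrupt the folder structure.
        if "/" in value or "=" in value:
            raise ValueError(f"partition value for {column!r} must not contain '/' or '='")
    folders = [f"{column}={value}" for column, value in partitions]
    return "/".join([prefix.strip("/"), *folders, filename])


def daily_key(prefix: str, day: date, filename: str) -> str:
    """Convenience wrapper for the common case of one `dt` partition per day."""
    return partitioned_key(prefix, [("dt", day.isoformat())], filename)


if __name__ == "__main__":
    print(daily_key("requests", date(2024, 5, 1), "part-0000.parquet"))
```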

Step 2: Data Cataloging with AWS Glue

An AWS Glue crawler is configured to scan the S3 bucket. The crawler infers the schema of the data, creates tables in the Glue Data Catalog, and registers the metadata. This includes information about the data format, data types, and any partitioning schemes used. The crawler can be scheduled to run periodically to automatically update the Data Catalog as new data arrives in S3.

Step 3: Querying with Amazon Athena

Using Athena, users can query the data in S3 using standard SQL. Athena reads the metadata from the Glue Data Catalog to understand the structure of the data and the location of the data files. Users can execute SQL queries to analyze the data, generate reports, and extract insights.
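Queries can also be submitted programmatically. This sketch assumes a client object with the same `start_query_execution` and `get_query_execution` methods as the boto3 Athena client; passing the client in also makes the function testable without AWS. The function name, database, and output location are hypothetical, and a production version might add retries or rely on a workgroup's configured output location.

```python
import time
from typing import Any, Callable, Tuple

TERMINAL_STATES = {"SUCCEEDED", "FAILED", "CANCELLED"}


def run_query(client: Any, sql: str, database: str, output_location: str,
              poll_seconds: float = 1.0, timeout_seconds: float = 300.0,
              sleep: Callable[[float], None] = time.sleep) -> Tuple[str, int]:
    """Start an Athena query, wait for it to finish, and return (query ID, bytes scanned)."""
    started = client.start_query_execution(
        QueryString=sql,
        QueryExecutionContext={"Database": database},
        ResultConfiguration={"OutputLocation": output_location},
    )
    query_id = started["QueryExecutionId"]
    waited = 0.0
    while True:
        execution = client.get_query_execution(QueryExecutionId=query_id)["QueryExecution"]
        status = execution["Status"]
        if status["State"] in TERMINAL_STATES:
            if status["State"] != "SUCCEEDED":
                reason = status.get("StateChangeReason", "no reason given")
                raise RuntimeError(f"query {query_id} ended as {status['State']}: {reason}")
            # DataScannedInBytes is what Athena bills for.
            return query_id, execution.get("Statistics", {}).get("DataScannedInBytes", 0)
        if waited >= timeout_seconds:
            raise TimeoutError(f"query {query_id} still {status['State']} after {waited} s")
        sleep(poll_seconds)  # QUEUED or RUNNING: poll again later
        waited += poll_seconds
```

With boto3 installed and credentials configured, a call might look like `run_query(boto3.client("athena"), "SELECT status, count(*) FROM requests GROUP BY status", "weblogs", "s3://example-results-bucket/athena/")`.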

Step 4: Monitoring with Amazon CloudWatch

CloudWatch is used to monitor the performance and health of the data pipeline. When a workgroup is configured to publish query metrics, Athena sends metrics such as `TotalExecutionTime` (query run time) and `ProcessedBytes` (data scanned) to CloudWatch, from which query counts and trends can also be derived. Alarms can be set up to notify users of issues, such as slow query performance or failed queries.

This pipeline can be further enhanced by:

- Data Transformation with Glue: Use Glue ETL jobs to transform the data before it is queried by Athena. This can include cleaning, filtering, and aggregating the data.

- Data Visualization with Amazon QuickSight: Integrate Athena with QuickSight to create interactive dashboards and visualizations of the query results.

- Automation with AWS Lambda: Trigger Athena queries automatically using AWS Lambda based on events in S3 or other AWS services.
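As one example of such automation, a Lambda function triggered by S3 `ObjectCreated` events can register each new Hive-style partition with Athena. The sketch below only builds the `ALTER TABLE ... ADD IF NOT EXISTS PARTITION` statements. The database and table names are hypothetical, partition values are taken from folder names as strings, and submitting the statements (for example, with the Athena client's `start_query_execution`) is left out. Athena's partition projection is an alternative that avoids registering partitions at all.

```python
from typing import Any, Dict, List
from urllib.parse import unquote_plus

DATABASE = "weblogs"   # hypothetical database name
TABLE = "requests"     # hypothetical table name


def _quote(value: str) -> str:
    """Render a SQL string literal, doubling any single quotes."""
    return "'" + value.replace("'", "''") + "'"


def partition_statements(event: Dict[str, Any], database: str, table: str) -> List[str]:
    """Build one ADD PARTITION statement per new Hive-style folder in an S3 event."""
    statements: List[str] = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        key = unquote_plus(record["s3"]["object"]["key"])  # S3 event keys are URL-encoded
        folder = key.rpartition("/")[0]
        spec = [segment.split("=", 1) for segment in folder.split("/") if "=" in segment]
        if not spec:
            continue  # object is not under a column=value folder
        values = ", ".join(f"{column} = {_quote(value)}" for column, value in spec)
        statement = (f"ALTER TABLE {database}.{table} ADD IF NOT EXISTS "
                     f"PARTITION ({values}) LOCATION 's3://{bucket}/{folder}/'")
        if statement not in statements:  # several files can land in the same partition
            statements.append(statement)
    return statements


def lambda_handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
    """Entry point for an S3-triggered Lambda function (submission to Athena omitted)."""
    return {"statements": partition_statements(event, DATABASE, TABLE)}
```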

This comprehensive integration allows for the creation of scalable, cost-effective, and automated data pipelines for various analytical purposes.

Athena Performance Tuning and Best Practices

Optimizing Amazon Athena performance is crucial for cost efficiency and faster query execution. Because Athena is serverless, tuning focuses on query design, data storage layout, and workgroup settings such as the engine version rather than on servers. Effective tuning minimizes latency and resource consumption, ensuring that Athena remains a performant solution for data analysis.

Strategies for Improving Query Performance in Athena

Several strategies can significantly enhance query performance within Athena. These methods target different aspects of the query execution process, from data access to resource allocation. Implementing these techniques can result in substantial improvements in query speed and reduced costs.

- Partitioning Data: Partitioning involves organizing data into logical segments based on frequently queried columns (e.g., date, region). This allows Athena to selectively scan only the relevant partitions, drastically reducing the amount of data processed. For instance, if you frequently query data by date, partitioning your data by date enables Athena to avoid scanning irrelevant data, significantly speeding up queries.

- Data Compression: Compressing data reduces the amount of data that needs to be read from storage, leading to faster query execution. Athena supports various compression formats, including GZIP, Snappy, and Zstandard. The optimal compression method depends on the data and query patterns. For example, using Snappy compression often provides a good balance between compression ratio and decompression speed.

- Query Optimization: Writing efficient SQL queries is paramount. Avoid `SELECT *`, which forces Athena to read every column; instead, specify only the required columns. Use `WHERE` clauses to filter data as early as possible, especially on partition columns. For joins, put the larger table on the left side of the join and the smaller table on the right, because Athena distributes the right-hand table to worker nodes and streams the left-hand table through the join. Consider using `EXISTS` or `NOT EXISTS` rather than `COUNT(*)` when you only need to check whether a record exists.

- Using Appropriate Data Types: Choosing the correct data types for your columns can improve query performance. For instance, using `INT` instead of `VARCHAR` for numerical data reduces storage size and speeds up calculations. Properly defined data types enable Athena to optimize storage and processing, leading to faster query results.

- Leveraging the Cost-Based Optimizer (CBO): In Athena engine version 3, the CBO uses table and column statistics from the AWS Glue Data Catalog to estimate the cost of alternative execution plans (for example, different join orders) and chooses the cheapest one. Keep those statistics current, for example by regenerating column statistics for the table in AWS Glue after large data changes, so that the CBO's cost estimates stay accurate.

- Reusing Query Results: Athena does not cache results automatically, but with engine version 3 you can enable query result reuse when you run a query. If an identical query has already run within the maximum age you specify, Athena returns the stored results instead of scanning the data again, which reduces latency. This suits frequently repeated queries over data that changes infrequently.
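The effect of partitioning on the data Athena reads can be modeled with simple arithmetic. This toy model assumes daily partitions with known sizes and a query that filters on the partition column; it ignores column pruning and compression, and the table sizes are invented for illustration.

```python
from datetime import date
from typing import Dict

GIB = 1024 ** 3


def bytes_scanned(partition_sizes: Dict[date, int], start: date, end: date) -> int:
    """Bytes read when the WHERE clause keeps only daily partitions in [start, end]."""
    return sum(size for day, size in partition_sizes.items() if start <= day <= end)


if __name__ == "__main__":
    # Hypothetical table: 30 daily partitions of 10 GiB each.
    sizes = {date(2024, 5, day): 10 * GIB for day in range(1, 31)}
    one_day = bytes_scanned(sizes, date(2024, 5, 1), date(2024, 5, 1))
    full = sum(sizes.values())  # what an unpartitioned table would force Athena to read
    print(f"pruned: {one_day / GIB:.0f} GiB, unpruned: {full / GIB:.0f} GiB")
```

Since Athena bills by data scanned, the same ratio (here 1 day out of 30) applies to the query's cost.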

Methods for Optimizing Data Storage for Athena

Optimizing data storage is essential for Athena performance. The way data is stored directly impacts query speed and storage costs. Implementing these optimization techniques can lead to significant improvements in overall performance.

- Choosing the Right File Format: The file format impacts both storage costs and query performance. Athena supports formats such as Parquet, ORC, and CSV. Parquet and ORC are columnar storage formats, which are generally more efficient for analytical queries because they allow Athena to read only the necessary columns. CSV is less efficient, especially for complex queries. Consider Parquet or ORC for optimal performance.

- Data Partitioning and Folder Structure: Organizing data into a logical folder structure that aligns with your partitioning strategy is critical. For example, if partitioning by date, create folders for each date. This structure allows Athena to efficiently identify and scan the relevant data.

- Optimizing Data Placement on S3: Data placement on Amazon S3 can impact performance. Store data in the same AWS Region as your Athena queries to minimize latency. Ensure data is stored in a cost-effective storage class. Consider using S3 Intelligent-Tiering to move data automatically between access tiers based on access patterns, but avoid its optional archive tiers for data you query, because Athena cannot read archived objects until they are restored.

- Data Compaction: Compacting small files into larger files can improve query performance. Athena often processes many small files, which can introduce overhead. Compacting these files reduces the number of files Athena needs to scan. Tools and processes can be used to consolidate small files into larger ones.
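Deciding which files to merge is a simple bin-packing step. The hypothetical planner below groups files smaller than an assumed target size (128 MiB, a common rule of thumb rather than an Athena requirement) into batches close to that size, without mixing files from different S3 folders, so each partition is compacted separately. The rewrite itself could be done with an AWS Glue job or an Athena `CREATE TABLE AS SELECT` query; Apache Iceberg tables in Athena can instead use `OPTIMIZE ... REWRITE DATA USING BIN_PACK`.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

MIB = 1024 * 1024


def plan_compaction(files: List[Tuple[str, int]],
                    target_bytes: int = 128 * MIB) -> List[List[str]]:
    """Group small files in the same S3 folder into batches of roughly target_bytes."""
    by_folder: Dict[str, List[Tuple[str, int]]] = defaultdict(list)
    for key, size in files:
        if size < target_bytes:  # files at or above the target are left alone
            by_folder[key.rpartition("/")[0]].append((key, size))

    batches: List[List[str]] = []
    for folder in sorted(by_folder):
        current: List[str] = []
        current_size = 0
        for key, size in sorted(by_folder[folder]):
            # Close the batch before it would exceed the target size.
            if current and current_size + size > target_bytes:
                batches.append(current)
                current, current_size = [], 0
            current.append(key)
            current_size += size
        if current:
            batches.append(current)
    # Rewriting a single file gains nothing, so drop one-file batches.
    return [batch for batch in batches if len(batch) > 1]
```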

Best Practices for Using Athena Effectively

Adhering to best practices ensures optimal utilization of Athena. Following these recommendations can improve performance, reduce costs, and enhance overall data analysis capabilities.

- Regularly Monitor Query Performance: Monitor query execution times and resource usage to identify performance bottlenecks. Use Athena’s query history and CloudWatch metrics to track performance trends and identify areas for optimization.

- Implement Cost Controls: Set per-query and per-workgroup data usage limits in Athena workgroups, and use budgets and cost alerts to control Athena spending. Tag workgroups with cost allocation tags to track costs by project or team. Regularly review and optimize queries to minimize costs.

- Optimize Data Transfer Costs: Minimize data transfer costs by storing data in the same AWS Region as your Athena queries. Avoid unnecessary data transfers between regions.

- Keep the Engine Version Current: Athena engine version 2 is based on Presto and engine version 3 is based on Trino, and the engine version is configured per workgroup. Newer engine versions often include performance improvements and bug fixes, so upgrade workgroups to take advantage of them after testing your queries against the new version.

- Document Your Data and Queries: Maintain clear documentation of your data schema, partitioning strategy, and query logic. This helps with collaboration and troubleshooting. Documenting queries also facilitates the understanding of query behavior.

- Test Queries in a Development Environment: Before running complex queries against production data, test them in a development environment to identify potential issues and optimize performance. This prevents costly mistakes and minimizes impact on production workloads.

Epilogue

In conclusion, Amazon Athena presents a compelling paradigm shift in data querying, offering a serverless, scalable, and cost-effective solution for analyzing diverse datasets. From its serverless architecture and SQL-based querying capabilities to its seamless integration with other AWS services, Athena provides a robust and adaptable platform for various data analysis requirements. The service continues to evolve, optimizing performance, expanding its capabilities, and solidifying its position as a crucial tool for businesses and individuals seeking efficient and insightful data analysis in the cloud environment.

FAQ Guide

What is the primary cost driver when using Amazon Athena?

The primary cost driver is the amount of data scanned during query execution. Efficient query design and data partitioning are crucial for cost optimization.
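Because cost follows bytes scanned, a rough estimate is easy to compute, for example from the `DataScannedInBytes` statistic Athena reports for each query. This sketch assumes a price of USD 5 per TB scanned, rounding up to the next whole megabyte with a 10 MB minimum per query, and binary units (1 TB = 1024^4 bytes); treat these figures as assumptions to verify against current Athena pricing for your Region. The function name is hypothetical.

```python
BYTES_PER_MB = 1024 ** 2
MB_PER_TB = 1024 ** 2
PRICE_PER_TB_USD = 5.00   # assumption: check current Athena pricing for your Region
MIN_BILLED_MB = 10        # assumption: per-query minimum charge


def estimate_query_cost(bytes_scanned: int, price_per_tb: float = PRICE_PER_TB_USD) -> float:
    """Estimate the charge in USD for one successful query from the bytes it scanned."""
    # Ceiling division rounds up to whole megabytes; the minimum applies to tiny scans.
    billed_mb = max(-(-bytes_scanned // BYTES_PER_MB), MIN_BILLED_MB)
    return billed_mb / MB_PER_TB * price_per_tb


if __name__ == "__main__":
    # One day of a hypothetical 10 GiB-per-day table versus a full 30-day scan.
    print(f"${estimate_query_cost(10 * 1024 ** 3):.4f} vs ${estimate_query_cost(300 * 1024 ** 3):.4f}")
```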

Does Athena support ACID transactions?

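Not for standard Hive-style tables over files in S3. Athena does support ACID transactions on Apache Iceberg tables, including `INSERT INTO`, `UPDATE`, `DELETE`, and `MERGE INTO` statements. Even so, Athena is designed primarily for read-heavy, analytical workloads.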

Can I use Athena to query data stored in my on-premises databases?

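Yes, through Athena Federated Query, which queries external data sources, including relational databases, using data source connectors that run on AWS Lambda. An on-premises database is reachable this way if the connector has network connectivity to it (for example, through a VPN or AWS Direct Connect). Alternatively, you can migrate or replicate your on-premises data to Amazon S3 and query it there with Athena.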

What security measures are available to protect data in Athena?

Athena integrates with AWS Identity and Access Management (IAM) for access control, supports encryption at rest and in transit, and integrates with AWS Lake Formation for more granular access control.

How does Athena handle data format conversions?

Athena can query various data formats, including CSV, JSON, Parquet, and ORC. It uses format-specific SerDes (serializer/deserializer libraries) to interpret the data and convert it into rows for query processing.