Data masking and tokenization are crucial security techniques for protecting sensitive information. These methods allow organizations to safeguard confidential data while still enabling legitimate access and use. By understanding the fundamental differences and various applications of these methods, businesses can proactively mitigate risks and ensure compliance with industry regulations.

This guide provides a comprehensive overview of data masking and tokenization, exploring different techniques, implementations, tools, and considerations. We’ll delve into the nuances of each approach, highlighting their strengths and weaknesses, and ultimately empowering you to make informed decisions regarding data security.

Introduction to Data Masking and Tokenization

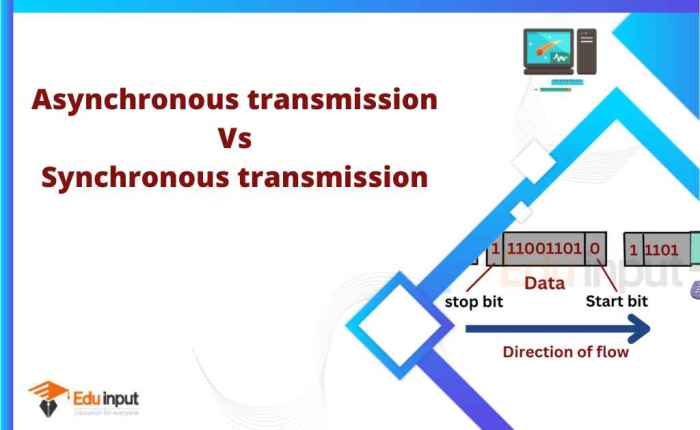

Data masking and tokenization are crucial techniques in data privacy and security. These methods protect sensitive data by transforming it into a less revealing form while still allowing authorized users to work with it for legitimate business purposes. They are vital components of data governance and compliance initiatives, particularly for organizations subject to regulations such as GDPR and CCPA. The two techniques differ in how they protect data.

Data masking obscures or alters the original values, and the change is generally irreversible. Tokenization replaces each value with a non-sensitive token that an authorized tokenization service can map back to the original. This distinction determines who can ever see the original data and how much re-identification risk remains. Understanding these differences is essential for selecting the appropriate technique for a given scenario.

Data Masking

Data masking alters sensitive values while keeping the records and their structure in place, so systems and tests that depend on the data's format continue to work. Various masking techniques are employed, including:

- Value Masking: Replacing specific values with a predetermined substitute (e.g., replacing social security numbers with “XXX-XX-XXXX”).

- Range Masking: Replacing values within a specific range with a representative value (e.g., replacing salaries between $50,000 and $75,000 with an average salary within that range).

- Data Type Masking: Converting sensitive data into a different data type (e.g., replacing dates with a generic placeholder).

- Pattern Masking: Replacing parts of data with a consistent pattern (e.g., replacing credit card numbers with a series of asterisks).
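The value, range, and pattern techniques above can be sketched in a few lines of Python. This is a minimal illustration, not a production masking engine: the function names are hypothetical, the salary is replaced with the midpoint of its band (one reading of "an average salary within that range"), and the card mask keeps the last four digits, a common convention rather than a requirement.

```python
def value_mask_ssn(ssn: str) -> str:
    """Value masking: every SSN becomes the same fixed placeholder."""
    return "XXX-XX-XXXX"


def range_mask_salary(salary: float, bands: list[tuple[float, float]]) -> float:
    """Range masking: replace a salary with the midpoint of the band that contains it."""
    for low, high in bands:
        if low <= salary <= high:
            return (low + high) / 2
    # Outside every configured band: returned unchanged (a policy decision).
    return salary


def pattern_mask_card(card: str, keep_last: int = 4) -> str:
    """Pattern masking: replace all but the last `keep_last` digits with '*',
    leaving separators such as '-' or ' ' where they were."""
    total_digits = sum(ch.isdigit() for ch in card)
    seen = 0
    masked = []
    for ch in card:
        if ch.isdigit():
            seen += 1
            masked.append(ch if seen > total_digits - keep_last else "*")
        else:
            masked.append(ch)
    return "".join(masked)


print(value_mask_ssn("123-45-6789"))                  # XXX-XX-XXXX
print(range_mask_salary(60_000, [(50_000, 75_000)]))  # 62500.0
print(pattern_mask_card("4111-1111-1111-1234"))       # ****-****-****-1234
```

Because the card mask walks the string character by character, the original formatting (dashes or spaces) survives, which is what lets downstream systems keep validating the field's shape.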

Data Tokenization

Tokenization involves replacing sensitive data with unique, non-sensitive tokens. The mapping between each token and its original value is stored separately, in a secure tokenization service (often called a token vault). Systems can therefore work with the tokens, while the original value cannot be derived from a token and can be retrieved only by authorized callers of the service. This approach is often preferred for highly sensitive data where even partial disclosure is unacceptable.

- Data Replacement: A crucial step in tokenization where sensitive data is replaced with non-sensitive tokens.

- Token Management: A secure tokenization service handles the tokens, enabling authorized access while maintaining data security.
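The replacement and token-management steps can be sketched in Python as below. This is an illustrative in-memory sketch under stated assumptions: the class name, the `tok_` prefix, and the choice to return the same token for a repeated value are hypothetical, and a real tokenization service would keep the mapping in hardened, access-controlled storage rather than in Python dictionaries.

```python
import secrets


class TokenVault:
    """Minimal in-memory token vault (illustrative only)."""

    def __init__(self) -> None:
        self._token_to_value: dict[str, str] = {}
        self._value_to_token: dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        # Reuse the existing token so the same input always maps to the same token.
        if value in self._value_to_token:
            return self._value_to_token[value]
        # Random token: it carries no information about the original value.
        token = "tok_" + secrets.token_hex(16)
        self._token_to_value[token] = value
        self._value_to_token[value] = token
        return token

    def detokenize(self, token: str) -> str:
        # Only the vault can reverse a token; raises KeyError for unknown tokens.
        return self._token_to_value[token]


vault = TokenVault()
token = vault.tokenize("4111111111111234")
print(token)                    # random, e.g. tok_ followed by 32 hex characters
print(vault.detokenize(token))  # 4111111111111234
```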

Examples of Data Types

Data masking and tokenization can be applied to a wide array of data types, including but not limited to:

- Financial Data: Credit card numbers, account balances, transaction details.

- Personally Identifiable Information (PII): Names, addresses, phone numbers, social security numbers.

- Healthcare Information: Patient records, medical diagnoses, treatment details.

- Government Data: Tax information, government IDs, citizen records.

Comparison of Data Masking and Tokenization

| Data Type | Masking Method | Tokenization Method | Pros/Cons |
|---|---|---|---|
| Credit Card Numbers | Replacing with asterisks or placeholder values | Replacing with unique, non-sensitive tokens | Masking: Simple to implement, preserves data structure; Tokenization: Stronger protection, prevents re-identification, but requires a token management system. |
| Social Security Numbers | Replacing with “XXX-XX-XXXX” | Replacing with a unique token linked to a secure database | Masking: Relatively simple, data structure maintained; Tokenization: Stronger privacy protection, prevents re-identification, but has additional complexity. |
| Dates of Birth | Replacing with generic placeholder | Replacing with a unique token | Masking: Simple and quick; Tokenization: Strong protection but requires a lookup system. |
| Addresses | Replacing street numbers or parts of the address with generic values | Replacing with a unique token linked to a secure database | Masking: Relatively simple, maintains some data context; Tokenization: Strong protection, but might require additional data validation. |

Types of Data Masking Techniques

Data masking is a crucial technique for protecting sensitive data during storage, transmission, and processing. Different masking techniques cater to diverse needs and levels of sensitivity, offering a tailored approach to data security. Understanding these techniques is vital for organizations to implement robust data protection strategies. Various data masking methods exist, each with its own set of characteristics, advantages, and disadvantages.

These methods aim to transform or replace sensitive data with pseudonyms or simulated data while preserving the data’s overall statistical properties. Selecting the appropriate technique depends on the specific requirements of the data and the context in which it is used.

Substitution

Substitution involves replacing sensitive data values with pseudonyms or surrogate values. This method is commonly used for masking personally identifiable information (PII) such as names, addresses, and social security numbers. For example, a customer’s name might be replaced with a unique identifier or a generic name. The substituted values must be carefully chosen to avoid revealing any information about the original data.

The main advantages of substitution are simplicity and speed: it is straightforward to implement and can be applied quickly. If the same original value is always replaced with the same surrogate, substitution also preserves relationships between records (for example, joins on a customer name still work), which minimizes disruption to existing systems.

The main disadvantage is the risk of re-identification if the substitution scheme is poorly designed or if the masked data is combined with other datasets. This is a critical consideration when choosing this technique.

Additionally, the choice of surrogate values can affect the analytical accuracy of the masked data, especially if the surrogate values do not adequately reflect the original data’s distribution.
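A minimal sketch of consistent substitution in Python follows. The surrogate pool and class name are hypothetical, and `random.Random` is used only so test data is reproducible; it is not cryptographically secure. Note that the internal mapping links real names to surrogates, so it must be discarded or protected once masking is complete.

```python
import random
from typing import Optional

# Hypothetical pool of surrogate names; a real deployment would use a much
# larger pool so that surrogates never run out or repeat.
SURROGATE_NAMES = ["Alex Doe", "Sam Roe", "Jordan Poe", "Casey Moe", "Riley Lowe"]


class NameSubstituter:
    """Consistent substitution: the same real name always gets the same surrogate."""

    def __init__(self, pool: list[str], seed: Optional[int] = None) -> None:
        self._unused = list(pool)
        self._rng = random.Random(seed)  # reproducible, not cryptographic
        self._mapping: dict[str, str] = {}

    def substitute(self, name: str) -> str:
        if name not in self._mapping:
            if not self._unused:
                raise ValueError("surrogate pool exhausted")
            choice = self._rng.choice(self._unused)
            self._unused.remove(choice)  # each surrogate is used for one real name only
            self._mapping[name] = choice
        return self._mapping[name]


sub = NameSubstituter(SURROGATE_NAMES, seed=7)
orders = [("Maria Garcia", 120), ("John Smith", 35), ("Maria Garcia", 60)]
masked_orders = [(sub.substitute(name), amount) for name, amount in orders]
print(masked_orders)
```

Both of Maria Garcia's orders carry the same surrogate, so per-customer totals computed on the masked data still match the originals.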

Generalization

Generalization involves replacing specific values with more general categories or groups. For example, instead of storing precise ages, generalized age ranges (e.g., 25-34, 35-44) can be used. This technique is particularly useful for masking data that can be grouped into meaningful categories.

The main advantage of generalization is that it preserves the coarse statistical distribution of the data while hiding individual values, so aggregated data can still be analyzed while individual records are protected.

The main disadvantage is loss of detail, which affects analyses that require precise values. The level of generalization should be chosen carefully to strike a balance between data protection and analysis requirements.
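The age-range example can be expressed as a small function. This is a sketch under assumptions: the function name is hypothetical, and the band width and offset default to the 25-34, 35-44 pattern used above, with ages below the first boundary grouped into a single 0-4 band.

```python
def generalize_age(age: int, width: int = 10, offset: int = 5) -> str:
    """Map an exact age to a band such as '25-34' or '35-44'."""
    if age < 0:
        raise ValueError("age must be non-negative")
    if age < offset:
        return f"0-{offset - 1}"
    start = ((age - offset) // width) * width + offset
    return f"{start}-{start + width - 1}"


print(generalize_age(29))  # 25-34
print(generalize_age(40))  # 35-44
print(generalize_age(3))   # 0-4
```

Widening `width` trades analytical detail for protection, which is the balance described above.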

Suppression

Suppression involves removing specific data values entirely. This technique is effective for masking data that is not essential for a particular analysis or use case. For example, in a customer survey, certain responses might be suppressed to protect individual preferences.

The main advantages of suppression are simplicity and effectiveness in reducing the risk of re-identification: it is often the most straightforward masking method, and it works particularly well when whole columns can be dropped.

The main disadvantage is loss of information, which may affect analysis. The impact of suppression on the overall data analysis should be evaluated carefully.
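Column-level suppression amounts to dropping fields before data is released. A minimal Python sketch, assuming records are dictionaries and using hypothetical field names from the survey example:

```python
def suppress_fields(record: dict, fields: set[str]) -> dict:
    """Return a copy of `record` without the suppressed fields; the input is not modified."""
    return {key: value for key, value in record.items() if key not in fields}


response = {"respondent_id": 17, "age_band": "35-44", "satisfaction": 4,
            "free_text_comment": "I shop here because my sister works at the Elm St store"}
print(suppress_fields(response, {"free_text_comment"}))
# {'respondent_id': 17, 'age_band': '35-44', 'satisfaction': 4}
```

Free-text fields are a typical candidate for suppression because they can contain identifying details that no pattern-based rule reliably catches.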

Data Anonymization

Data anonymization is a set of techniques for removing personally identifiable information (PII) so that the data can no longer be linked to any individual. It typically combines generalization, suppression, and pseudonymization. For instance, a customer’s name, address, and phone number might be removed or replaced with a non-identifying value, while attributes such as age and ZIP code are generalized.

The main advantage of data anonymization is a high level of privacy protection, which suits highly sensitive datasets. Data that is truly anonymized falls outside the scope of GDPR, which simplifies compliance. Note, however, that replacing identifiers with consistent pseudonyms on its own is pseudonymization, and GDPR still treats pseudonymized data as personal data.

The main disadvantage is reduced usability of the data for some analytical purposes; anonymization can also make it difficult to link records together.
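The combination of suppression and generalization can be sketched as follows. The field names are hypothetical, and the `smallest_group` check is a swapped-in measure (the "k" of k-anonymity) rather than something the text prescribes: it reports the size of the smallest group of records sharing the same generalized attributes, and a small value means some individuals may still be distinguishable.

```python
from collections import Counter

# Hypothetical field names: direct identifiers are dropped, quasi-identifiers generalized.
DIRECT_IDENTIFIERS = {"name", "address", "phone"}
QUASI_IDENTIFIERS = ("age", "zip")


def anonymize(record: dict) -> dict:
    # Suppression: remove direct identifiers outright.
    out = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    # Generalization: exact age -> decade band, 5-digit ZIP -> 3-digit prefix.
    decade = (out["age"] // 10) * 10
    out["age"] = f"{decade}-{decade + 9}"
    out["zip"] = out["zip"][:3] + "**"
    return out


def smallest_group(records: list[dict]) -> int:
    """Size of the smallest group of records sharing identical quasi-identifier values."""
    groups = Counter(tuple(r[q] for q in QUASI_IDENTIFIERS) for r in records)
    return min(groups.values())


customers = [
    {"name": "Maria Garcia", "address": "12 Oak St", "phone": "555-0101", "age": 34, "zip": "94107", "visits": 5},
    {"name": "John Smith", "address": "9 Elm Ave", "phone": "555-0102", "age": 37, "zip": "94110", "visits": 2},
    {"name": "Ana Lee", "address": "4 Pine Rd", "phone": "555-0103", "age": 31, "zip": "94103", "visits": 7},
]
released = [anonymize(c) for c in customers]
print(smallest_group(released))  # 3: every record falls in ("30-39", "941**")
```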

Table Comparing Masking Techniques

| Technique | Security | Performance | Ease of Implementation |
|---|---|---|---|
| Substitution | Medium | High | High |
| Generalization | Medium-High | Medium | Medium |
| Suppression | High | High | High |
| Data Anonymization | High | Low | Low |

Types of Tokenization Techniques

Tokenization, a close relative of data masking, transforms sensitive data into a non-sensitive representation. This transformation preserves the data’s utility for analysis and reporting while significantly reducing the risk of unauthorized access and breaches. Effective tokenization methods must ensure that a token cannot be linked back to the original value without access to the protected mapping or key. Different tokenization methods cater to various data types and security needs.

Surrogate Keys

Surrogate keys, often used in database systems, are unique identifiers that replace the original primary keys. These keys are carefully chosen to avoid any correlation with the original data’s meaning. For instance, a social security number might be replaced by a randomly generated surrogate key. The critical aspect of this method is the creation of a mapping between the surrogate key and the original data, typically stored in a separate, secure location.

This mapping allows the retrieval of the original data when required, while the surrogate key acts as a secure substitute in all other operations.
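A surrogate-key mapping table can be sketched with Python's built-in `sqlite3` module. The table and function names are hypothetical, and the in-memory database only keeps the example self-contained; in practice the mapping would live in a separate, access-controlled store, as described above.

```python
import secrets
import sqlite3
from typing import Optional


def open_mapping_store(path: str = ":memory:") -> sqlite3.Connection:
    # Stand-in for a separate, secured mapping database.
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS ssn_map ("
        " surrogate TEXT PRIMARY KEY,"
        " ssn TEXT NOT NULL UNIQUE)"
    )
    return conn


def surrogate_for(conn: sqlite3.Connection, ssn: str) -> str:
    """Return the existing surrogate for `ssn`, or create a random one."""
    row = conn.execute("SELECT surrogate FROM ssn_map WHERE ssn = ?", (ssn,)).fetchone()
    if row is not None:
        return row[0]
    surrogate = secrets.token_hex(8)  # random, so it has no relationship to the SSN
    conn.execute("INSERT INTO ssn_map (surrogate, ssn) VALUES (?, ?)", (surrogate, ssn))
    conn.commit()
    return surrogate


def original_for(conn: sqlite3.Connection, surrogate: str) -> Optional[str]:
    """Reverse lookup, which only holders of the mapping store can perform."""
    row = conn.execute("SELECT ssn FROM ssn_map WHERE surrogate = ?", (surrogate,)).fetchone()
    return row[0] if row is not None else None


store = open_mapping_store()
key = surrogate_for(store, "123-45-6789")
print(original_for(store, key))  # 123-45-6789
```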

Universally Unique Identifiers (UUIDs)

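UUIDs are globally unique identifiers, often employed in distributed systems and applications. Version 4 UUIDs are generated from random numbers, and that randomness, together with their practical uniqueness, makes them well suited to masking sensitive data. Version 1 UUIDs, by contrast, embed a timestamp and a node identifier (often the host's MAC address) and so leak information about when and where they were created. A UUID such as ‘a1b2c3d4-e5f6-7890-1234-567890abcdef’ can replace a specific customer ID without compromising data utility. The primary benefit of random UUIDs is that they have no correlation with the original data.

However, their length (36 characters as text, 16 bytes in binary form) might impact storage and index performance in certain applications.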
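Issuing random UUID tokens needs only the standard `uuid` module. In this sketch the dictionary stands in for a secured mapping store, and the function name and customer ID format are hypothetical.

```python
import uuid

# Hypothetical in-memory mapping; a real system would keep it in a secured store.
_uuid_tokens: dict[str, str] = {}


def uuid_token_for(customer_id: str) -> str:
    """Return a stable, random (version 4) UUID token for a customer ID."""
    if customer_id not in _uuid_tokens:
        _uuid_tokens[customer_id] = str(uuid.uuid4())
    return _uuid_tokens[customer_id]


token = uuid_token_for("CUST-000123")
print(uuid.UUID(token).version)     # 4
print(len(uuid.UUID(token).bytes))  # 16
```

Storing `uuid.UUID(token).bytes` (16 bytes) instead of the 36-character string is one way to soften the performance cost mentioned above.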

Hashing Techniques

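Hashing algorithms transform data into a fixed-size string, or hash. A cryptographic hash function is one-way: the original data cannot be computed directly from the hash, which is why hashing is often used for passwords and other sensitive information. Because the output has a fixed size, different inputs can in principle produce the same hash (a collision), although for modern algorithms such as SHA-256 this is negligible in practice. The more serious weakness is that hashing is deterministic: when the set of possible inputs is small, as with social security numbers or PINs, an attacker can hash every candidate and compare. Salting defends against precomputed lookup tables, and a keyed hash (HMAC) whose secret key is kept outside the dataset prevents this enumeration by anyone who lacks the key.

Hashing therefore does not guarantee complete data masking; its protection depends on both the strength of the algorithm and how inputs and keys are managed.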
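A keyed hash can be produced with Python's standard `hmac` and `hashlib` modules. This sketch swaps in HMAC-SHA256 as the keyed-hash construction; the function name is hypothetical, and generating the key inline is for illustration only.

```python
import hashlib
import hmac
import secrets


def keyed_hash(value: str, key: bytes) -> str:
    """HMAC-SHA256 of `value` under a secret key, as a 64-character hex string.

    Deterministic (same input and key give the same output, so joins still work)
    but not reversible, and not enumerable by anyone who lacks the key."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


# Hypothetical key handling: in practice the key comes from a key-management
# service and is never stored alongside the hashed data.
key = secrets.token_bytes(32)
print(keyed_hash("123-45-6789", key))
```

A per-record random salt would defeat joins, because the same SSN would hash differently in every row; a single secret key keeps the output consistent while still blocking enumeration.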

Data Anonymization Techniques

Data anonymization techniques aim to remove identifying information from data sets. This method involves replacing sensitive data with pseudonyms or generic values. While anonymization can significantly reduce the risk of data breaches, it might also compromise data utility, depending on the level of transformation. Carefully selecting anonymization techniques is crucial to maintain data utility while enhancing security.

Table: Strengths and Weaknesses of Tokenization Methods

| Tokenization Method | Strengths | Weaknesses |
|---|---|---|
| Surrogate Keys | Preserves data utility, traceable, easily reversible | Requires separate mapping table, potentially complex setup |
| UUIDs | Globally unique, high randomness, easy to generate | Potential performance impact, less secure if not used properly |
| Hashing | Difficult to reverse, efficient for data transformation | Collision risk, potential for re-identification, limited traceability |
| Data Anonymization | High level of privacy, reducing re-identification risk | Potential loss of data utility, varying levels of anonymization required |

Implementing Data Masking and Tokenization

Implementing data masking and tokenization procedures requires careful planning and execution to ensure data privacy and security while maintaining application functionality. This involves selecting appropriate techniques, designing policies, and integrating them seamlessly into existing systems. A thorough understanding of the specific data types and the intended use cases is critical for successful implementation. A robust implementation strategy involves not only the technical aspects of applying masking and tokenization but also the organizational aspects of defining clear policies and procedures.

This approach ensures consistency, traceability, and compliance with regulatory requirements.

Steps in Implementing Data Masking Procedures

Understanding the data flow and identifying sensitive data are the first steps in implementing data masking. Data inventory and classification identify the specific fields or records that require masking. This analysis is the basis for establishing appropriate masking policies and choosing the most effective technique. Next, select the masking technique and establish a clear masking policy.

The policy covers the scope of the masking (e.g., specific columns, entire records), the type of masking to be applied (e.g., substitution, generalization), and the frequency of updates. A detailed test plan is needed to verify the effectiveness and integrity of the implemented masking. Testing should confirm that the masked data does not break the application’s functionality and that no original sensitive values remain in the masked output.
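One test in such a plan is a scan of the masked output for values that still look sensitive. The sketch below assumes rows are dictionaries; the two regular expressions are hypothetical, deliberately simple detection rules, and a real scanner would need broader, well-tested patterns.

```python
import re

# Hypothetical detection rules; real scanners need broader, well-tested patterns.
SENSITIVE_PATTERNS = {
    "ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "card_number": re.compile(r"\b(?:\d[ -]?){12,15}\d\b"),  # 13 to 16 digits
}


def find_leaks(masked_rows: list[dict]) -> list[tuple[int, str, str]]:
    """Return (row index, column, rule) for every value that still looks sensitive."""
    leaks = []
    for i, row in enumerate(masked_rows):
        for column, value in row.items():
            if not isinstance(value, str):
                continue
            for rule, pattern in SENSITIVE_PATTERNS.items():
                if pattern.search(value):
                    leaks.append((i, column, rule))
    return leaks


masked_sample = [
    {"customer": "cust_9f2c", "ssn": "XXX-XX-6789", "card": "****-****-****-1234"},
    {"customer": "cust_41aa", "ssn": "123-45-6789", "card": "****-****-****-0004"},  # rule missed this SSN
]
print(find_leaks(masked_sample))  # [(1, 'ssn', 'ssn')]
```

Running a check like this in the test suite turns "verify the masking" into a concrete pass/fail condition.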

Steps in Implementing Tokenization Procedures

Similar to masking, tokenization implementation involves a series of steps. Identifying the sensitive data to be tokenized and the scope of the tokenization process is crucial. Choosing the appropriate tokenization technique is vital; this includes deciding whether to use static or dynamic tokens and the token length and complexity. A critical aspect of tokenization implementation is designing a secure token management system.

This involves defining access controls and audit trails for token generation, storage, and retrieval. The process should include establishing a comprehensive testing strategy to ensure the tokenization process does not impact application performance or functionality. Regular audits and reviews of the token management system are necessary for ongoing compliance.
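Access controls and audit trails for a token service can be sketched as follows. Everything here is hypothetical: the class, the role names, and the audit record fields. Each `tokenize` call issues a fresh token; a service that must return the same token for a repeated value would look the value up first. The audit log deliberately records who did what, never the sensitive value itself.

```python
import secrets
from datetime import datetime, timezone


class AuditedTokenService:
    """Hypothetical token service: anyone may tokenize, only approved roles may
    detokenize, and every request is recorded without the sensitive value."""

    def __init__(self, detokenize_roles: set[str]) -> None:
        self._vault: dict[str, str] = {}
        self._detokenize_roles = set(detokenize_roles)
        self.audit_log: list[dict] = []

    def _audit(self, user: str, action: str, outcome: str) -> None:
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "action": action,
            "outcome": outcome,
        })

    def tokenize(self, user: str, value: str) -> str:
        token = secrets.token_urlsafe(16)
        self._vault[token] = value
        self._audit(user, "tokenize", "ok")
        return token

    def detokenize(self, user: str, role: str, token: str) -> str:
        if role not in self._detokenize_roles:
            self._audit(user, "detokenize", "denied")
            raise PermissionError(f"role {role!r} may not detokenize")
        value = self._vault.get(token)
        self._audit(user, "detokenize", "ok" if value is not None else "unknown_token")
        if value is None:
            raise KeyError("unknown token")
        return value


service = AuditedTokenService(detokenize_roles={"fraud_investigator"})
token = service.tokenize("etl_job", "123-45-6789")
print(service.detokenize("ana", "fraud_investigator", token))  # 123-45-6789
try:
    service.detokenize("bob", "marketing", token)
except PermissionError as exc:
    print(exc)  # role 'marketing' may not detokenize
print([entry["outcome"] for entry in service.audit_log])  # ['ok', 'ok', 'denied']
```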

Technical Aspects of Data Masking and Tokenization

The technical aspects of data masking and tokenization vary depending on the chosen method and the specific context. For example, data masking in a relational database might involve using database triggers or stored procedures to mask data on the fly. In a cloud environment, cloud-native masking tools can be leveraged for automating the process. Likewise, tokenization might involve using dedicated libraries or APIs to generate and manage tokens.

Careful consideration of data formats and database structures is essential.
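As one illustration of trigger-based masking, the sketch below uses an SQLite trigger through Python's built-in `sqlite3` module, chosen only because it runs without a database server. The table and trigger names are hypothetical, and SQLite has no GRANT-style permissions, so in a server database you would also restrict who can read the unmasked table.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customers (
    id   INTEGER PRIMARY KEY,
    city TEXT NOT NULL,
    ssn  TEXT NOT NULL
);

-- Masked copy that downstream users (testing, analytics) read instead.
CREATE TABLE customers_masked (
    id   INTEGER PRIMARY KEY,
    city TEXT NOT NULL,
    ssn  TEXT NOT NULL
);

-- Every insert into customers also writes a masked row; substr(x, -4)
-- takes the last four characters of the SSN.
CREATE TRIGGER mask_customer_on_insert
AFTER INSERT ON customers
BEGIN
    INSERT INTO customers_masked (id, city, ssn)
    VALUES (NEW.id, NEW.city, 'XXX-XX-' || substr(NEW.ssn, -4));
END;
""")

conn.execute("INSERT INTO customers (id, city, ssn) VALUES (?, ?, ?)",
             (1, "Springfield", "123-45-6789"))
conn.commit()
print(conn.execute("SELECT id, city, ssn FROM customers_masked").fetchall())
# [(1, 'Springfield', 'XXX-XX-6789')]
```

The trigger fires only on INSERT; UPDATE and DELETE would need their own triggers to keep the masked copy in sync.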

Designing Data Masking Policies

Designing data masking policies involves establishing clear guidelines and rules for masking sensitive data. The policy should define the scope of the masking process (e.g., specific columns, entire records). It should also specify the types of masking to be applied, the frequency of updates, and the procedures for handling exceptions. A crucial element is specifying the criteria for triggering masking.

These criteria might include data type, value ranges, or specific conditions.

Example of Data Masking Policy for Customer Information

Consider a customer information system. A policy might specify that customer addresses should be masked by replacing the street address with a generic placeholder like “Masked Address” and the city and state with a generic region. The policy would specify that this masking should apply to all customer records, regardless of other attributes. This is designed to prevent the disclosure of specific addresses while still allowing access to aggregated customer data.
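The example policy can be written as data (column name mapped to a masking rule) and applied uniformly to every record. This is a sketch under assumptions: the state-to-region lookup, the field names, and the helper functions are hypothetical, and a real policy engine would also carry the triggering criteria and exception handling described above.

```python
# Hypothetical lookup from state to a generic region.
STATE_TO_REGION = {"CA": "West", "OR": "West", "WA": "West", "NY": "Northeast", "TX": "South"}


def region_of(record: dict) -> str:
    return STATE_TO_REGION.get(record.get("state", ""), "Unknown Region")


# Column -> rule. Rules receive the original (unmasked) record, so city and
# state can both be derived from the original state before it is replaced.
CUSTOMER_ADDRESS_POLICY = {
    "street": lambda record: "Masked Address",
    "city": region_of,
    "state": region_of,
}


def apply_policy(record: dict, policy: dict) -> dict:
    """Apply every rule in the policy to every record, regardless of other attributes."""
    masked = dict(record)
    for column, rule in policy.items():
        if column in masked:
            masked[column] = rule(record)
    return masked


customer = {"customer_id": "C-1001", "street": "12 Oak St", "city": "Portland",
            "state": "OR", "segment": "loyalty"}
print(apply_policy(customer, CUSTOMER_ADDRESS_POLICY))
# {'customer_id': 'C-1001', 'street': 'Masked Address', 'city': 'West', 'state': 'West', 'segment': 'loyalty'}
```

Keeping the policy as data rather than code makes it easier to review, version, and audit alongside the governance documents that define it.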

Considerations for Implementation

- Data Inventory and Classification: A comprehensive inventory of data assets, including their sensitivity levels, is essential for determining which data requires masking or tokenization.

- Data Flow Analysis: Understanding the data flow within the application is vital to identify sensitive data and ensure that masking or tokenization does not disrupt functionality.

- Performance Considerations: The chosen masking or tokenization technique should not significantly impact application performance. Testing is critical to verify performance under various conditions.

- Compliance Requirements: Data masking and tokenization should adhere to relevant industry regulations (e.g., GDPR, HIPAA). This might involve obtaining specific approvals or certifications.

- Integration with Existing Systems: The implementation should seamlessly integrate with existing systems to avoid disrupting workflows and processes.

Data Masking and Tokenization Tools

Data masking and tokenization tools are crucial for securely managing sensitive data within organizations. These tools allow businesses to protect confidential information while enabling legitimate data access for various purposes, such as testing, analytics, and reporting. Effective selection and implementation of these tools are essential for compliance with data privacy regulations and maintaining data security.

Popular Data Masking and Tokenization Tools

Several tools are available in the market, each with its own strengths and weaknesses. Choosing the right tool depends on specific organizational needs, budget, and technical expertise. Below are some widely used tools.

- Redaction: Redaction tools allow users to selectively remove or mask sensitive data within datasets. They offer granular control over the masking process, enabling users to define specific criteria for identifying and masking data elements. A key feature is the ability to easily identify and redact personal information or other sensitive data. This is highly effective for compliance with regulations like GDPR.

Redaction tools typically provide a range of masking options, allowing users to control the level of data visibility during testing or analytics processes. Examples include masking full fields, partial fields, or specific patterns.

- Data Masking Tools (e.g., Informatica PowerCenter, IBM InfoSphere DataStage): These enterprise-level data masking tools are commonly used in large organizations. They offer comprehensive data masking capabilities, including masking techniques such as data swapping, encryption, and hashing. They also support integration with existing data pipelines and ETL processes. Their advanced features include automated masking rules and validation. These tools often provide strong support for different data formats and structures, ensuring compatibility with diverse data environments.

However, these tools can be expensive and require significant technical expertise for implementation and maintenance.

- Platforms with Tokenization Features (e.g., Fivetran, Snowflake): These tools focus on replacing sensitive data with non-sensitive tokens. They often work by generating unique, non-sensitive identifiers to replace the original data, so that the original values are not visible to users who lack detokenization rights. They facilitate the secure transfer and storage of sensitive data. This approach is particularly useful when dealing with large volumes of data or when the data needs to be shared with external parties.

These tools often provide strong support for data governance and compliance. However, they might require specific integrations or custom configurations depending on the specific data environment.

Comparison of Data Masking and Tokenization Tools

A comparative analysis of popular tools helps in making informed decisions.

| Tool | Features | Pricing | Ease of Use |
|---|---|---|---|
| Redaction Tool | Granular control, flexible masking, easy identification | Variable, often based on features and support | Generally easy to learn and implement |
| Informatica PowerCenter | Comprehensive masking, integration with ETL, automated rules | High | Steep learning curve |
| IBM InfoSphere DataStage | Robust masking, integration with existing platforms, validation | High | Steep learning curve |
| Fivetran | Secure data transfer, tokenization, data governance | Variable, often based on usage | Generally easy to learn and implement |
| Snowflake | Cloud-based tokenization, secure storage, large-scale data handling | Variable, often based on usage | Generally easy to learn and implement |

Data Masking and Tokenization Use Cases

Data masking and tokenization are crucial techniques for protecting sensitive data while enabling legitimate access and use. These methods safeguard confidential information, comply with regulations, and minimize the risk of breaches. This section explores various applications of these techniques across diverse scenarios. Data masking and tokenization are not just theoretical concepts; they are practical tools that organizations employ to mitigate risks and maintain compliance.

Implementing these techniques effectively strengthens data security posture, protects sensitive information from unauthorized access, and ensures regulatory adherence.

Financial Services Use Cases

Data masking and tokenization play a vital role in financial institutions. Protecting customer financial data is paramount. Masking techniques can be used to obscure sensitive account numbers, credit card details, or social security numbers during data analysis or reporting. Tokenization replaces sensitive data with unique, non-sensitive identifiers (tokens). These tokens are stored securely and can be used to access the original data through a secure process.

Healthcare Use Cases

In the healthcare industry, protecting patient data is critical. Data masking techniques are used to protect patient identifiers, medical records, and other sensitive information during data analysis, research, or sharing with third parties. Tokenization is a valuable tool for secure data exchange with other healthcare providers or for research purposes.

Retail Use Cases

Retail organizations often handle vast amounts of customer data, including credit card information and personal details. Data masking and tokenization are essential to protect this data from unauthorized access during data processing, analysis, and reporting. Masking techniques can be used to obscure sensitive data in reports, while tokenization is crucial for secure data sharing with third-party payment processors.

Government Use Cases

Government agencies often handle highly sensitive data, including personal information and financial records. Data masking and tokenization are essential for protecting this data from unauthorized access and ensuring compliance with various regulations. Masking can be used to protect sensitive information in reports, while tokenization can secure data exchanged with other government agencies or for research.

Compliance with Regulations (GDPR, HIPAA)

Data masking and tokenization are critical for meeting regulatory requirements such as GDPR and HIPAA. Neither regulation mandates a specific technique, but both require the protection of personal data: GDPR Article 32 names pseudonymisation and encryption among appropriate technical measures, and the HIPAA Privacy Rule does not restrict the use or disclosure of health information that has been de-identified. Implementing masking and tokenization is therefore a common part of demonstrating compliance with these data protection laws.

Detailed Example: Customer Data Analysis

Consider a retail company analyzing customer purchase data to identify trends. They need access to customer data but cannot expose sensitive data like credit card numbers. To comply with regulations and maintain security, they can use the following approach:

| Step | Action | Description |
|---|---|---|
| 1 | Data Masking | Mask credit card numbers with a placeholder or generic value, e.g., “XXXX-XXXX-XXXX-1234.” |
| 2 | Data Tokenization | Replace customer names with unique, non-sensitive tokens for privacy and security. |
| 3 | Data Analysis | Analyze the masked and tokenized data for trends and insights. |

This approach ensures that sensitive data is protected while allowing the company to perform the necessary analysis without exposing customer information. It supports compliance with regulations and helps maintain customer trust.
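The three steps can be sketched end to end in Python. The sample purchases, field names, and the `cust_` token prefix are hypothetical; the card mask assumes 16-digit card numbers (other lengths would need a different format); and in practice the name tokenizer would be owned by a separate tokenization service rather than by the analysis job.

```python
import secrets
from collections import Counter


def mask_card(card_number: str) -> str:
    """Step 1: keep only the last four digits, in the XXXX-XXXX-XXXX-1234 format."""
    digits = [ch for ch in card_number if ch.isdigit()]
    return "XXXX-XXXX-XXXX-" + "".join(digits[-4:])


class NameTokenizer:
    """Step 2: the same customer name always maps to the same random token."""

    def __init__(self) -> None:
        self._tokens: dict[str, str] = {}

    def token_for(self, name: str) -> str:
        if name not in self._tokens:
            self._tokens[name] = "cust_" + secrets.token_hex(8)
        return self._tokens[name]


def prepare_for_analysis(purchases: list[dict], tokenizer: NameTokenizer) -> list[dict]:
    return [
        {
            "customer": tokenizer.token_for(p["customer_name"]),
            "card": mask_card(p["card_number"]),
            "category": p["category"],
            "amount": p["amount"],
        }
        for p in purchases
    ]


# Hypothetical sample data.
purchases = [
    {"customer_name": "Maria Garcia", "card_number": "4111 1111 1111 1234", "category": "books", "amount": 25},
    {"customer_name": "Maria Garcia", "card_number": "4111 1111 1111 1234", "category": "garden", "amount": 40},
    {"customer_name": "John Smith", "card_number": "5500 0000 0000 0004", "category": "books", "amount": 15},
]

tokenizer = NameTokenizer()
prepared = prepare_for_analysis(purchases, tokenizer)

# Step 3: analysts see only tokens and masked cards, yet can still aggregate.
spend_by_category = Counter()
for row in prepared:
    spend_by_category[row["category"]] += row["amount"]
orders_per_customer = Counter(row["customer"] for row in prepared)

print(dict(spend_by_category))               # {'books': 40, 'garden': 40}
print(sorted(orders_per_customer.values()))  # [1, 2]
```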

Security Considerations

Data masking and tokenization, while crucial for data privacy and compliance, introduce new security considerations that must be carefully addressed. Implementing these techniques requires a thorough understanding of potential vulnerabilities and proactive strategies to mitigate risks. A robust security posture encompassing the entire data lifecycle, from initial masking to final disposal, is essential. Thorough risk assessment and mitigation planning are paramount in ensuring the security of masked and tokenized data.

Failure to anticipate and address potential weaknesses can compromise the confidentiality and integrity of sensitive information, leading to severe consequences. A proactive approach that prioritizes data security throughout the entire masking and tokenization process is critical.

Security Implications of Data Masking and Tokenization

Data masking and tokenization, while protecting sensitive data, can introduce new security risks if not properly implemented. Careless implementation can inadvertently expose masked or tokenized data to unauthorized access, compromise the integrity of the underlying data, or create new attack vectors. It is important to note that masking and tokenization are not foolproof security measures and do not replace robust security protocols and controls.

Potential Vulnerabilities and Risks

Several potential vulnerabilities and risks associated with data masking and tokenization need careful consideration. Improperly configured masking rules can allow access to sensitive data. If the masking process is not adequately tested, it might inadvertently reveal patterns or characteristics of the original data. Furthermore, vulnerabilities in the chosen masking or tokenization tools can be exploited. Finally, the tokenization process itself could be a target if the token management system is not secure.
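A concrete example of a misconfiguration that reveals original data is unkeyed hashing of values drawn from a small set. The sketch below, with hypothetical function names, recovers a four-digit PIN from its plain SHA-256 hash by trying all 10,000 possibilities; the same enumeration applies to social security numbers, whose nine digits allow at most a billion candidates.

```python
import hashlib
from typing import Optional


def sha256_hex(value: str) -> str:
    # Plain, unkeyed hash: anyone can compute it for any candidate value.
    return hashlib.sha256(value.encode("utf-8")).hexdigest()


def recover_pin(target_hash: str) -> Optional[str]:
    """Enumerate all 10,000 four-digit PINs and return the one whose hash matches."""
    for n in range(10_000):
        candidate = f"{n:04d}"
        if sha256_hex(candidate) == target_hash:
            return candidate
    return None


leaked_hash = sha256_hex("0427")  # what a misconfigured masking job might publish
print(recover_pin(leaked_hash))   # 0427
```

Testing the masking process against this kind of attack, rather than only checking that outputs "look" masked, catches the weakness before deployment.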

Mitigation Strategies

A comprehensive approach to mitigating risks involves multiple layers of security. Regular security audits of the masking and tokenization processes are crucial. Strong access controls should be implemented to limit access to the masked or tokenized data. Rigorous testing of the masking and tokenization mechanisms is essential to identify and address potential vulnerabilities before deployment. Employing robust encryption for data at rest and in transit further strengthens security.

This includes using strong cryptographic algorithms and adhering to industry best practices.

Data Security Procedures

Robust procedures must be in place to ensure the security of data throughout the masking and tokenization process. These procedures should cover the entire lifecycle, from data collection and preparation to disposal. Comprehensive documentation outlining the masking and tokenization procedures, including details of the chosen techniques and tools, is vital. Regular security awareness training for personnel involved in the masking and tokenization process is crucial.

This ensures they are aware of the security implications and how to avoid common pitfalls. Data should be stored securely in accordance with regulatory requirements and best practices.

Secure Data Disposal

Proper disposal of masked or tokenized data is critical. Data should be securely deleted or overwritten using approved methods so that it cannot be recovered. Adherence to data retention policies and schedules also reduces the risks associated with prolonged data storage. These policies must be applied consistently to masked and tokenized data as well as to the original data.

Performance Considerations

Data masking and tokenization, while crucial for data security, can introduce performance overhead. Understanding and mitigating this impact is vital for maintaining system responsiveness and efficiency. Carefully designed strategies can minimize performance degradation without compromising security. Efficient implementation of data masking and tokenization techniques directly impacts the overall performance of applications and systems. This section examines potential bottlenecks and explores strategies for optimizing performance while preserving the integrity of sensitive data.

Impact of Data Masking Techniques on Performance

Different data masking techniques exhibit varying performance characteristics. Simple masking methods, such as replacing specific characters or values with predefined patterns, typically introduce minimal performance overhead. More complex techniques, like data anonymization or generalization, may result in more substantial performance implications, especially when dealing with large datasets or complex data transformations. The choice of technique should be carefully evaluated based on the specific performance requirements of the system.

Potential Performance Bottlenecks

Several factors can contribute to performance bottlenecks during data masking and tokenization processes. These include the size of the dataset, the complexity of the masking rules, the frequency of masking operations, and the chosen masking technique. The processing of large datasets, particularly those involving complex masking logic, can lead to extended processing times and resource consumption. Furthermore, real-time masking operations, or frequent masking/unmasking, can significantly affect the performance of the system.

Regular monitoring of system performance is crucial to identify and address any bottlenecks.

Strategies for Optimizing Performance

Several strategies can be employed to optimize the performance of data masking and tokenization processes while maintaining data security. These include utilizing optimized algorithms, leveraging parallel processing, and employing efficient data structures. For instance, using optimized algorithms for data transformations can dramatically reduce processing time. Leveraging parallel processing capabilities can significantly accelerate masking tasks, particularly for large datasets.

Employing appropriate data structures can enhance data access and manipulation, thus improving performance. Careful planning and implementation of these strategies can ensure the system remains responsive and efficient.

Minimizing Performance Impact During Masking/Tokenization Operations

Minimizing performance impact during masking/tokenization operations involves careful planning and strategic implementation. Implementing the masking techniques as part of a scheduled process, rather than in real-time, can reduce the impact on ongoing operations. Batch processing can further optimize performance by handling larger datasets in manageable chunks. Caching frequently accessed data can reduce the number of database queries or file I/O operations, which can improve overall performance.

Moreover, choosing appropriate masking tools with optimized masking engines and sufficient hardware resources is crucial to minimize processing time.

Performance Measurement and Monitoring

Rigorous performance measurement and monitoring are essential to identify and address any performance issues. Monitoring tools and metrics should be implemented to track the time taken for masking operations and identify potential bottlenecks. Key performance indicators (KPIs) such as average masking time, maximum masking time, and the number of masking operations per second can provide valuable insights. Regular performance analysis allows for proactive identification and resolution of performance issues, ensuring the system remains efficient and secure.
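The three KPIs named above can be collected with a small wrapper around each masking call. `MaskingMetrics` and `timed` are hypothetical names for this sketch, and operations per second is computed from time spent inside masking calls only, ignoring time spent elsewhere in the pipeline.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, TypeVar

T = TypeVar("T")


@dataclass
class MaskingMetrics:
    durations: list[float] = field(default_factory=list)  # seconds per masking call

    def record(self, seconds: float) -> None:
        self.durations.append(seconds)

    @property
    def average(self) -> float:
        return sum(self.durations) / len(self.durations) if self.durations else 0.0

    @property
    def maximum(self) -> float:
        return max(self.durations, default=0.0)

    @property
    def ops_per_second(self) -> float:
        # Throughput of the masking calls themselves.
        total = sum(self.durations)
        return len(self.durations) / total if total > 0 else 0.0


def timed(metrics: MaskingMetrics, mask: Callable[[str], T], value: str) -> T:
    # Record the duration even if the masking function raises.
    start = time.perf_counter()
    try:
        return mask(value)
    finally:
        metrics.record(time.perf_counter() - start)
```

In practice these values would be exported to whatever monitoring system is already in place, so that a rising maximum or falling throughput shows up before it affects users.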

Data Governance and Compliance

Data masking and tokenization are crucial for protecting sensitive data, but implementing them effectively requires a robust data governance framework. This framework ensures compliance with relevant regulations and maintains data security throughout the lifecycle of the data. A well-defined policy for data masking and tokenization is essential for organizations to manage the risk associated with sensitive data.

Data governance plays a vital role in establishing clear guidelines, procedures, and controls for data masking and tokenization.

This includes defining data classification policies, identifying sensitive data, and establishing the appropriate masking or tokenization methods. This approach ensures that sensitive data is treated with the appropriate level of protection.

Role of Data Governance in Data Masking and Tokenization

Data governance provides a structured approach to managing sensitive data throughout its lifecycle, including masking and tokenization. It covers policies, procedures, and standards for data classification, access control, and data quality. Effective governance ensures that masking and tokenization are applied consistently and appropriately, which reduces risk and supports compliance. It also assigns clear roles and responsibilities to data owners, data stewards, and security personnel.

Compliance with Regulations (e.g., GDPR, HIPAA)

Adherence to regulations such as GDPR and HIPAA is critical when implementing data masking and tokenization. Organizations must identify sensitive data elements and apply masking or tokenization techniques that meet these regulations' requirements for data handling. For example, GDPR gives individuals the right to access, rectify, and erase their personal data.

This necessitates careful consideration when implementing masking and tokenization to ensure these rights can be upheld. The level of masking or tokenization must be proportionate to the sensitivity of the data.
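One way a tokenization design can keep access, rectification, and erasure workable is to route them through the token vault, since downstream copies hold only the token. The `SubjectVault` class below is a hypothetical in-memory sketch of that idea, not a statement of what GDPR requires; a real vault would also need authentication, auditing, and durable storage.

```python
import secrets
from typing import Optional


class SubjectVault:
    """Hypothetical token vault that maps tokens back to original values."""

    def __init__(self) -> None:
        self._store: dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        token = "tok_" + secrets.token_hex(8)
        self._store[token] = value
        return token

    def access(self, token: str) -> Optional[str]:
        # Access request: an authorized process resolves the token to the original.
        return self._store.get(token)

    def rectify(self, token: str, corrected: str) -> None:
        # Rectification: correct the value once; every copy holding the token sees it.
        if token not in self._store:
            raise KeyError(token)
        self._store[token] = corrected

    def erase(self, token: str) -> None:
        # Erasure: drop the mapping so the token no longer resolves anywhere.
        self._store.pop(token, None)
```

Static masking offers no equivalent path: once a value is masked, the original no longer exists in the masked copy, so such requests must be served from the source system.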

Data Masking and Tokenization Policies

A well-defined policy is crucial for ensuring consistent and secure implementation of data masking and tokenization. This policy should include guidelines for data classification, identification of sensitive data, selection of appropriate masking/tokenization techniques, and procedures for ongoing monitoring and evaluation. Furthermore, policies should detail the handling of exceptions and ensure compliance with regulatory requirements.

- Data Classification Policy: This policy defines how data is categorized based on sensitivity, risk, and legal requirements. It guides the selection of appropriate masking/tokenization techniques for each category.

- Data Inventory: A comprehensive inventory of all sensitive data assets is essential to ensure all relevant data is masked or tokenized.

- Access Control: Policies must clearly define who has access to masked or tokenized data and under what circumstances. Access should be strictly limited to authorized personnel.

- Auditing and Monitoring: Regular audits and monitoring are essential to ensure the effectiveness of data masking/tokenization policies and to detect any deviations or vulnerabilities. This includes tracking the use and impact of the policies and making necessary revisions to maintain their effectiveness.
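The classification policy and data inventory above can be expressed as data that tooling checks automatically. In this sketch the sensitivity levels, the level-to-technique mapping, and the inventory entries are all hypothetical examples; `unclassified_columns` is the kind of audit check that flags fields missing from the inventory.

```python
from enum import Enum


class Sensitivity(Enum):
    PUBLIC = "public"
    INTERNAL = "internal"
    CONFIDENTIAL = "confidential"
    RESTRICTED = "restricted"


# Hypothetical classification policy: technique required at each level.
POLICY = {
    Sensitivity.PUBLIC: "none",
    Sensitivity.INTERNAL: "none",
    Sensitivity.CONFIDENTIAL: "mask",
    Sensitivity.RESTRICTED: "tokenize",
}

# Hypothetical data inventory: (table, column) -> classification.
INVENTORY = {
    ("customers", "name"): Sensitivity.CONFIDENTIAL,
    ("customers", "ssn"): Sensitivity.RESTRICTED,
    ("customers", "country"): Sensitivity.PUBLIC,
}


def required_technique(table: str, column: str) -> str:
    level = INVENTORY.get((table, column))
    if level is None:
        raise LookupError(f"{table}.{column} is not in the data inventory")
    return POLICY[level]


def unclassified_columns(schema: dict[str, list[str]]) -> list[str]:
    # Audit check: columns that exist in the schema but have no classification.
    return [
        f"{table}.{column}"
        for table, columns in schema.items()
        for column in columns
        if (table, column) not in INVENTORY
    ]
```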

Summary of Key Aspects of Data Governance and Compliance

Data governance and compliance are fundamental to the successful implementation of data masking and tokenization. These aspects require a holistic approach encompassing data classification, access control, policy definition, and ongoing monitoring. By ensuring adherence to regulations like GDPR and HIPAA, organizations can protect sensitive data, maintain business continuity, and avoid potential legal or reputational risks. The core elements include establishing clear policies, conducting regular audits, and maintaining a well-defined framework for managing sensitive data.

Epilogue

In conclusion, data masking and tokenization are powerful tools for enhancing data security. By understanding the diverse techniques, implementation strategies, and security considerations, organizations can effectively protect sensitive data while maintaining data utility. Careful consideration of performance, governance, and compliance requirements is paramount in ensuring successful implementation.

Clarifying Questions

What are some common use cases for data masking and tokenization?

Data masking and tokenization are used in various scenarios, including testing and development environments, data warehousing, reporting, and regulatory compliance. They also limit the damage of a data breach, because masked or tokenized copies do not expose the original values, and they reduce the amount of sensitive data that security audits must cover.

What are the key differences between data masking and tokenization?

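Data masking alters the data itself, for example by replacing characters with a fixed pattern or substituting realistic fake values, and is usually irreversible: the original cannot be recovered from the masked copy. Tokenization replaces sensitive values with non-sensitive, unique identifiers (tokens) and typically keeps the originals in a secured token vault, so authorized systems can detokenize them when needed. Tokenization therefore suits cases where the original value must remain retrievable, while masking suits cases where it never needs to be, such as test and development data. Masking may also perform better in some cases, because it requires no vault lookups.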
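The practical difference is reversibility, which a short sketch makes concrete. `mask_card` discards all but the last four digits, while the hypothetical `Tokenizer` swaps the card number for a random token of the same length and keeps the original in a vault so that authorized code can detokenize it. A production vault would be a separate, access-controlled service rather than a dictionary.

```python
import secrets


def mask_card(pan: str) -> str:
    # Masking: everything except the last four digits is discarded for good.
    return "*" * (len(pan) - 4) + pan[-4:]


class Tokenizer:
    """Hypothetical vault-based tokenizer (a dict stands in for the vault)."""

    def __init__(self) -> None:
        self._vault: dict[str, str] = {}

    def tokenize(self, pan: str) -> str:
        # Random digits of the same length, so downstream format checks still pass.
        while True:
            token = "".join(secrets.choice("0123456789") for _ in range(len(pan)))
            if token != pan and token not in self._vault:
                self._vault[token] = pan
                return token

    def detokenize(self, token: str) -> str:
        # Only code with vault access can recover the original value.
        return self._vault[token]
```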

What are some common security considerations when implementing these methods?

Security considerations include selecting appropriate masking or tokenization techniques, enforcing robust access controls (especially on detokenization), protecting the token vault and any masking or tokenization keys, and monitoring continuously for vulnerabilities.

How can I ensure data remains secure throughout the masking/tokenization process?

This requires strong data governance policies, regular security audits, and the use of tools that support data masking and tokenization best practices. Strict adherence to regulatory compliance requirements (e.g., GDPR, HIPAA) is also crucial.