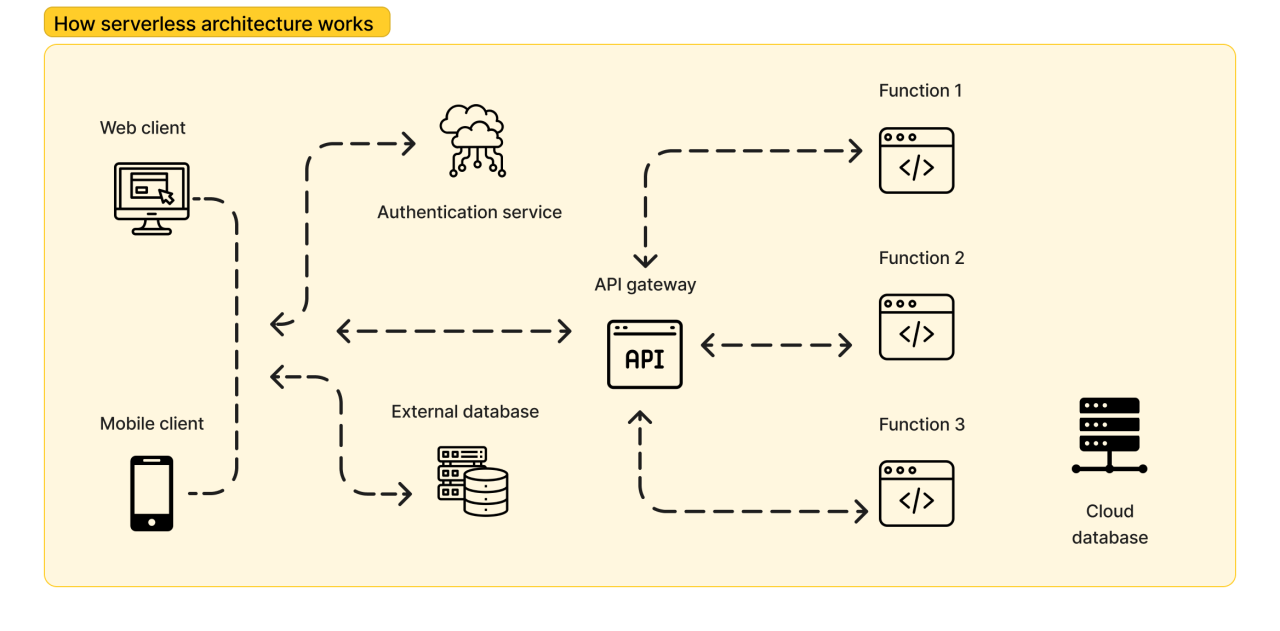

Serverless architectures promise scalability, cost-efficiency, and rapid deployment, but they also complicate testing. Integration testing becomes crucial for verifying the interactions between disparate serverless components, particularly because services are distributed and ephemeral. This article examines integration testing within the serverless paradigm: why it matters, what makes it difficult, and which practices help.

Integration testing in serverless environments differs significantly from traditional testing methodologies. Unlike unit tests, which focus on individual components, integration tests validate the collaboration of multiple serverless functions, API gateways, databases, and event buses. They check that data flows correctly between components and that each function responds as expected under varying conditions, which increases confidence in the reliability and performance of the application as a whole.

Defining Integration Testing in Serverless Architectures

Integration testing is crucial in serverless architectures to ensure that individual components, often deployed and managed independently, function correctly when interacting with each other. This testing phase verifies the interfaces and data flow between these components, identifying integration issues early in the development lifecycle. The distributed nature of serverless applications necessitates robust integration testing strategies to maintain overall system reliability and functionality.

Core Concept of Integration Testing in Serverless Applications

The core concept of integration testing in serverless applications centers on verifying the interactions between different serverless functions, services, and external resources. These components are typically developed, deployed, and scaled independently. Integration testing aims to validate that these independent units work cohesively as a system. It focuses on the data exchange, event handling, and overall coordination between components. The success of integration testing hinges on simulating real-world scenarios where these components interact, identifying any discrepancies or failures in their interactions.

Definition of Integration Testing

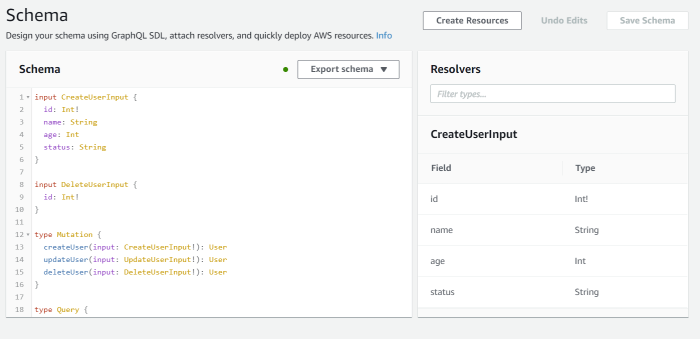

Integration testing is a type of software testing that verifies the interaction between different modules or components of a software system. In the context of serverless architectures, it focuses on testing the interactions between different serverless functions (e.g., AWS Lambda functions, Azure Functions, Google Cloud Functions), APIs (e.g., API Gateway), databases (e.g., DynamoDB, Azure Cosmos DB, Cloud SQL), message queues (e.g., SQS, Azure Service Bus, Google Cloud Pub/Sub), and other external services.

It aims to ensure that these components work together as expected, exchanging data correctly and handling events appropriately.

Differences from Unit Testing and End-to-End Testing

Integration testing plays a distinct role in the testing spectrum, differing significantly from both unit testing and end-to-end testing in a serverless environment.

- Unit Testing: Unit testing focuses on verifying the smallest testable parts of an application, typically individual functions or methods within a single serverless function. The objective is to isolate and test each unit independently, ensuring it performs its intended function correctly. Unit tests do not involve interactions with other components. They use mocked dependencies to isolate the unit under test.

- End-to-End Testing: End-to-end (E2E) testing validates the complete workflow of an application from start to finish, simulating user interactions with the entire system. E2E tests typically involve testing through the user interface, interacting with all the components of the system. This type of testing is valuable for verifying the overall user experience and functionality but can be more time-consuming and complex to set up and maintain. It is less granular than integration testing, which focuses on the interactions between specific components.

- Integration Testing: Integration testing, positioned between unit and end-to-end testing, validates the interactions between different serverless components. It verifies that these components work together as designed, focusing on data flow, event handling, and interface compatibility. Integration tests typically involve deploying and invoking multiple serverless functions and other services. They use real or simulated dependencies to test the interactions between these components. This provides a balance between detailed testing and overall system verification.

For instance, consider an e-commerce serverless application. A unit test might verify the functionality of a single Lambda function responsible for validating a credit card. An end-to-end test might simulate a user placing an order, interacting with the entire system from the user interface to the database. Integration tests, in this case, would focus on the interaction between the Lambda function that validates the credit card and the Lambda function that processes the order, ensuring that the payment information is correctly passed and the order is processed accordingly.

The credit card validation function might use a third-party payment gateway. The integration test would verify that the communication with the payment gateway is correctly implemented and the payment processing function handles the response.
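The e-commerce scenario above can be sketched in Python. This is a minimal illustration, not a real implementation: `FakePaymentGateway`, `validate_card_handler`, `process_order_handler`, and `run_checkout` are hypothetical names, and the gateway is an in-memory stand-in rather than a real third-party service. The point is that the test exercises the hand-off between the two functions, not either function alone.

```python
from dataclasses import dataclass, field


@dataclass
class FakePaymentGateway:
    """Hypothetical stand-in for a third-party payment gateway."""
    approved_cards: set = field(default_factory=lambda: {"4111111111111111"})

    def authorize(self, card_number: str, amount: float) -> dict:
        return {"authorized": card_number in self.approved_cards, "amount": amount}


def validate_card_handler(event: dict, gateway: FakePaymentGateway) -> dict:
    """First function: validates the card through the gateway."""
    result = gateway.authorize(event["card_number"], event["amount"])
    return {"order_id": event["order_id"], "payment_ok": result["authorized"]}


def process_order_handler(event: dict, orders: dict) -> dict:
    """Second function: consumes the validation output and records the order."""
    status = "CONFIRMED" if event["payment_ok"] else "REJECTED"
    orders[event["order_id"]] = status
    return {"order_id": event["order_id"], "status": status}


def run_checkout(order: dict, gateway: FakePaymentGateway, orders: dict) -> dict:
    """Integration path: the output of the first handler feeds the second."""
    return process_order_handler(validate_card_handler(order, gateway), orders)
```

An integration test would call `run_checkout` with an approved and a declined card and assert on both the returned status and the contents of `orders`.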

Key Serverless Components for Integration Testing

Integration testing in serverless architectures necessitates a thorough understanding of the components that comprise these systems. These components, working in concert, handle various aspects of application functionality, from user interaction to data persistence and event processing. Effective integration testing focuses on validating the interactions between these components, ensuring they function as expected within the broader system context.

API Gateways and Their Role

API Gateways serve as the entry points for client requests in serverless architectures. They manage routing, authentication, authorization, and rate limiting, acting as a crucial intermediary between clients and the backend services.

API Gateways manage the lifecycle of API calls.

- Request Routing: API Gateways direct incoming requests to the appropriate backend services, such as Lambda functions. This routing is typically based on the request’s URL path, HTTP method, and other criteria.

- Authentication and Authorization: They handle the authentication of users and authorize access to specific API endpoints. This often involves validating API keys, JWT tokens, or other security mechanisms.

- Request Transformation: API Gateways can transform incoming requests before forwarding them to the backend. This may involve modifying headers, payloads, or other request parameters.

- Response Transformation: Similarly, they can transform responses from backend services before returning them to the client. This could involve adding headers, modifying payloads, or formatting the response.

- Rate Limiting: API Gateways can enforce rate limits to protect backend services from being overwhelmed by excessive traffic. This helps to ensure the availability and performance of the system.
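To make the request-routing role concrete, the following sketch builds a trimmed-down event shaped like an API Gateway Lambda proxy request and passes it to a handler. The helper `make_proxy_event` and the `/orders` route are assumptions made for illustration; a real proxy event carries many more fields than the four shown here.

```python
import json
from typing import Optional


def make_proxy_event(method: str, path: str, body: Optional[dict] = None) -> dict:
    """Build a simplified event shaped like an API Gateway proxy request."""
    return {
        "httpMethod": method,
        "path": path,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(body) if body is not None else None,
    }


def handler(event: dict, context: object) -> dict:
    """Hypothetical Lambda handler that routes on HTTP method and path."""
    if event["httpMethod"] == "POST" and event["path"] == "/orders":
        payload = json.loads(event["body"] or "{}")
        return {"statusCode": 201, "body": json.dumps({"id": payload.get("id")})}
    return {"statusCode": 404, "body": json.dumps({"message": "Not found"})}
```

A test can then assert on the status code and body that the handler returns for each route, without deploying a gateway.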

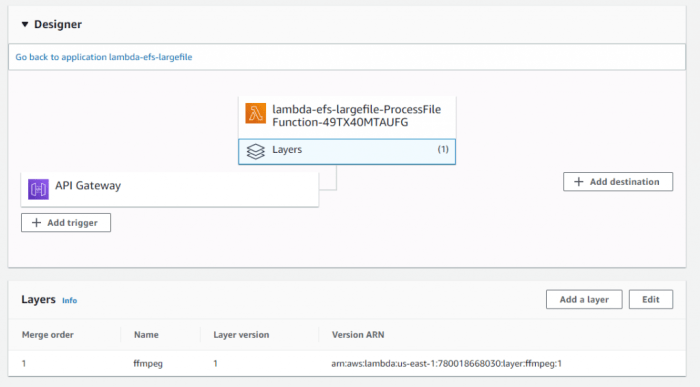

Lambda Functions in Integration Tests

Lambda functions are the core compute units in serverless architectures. They execute code in response to triggers, such as API Gateway requests, database updates, or events from event buses.

Lambda functions represent the computational logic in serverless applications.

- Business Logic Execution: Lambda functions encapsulate the application’s business logic, performing tasks such as data processing, calculations, and interacting with other services.

- Event Handling: They respond to events from various sources, including API Gateway, SQS queues, SNS topics, and database changes.

- Scalability and Concurrency: Lambda functions automatically scale to handle the load, enabling the system to accommodate a large number of concurrent requests.

- Statelessness: Lambda functions are designed to be stateless, meaning they do not maintain any persistent state between invocations. Any state needed for processing a request must be retrieved from external services, such as databases.
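The statelessness point can be illustrated with a small sketch. `InMemoryStore` is a hypothetical stand-in for an external key-value store, and `count_visit_handler` is an invented handler; the only claim is that all state lives outside the function and is read and written on every invocation.

```python
class InMemoryStore:
    """Hypothetical stand-in for an external key-value store (e.g., a database table)."""

    def __init__(self):
        self._items = {}

    def get(self, key, default=0):
        return self._items.get(key, default)

    def put(self, key, value):
        self._items[key] = value


def count_visit_handler(event: dict, store: InMemoryStore) -> dict:
    """Stateless handler: reads the counter, increments it, and writes it back."""
    user = event["user_id"]
    visits = store.get(user) + 1
    store.put(user, visits)
    return {"user_id": user, "visits": visits}
```

Because the handler keeps nothing between calls, two invocations that share the same store see a consistent counter, which is exactly what an integration test against the real store would verify.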

Databases and Data Persistence

Databases are critical for storing and retrieving data in serverless architectures. They provide the persistent storage required by Lambda functions and other components. Common choices include NoSQL databases such as DynamoDB (key-value and document) and MongoDB (document).

Databases store and manage data, ensuring data consistency and availability.

- Data Storage: Databases store the application’s data, providing a persistent and reliable storage mechanism.

- Data Retrieval: Lambda functions and other services interact with databases to retrieve data needed for processing requests.

- Data Consistency: Databases ensure data consistency through mechanisms like transactions and data validation.

- Scalability: Serverless databases are designed to scale automatically to accommodate increasing data volumes and request loads.

Event Buses: SQS and SNS

Messaging services such as Amazon Simple Queue Service (SQS) and Amazon Simple Notification Service (SNS), referred to here as event buses, facilitate asynchronous communication between serverless components. They decouple components, allowing them to communicate without direct dependencies.

Event buses enable asynchronous communication and event-driven architectures.

- Asynchronous Communication: SQS and SNS allow components to communicate asynchronously, decoupling the sender and receiver.

- Event Distribution: SNS distributes events to multiple subscribers, such as Lambda functions or other services.

- Message Queuing: SQS provides a message queue for handling asynchronous tasks, ensuring that messages are processed even if the receiving component is temporarily unavailable.

- Decoupling: Event buses decouple components, allowing them to evolve independently without affecting each other.
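The difference between queuing and fan-out distribution can be sketched with two in-memory classes. `FakeQueue` and `FakeTopic` are hypothetical test doubles that only mimic the general behavior described above; they do not reproduce the SQS or SNS APIs.

```python
from collections import deque


class FakeQueue:
    """Hypothetical in-memory queue: messages wait until a consumer polls."""

    def __init__(self):
        self._messages = deque()

    def send(self, message):
        self._messages.append(message)

    def receive(self):
        # Return the oldest message, or None when the queue is empty.
        return self._messages.popleft() if self._messages else None


class FakeTopic:
    """Hypothetical in-memory topic: every subscriber receives each message."""

    def __init__(self):
        self._subscribers = []

    def subscribe(self, callback):
        self._subscribers.append(callback)

    def publish(self, message):
        for callback in self._subscribers:
            callback(message)
```

Subscribing `FakeQueue.send` to a `FakeTopic` models the common pattern of a topic fanning out into a queue, so a test can publish once and then check what each consumer received.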

Benefits of Integration Testing for Serverless

Implementing robust integration testing in serverless architectures offers significant advantages, contributing to improved application quality, reduced operational costs, and accelerated development cycles. These benefits stem from the nature of serverless applications, which are inherently distributed and reliant on the seamless interaction of various cloud services. Effective integration testing ensures that these services work together as intended, preventing common issues and fostering a more reliable and maintainable system.

Enhanced Application Reliability

Integration tests are crucial for ensuring the reliability of serverless applications. By verifying the interactions between different serverless components and external services, integration tests proactively identify potential issues that might arise from misconfigurations, version incompatibilities, or unexpected service behaviors.

- Early Detection of Errors: Integration tests uncover integration issues early in the development lifecycle, before they impact production environments. This early detection significantly reduces the cost and effort required to fix defects, compared to discovering them during user acceptance testing or in production. For example, if a Lambda function fails to correctly process data from an API Gateway, an integration test would catch this, preventing data corruption or service outages.

- Reduced Production Incidents: Thorough integration testing minimizes the likelihood of production incidents caused by integration failures. By simulating real-world scenarios, integration tests validate the application’s ability to handle various load conditions, error scenarios, and data volumes. This reduces the probability of unexpected behavior and service disruptions in the live environment.

- Improved Error Handling and Resilience: Integration tests can validate the effectiveness of error handling mechanisms and resilience strategies. This includes verifying the correct behavior of retry logic, circuit breakers, and other techniques designed to mitigate the impact of service failures. Tests can simulate service unavailability to ensure the application gracefully degrades and recovers.
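The resilience point above can be made concrete with a sketch that simulates a temporarily unavailable service and checks the retry logic around it. `FlakyService` and `call_with_retry` are hypothetical names; the exponential backoff schedule and the injected `sleep` function are assumptions chosen so that a test runs without real delays.

```python
import time


class FlakyService:
    """Hypothetical dependency that fails a fixed number of times, then succeeds."""

    def __init__(self, failures_before_success: int):
        self.remaining_failures = failures_before_success
        self.calls = 0

    def fetch(self) -> dict:
        self.calls += 1
        if self.remaining_failures > 0:
            self.remaining_failures -= 1
            raise ConnectionError("service unavailable")
        return {"status": "ok"}


def call_with_retry(operation, max_attempts=3, base_delay=0.0, sleep=time.sleep):
    """Retry on ConnectionError with exponential backoff; re-raise after the last attempt."""
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except ConnectionError:
            if attempt == max_attempts:
                raise
            sleep(base_delay * 2 ** (attempt - 1))
```

A test would assert both outcomes: recovery when the service comes back within the attempt budget, and a propagated error (with the expected call count) when it does not.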

Improved Maintainability and Reduced Development Costs

Integration testing contributes to improved maintainability by making the application easier to understand, modify, and extend. It also helps reduce development costs by streamlining the development process and minimizing the need for expensive rework.

- Simplified Code Changes: Integration tests provide a safety net when making code changes or introducing new features. They confirm that modifications do not break existing functionality or introduce new integration issues. This reduces the risk of regressions and simplifies the refactoring process.

- Faster Debugging: When integration issues do arise, integration tests facilitate faster debugging by isolating the source of the problem. Test failures provide valuable clues about where the integration broke down, enabling developers to quickly pinpoint the root cause and implement a fix.

- Automated Testing and Continuous Integration: Integration tests are typically automated and integrated into a continuous integration (CI) and continuous delivery (CD) pipeline. This automation ensures that tests are executed frequently, providing rapid feedback on code changes and enabling faster release cycles. The CI/CD pipeline automatically runs integration tests after each code commit.

Impact of Integration Testing on the Development Lifecycle

The following table illustrates the benefits of integration testing and the corresponding impacts on the development lifecycle:

| Benefit | Impact on Development | Example | Measurement |
|---|---|---|---|
| Enhanced Reliability | Reduced production incidents and service outages. | A serverless application that processes financial transactions, where integration tests prevent data corruption or lost transactions due to integration issues between Lambda functions and a database. | Reduction in the number of production incidents, measured as incidents per month. |
| Improved Maintainability | Easier code changes, reduced regressions, and faster debugging. | A serverless application that evolves over time, with new features added regularly. Integration tests ensure that new features do not break existing functionality and that existing features continue to work as expected. | Time spent on debugging and fixing integration-related issues, measured in developer hours per release. |
| Reduced Development Costs | Faster release cycles, lower rework costs, and improved developer productivity. | A team using integration tests to automate the testing of a complex serverless application. The automated tests provide rapid feedback on code changes, allowing developers to fix bugs quickly and release new features faster. | Number of releases per quarter and the cost of rework (fixing bugs found after deployment), measured in dollars. |
| Improved Error Handling and Resilience | Ensure graceful degradation and rapid recovery from service failures. | Testing the integration of a Lambda function with an external API. Integration tests simulate API unavailability and ensure the Lambda function implements retry logic and circuit breakers to handle the failure gracefully, preventing cascading failures. | Time to recover from a service failure, measured in minutes. |

Challenges in Integration Testing Serverless

Integration testing in serverless architectures presents a unique set of challenges that developers must navigate. The distributed nature of serverless applications, coupled with the inherent characteristics of the cloud environment, complicates testing procedures. Understanding these challenges is crucial for implementing effective and reliable integration tests.

Dependency Management Complexities

Managing dependencies in serverless integration testing involves several intricate considerations. Serverless functions often rely on external services, APIs, and libraries. Properly configuring and managing these dependencies is vital for ensuring the test environment accurately reflects the production environment.

- Versioning Conflicts: Different functions within a serverless application may depend on different versions of the same library. This can lead to conflicts during testing if the test environment isn’t carefully configured to handle these variations. For example, Function A might depend on version 1.0 of a library, while Function B relies on version 2.0. Running integration tests without proper version isolation could result in unexpected behavior or test failures.

- External Service Availability: Integration tests frequently interact with external services like databases, message queues, and third-party APIs. Ensuring the availability and reliability of these external dependencies during testing is paramount. Network outages, service disruptions, or rate limiting can all impact test results, making it difficult to determine whether a failure is due to a code issue or an external service problem.

- Dependency Injection Challenges: Injecting dependencies into serverless functions can be more complex than in traditional applications. Serverless platforms often have limitations on how dependencies can be passed to functions. This can make it difficult to mock or stub dependencies for testing purposes. For example, using a dependency injection framework within a serverless function can be challenging due to cold start times and resource constraints.

Mocking and Stubbing Difficulties

Effectively mocking and stubbing dependencies is essential for isolating serverless functions and controlling their behavior during integration tests. However, this process can be more challenging in serverless environments due to the distributed nature of the architecture and the reliance on external services.

- Complex Service Interactions: Serverless functions often interact with numerous other services, such as databases, object storage, and event buses. Mocking all these interactions can be a significant undertaking, requiring detailed knowledge of each service’s API and behavior. For example, mocking an AWS Lambda function that interacts with an S3 bucket, a DynamoDB table, and an SNS topic involves creating stubs for all three services.

- Behavioral Variations: External services may exhibit different behaviors in different environments. For instance, a database might return different data in a test environment compared to a production environment. Mocking these variations accurately can be challenging. Consider a scenario where a test function expects a specific data format from a database. If the mocked database returns data in a different format, the test will fail, even if the function itself is correct.

- Integration with Mocking Frameworks: Integrating mocking frameworks with serverless platforms can sometimes be problematic. Some frameworks may not be fully compatible with the execution environment of serverless functions. Additionally, the distributed nature of serverless applications may require the use of more sophisticated mocking strategies, such as service virtualization, to accurately simulate complex interactions.

Environment Setup Complications

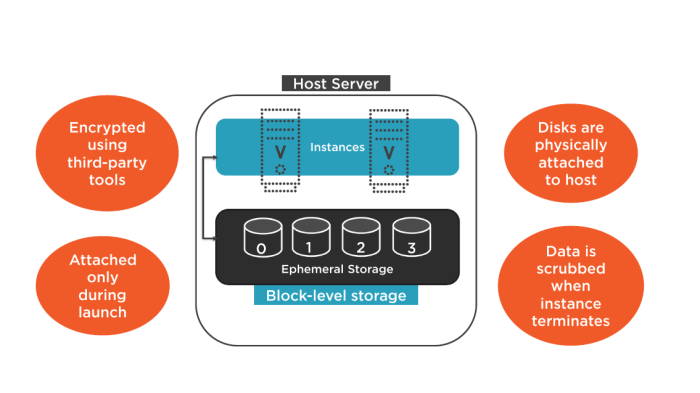

Setting up and managing test environments for serverless applications can be complex. The ephemeral nature of serverless resources and the reliance on cloud-specific configurations add to the complexity.

- Infrastructure as Code (IaC) Requirement: Automating the creation and teardown of test environments is crucial for serverless integration testing. This often involves using Infrastructure as Code (IaC) tools like Terraform or AWS CloudFormation. Setting up and managing the IaC configuration itself can be a complex process, requiring expertise in the specific tool and the underlying cloud platform.

- Ephemeral Resources: Serverless resources, such as functions and databases, are often created and destroyed on demand. This ephemeral nature means that test environments must be quickly provisioned and deprovisioned to avoid resource conflicts and ensure test isolation. For instance, an integration test might create a temporary database to test a function that writes data to a database. After the test completes, the database must be automatically deleted to avoid cluttering the test environment.

- Configuration Drift: Maintaining consistent configurations across different environments (development, staging, production) is essential for accurate testing. Configuration drift, where the configuration of a test environment deviates from the production environment, can lead to misleading test results. This can be mitigated by using IaC to ensure that the test environment is a faithful replica of the production environment.

- Cost Management: Running integration tests in the cloud can incur costs, especially if tests are run frequently or involve resource-intensive operations. Optimizing test configurations and resource usage is important to control costs. For example, using smaller instance sizes for test databases or implementing strategies to reuse test resources can help reduce costs.
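The ephemeral-resource pattern described above (create a temporary database, run the test, delete it) can be sketched with a context manager. A local SQLite file is used here purely as a stand-in for a cloud database, and `temporary_database` and `save_order` are hypothetical names; in a real setup the provisioning and teardown would typically be delegated to an IaC tool.

```python
import os
import sqlite3
import tempfile
from contextlib import contextmanager


@contextmanager
def temporary_database():
    """Create a throwaway SQLite file, yield a connection, then delete the file."""
    fd, path = tempfile.mkstemp(suffix=".db")
    os.close(fd)
    conn = sqlite3.connect(path)
    try:
        conn.execute("CREATE TABLE orders (id TEXT PRIMARY KEY, status TEXT)")
        yield conn, path
    finally:
        # Teardown runs even if the test body raises, so nothing is left behind.
        conn.close()
        os.remove(path)


def save_order(conn, order_id: str, status: str) -> None:
    """Hypothetical function under test that writes to the database."""
    conn.execute("INSERT INTO orders VALUES (?, ?)", (order_id, status))
    conn.commit()
```

Putting teardown in the `finally` clause is the design choice that matters: a failed assertion should not leave resources behind to interfere with the next run or accumulate cost.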

Testing Strategies for Serverless Integration

Effective integration testing is crucial for ensuring the reliable operation of serverless applications. Serverless architectures, with their distributed nature and reliance on third-party services, necessitate a strategic approach to testing. Choosing the right testing strategy depends on factors such as the complexity of the application, the level of risk tolerance, and the available resources. Several strategies can be employed, each with its own strengths and weaknesses.

Contract Testing

Contract testing focuses on verifying the agreements, or “contracts,” between different components of a system. In a serverless environment, these components often include functions, APIs, and external services. This strategy ensures that the data exchanged between these components adheres to predefined specifications.

Contract testing offers several advantages and disadvantages:

- Pros:

  - Early Detection of Integration Issues: Contract tests can identify integration problems early in the development cycle, before components are fully integrated. This helps prevent costly rework.

  - Decoupled Testing: Allows for testing components in isolation, as long as the contract is satisfied. This makes testing faster and more efficient.

  - Reduced Test Complexity: By focusing on contracts, the tests are less complex than end-to-end tests, reducing the overall testing effort.

  - Improved Collaboration: Contracts serve as a shared understanding between teams developing different components. This facilitates collaboration and reduces misunderstandings.

- Cons:

  - Contract Maintenance: Contracts need to be maintained and updated as the system evolves. This can be time-consuming and require careful attention to detail.

  - Limited Scope: Contract tests only verify the data exchange. They don’t test the business logic or the behavior of individual components in detail.

  - Potential for False Positives/Negatives: If the contract is not defined correctly or if the testing implementation is flawed, contract tests can generate false positives or negatives, leading to inaccurate results.
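A contract check can be as small as comparing a payload against an agreed set of fields and types. The sketch below is an illustration under simplifying assumptions: `ORDER_CREATED_CONTRACT` and `contract_violations` are hypothetical, and the contract is a plain mapping of field names to Python types rather than a schema language or a dedicated contract-testing tool.

```python
# Hypothetical contract: the fields and types both sides have agreed on.
ORDER_CREATED_CONTRACT = {"order_id": str, "amount": float, "currency": str}


def contract_violations(payload: dict, contract: dict) -> list:
    """Return a list of human-readable problems; an empty list means the payload conforms."""
    problems = []
    for field_name, expected_type in contract.items():
        if field_name not in payload:
            problems.append(f"missing field: {field_name}")
        elif not isinstance(payload[field_name], expected_type):
            problems.append(
                f"wrong type for {field_name}: expected {expected_type.__name__}"
            )
    return problems
```

Note that this only verifies the shape of the exchanged data, which matches the limited scope listed among the cons: it says nothing about whether the business logic behind the payload is correct.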

Consumer-Driven Contracts

Consumer-driven contracts (CDCs) take a consumer-first approach to contract testing. The consumer, or the component that uses a service, defines the contract based on its needs. This ensures that the service provides the data and functionality required by the consumer.

The pros and cons of consumer-driven contracts are:

- Pros:

  - Consumer-Centric: Ensures that the service meets the specific needs of its consumers, improving the overall user experience.

  - Reduced Risk of Breaking Changes: Changes to the service are less likely to break the consumers, as the contract is driven by the consumer’s requirements.

  - Increased Confidence: Provides a high level of confidence that the service will function correctly with its consumers.

- Cons:

  - Complexity: Requires a deeper understanding of the consumer’s needs and how it interacts with the service.

  - Increased Testing Effort: May require more tests, as each consumer’s needs must be considered.

  - Potential for Over-Specification: Consumers may over-specify their needs, leading to unnecessary complexity in the service.
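The consumer-first idea can be sketched as follows: each consumer declares only the fields it relies on, and the provider's response is verified against every declaration. The service names, `CONSUMER_EXPECTATIONS`, `get_order_response`, and `unmet_expectations` are all hypothetical, and real CDC tooling records and replays far richer interactions than a set of field names.

```python
# Hypothetical expectations, each written by the consuming team.
CONSUMER_EXPECTATIONS = {
    "billing-service": {"order_id", "amount"},
    "shipping-service": {"order_id", "address"},
}


def get_order_response() -> dict:
    """Hypothetical provider response under verification."""
    return {"order_id": "o-1", "amount": 10.0, "address": "1 Main St", "notes": ""}


def unmet_expectations(response: dict, expectations: dict) -> dict:
    """Return, per consumer, the fields the provider failed to supply."""
    unmet = {}
    for consumer, fields in expectations.items():
        missing = fields - set(response)
        if missing:
            unmet[consumer] = missing
    return unmet
```

The provider is free to add fields such as `notes` without breaking anyone, but removing `address` would be flagged for the one consumer that depends on it.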

Component Testing

Component testing involves testing individual components of a serverless application in isolation. This approach focuses on verifying the functionality of each component and the interface it exposes to other components, with those neighboring components typically replaced by mocks or stubs.

Here’s a look at the pros and cons of component testing:

- Pros:

  - Focused Testing: Allows for testing specific components in isolation, making it easier to identify and fix bugs.

  - Faster Execution: Component tests are typically faster to execute than end-to-end tests, which reduces the overall testing time.

  - Improved Debugging: Makes debugging easier, as the scope of the test is limited to a single component.

  - Reduced Dependencies: Allows for testing components without needing to deploy the entire application.

- Cons:

  - Limited Scope: Component tests do not verify the interactions between different components or the overall behavior of the system.

  - Mocking Requirements: May require the use of mocks or stubs to simulate the behavior of dependent components.

  - Potential for Integration Issues: Does not guarantee that the components will work together correctly when integrated.

Mocking and Stubbing in Serverless Integration Tests

Mocking and stubbing are essential techniques in serverless integration testing, allowing developers to isolate and test individual components without relying on the availability or stability of external services or dependencies. This approach improves test execution speed, reduces costs, and makes test results more reliable by eliminating external factors that could cause spurious failures. By simulating the behavior of these dependencies, developers can focus on verifying the logic of their serverless functions and their interactions with other components within the architecture.

Importance of Mocking and Stubbing

Mocking and stubbing play a critical role in ensuring the effectiveness and efficiency of serverless integration tests. They provide a controlled environment for testing, enabling developers to simulate various scenarios and edge cases without the complexities of real-world dependencies.

- Isolation: Mocking isolates the component under test by replacing its dependencies with controlled substitutes. This prevents external factors, such as network latency or service outages, from affecting the test results.

- Speed: Mocking reduces test execution time. Instead of waiting for responses from external services, mocks return predefined values instantly, significantly speeding up the testing process.

- Control: Mocks allow developers to control the behavior of dependencies. They can simulate various responses, including success, failure, and different error conditions, to test how the component under test handles these scenarios.

- Cost-effectiveness: By avoiding calls to external services, mocking helps to reduce testing costs, especially for services with pay-per-use pricing models.

- Repeatability: Mocks ensure that tests are repeatable. They eliminate the variability introduced by external services, ensuring that tests produce the same results every time.
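The control benefit can be shown with Python's built-in `unittest.mock`. In this sketch, `fetch_profile` and `build_client` are hypothetical, and the fallback to a default profile on timeout is an assumed behavior of the component under test; `Mock`, `return_value`, and `side_effect` are the standard library's own.

```python
from unittest.mock import Mock


def fetch_profile(client, user_id: str) -> dict:
    """Hypothetical component under test: falls back to a default on timeout."""
    try:
        return client.get_user(user_id)
    except TimeoutError:
        return {"id": user_id, "name": "unknown"}


def build_client(fail: bool) -> Mock:
    """Create a mock client that either succeeds or raises a simulated timeout."""
    client = Mock()
    if fail:
        client.get_user.side_effect = TimeoutError("simulated timeout")
    else:
        client.get_user.return_value = {"id": "u1", "name": "Ada"}
    return client
```

The same test code can now exercise both the success path and the failure path deterministically, which would be difficult to arrange against a live dependency.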

Methods for Mocking Serverless Components and External Services

Effective mocking strategies are crucial for simulating the behavior of serverless components and external services in integration tests. This involves creating controlled substitutes that mimic the interactions of these dependencies.

- API Gateway Mocking: API Gateway can be mocked to simulate HTTP requests and responses. This can be achieved by intercepting the requests and returning predefined responses, or by using mock integrations that directly return a pre-configured response.

- External Service Mocking: External services, such as databases, message queues, and other APIs, can be mocked using various techniques. This can involve creating mock implementations that return pre-defined data or simulate specific behaviors, or using mocking frameworks that allow developers to define expected interactions and responses.

- Event Source Mocking: Event sources, such as S3 buckets or CloudWatch events, can be mocked by creating simulated events and triggering the relevant Lambda functions with these events. This allows developers to test how their functions handle different event scenarios.

- Dependency Injection: Employing dependency injection is a good practice to make components easier to mock. By injecting dependencies as interfaces, developers can substitute the real implementations with mock objects during testing.
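Event source mocking and dependency injection combine naturally, as the following sketch suggests. The event built by `make_s3_put_event` keeps only a few fields of an S3 notification record, `thumbnail_handler` is a hypothetical function, and `storage` is an injected dependency with an assumed `copy` method rather than a real SDK client.

```python
from unittest.mock import Mock


def make_s3_put_event(bucket: str, key: str) -> dict:
    """Build a simplified event shaped like an S3 object-created notification."""
    return {
        "Records": [
            {
                "eventSource": "aws:s3",
                "eventName": "ObjectCreated:Put",
                "s3": {"bucket": {"name": bucket}, "object": {"key": key}},
            }
        ]
    }


def thumbnail_handler(event: dict, context: object, storage) -> dict:
    """Hypothetical handler: copies each uploaded object to a thumbnails/ prefix."""
    processed = []
    for record in event["Records"]:
        bucket = record["s3"]["bucket"]["name"]
        key = record["s3"]["object"]["key"]
        storage.copy(bucket, key, f"thumbnails/{key}")
        processed.append(key)
    return {"processed": processed}


def test_handler_copies_uploaded_object():
    storage = Mock()  # injected in place of the real storage client
    result = thumbnail_handler(make_s3_put_event("uploads", "cat.png"), None, storage)
    assert result == {"processed": ["cat.png"]}
    storage.copy.assert_called_once_with("uploads", "cat.png", "thumbnails/cat.png")
```

Passing `storage` as a parameter is what makes the substitution trivial; a handler that constructs its own client internally would need patching instead.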

Using Mocking Frameworks in Serverless Development

Several mocking frameworks are available for different programming languages commonly used in serverless development, enabling developers to effectively create and manage mocks for their integration tests.

- JavaScript (Node.js): Jest is a popular JavaScript testing framework that includes built-in mocking capabilities. It allows developers to easily mock modules, functions, and objects. For example, `jest.mock()` replaces a module with a mock implementation.

Example:

```javascript
// Original module (e.g., an API client), saved as your-module.js
const axios = require('axios');

async function fetchData(url) {
  const response = await axios.get(url);
  return response.data;
}

module.exports = { fetchData };
```

```javascript
// Test file
const { fetchData } = require('./your-module');
const axios = require('axios'); // Import axios so it can be mocked

jest.mock('axios'); // Replace the axios module with an automatic mock

test('fetches data successfully', async () => {
  const mockData = { data: 'mocked data' };
  // Configure the mock to resolve with a response whose body is mockData
  axios.get.mockResolvedValue({ data: mockData });

  const data = await fetchData('http://example.com');

  expect(data).toEqual(mockData);
  // Verify that axios.get was called with the expected URL
  expect(axios.get).toHaveBeenCalledWith('http://example.com');
});
```

- Python: `unittest.mock` (built-in) and `pytest-mock` are commonly used for mocking in Python. `unittest.mock` provides classes and functions for creating mock objects, patching objects, and verifying interactions. `pytest-mock` provides pytest fixtures for mocking and patching.

Example:

```python
# Original module (e.g., an API client), saved as your_module.py
import requests


def get_data(url):
    response = requests.get(url)
    return response.json()
```

```python
# Test file
import unittest
from unittest.mock import patch

import your_module  # The module containing get_data


class TestGetData(unittest.TestCase):
    @patch('your_module.requests.get')
    def test_get_data_success(self, mock_get):
        # Configure the mocked response so that .json() returns fixed data
        mock_get.return_value.json.return_value = {'data': 'mocked data'}

        result = your_module.get_data('http://example.com')

        self.assertEqual(result, {'data': 'mocked data'})
        # Verify that requests.get was called with the expected URL
        mock_get.assert_called_once_with('http://example.com')
```

- Java: Mockito is a popular mocking framework for Java. It allows developers to create mock objects, define their behavior, and verify interactions.

Example:

```java
// Original module (e.g., an API client): ApiClient.java
import org.springframework.http.ResponseEntity;
import org.springframework.web.client.RestTemplate;

public class ApiClient {
    private final RestTemplate restTemplate;

    public ApiClient(RestTemplate restTemplate) {
        this.restTemplate = restTemplate;
    }

    public String fetchData(String url) {
        ResponseEntity<String> response = restTemplate.getForEntity(url, String.class);
        return response.getBody();
    }
}
```

```java
// Test file: ApiClientTest.java
import org.junit.jupiter.api.Test;
import org.mockito.Mockito;
import org.springframework.http.ResponseEntity;
import org.springframework.web.client.RestTemplate;

import static org.junit.jupiter.api.Assertions.assertEquals;
import static org.mockito.Mockito.*;

public class ApiClientTest {
    @Test
    @SuppressWarnings("unchecked") // Mockito.mock(ResponseEntity.class) yields a raw type
    public void testFetchDataSuccess() {
        // Arrange
        RestTemplate restTemplate = Mockito.mock(RestTemplate.class);
        ApiClient apiClient = new ApiClient(restTemplate);
        ResponseEntity<String> responseEntity = Mockito.mock(ResponseEntity.class);
        when(restTemplate.getForEntity(Mockito.anyString(), Mockito.eq(String.class)))
                .thenReturn(responseEntity);
        when(responseEntity.getBody()).thenReturn("mocked data");

        // Act
        String result = apiClient.fetchData("http://example.com");

        // Assert
        assertEquals("mocked data", result);
        verify(restTemplate, times(1)).getForEntity("http://example.com", String.class);
    }
}
```

Setting Up Test Environments for Serverless

Setting up effective test environments is crucial for serverless integration testing. It allows developers to simulate the production environment and validate the interactions between various serverless components before deployment. A well-configured test environment minimizes the risk of errors in production, ensures system stability, and accelerates the development lifecycle. The goal is to create a controlled and repeatable environment that accurately reflects the behavior of the live system.

Replicating the production environment or employing a similar configuration is essential for ensuring the validity of integration tests. This involves mirroring the services, configurations, and dependencies used in production, to the extent possible within the constraints of cost and practicality. The degree of replication depends on the complexity of the serverless architecture and the criticality of the system. A balance must be struck between achieving a high degree of fidelity and maintaining a manageable and cost-effective testing setup.

Environment Configuration Strategies

Several strategies exist for setting up test environments for serverless architectures. The choice depends on factors such as the complexity of the application, the budget allocated for testing, and the desired level of realism. These strategies often involve trade-offs between accuracy, cost, and ease of maintenance.

- Production Environment Replication: This approach involves creating an exact replica of the production environment.

  - Description: The test environment mirrors the production environment in every aspect, including infrastructure, configurations, and data.

  - Benefits: Provides the highest level of realism, ensuring tests accurately reflect the behavior of the production system.

  - Drawbacks: Can be expensive, complex to manage, and may not be feasible for all serverless applications due to cost constraints or data sensitivity. Requires significant infrastructure and configuration effort.

- Staging Environment: A staging environment is a close approximation of the production environment, often used for pre-production testing.

  - Description: It uses a similar configuration to production, but might use smaller instances, different data sets, or simulated external services to reduce costs.

  - Benefits: Offers a good balance between realism and cost-effectiveness. Allows for thorough testing of integration points.

  - Drawbacks: Might not perfectly replicate production behavior, potentially leading to discrepancies between test results and real-world performance.

- Development/Test Environments: Dedicated environments designed specifically for development and testing.

  - Description: These environments often utilize mocked services and simplified configurations for faster development and testing cycles.

  - Benefits: Cost-effective and suitable for unit and integration testing. Enables rapid iteration and debugging.

  - Drawbacks: May not accurately reflect production behavior, potentially leading to undetected integration issues. Requires careful consideration of mocked services.

- Hybrid Approach: A combination of different environment types.

  - Description: Uses a staging environment for comprehensive testing and development/test environments for rapid prototyping and unit testing.

  - Benefits: Balances realism, cost, and development speed.

  - Drawbacks: Requires careful management and coordination between different environments.

Step-by-Step Guide to Setting Up a Test Environment

The following steps outline a general process for setting up a test environment for serverless integration testing. The specifics will vary depending on the cloud provider, the serverless components used, and the application’s complexity.

- Define Environment Requirements: Determine the specific components and configurations needed for the test environment. Identify the serverless functions, APIs, databases, event sources, and any external services that interact with the application. Define the configuration parameters, such as memory allocation, timeouts, and security settings.

- Choose an Environment Strategy: Consider factors such as cost, complexity, and the level of realism required. Choose between production replication, staging, or development/test environments, or a hybrid approach.

- Provision the Infrastructure: Use Infrastructure as Code (IaC) tools like AWS CloudFormation, Terraform, or Serverless Framework to automate the creation and configuration of the test environment. This ensures consistency and repeatability.

- Deploy and Configure Components: Set up the function code, API gateways, database schemas, event triggers, and any necessary integrations with external services. Configure security settings such as IAM roles and access control policies.

- Prepare Test Data: Prepare a representative dataset that reflects the expected data volume and structure. Consider using data masking or anonymization techniques to protect sensitive information.

- Set Up Testing Tools: Install and configure the necessary testing libraries, mocking frameworks, and test runners. Choose tools that integrate well with the chosen serverless platform and programming language.

- Verify the Environment: Run basic tests to ensure that the components are deployed and configured correctly and that the integration points function as expected.

- Execute Integration Tests: Run the integration tests against the test environment to validate the functionality and integration between the serverless components.

- Tear Down the Environment: After testing, automatically remove or reset the test environment to prevent resource exhaustion and ensure a clean state for subsequent tests.
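The provision, seed, verify, test, and tear-down sequence described above can be sketched as a context manager. This is a minimal illustration rather than a real deployment: `InMemoryCloud`, `ephemeral_environment`, and the `orders-test-` naming scheme are hypothetical stand-ins for whatever IaC tool and cloud SDK a project actually uses.

```python
import uuid
from contextlib import contextmanager


class InMemoryCloud:
    """Hypothetical stand-in for a cloud account; real code would call an IaC tool or SDK."""

    def __init__(self):
        self.tables = {}  # table name -> list of items

    def create_table(self, name):
        self.tables[name] = []

    def delete_table(self, name):
        self.tables.pop(name, None)


@contextmanager
def ephemeral_environment(cloud, seed_items):
    """Provision an isolated table, seed it, and always tear it down afterwards."""
    # A unique suffix keeps parallel test runs from colliding.
    table_name = f"orders-test-{uuid.uuid4().hex[:8]}"
    cloud.create_table(table_name)
    try:
        cloud.tables[table_name].extend(seed_items)
        # Basic verification before any integration test runs.
        if len(cloud.tables[table_name]) != len(seed_items):
            raise RuntimeError("test data was not seeded correctly")
        yield table_name
    finally:
        # Teardown runs even when a test inside the block fails.
        cloud.delete_table(table_name)
```

A test would wrap its body in `with ephemeral_environment(cloud, seed) as table_name:` so that cleanup does not depend on the test passing.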

Example: AWS Environment Setup

Consider a scenario where a serverless application on AWS consists of an API Gateway, Lambda functions, and a DynamoDB table. The following illustrates steps to create a test environment:

- Use Infrastructure as Code (IaC): Utilize AWS CloudFormation or Terraform to define the infrastructure as code. This includes defining the API Gateway, Lambda functions (including their code and dependencies), and the DynamoDB table.

  - Example: A CloudFormation template could specify the creation of an API Gateway with associated API routes, the creation of Lambda functions triggered by API Gateway requests, and the creation of a DynamoDB table.

- Environment Variables: Configure environment variables within the Lambda functions to point to the correct DynamoDB table in the test environment.

  - Example: The Lambda function code retrieves the table name from an environment variable, enabling the function to interact with the correct DynamoDB table based on the environment (test, staging, production).

- Test Data Loading: Populate the DynamoDB table in the test environment with test data.

  - Example: Use a script to insert sample data into the DynamoDB table or copy a subset of data from a production database (after anonymization).

- Testing Framework: Implement integration tests using a testing framework like Jest or Mocha.

  - Example: The tests will send requests to the API Gateway endpoints, and then verify that the Lambda functions correctly process the requests, interact with the DynamoDB table (inserting, updating, or retrieving data), and return the expected responses.

- Automated Deployment: Implement a CI/CD pipeline to automatically deploy the infrastructure and the application code to the test environment.

  - Example: Upon code commits, the pipeline triggers the IaC scripts, deploys the code, and then runs the integration tests.
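The environment-variable step can be illustrated with a small Lambda-style handler. This is a sketch under stated assumptions: the variable name `TABLE_NAME`, the `FakeTable` class, and the `make_handler` helper are hypothetical, and `FakeTable` is an in-memory stand-in, not the DynamoDB SDK. The event and response shapes loosely follow the API Gateway proxy format, where the request body arrives as a JSON string.

```python
import json
import os


class FakeTable:
    """In-memory stand-in for a DynamoDB table (hypothetical; not the real SDK API)."""

    def __init__(self, name):
        self.name = name
        self.items = {}

    def put_item(self, item):
        self.items[item["id"]] = item

    def get_item(self, item_id):
        return self.items.get(item_id)


def make_handler(table_factory):
    """Build a handler whose target table is selected by the TABLE_NAME variable."""

    def handler(event, context):
        # The same code targets test, staging, or production depending on configuration.
        table = table_factory(os.environ["TABLE_NAME"])
        body = json.loads(event["body"])
        table.put_item({"id": body["id"], "name": body["name"]})
        return {"statusCode": 201, "body": json.dumps({"id": body["id"]})}

    return handler
```

Injecting `table_factory` keeps the handler unchanged between environments: a deployed function would pass a factory backed by the real database client, while a local test passes one that returns `FakeTable` instances.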

By following these steps, developers can establish a robust test environment that facilitates reliable integration testing and contributes to the overall quality and stability of serverless applications.

Tools and Frameworks for Serverless Integration Testing

The efficacy of serverless integration testing heavily relies on the selection and proper utilization of appropriate tools and frameworks. These tools streamline the testing process, facilitate automation, and enhance the reliability of serverless applications. This section explores some of the most popular tools and frameworks available, along with their integration into CI/CD pipelines.

Popular Serverless Testing Tools and Frameworks

A diverse ecosystem of tools and frameworks supports serverless integration testing, each offering unique capabilities and addressing specific testing needs. Choosing the right combination of tools depends on the specific architecture, programming languages, and testing requirements of the serverless application.

- Serverless Framework: This is a widely adopted open-source framework for building and deploying serverless applications. It simplifies the deployment process by abstracting away much of the underlying infrastructure management. The Serverless Framework can be integrated with testing frameworks like Jest or Mocha to automate integration tests. It allows developers to define their serverless application using a `serverless.yml` configuration file, which specifies the functions, events, and resources.

- AWS SAM (Serverless Application Model): Developed by AWS, SAM is a framework for defining and deploying serverless applications. It extends AWS CloudFormation to provide a simplified way to define serverless resources. SAM provides a local testing environment and supports various testing tools. It offers features for local invocation, debugging, and simulating AWS services, enabling developers to test their applications without deploying to the cloud.

SAM CLI allows developers to emulate the AWS Lambda environment locally, reducing the need for cloud deployments during testing.

- Jest: A JavaScript testing framework originally developed at Facebook (now Meta) and since transferred to the OpenJS Foundation, Jest is known for its simplicity and ease of use. It can be used to write unit and integration tests for serverless functions written in JavaScript and TypeScript. Jest’s mocking capabilities and fast test execution make it suitable for serverless testing. Jest can be used to mock AWS SDK calls, allowing developers to test function logic without making actual calls to AWS services.

- Mocha: Another popular JavaScript testing framework, Mocha provides a flexible environment for writing and running tests. It’s often used with assertion libraries like Chai and mocking libraries like Sinon. Mocha’s flexibility allows for complex test setups and integration with various testing tools.

- Chai: A popular assertion library for JavaScript, Chai provides a fluent and readable way to write assertions in tests. It supports different styles of assertions, including `expect`, `assert`, and `should`, allowing developers to choose the style that best suits their preferences.

- Sinon.JS: A standalone library of test spies, stubs, and mocks for JavaScript. Sinon allows developers to create mock objects, stub methods, and spy on function calls, which is essential for isolating and testing serverless functions.

- Testcontainers: While not serverless-specific, Testcontainers is valuable for integration testing. It enables the creation of lightweight, disposable instances of databases, message queues, and other dependencies within Docker containers. This allows for testing serverless functions that interact with external services.

Integrating Tools and Frameworks into CI/CD Pipelines

Integrating serverless testing tools into a CI/CD pipeline is crucial for automating the testing process and ensuring the continuous delivery of high-quality serverless applications. The pipeline should include steps for building, testing, and deploying the application.

- Automated Testing Execution: CI/CD pipelines automatically run integration tests whenever code changes are pushed to the repository. This typically involves configuring the pipeline to execute the test suite using a command-line interface (CLI) or a build script. For instance, a pipeline could execute `npm test` (if using Jest or Mocha with npm) or use the `sam local invoke` command for AWS SAM tests.

- Test Results Reporting: The CI/CD pipeline should generate and report test results, providing developers with immediate feedback on the success or failure of their tests. This typically involves integrating the testing framework with a reporting tool that can display the results in a user-friendly format. Reports in the JUnit XML format, which most CI systems can parse, or custom reporting scripts can be used to aggregate test results.

- Deployment Automation: Successful integration tests can trigger automated deployment to staging or production environments. This involves configuring the pipeline to use tools like the Serverless Framework or AWS SAM to deploy the application. The deployment process should include steps to update the necessary infrastructure and configure the application for the target environment.

- Example CI/CD Pipeline using GitHub Actions: A simple GitHub Actions workflow for testing a serverless application using Jest and the Serverless Framework might include the following steps:

  - Check out the code from the repository.

  - Set up Node.js.

  - Install dependencies using `npm install`.

  - Run integration tests using `npm test`.

  - If tests pass, deploy the application to a staging environment using the Serverless Framework.

  - Generate and publish test reports.

Best Practices for Serverless Integration Testing

Effective integration testing in serverless architectures is crucial for ensuring the reliability and performance of the system. Adhering to best practices allows developers to identify and resolve integration issues early in the development lifecycle, leading to more robust and maintainable applications. These practices cover various aspects, from test design to environment setup and test data management.

Integration tests, unlike unit tests, focus on verifying the interaction between different components of a system. In a serverless environment, these components are often independent functions or services that communicate through events, APIs, or other mechanisms. Therefore, a well-defined strategy for integration testing is essential.

Test Coverage Guidelines

Comprehensive test coverage is essential for ensuring the quality of serverless applications. It aims to verify that all the key components of the system interact correctly and that the overall functionality operates as expected.

- Prioritize Critical Paths: Focus test coverage on the most critical paths and functionalities of the application. Identify the core business processes and design tests that cover these scenarios extensively. This includes testing the interactions between different serverless functions, API gateways, databases, and other services that are essential for the application’s core functionality.

- Test All Integration Points: Ensure that all integration points, such as API calls, database interactions, and event triggers, are thoroughly tested. Verify that data is correctly passed between components and that the system handles different types of input and output appropriately. This helps to identify issues related to data mapping, serialization, and deserialization.

- Test Error Handling and Edge Cases: Include tests that specifically target error handling and edge cases. Verify that the system correctly handles unexpected inputs, network failures, and other error conditions. This includes testing scenarios like invalid API requests, database connection errors, and event processing failures.

- Aim for High Code Coverage: Strive for a high level of code coverage to ensure that all parts of the codebase are tested. Code coverage metrics can help identify areas of the code that are not adequately tested, providing insights into the overall test effectiveness. Tools like JaCoCo (Java) or Istanbul (JavaScript) measure code coverage, and SonarQube can aggregate and report on the results.

- Automate Test Execution: Automate the execution of integration tests as part of the CI/CD pipeline. Automated tests provide fast feedback on code changes and help to prevent regressions. Automating test execution also ensures that tests are run consistently across different environments.
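The guideline on testing integration points, including serialization and deserialization between components, can be made concrete with a small contract check. The following sketch assumes a hypothetical order event with the fields `order_id` and `amount_cents`; the function names and the contract itself are illustrative, not taken from any particular application.

```python
import json

# Hypothetical contract agreed between the producing and consuming functions.
REQUIRED_FIELDS = {"order_id": str, "amount_cents": int}


def build_order_event(order_id, amount_cents):
    """Producer side: serialize the event as it would be placed on a queue or bus."""
    return json.dumps({"order_id": order_id, "amount_cents": amount_cents})


def parse_order_event(raw):
    """Consumer side: deserialize and validate the payload against the contract."""
    payload = json.loads(raw)
    for field, expected_type in REQUIRED_FIELDS.items():
        if field not in payload:
            raise ValueError(f"missing field: {field}")
        if not isinstance(payload[field], expected_type):
            raise ValueError(f"wrong type for field: {field}")
    return payload
```

An integration test would feed the producer's real output into the consumer, and would also send deliberately malformed payloads to confirm that the consumer rejects them instead of failing later in the flow.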

Test Data Management Principles

Effective test data management is critical for creating reliable and repeatable integration tests. It involves creating, managing, and using test data in a way that minimizes dependencies and maximizes test effectiveness.

- Use Realistic Test Data: Use test data that closely resembles the production data. This ensures that the tests accurately reflect the behavior of the system in a real-world scenario. If possible, use anonymized or masked data from the production environment.

- Isolate Test Data: Ensure that test data is isolated from the production data. Avoid running integration tests against live production data stores, as this can lead to data corruption or unintended consequences. Use dedicated test databases or environments for integration tests.

- Use Data Factories: Employ data factories or test data builders to create and manage test data. Data factories automate the creation of test data, ensuring that it is consistent and reliable. This can also involve tools for data seeding, allowing the creation of pre-populated data sets for specific test scenarios.

- Clean Up Test Data: Implement a mechanism to clean up test data after each test run. This prevents test data from accumulating and potentially interfering with subsequent test runs. Cleaning up test data can also help to improve the performance of the tests.

- Consider Data Masking: When using production-like data, consider data masking or anonymization techniques to protect sensitive information. This can involve replacing sensitive data with non-sensitive alternatives, ensuring compliance with data privacy regulations.
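The data factory, cleanup, and masking principles above might look like the following in practice. This is a minimal sketch: `OrderFactory`, its default field values, and `mask_email` are hypothetical names, and a plain dictionary stands in for the test database.

```python
import itertools


class OrderFactory:
    """Builds consistent test orders and remembers them so they can be cleaned up."""

    def __init__(self, store):
        self.store = store  # dict acting as the test database (assumption)
        self.created_ids = []
        self._counter = itertools.count(1)

    def create(self, **overrides):
        order = {
            "id": f"test-order-{next(self._counter)}",
            "status": "NEW",
            "email": "user@example.com",
        }
        order.update(overrides)  # tests override only the fields they care about
        self.store[order["id"]] = order
        self.created_ids.append(order["id"])
        return order

    def cleanup(self):
        """Remove only the records this factory created."""
        for order_id in self.created_ids:
            self.store.pop(order_id, None)
        self.created_ids.clear()


def mask_email(email):
    """Replace the local part of an address so production-like data stays anonymous."""
    _, _, domain = email.partition("@")
    return f"masked@{domain}"
```

Calling `cleanup()` in the test's teardown step removes exactly what the test created and leaves any shared fixtures in the store untouched.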

Test Case Design Considerations

Designing effective test cases is crucial for the success of integration testing. The design should focus on verifying the interactions between components and the overall system behavior.

- Design for Independent Tests: Each test case should be independent and not rely on the results of other tests. This ensures that tests can be run in any order and that failures do not cascade. Independent tests also make it easier to isolate and debug issues.

- Follow the Arrange-Act-Assert Pattern: Structure test cases using the Arrange-Act-Assert (AAA) pattern. The “Arrange” phase sets up the test environment and prepares the necessary data. The “Act” phase performs the action or interaction being tested. The “Assert” phase verifies the expected outcome.

- Test Positive and Negative Scenarios: Design test cases to cover both positive and negative scenarios. Positive scenarios verify that the system behaves as expected under normal conditions. Negative scenarios verify that the system handles errors and unexpected inputs correctly.

- Use Descriptive Test Names: Use descriptive test names that clearly indicate the purpose of the test. This makes it easier to understand the test and to diagnose issues. Test names should be concise and informative, indicating the scenario being tested and the expected outcome.

- Document Test Cases: Document each test case, including the purpose, preconditions, steps, expected results, and any dependencies. Well-documented test cases make it easier to maintain and understand the tests. Documenting tests helps to clarify the functionality being tested and allows for easy debugging.
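Several of these considerations (independent tests, the Arrange-Act-Assert pattern, positive and negative scenarios, descriptive names) can be shown together in one short test class. The `Inventory` component and `place_order` function below are hypothetical and kept in memory so the sketch stays self-contained; a real integration test would exercise deployed or emulated services instead.

```python
import unittest


class Inventory:
    """Hypothetical inventory component that the order function depends on."""

    def __init__(self, stock):
        self.stock = dict(stock)

    def reserve(self, sku, qty):
        if self.stock.get(sku, 0) < qty:
            raise LookupError(f"insufficient stock for {sku}")
        self.stock[sku] -= qty


def place_order(inventory, sku, qty):
    """Order function under test; it collaborates with the inventory component."""
    try:
        inventory.reserve(sku, qty)
    except LookupError as exc:
        return {"statusCode": 409, "error": str(exc)}
    return {"statusCode": 201, "sku": sku, "qty": qty}


class PlaceOrderIntegrationTest(unittest.TestCase):
    def setUp(self):
        # Arrange: fresh state per test keeps tests independent of run order.
        self.inventory = Inventory({"widget": 2})

    def test_order_within_stock_is_accepted_and_reduces_inventory(self):
        # Act
        response = place_order(self.inventory, "widget", 2)
        # Assert
        self.assertEqual(response["statusCode"], 201)
        self.assertEqual(self.inventory.stock["widget"], 0)

    def test_order_exceeding_stock_is_rejected_and_leaves_inventory_unchanged(self):
        # Act (negative scenario)
        response = place_order(self.inventory, "widget", 3)
        # Assert
        self.assertEqual(response["statusCode"], 409)
        self.assertEqual(self.inventory.stock["widget"], 2)
```

The tests can be run with `python -m unittest` from the directory containing the file.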

Best Practices Summary:

- Prioritize Critical Paths and Test All Integration Points

- Use Realistic and Isolated Test Data

- Design Independent Tests with Descriptive Names

- Test Positive and Negative Scenarios

- Automate Test Execution and Clean Up Test Data

Continuous Integration and Continuous Deployment (CI/CD) for Serverless Integration Tests

Running integration tests inside a Continuous Integration and Continuous Deployment (CI/CD) pipeline is crucial for ensuring the reliability and maintainability of serverless applications. This process automates the execution of integration tests, along with the deployment process, providing rapid feedback and enabling faster, more reliable releases. Automating this process is paramount in a serverless environment, where frequent deployments and changes are common.

Integrating Integration Tests into a CI/CD Pipeline

Adding integration tests to a CI/CD pipeline means incorporating test execution into the automated build and deployment process. Every code change then triggers a series of automated tests, including integration tests, before deployment to production. This proactive approach identifies integration issues early in the development cycle, minimizing the risk of errors reaching end-users.

- Triggering the Pipeline: The CI/CD pipeline is typically triggered by events such as code commits to a version control system (e.g., Git). Upon detecting a change, the pipeline initiates the build and test processes.

- Building the Application: The build stage involves compiling the code, managing dependencies, and preparing the application for deployment. For serverless applications, this might involve packaging the function code and associated resources.

- Executing Integration Tests: After the build stage, the pipeline executes the integration tests. These tests verify the interactions between different serverless functions and external services.

- Analyzing Test Results: The pipeline analyzes the test results. If any tests fail, the pipeline typically halts, preventing the deployment of potentially broken code.

- Deployment: If all tests pass, the pipeline proceeds to the deployment stage, deploying the serverless application to the target environment (e.g., staging or production).
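The gating behavior of these stages, where a failing stage halts everything after it, can be sketched in a few lines. This is a conceptual model only; `run_pipeline` and the stage names are hypothetical, and real pipelines express the same logic in the configuration format of the chosen CI/CD service.

```python
def run_pipeline(stages):
    """Run (name, callable) stages in order and stop at the first failing stage.

    Each callable returns True on success and False on failure.
    """
    completed = []
    for name, stage in stages:
        if not stage():
            # Later stages, including deployment, are never reached.
            return {"status": "failed", "failed_stage": name, "completed": completed}
        completed.append(name)
    return {"status": "succeeded", "failed_stage": None, "completed": completed}
```

With stages such as `("build", build)`, `("integration-tests", run_tests)`, and `("deploy", deploy)`, a failing test stage returns before `deploy` is ever called.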

Automating Test Execution and Deployment

Automating test execution and deployment streamlines the software delivery process. Automation reduces manual intervention, minimizes human error, and accelerates the feedback loop. This approach enhances efficiency and allows for more frequent, safer releases.

- Automated Test Execution: Integration tests are executed automatically as part of the CI/CD pipeline. Tools such as AWS CodePipeline, Azure DevOps, or Jenkins can be configured to run tests after each code change.

- Automated Deployment: Successful test runs trigger automated deployments. The deployment process can include updating serverless functions, configuring API gateways, and managing other cloud resources.

- Environment-Specific Deployments: The CI/CD pipeline can be configured to deploy to different environments (e.g., development, staging, production). This allows for thorough testing and validation before deploying to production.

- Rollback Mechanisms: Automated deployment should include rollback mechanisms to revert to a previous version if issues arise after deployment. This minimizes downtime and ensures application stability.

CI/CD Pipeline Diagram

The following diagram illustrates a typical CI/CD pipeline for a serverless application with integration tests.

Diagram Description:

The diagram depicts a CI/CD pipeline with several stages, each represented by a rectangular box connected by arrows indicating the flow of the process. The process starts with a “Code Commit” event in a Version Control System (VCS). This triggers the first stage, “Source,” which retrieves the code from the VCS. Following the “Source” stage is the “Build” stage, where the application is compiled, dependencies are managed, and the application is prepared for deployment.

After the “Build” stage comes the “Test” stage. In this stage, integration tests are executed to validate the interaction between different components of the serverless application and external services. The “Test” stage feeds into the “Deploy” stage. The “Deploy” stage deploys the application to a staging environment if the tests pass, allowing for additional testing. If the staging tests are successful, the application is then deployed to a production environment.

Throughout the pipeline, there are notifications (e.g., email, Slack) that inform developers about the status of the pipeline, including success or failure, after each stage. If a stage fails, the pipeline stops and notifies the team.

Monitoring and Reporting Test Results

Effective monitoring and reporting of serverless integration test results are crucial for maintaining application reliability, identifying issues promptly, and facilitating continuous improvement. By systematically tracking test outcomes, developers gain insights into the performance and stability of their serverless applications, enabling them to make data-driven decisions and optimize their testing strategies. This proactive approach ensures that potential problems are addressed before they impact users.

Methods for Monitoring Serverless Integration Test Results

Monitoring serverless integration tests involves collecting, analyzing, and visualizing test results to provide actionable insights. This process allows for the rapid identification of failures, performance bottlenecks, and areas for improvement within the serverless architecture.

- Automated Test Execution and Reporting: Integration tests should be integrated into the CI/CD pipeline, automating their execution after code changes. Automated reporting tools then aggregate the results, making them accessible to the development team. This ensures that tests are run consistently and that results are readily available.

- Real-time Monitoring: Real-time dashboards and alerts provide immediate feedback on test results. This allows developers to react quickly to failures and identify performance issues as they occur. Tools should be configured to send notifications (e.g., email, Slack) when tests fail or when performance metrics deviate from established baselines.

- Test Result Aggregation: Aggregating test results from multiple environments and test runs provides a comprehensive view of application health. This aggregation facilitates the identification of trends and patterns, helping developers to understand the impact of changes over time. This involves storing test results in a centralized location, such as a database or a cloud-based monitoring service.

- Performance Metric Tracking: Monitor key performance indicators (KPIs) such as latency, error rates, and throughput. These metrics provide insight into the performance and stability of the serverless functions and the overall application. Establishing baselines for these metrics is crucial for detecting regressions.

- Detailed Logging and Tracing: Comprehensive logging and tracing are essential for diagnosing test failures. Logs should capture detailed information about the execution of each test, including input parameters, function invocations, and error messages. Distributed tracing tools help to track requests across multiple serverless functions and services, making it easier to identify the root cause of failures.
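Baseline-based regression detection, as described under performance metric tracking, can be sketched as a simple comparison. The function name `find_regressions`, the metric names, and the 10% default tolerance are assumptions chosen for illustration; appropriate thresholds depend on the application.

```python
def find_regressions(baseline, current, tolerance=0.10):
    """Return metrics whose current value exceeds the baseline by more than `tolerance`.

    Both arguments map metric names to values where lower is better
    (for example latency in milliseconds or error rate).
    """
    regressions = {}
    for metric, base_value in baseline.items():
        value = current.get(metric)
        if value is None:
            continue  # metric was not reported in this run
        if value > base_value * (1 + tolerance):
            regressions[metric] = {"baseline": base_value, "current": value}
    return regressions
```

A pipeline step could call this after each test run and raise an alert, or fail the build, whenever the returned dictionary is not empty.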

Tools and Dashboards for Test Reporting

Various tools and dashboards are available to effectively monitor and report serverless integration test results. These tools offer different features, from basic reporting to advanced analytics and visualization capabilities.

- CloudWatch (AWS): CloudWatch provides comprehensive monitoring capabilities for AWS services, including serverless functions. It can be used to collect logs and metrics and to build custom dashboards. It allows setting up alarms based on test results and performance metrics.

- Cloud Logging (GCP): Google Cloud Logging offers centralized logging for Google Cloud Platform (GCP) services. It supports log aggregation, analysis, and alerting. It integrates seamlessly with other GCP services, such as Cloud Functions and Cloud Run.

- Azure Monitor (Azure): Azure Monitor provides monitoring and logging capabilities for Azure services. It allows the creation of custom dashboards and alerts. It integrates with Azure Functions and other Azure services.

- Grafana: Grafana is a powerful open-source platform for data visualization and monitoring. It supports various data sources, including Prometheus, InfluxDB, and Elasticsearch. Grafana can be used to create custom dashboards that visualize test results and performance metrics.

- Prometheus: Prometheus is an open-source monitoring system and time-series database. It is particularly well-suited for collecting and analyzing metrics from serverless applications. Prometheus integrates with Grafana for visualization.

- ELK Stack (Elasticsearch, Logstash, Kibana): The ELK Stack is a popular open-source solution for log management and analysis. Elasticsearch is a search and analytics engine, Logstash is a data processing pipeline, and Kibana is a data visualization dashboard. The ELK Stack can be used to analyze test logs and create custom dashboards.

- Test Management Tools: Tools like TestRail, Zephyr, and Xray provide features for managing test cases, tracking test execution, and generating reports. These tools can be integrated with CI/CD pipelines to automate test execution and reporting.

Importance of Different Test Result Types

Understanding the different types of test results and their importance is critical for effective monitoring and reporting. The following table illustrates the types of test results and their significance.

| Test Result Type | Description | Importance | Metrics to Track |
|---|---|---|---|
| Pass/Fail Status | Indicates whether a test case passed or failed. | Provides a fundamental assessment of the application’s functionality. Quickly identifies broken features or regressions. | Number of tests passed, number of tests failed, pass rate. |
| Performance Metrics | Measures the performance of the application, such as latency, throughput, and error rates. | Ensures the application meets performance requirements and identifies performance bottlenecks. Helps in optimizing function execution and resource allocation. | Average latency, maximum latency, throughput (requests per second), error rate (percentage of failed requests). |
| Code Coverage | Measures the percentage of the codebase covered by the tests. | Indicates the thoroughness of testing. Higher coverage generally leads to fewer undetected bugs. | Line coverage, branch coverage, function coverage. |
| Test Execution Time | Measures the time it takes to execute a test case. | Helps in identifying slow tests and optimizing test execution time. Important for maintaining a fast feedback loop in CI/CD pipelines. | Average test execution time, maximum test execution time, total test execution time. |
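Several of the metrics in the table (pass rate and the execution-time figures) can be computed directly from raw test results. The sketch below assumes each result is a dictionary with the hypothetical keys `passed` and `duration_ms`; real test runners expose this information in their own report formats.

```python
from statistics import mean


def summarize(results):
    """Summarize test results given as dicts with 'passed' (bool) and 'duration_ms'."""
    total = len(results)
    if total == 0:
        raise ValueError("no test results to summarize")
    passed = sum(1 for r in results if r["passed"])
    durations = [r["duration_ms"] for r in results]
    return {
        "total": total,
        "passed": passed,
        "failed": total - passed,
        "pass_rate": passed / total,
        "avg_duration_ms": mean(durations),
        "max_duration_ms": max(durations),
        "total_duration_ms": sum(durations),
    }
```

Storing one such summary per pipeline run in a central location makes it possible to chart trends over time in a dashboard.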

Closing Notes

In conclusion, integration testing is indispensable for serverless architectures, providing a crucial layer of assurance in a dynamic and distributed environment. While challenges exist, the benefits—improved reliability, maintainability, and accelerated development cycles—are significant. By adopting appropriate testing strategies, utilizing effective tools, and adhering to best practices, developers can successfully navigate the complexities of serverless integration testing. Ultimately, this approach allows for the creation of robust, scalable, and resilient serverless applications that can meet the demands of modern software development.

Frequently Asked Questions

What is the primary difference between integration testing and end-to-end testing in a serverless context?

Integration testing focuses on the interactions between internal serverless components (e.g., Lambda functions, API Gateway), while end-to-end testing validates the entire system, including external services and user interfaces.

How does the ephemeral nature of serverless resources impact integration testing?

Ephemeral resources require automation and infrastructure-as-code to set up, tear down, and manage test environments efficiently, as resources are not persistent.

What are some common mocking techniques used in serverless integration tests?

Mocking involves simulating the behavior of external services or components. Common techniques include using mocking libraries to simulate API Gateway responses, database interactions, and event bus messages.

Why is test coverage important in serverless integration testing?

Test coverage ensures that a sufficient portion of the code and interactions between components are validated. It helps identify untested code paths and potential integration issues, contributing to higher software quality.