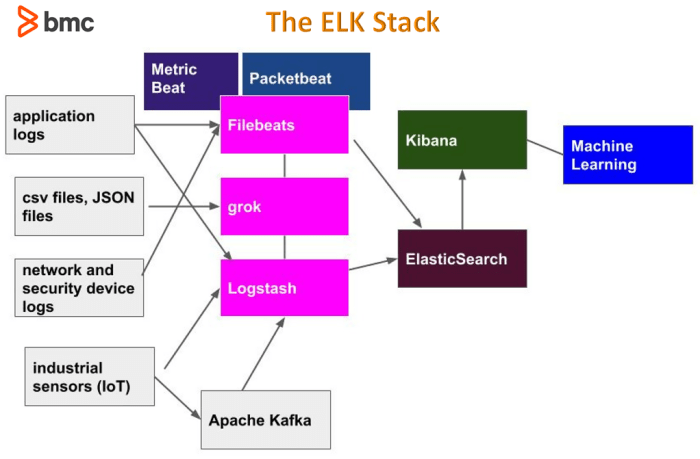

The ELK Stack, comprising Elasticsearch, Logstash, and Kibana, is a widely used toolset for log management that turns raw log data into actionable insights. Together, the three components collect, process, store, and visualize log data from various sources, supporting troubleshooting, security monitoring, and performance monitoring in modern IT operations.

This comprehensive guide will delve into each component of the ELK Stack, exploring its functionalities, benefits, and practical applications. We will examine how Elasticsearch enables efficient search and analytics, how Logstash ingests and transforms data, and how Kibana provides intuitive visualizations and dashboards. Furthermore, we will explore the advantages of implementing the ELK Stack, including improved operational efficiency, enhanced security posture, and the ability to proactively address potential issues.

Introduction to ELK Stack

The ELK Stack, now often referred to as the Elastic Stack, is a powerful and widely used open-source log management solution. It’s designed to help users collect, process, store, and analyze log data from various sources. This makes it an invaluable tool for troubleshooting, security monitoring, and gaining insights into application and system behavior.

Core Components of the ELK Stack

The ELK Stack comprises three primary components, each playing a crucial role in the overall log management process. Understanding these components is essential to effectively utilizing the stack.

- Elasticsearch: Elasticsearch is a distributed, RESTful search and analytics engine built on Apache Lucene. It serves as the central data store for the ELK Stack, indexing and storing the log data. Its powerful search capabilities enable users to quickly find specific log entries and perform complex queries. Elasticsearch is designed for scalability and high availability, allowing it to handle large volumes of data.

It provides near real-time search and analytics, making it ideal for operational monitoring. For example, a retail company might use Elasticsearch to analyze sales data, identify trends, and detect fraudulent transactions in near real-time.

- Logstash: Logstash is a data processing pipeline that collects, parses, and transforms log data. It ingests data from various sources, such as application logs, system logs, and network devices. Logstash uses a series of plugins to parse the data, filter it, and enrich it. This might involve extracting specific fields from log messages, converting data types, or adding geolocation information based on IP addresses.

Logstash then sends the processed data to Elasticsearch. For instance, Logstash could be configured to parse web server access logs, extract the IP address, user agent, and request path, and then send this structured data to Elasticsearch for analysis.

- Kibana: Kibana is a visualization and user interface for Elasticsearch. It allows users to explore, visualize, and analyze data stored in Elasticsearch. Kibana provides a range of features, including dashboards, visualizations (such as charts and graphs), and data discovery tools. Users can create interactive dashboards to monitor key metrics, identify trends, and troubleshoot issues. Kibana also supports alerting, allowing users to be notified of critical events or anomalies.

An e-commerce platform could use Kibana to visualize website traffic, track sales performance, and monitor server response times, creating dashboards to quickly identify performance bottlenecks or security threats.

Definition and Purpose of the ELK Stack

The ELK Stack is a comprehensive log management platform designed to centralize, analyze, and visualize log data from diverse sources. Its primary purpose is to provide insights into system and application behavior, enabling users to troubleshoot issues, monitor performance, and improve security posture. The stack’s capabilities extend to various use cases, including security information and event management (SIEM), application performance monitoring (APM), and business intelligence (BI).

The ELK Stack’s core function is to transform raw log data into actionable insights.

History and Evolution of the ELK Stack

The ELK Stack has evolved significantly since its inception. Elasticsearch was first released by Shay Banon in 2010, with an initial focus on providing a powerful search engine. Logstash, created by Jordan Sissel, and Kibana, developed by Rashid Khan, started as separate open-source projects for data ingestion and visualization respectively. Both later joined Elastic, which is how the three tools came to be packaged as a single stack.

Over the years, the ELK Stack has seen numerous updates and enhancements.

Elastic, the company behind the stack, has continuously added new features and improved performance. Key milestones include the introduction of:

- X-Pack: This commercial extension added features like security, monitoring, alerting, and reporting.

- Beats: Lightweight data shippers, like Filebeat, Metricbeat, and Auditbeat, were introduced to simplify data collection.

- Integration with cloud platforms: Elastic has developed integrations with cloud providers like AWS, Azure, and Google Cloud, offering managed services and seamless deployment options.

The ELK Stack has become a cornerstone of modern IT infrastructure, providing a robust and scalable solution for log management and data analysis. Its open-source nature and active community support have contributed to its widespread adoption across various industries.

Elasticsearch: The Search and Analytics Engine

Elasticsearch is the core of the ELK Stack, serving as the powerful search and analytics engine. It’s responsible for storing, indexing, and providing real-time search capabilities for the log data collected from various sources. This section delves into the specifics of Elasticsearch, highlighting its role, features, and capabilities.

Role of Elasticsearch in Storing and Indexing Log Data

Elasticsearch’s primary function within the ELK Stack is to store and index log data efficiently. When logs are ingested by Logstash or other input mechanisms, they are parsed, transformed, and then sent to Elasticsearch. Elasticsearch then processes this data to make it searchable and analyzable.

Elasticsearch stores data in a distributed manner across multiple nodes, ensuring high availability and scalability. This distributed architecture allows for parallel processing of search queries, significantly improving performance.

Indexing is a crucial process; it involves creating an inverted index, which is a data structure that maps terms to the documents containing them. This allows for incredibly fast search operations, as Elasticsearch doesn’t need to scan the entire dataset to find relevant information. Instead, it uses the index to quickly locate the documents that match the search query. The indexing process also supports various data types, allowing for complex search and analysis.
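
The idea of an inverted index can be illustrated with a small, self-contained Python sketch. It is not how Elasticsearch or Lucene is implemented; the function names and the sample log lines are made up for illustration, and the tokenizer is deliberately naive.

```python
from collections import defaultdict
import re

def tokenize(text):
    """Lowercase the text and split it into alphanumeric terms."""
    return re.findall(r"[a-z0-9]+", text.lower())

def build_inverted_index(docs):
    """Map each term to the set of document IDs that contain it."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        for term in tokenize(text):
            index[term].add(doc_id)
    return index

def search(index, query):
    """Return the IDs of documents that contain every term in the query."""
    terms = tokenize(query)
    if not terms:
        return set()
    result = set(index.get(terms[0], set()))
    for term in terms[1:]:
        result &= index.get(term, set())
    return result

# Hypothetical log messages keyed by document ID.
logs = {
    1: "Connection timeout on node-a",
    2: "Disk usage warning on node-b",
    3: "Connection refused by node-b",
}
index = build_inverted_index(logs)
print(sorted(search(index, "connection node")))
```

The lookup touches only the entries for the query terms, which is why the cost of a search does not grow with a full scan of every document.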

Elasticsearch’s Key Features and Benefits

Elasticsearch offers a wide array of features and benefits that make it a powerful log management tool. The following table summarizes some of its key aspects:

| Feature | Description | Benefit | Example |
|---|---|---|---|
| Distributed Architecture | Elasticsearch is designed to be distributed, allowing data to be stored across multiple nodes. | Ensures high availability and scalability; if one node fails, the data is still accessible from other nodes. | A cluster with three nodes can continue operating even if one node goes down, maintaining search and indexing capabilities. |
| Real-time Search and Analytics | Provides near real-time search and analytics capabilities. | Enables quick identification of issues and trends within log data. | Security analysts can quickly search for suspicious activity patterns in logs and take immediate action. |
| Schema-free | Allows for flexible data ingestion without requiring a predefined schema. | Simplifies the onboarding of new data sources and reduces the overhead of schema management. | Logs from different applications with varying formats can be ingested without strict schema constraints. |
| RESTful API | Exposes a RESTful API for easy interaction and integration with other systems. | Facilitates automation and integration with various tools and platforms. | Allows developers to easily integrate Elasticsearch into their applications for log analysis and reporting. |
| Scalability | Designed to scale horizontally by adding more nodes to the cluster. | Accommodates growing data volumes and increasing search demands. | A company experiencing rapid growth can easily scale its Elasticsearch cluster to handle the increasing volume of logs generated. |
| Full-text Search | Offers powerful full-text search capabilities, including stemming, synonyms, and relevance scoring. | Enables users to find relevant information quickly and efficiently. | Searching for “network errors” will return results that include logs containing “network error”, “network issues”, and similar phrases. |

Handling Scalability and Performance in Elasticsearch

Elasticsearch is designed with scalability and performance in mind. Its architecture allows it to handle large volumes of data and complex search queries efficiently. Several factors contribute to its scalability and performance.

- Distributed Indexing: Elasticsearch distributes the index across multiple shards, which are independent units of an index. Each shard can be stored on a different node in the cluster. This distribution allows for parallel processing of search queries, significantly improving performance.

- Horizontal Scalability: Elasticsearch can be scaled horizontally by adding more nodes to the cluster. As data volume and search demands increase, more nodes can be added to handle the load. For many workloads this gives near-linear scalability, meaning that added resources translate fairly directly into added capacity.

- Replication: Data is replicated across multiple nodes to ensure high availability and data durability. If a node fails, the data is still available on the replicated nodes.

- Caching: Elasticsearch uses caching mechanisms to improve performance. Caches store frequently accessed data, reducing the need to access the disk for every search query. There are different types of caches, including the node query cache, the shard request cache, and the field data cache.

- Query Optimization: Elasticsearch provides various query optimization techniques, such as using filters to narrow down search results, using aggregations to summarize data, and optimizing data modeling to improve search efficiency.

These features and capabilities make Elasticsearch a robust and efficient solution for log management, enabling organizations to effectively store, search, and analyze large volumes of log data. For example, a large e-commerce company, by leveraging Elasticsearch’s scalability, can handle massive amounts of transaction logs, user activity data, and server logs generated during peak sales periods, ensuring that its search and analysis capabilities remain responsive and reliable.
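
How documents end up spread across shards can be sketched in a few lines of Python. The general idea is that a hash of a routing value (by default the document ID) is reduced modulo the number of primary shards. The `crc32` hash below is only a stand-in chosen for illustration, and `pick_shard` is a hypothetical helper; Elasticsearch uses its own hash function and additional routing details internally.

```python
import zlib
from collections import Counter

def pick_shard(routing_key, num_primary_shards):
    """Choose a shard by hashing the routing key (crc32 is a stand-in hash)."""
    digest = zlib.crc32(routing_key.encode("utf-8"))
    return digest % num_primary_shards

# Hypothetical document IDs spread over a three-shard index.
doc_ids = [f"log-{n}" for n in range(1000)]
distribution = Counter(pick_shard(doc_id, 3) for doc_id in doc_ids)
print(dict(distribution))
```

Because the result depends on the shard count, the number of primary shards is normally fixed when an index is created, while replicas and nodes can be added later.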

Logstash

Logstash is a powerful, open-source data ingestion pipeline that allows you to collect data from various sources, transform it, and send it to your desired destination. It’s a crucial component of the ELK Stack, acting as the bridge between your diverse data sources and Elasticsearch. Its flexibility and robust feature set make it ideal for handling the complexities of modern log management.

Logstash’s primary function is to collect, parse, and transform log data.

It ingests data from various sources, including application logs, system logs, and network devices. It then parses the data, extracts relevant information, and transforms it into a structured format suitable for analysis in Elasticsearch. This structured data is then sent to the output, often Elasticsearch, for indexing and storage.

Logstash: Data Ingestion and Processing

Logstash uses a pipeline architecture consisting of three stages: inputs, filters, and outputs. Inputs collect data from various sources, filters process and transform it, and outputs send the processed data to its destination, usually Elasticsearch.

Here are examples of common Logstash input, filter, and output configurations:

- Input Configuration: This configuration defines how Logstash receives data. For example, to receive data from a file:

    input {
      file {
        path => "/var/log/apache2/access.log"
        start_position => "beginning"
      }
    }

This configuration instructs Logstash to read the Apache access logs from the specified file path. The `start_position` parameter tells Logstash to read a newly discovered file from the beginning rather than only tailing new lines; files it has already tracked resume from the last recorded position.

- Filter Configuration: This configuration defines how Logstash processes and transforms the data. For example, to parse Apache access logs:

    filter {
      grok {
        # USER (rather than WORD) is used for ident so that the usual "-" placeholder matches
        match => { "message" => "%{IP:clientip} %{USER:ident} %{USER:auth} \[%{HTTPDATE:timestamp}\] \"%{WORD:verb} %{URIPATHPARAM:request} HTTP/%{NUMBER:httpversion}\" %{NUMBER:response} %{NUMBER:bytes}" }
      }
      date {
        match => [ "timestamp", "dd/MMM/yyyy:HH:mm:ss Z" ]
      }
    }

This configuration uses the `grok` filter to parse the log messages and extract relevant fields like client IP, timestamp, and HTTP verb.

The `date` filter then parses the timestamp into a proper date format.

- Output Configuration: This configuration defines where Logstash sends the processed data. For example, to send data to Elasticsearch:

    output {
      elasticsearch {
        hosts => ["http://localhost:9200"]
        index => "apache-access-logs-%{+YYYY.MM.dd}"
      }
    }

This configuration sends the processed data to an Elasticsearch instance running on `localhost` at port 9200. The `index` parameter defines the index name in Elasticsearch. Here it produces one index per day, named after each event's timestamp (e.g., `apache-access-logs-2024.10.27`).
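
To make the filter and output steps more concrete, here is a rough Python sketch of what they do to a single access-log line. It is not Logstash code: the regular expression is a simplified stand-in for the grok pattern, the sample line is invented, and `parse_access_line` and `daily_index` are hypothetical helper names.

```python
import re
from datetime import datetime, timezone

# Simplified stand-in for the grok pattern; group names mirror the filter example.
ACCESS_LOG = re.compile(
    r'(?P<clientip>\S+) (?P<ident>\S+) (?P<auth>\S+) '
    r'\[(?P<timestamp>[^\]]+)\] '
    r'"(?P<verb>[A-Z]+) (?P<request>\S+) HTTP/(?P<httpversion>[\d.]+)" '
    r'(?P<response>\d{3}) (?P<bytes>\d+)'
)

def parse_access_line(line):
    """Turn one raw access-log line into a structured event, or None if it does not match."""
    match = ACCESS_LOG.match(line)
    if match is None:
        return None
    event = match.groupdict()
    # Convert numeric fields, as a filter stage would.
    event["response"] = int(event["response"])
    event["bytes"] = int(event["bytes"])
    # Equivalent of the date filter: parse the Apache timestamp format.
    event["@timestamp"] = datetime.strptime(event["timestamp"], "%d/%b/%Y:%H:%M:%S %z")
    return event

def daily_index(prefix, timestamp):
    """Build a per-day index name from the event timestamp (in UTC)."""
    return f"{prefix}-{timestamp.astimezone(timezone.utc).strftime('%Y.%m.%d')}"

sample = '192.168.1.10 - frank [27/Oct/2024:13:55:36 +0000] "GET /index.html HTTP/1.1" 200 2326'
event = parse_access_line(sample)
print(event["clientip"], event["verb"], event["response"])
print(daily_index("apache-access-logs", event["@timestamp"]))
```

Lines that do not match return `None` here; in a real pipeline they would typically be tagged as parse failures rather than silently dropped.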

Logstash offers a wide array of data processing capabilities, making it a versatile tool for log management.

- Data Enrichment: Logstash can enrich log data with additional information, such as geolocation data from IP addresses or user agent details. This allows for more in-depth analysis.

- Data Transformation: Logstash can transform data into a structured format. This may include converting data types, removing sensitive information, or calculating new fields based on existing data.

- Data Filtering: Logstash can filter out irrelevant or noisy data, reducing the amount of data stored and improving the efficiency of analysis.

- Data Aggregation: Logstash can aggregate data to provide summaries and insights. This can involve counting events, calculating averages, or creating histograms.

- Conditional Processing: Logstash supports conditional processing, allowing different actions to be taken based on the content of the log data.

- Data Routing: Logstash can route data to different outputs based on its content. For example, it can send error logs to one index and informational logs to another.
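
Filtering, transformation, and routing can be pictured as a small function applied to each event. The Python sketch below only illustrates the logic; a real deployment would express it in Logstash configuration. The `level` field and the index names `app-errors` and `app-info` are assumptions made for the example.

```python
def process(event):
    """Drop noisy events, normalize the level, and pick a target index."""
    level = str(event.get("level", "INFO")).upper()
    if level == "DEBUG":
        return None  # filtering: discard noisy debug events
    enriched = dict(event, level=level)  # transformation: normalized level
    # Routing: errors go to one index, everything else to another.
    if level in {"ERROR", "FATAL"}:
        enriched["target_index"] = "app-errors"
    else:
        enriched["target_index"] = "app-info"
    return enriched

events = [
    {"level": "debug", "message": "cache miss"},
    {"level": "error", "message": "payment failed"},
    {"message": "user logged in"},
]
for raw in events:
    print(process(raw))
```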

Kibana

Kibana is the visualization and exploration component of the ELK Stack. It provides a user-friendly interface for interacting with the data stored in Elasticsearch, enabling users to gain insights through interactive dashboards, visualizations, and exploration tools. Kibana transforms raw log data into meaningful and actionable information.

Kibana’s Role in Visualizing and Exploring Log Data

Kibana serves as the visual front-end for the ELK Stack, allowing users to analyze and understand their data stored in Elasticsearch. It facilitates the creation of dashboards, visualizations, and saved searches, enabling efficient log analysis, performance monitoring, and anomaly detection. The platform allows for the following key functionalities:

- Data Exploration: Kibana provides tools to explore the data stored in Elasticsearch, allowing users to filter, search, and aggregate data to identify trends and patterns.

- Visualization Creation: Users can create a variety of visualizations, such as charts, graphs, and maps, to represent their data visually. This makes it easier to understand complex data and identify insights.

- Dashboard Design: Kibana allows users to create and customize dashboards, which combine multiple visualizations and other elements into a single, interactive view. These dashboards provide a comprehensive overview of the data and enable users to monitor key metrics.

- Alerting and Monitoring: Kibana can be integrated with other tools to provide alerting capabilities, enabling users to be notified of critical events or anomalies in their data.

Creating Different Types of Visualizations in Kibana

Kibana offers a wide range of visualization types, each designed to represent data in a specific way. These visualizations are created within the “Visualize” section of Kibana. Users can choose from various options, configuring the visualization based on their data and analytical goals. Common visualization types include:

- Area Chart: Used to display trends over time, highlighting the magnitude of change. An area chart can effectively illustrate the growth or decline of website traffic over a period.

- Line Chart: Similar to an area chart but focuses on the trend line, useful for tracking metrics like server response times. A line chart can show the fluctuations in CPU usage of a server over a day.

- Bar Chart: Represents data using rectangular bars, ideal for comparing values across different categories. A bar chart can show the number of errors by different application modules.

- Pie Chart: Displays data as slices of a circle, showing the proportion of each category to the whole. A pie chart can show the percentage of different log levels (e.g., INFO, WARN, ERROR) in the logs.

- Data Table: Presents data in a tabular format, useful for displaying detailed information. A data table can show the list of the most frequent HTTP error codes.

- Metric: Displays a single number, representing a key metric, such as the total number of errors. This is useful for highlighting important statistics.

- Map: Visualizes geographical data, such as the location of events or the distribution of users. A map can display the geographic origin of website visitors.

Each visualization type allows users to specify the data source (index pattern), the aggregation method (e.g., count, sum, average), and other configuration options, such as the fields to display, the time range, and the styling. The creation process typically involves selecting the visualization type, choosing the index pattern, defining the aggregation, and customizing the appearance.
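
A visualization of this kind ultimately corresponds to an Elasticsearch search request with aggregations. As a rough sketch, the Python snippet below builds such a request body for "requests per minute, split by status code". The field names `@timestamp` and `response` are assumptions carried over from the Apache example, the function name is hypothetical, and `fixed_interval` assumes a reasonably recent Elasticsearch version.

```python
import json

def requests_per_interval_query(time_field="@timestamp", interval="1m",
                                status_field="response"):
    """Build a search body: a date histogram with a terms sub-aggregation."""
    return {
        "size": 0,  # only aggregation results are needed, not individual hits
        "query": {"range": {time_field: {"gte": "now-1h"}}},
        "aggs": {
            "per_interval": {
                "date_histogram": {"field": time_field, "fixed_interval": interval},
                "aggs": {
                    "by_status": {"terms": {"field": status_field}}
                },
            }
        },
    }

body = requests_per_interval_query()
print(json.dumps(body, indent=2))
```

The printed JSON could be sent to an index's `_search` endpoint; Kibana generates comparable requests when a chart is configured through its interface.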

Setting Up a Kibana Dashboard for Monitoring

Creating a Kibana dashboard involves combining multiple visualizations to provide a comprehensive overview of key metrics. The dashboard is designed to monitor specific aspects of the data and can be customized to meet the needs of the user. The following steps outline the general process:

1. Plan the Dashboard: Determine the key metrics and visualizations that need to be displayed on the dashboard. Consider the information that is most important for monitoring.

2. Create Visualizations: Create individual visualizations based on the data and the metrics being monitored. Ensure each visualization is configured correctly to display the relevant data.

3. Add Visualizations to the Dashboard: Create a new dashboard and add the visualizations that were created. Arrange the visualizations in a way that makes sense for the user, considering the flow of information.

4. Customize the Dashboard: Adjust the size and position of the visualizations. Add titles, descriptions, and other elements to improve the clarity and usability of the dashboard.

5. Set Time Range: Configure the time range for the dashboard to ensure that the data being displayed is relevant and up-to-date.

6. Save and Share: Save the dashboard and share it with other users. Consider creating multiple dashboards for different monitoring needs.

For example, a dashboard for monitoring web server performance might include a line chart showing the average response time, a bar chart showing the number of requests per minute, and a metric showing the total number of errors. By combining these visualizations, users can quickly identify performance issues and troubleshoot problems. Another example includes creating a dashboard to monitor application performance metrics, such as transaction response times, error rates, and throughput.

By including visualizations such as line charts to track response times over time, bar charts to show error distributions, and metric visualizations to highlight key performance indicators (KPIs), users can quickly identify performance bottlenecks and trends.

Log Management Challenges

Managing logs effectively is crucial for operational efficiency, security, and compliance. Before the advent of sophisticated log management solutions like the ELK Stack, organizations often grappled with numerous challenges that hindered their ability to derive value from their log data. These challenges stemmed from the sheer volume of data, the diversity of sources, and the limitations of existing tools.

Addressing these challenges is essential for organizations aiming to improve their IT infrastructure’s performance, security posture, and overall reliability. The ELK Stack provides a powerful and flexible solution that overcomes many of these obstacles, offering a significant improvement over traditional log management approaches.

Data Volume and Scalability

Organizations generate vast amounts of log data from various sources, including servers, applications, network devices, and security systems. Managing this volume effectively presents a significant challenge.

The challenges include:

- Storage Limitations: Traditional log management systems often struggle to store the massive volumes of data generated by modern IT environments. Limited storage capacity can lead to data loss or the need to implement complex data retention policies.

- Performance Bottlenecks: Querying and analyzing large datasets can be slow and resource-intensive. This can impact the ability to quickly identify and respond to critical events.

- Scalability Issues: As data volumes grow, traditional systems may become increasingly difficult and expensive to scale, requiring significant hardware upgrades or complex infrastructure changes.

Data Diversity and Format Inconsistencies

Log data comes from a wide variety of sources, each with its own unique format and structure. This heterogeneity makes it difficult to aggregate, normalize, and analyze the data effectively.

The complexities include:

- Format Variations: Logs may be structured, semi-structured, or unstructured, and use different delimiters, timestamps, and field names.

- Data Silos: Data from different sources is often stored in isolated silos, making it challenging to correlate events and gain a comprehensive view of the system.

- Manual Parsing and Transformation: Manually parsing and transforming log data to make it usable is time-consuming, error-prone, and difficult to scale.

Search and Analysis Capabilities

Extracting meaningful insights from log data requires powerful search and analysis capabilities. Traditional tools often lack the flexibility and features needed to perform complex queries and visualizations.

The shortcomings encompass:

- Limited Search Functionality: Basic search tools may struggle to handle complex search queries or provide advanced filtering options.

- Lack of Data Correlation: The ability to correlate events from different sources is often limited, hindering the ability to identify root causes and understand the relationships between different system components.

- Insufficient Visualization Tools: Visualizing log data is crucial for identifying trends and patterns. Traditional tools may offer limited visualization options, making it difficult to gain a clear understanding of the data.

Cost and Complexity

Implementing and maintaining traditional log management solutions can be expensive and complex, requiring significant investment in hardware, software, and skilled personnel.

The drawbacks encompass:

- High Implementation Costs: Traditional solutions often require significant upfront investment in hardware, software licenses, and professional services.

- Complex Configuration and Management: Configuring and managing these systems can be complex, requiring specialized knowledge and expertise.

- Vendor Lock-in: Some traditional solutions can create vendor lock-in, making it difficult to switch to alternative solutions or integrate with other systems.

Security and Compliance

Organizations must comply with various security and compliance regulations, which often require them to collect, store, and analyze log data for auditing and reporting purposes. Meeting these requirements can be challenging with traditional log management solutions.

The considerations include:

- Data Retention: Regulations often mandate specific data retention periods, requiring organizations to store log data for extended periods.

- Security Vulnerabilities: Traditional systems may have security vulnerabilities that can expose log data to unauthorized access or modification.

- Compliance Reporting: Generating reports to demonstrate compliance with regulatory requirements can be time-consuming and difficult with traditional tools.
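
With time-based indices, a retention period translates into a simple rule: indices whose date is older than the cutoff become candidates for deletion or archiving. The Python sketch below illustrates that rule for daily index names in the `prefix-YYYY.MM.dd` style. The helper name and the sample index names are hypothetical, and in practice Elasticsearch's index lifecycle management features would usually handle this.

```python
from datetime import date, datetime, timedelta

def expired_indices(index_names, today, retention_days,
                    prefix="apache-access-logs-"):
    """Return daily indices whose date is older than the retention period."""
    cutoff = today - timedelta(days=retention_days)
    expired = []
    for name in index_names:
        if not name.startswith(prefix):
            continue  # not one of the indices we manage
        try:
            day = datetime.strptime(name[len(prefix):], "%Y.%m.%d").date()
        except ValueError:
            continue  # suffix is not a date; leave the index alone
        if day < cutoff:
            expired.append(name)
    return expired

names = [
    "apache-access-logs-2024.10.01",
    "apache-access-logs-2024.10.27",
    "other-2024.01.01",
    "apache-access-logs-bad",
]
print(expired_indices(names, date(2024, 10, 28), retention_days=7))
```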

Addressing the Challenges with the ELK Stack

The ELK Stack, comprising Elasticsearch, Logstash, and Kibana, offers a comprehensive solution to the log management challenges outlined above. It addresses these challenges through its robust architecture, powerful features, and flexible design.

How the ELK Stack addresses the challenges:

- Scalability and Performance: Elasticsearch is designed for scalability and can handle massive volumes of data. Its distributed architecture allows for horizontal scaling, adding more nodes to the cluster as data volumes grow. The use of inverted indexes enables fast search and analysis.

- Data Ingestion and Transformation: Logstash acts as a data ingestion pipeline, collecting logs from various sources, parsing and transforming them into a consistent format. It supports a wide range of input plugins and provides powerful filtering and processing capabilities.

- Advanced Search and Analysis: Elasticsearch provides a powerful search engine with advanced query capabilities, including full-text search, aggregations, and geospatial analysis. Kibana provides a user-friendly interface for visualizing and exploring log data, enabling users to identify trends, patterns, and anomalies.

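- Cost-Effectiveness: The core components of the ELK Stack are freely available, which can significantly reduce the cost of ownership compared to commercial log management solutions (license terms have changed across versions, so verify them for the release you deploy). It can be deployed on commodity hardware or in the cloud, further reducing costs.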

- Security and Compliance: Elasticsearch offers robust security features, including user authentication, role-based access control, and encryption. It supports data retention policies and can be integrated with security information and event management (SIEM) systems.

Log Management Solutions: Before and After ELK Stack Implementation

Comparing log management solutions before and after implementing the ELK Stack reveals significant improvements in various aspects.

Before ELK Stack:

- Limited Scalability: Traditional solutions often struggled to handle large data volumes, leading to performance issues and data loss.

- Difficult Data Integration: Integrating data from multiple sources was challenging, requiring manual parsing and transformation.

- Basic Search and Analysis: Search capabilities were limited, and visualization tools were often rudimentary.

- High Cost and Complexity: Implementation and maintenance were expensive and complex.

After ELK Stack:

- Scalable and High-Performance: Elasticsearch’s distributed architecture allows for handling massive data volumes with excellent performance.

- Simplified Data Integration: Logstash streamlines data ingestion and transformation, supporting various input plugins and filtering options.

- Advanced Search and Visualization: Elasticsearch provides powerful search capabilities, and Kibana offers a user-friendly interface for visualizing and analyzing log data.

- Cost-Effective and Flexible: The open-source nature of the ELK Stack reduces costs, and its flexible design allows for easy customization and integration.

This comparison highlights the transformative impact of the ELK Stack on log management, enabling organizations to overcome the challenges associated with traditional approaches and gain valuable insights from their log data.

Benefits of Using the ELK Stack

The ELK Stack (Elasticsearch, Logstash, and Kibana) offers a powerful and versatile solution for log management, providing numerous advantages for organizations of all sizes. By centralizing, analyzing, and visualizing log data, the ELK Stack enables businesses to gain valuable insights, improve operational efficiency, and proactively address potential issues.

Improved Operational Efficiency

The ELK Stack streamlines various operational processes, leading to significant improvements in efficiency. This is achieved through automation, faster troubleshooting, and proactive monitoring.

- Centralized Log Management: The ELK Stack consolidates logs from diverse sources, including servers, applications, and network devices, into a single, searchable repository. This eliminates the need to manually sift through individual log files on various systems, saving time and effort.

- Faster Troubleshooting: Elasticsearch’s powerful search capabilities enable rapid identification of the root cause of issues. Instead of manually reviewing log entries, operators can use search queries, filters, and aggregations to pinpoint the exact events contributing to a problem. This dramatically reduces the mean time to resolution (MTTR).

- Automated Alerting: Kibana’s alerting features allow organizations to set up real-time notifications based on specific log patterns or anomalies. This enables proactive issue detection and prevents potential outages or security breaches. For instance, an alert can be triggered if a server’s CPU usage exceeds a predefined threshold, allowing administrators to investigate and resolve the issue before it impacts users.

- Simplified Monitoring: The ELK Stack provides comprehensive dashboards and visualizations that offer a real-time overview of system performance and application health. These dashboards can display key metrics such as error rates, request latency, and resource utilization, allowing operators to quickly identify trends and anomalies.
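
The logic behind a threshold alert is straightforward and can be sketched in Python. This is only an illustration of the rule an alert evaluates, not Kibana's alerting API; the 5% threshold, the `response` field, and the function names are assumptions.

```python
def error_rate(events):
    """Fraction of events whose HTTP status is a server error (5xx)."""
    if not events:
        return 0.0
    errors = sum(1 for e in events if e.get("response", 0) >= 500)
    return errors / len(events)

def should_alert(events, threshold=0.05):
    """Fire when the error rate in the evaluated window exceeds the threshold."""
    return error_rate(events) > threshold

# Hypothetical window of 100 requests, 10 of which failed with a 500.
window = [{"response": 200}] * 90 + [{"response": 500}] * 10
print(error_rate(window), should_alert(window))
```

An alerting rule would evaluate a check like this on a schedule over a recent time window and notify a channel when it fires.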

Enhanced Security and Compliance

The ELK Stack plays a crucial role in enhancing security posture and ensuring compliance with industry regulations. By providing tools for security monitoring, threat detection, and audit logging, the ELK Stack empowers organizations to proactively protect their systems and data.

- Security Monitoring: The ELK Stack facilitates continuous security monitoring by collecting and analyzing security-related logs, such as authentication attempts, access logs, and intrusion detection system (IDS) alerts. This allows security teams to identify suspicious activities, investigate potential threats, and take appropriate action.

- Threat Detection: Using Elasticsearch’s analytical capabilities, security teams can detect threats by identifying patterns and anomalies in log data. For example, they can identify unusual login attempts, suspicious network traffic, or malware infections. This enables proactive threat hunting and incident response.

- Compliance: The ELK Stack supports compliance efforts by providing the necessary tools for audit logging, reporting, and data retention. Organizations can use the stack to collect and store audit logs, generate compliance reports, and meet regulatory requirements, such as those outlined by PCI DSS, HIPAA, and GDPR.

- User Behavior Analytics (UBA): By analyzing user activity logs, the ELK Stack can identify anomalous user behavior that may indicate a security breach. This helps organizations detect compromised accounts and prevent data breaches. For example, if a user suddenly accesses sensitive data from an unusual location or at an unusual time, an alert can be triggered for further investigation.

Improved Business Intelligence

Beyond operational and security benefits, the ELK Stack empowers businesses to gain valuable insights from their log data, driving data-driven decision-making and improving overall business intelligence.

- Data-Driven Decision Making: By analyzing application logs, organizations can identify performance bottlenecks, user behavior patterns, and areas for improvement. This information can be used to optimize applications, improve user experience, and make data-driven decisions about product development and marketing strategies.

- Performance Monitoring: The ELK Stack provides real-time performance monitoring, allowing organizations to track key performance indicators (KPIs) such as website traffic, transaction volumes, and error rates. This information can be used to identify performance issues, optimize system resources, and improve the overall user experience.

- Trend Analysis: By analyzing historical log data, organizations can identify trends and patterns in user behavior, system performance, and security threats. This information can be used to forecast future trends, proactively address potential issues, and make informed decisions about resource allocation and business strategy.

- Real-time Analytics: The ELK Stack enables real-time analytics by providing the ability to analyze log data as it is generated. This allows organizations to gain immediate insights into their systems and applications, enabling them to respond quickly to changing conditions and make data-driven decisions in real-time.
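As a rough illustration of the trend analysis described above, the sketch below builds an Elasticsearch search request body that counts error-level log entries per day. The field names `@timestamp` and `log.level` and the helper name `daily_error_trend_query` are assumptions made for this example; adjust them to your own mappings.

```python
import json


def daily_error_trend_query(days: int = 30) -> dict:
    """Build a search body that buckets error logs by calendar day.

    Assumes (hypothetically) that documents carry an `@timestamp` date
    field and a `log.level` keyword field.
    """
    return {
        "size": 0,  # only the aggregation buckets are needed, not the hits
        "query": {
            "bool": {
                "filter": [
                    {"term": {"log.level": "error"}},
                    {"range": {"@timestamp": {"gte": f"now-{days}d/d"}}},
                ]
            }
        },
        "aggs": {
            "errors_per_day": {
                "date_histogram": {
                    "field": "@timestamp",
                    "calendar_interval": "day",
                }
            }
        },
    }


if __name__ == "__main__":
    print(json.dumps(daily_error_trend_query(7), indent=2))
```

The resulting JSON can be sent to an index's `_search` endpoint or pasted into Kibana's Dev Tools console; a Kibana line chart over the same field gives the equivalent view without writing the query by hand.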

Real-World Examples of ELK Stack Benefits

Numerous organizations across various industries have successfully leveraged the ELK Stack to achieve significant benefits. Here are a few examples:

- E-commerce: An e-commerce company used the ELK Stack to analyze website logs, identify performance bottlenecks, and optimize its online store. As a result, they improved website loading times, reduced cart abandonment rates, and increased revenue. The visualization dashboards provided insights into customer behavior, such as popular product searches, purchase patterns, and areas where users encountered issues.

- Financial Services: A financial institution implemented the ELK Stack to monitor security logs, detect fraudulent activities, and ensure compliance with regulatory requirements. The stack enabled the institution to identify and respond to suspicious login attempts, unusual transaction patterns, and other security threats, reducing the risk of financial losses and protecting customer data.

- Healthcare: A healthcare provider utilized the ELK Stack to monitor application logs, track patient data access, and improve the performance of its electronic health record (EHR) system. The stack helped them identify and resolve performance issues, ensure data security, and improve the overall patient experience.

- Software as a Service (SaaS): A SaaS company employed the ELK Stack to monitor application performance, track user activity, and identify areas for product improvement. The stack provided insights into user behavior, allowing the company to optimize its platform, improve user engagement, and reduce customer churn.

ELK Stack Use Cases

The ELK Stack, due to its versatility and powerful capabilities, finds application across a wide spectrum of industries and operational needs. Its ability to ingest, process, analyze, and visualize data makes it an invaluable tool for various use cases, ranging from security and application monitoring to business intelligence. The following sections will delve into specific examples of how the ELK Stack is employed to address critical challenges and improve operational efficiency.

Security Information and Event Management (SIEM)

The ELK Stack is frequently utilized as a powerful SIEM solution, providing organizations with a centralized platform for security monitoring, threat detection, and incident response. This involves collecting security-related data from diverse sources, such as firewalls, intrusion detection systems (IDS), web servers, and endpoint security agents. This data is then analyzed to identify potential security threats and vulnerabilities.

The process typically involves these steps:

- Data Ingestion: Logstash is configured to collect security logs from various sources, parsing and transforming them into a consistent format. This ensures that data from different vendors and systems can be analyzed together.

- Data Processing: Logstash applies filters to enrich the data, such as adding geographical information based on IP addresses or performing threat intelligence lookups. This enhances the context of the logs, making them more valuable for analysis.

- Indexing and Storage: Elasticsearch indexes the processed logs, making them searchable and readily available for analysis. The indexing process optimizes the retrieval of relevant data.

- Analysis and Alerting: Kibana is used to visualize the security data and create dashboards that provide insights into security events. Alerts can be configured based on specific criteria, such as suspicious login attempts or unusual network traffic patterns.

- Incident Response: When an alert is triggered, security analysts can use Kibana to investigate the incident, identify the scope of the attack, and take appropriate actions to mitigate the threat. This might involve blocking malicious IP addresses, isolating compromised systems, or resetting user passwords.

For example, a financial institution might use the ELK Stack to monitor for fraudulent transactions. By analyzing transaction logs, the system can identify suspicious activities, such as unusually large transactions or transactions originating from unfamiliar locations. This enables the institution to quickly detect and prevent financial fraud.
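The detection logic behind such an alert can be sketched in a few lines. The example below is a simplified stand-in, not how Kibana evaluates rules: it counts failed logins per source address in a batch of already-parsed events and reports the addresses above a limit. The keys `source_ip` and `outcome` and the function name are hypothetical.

```python
from collections import Counter
from typing import Dict, Iterable


def suspicious_sources(events: Iterable[dict], max_failures: int = 5) -> Dict[str, int]:
    """Return source IPs whose failed-login count exceeds `max_failures`.

    Each event is assumed to be a parsed log record with the
    hypothetical keys `source_ip` and `outcome`.
    """
    failures = Counter(
        event.get("source_ip", "unknown")
        for event in events
        if event.get("outcome") == "failure"
    )
    return {ip: count for ip, count in failures.items() if count > max_failures}


if __name__ == "__main__":
    sample = (
        [{"source_ip": "203.0.113.7", "outcome": "failure"}] * 6
        + [{"source_ip": "198.51.100.2", "outcome": "failure"}] * 2
        + [{"source_ip": "198.51.100.2", "outcome": "success"}]
    )
    print(suspicious_sources(sample))
```

In a real deployment the same grouping would normally be expressed as an aggregation over a time window in Elasticsearch, with the threshold configured in the alert rule.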

Application Monitoring and Troubleshooting

The ELK Stack is a critical tool for application monitoring and troubleshooting, providing valuable insights into application performance and identifying the root causes of issues. It helps developers and operations teams to proactively identify and resolve problems before they impact users.

The application monitoring process typically involves these steps:

- Log Collection: Application logs are collected from various sources, including application servers, databases, and web servers. Logstash is used to collect and parse these logs, ensuring a consistent format.

- Performance Metrics Collection: Besides logs, metrics related to application performance, such as response times, error rates, and resource utilization, are collected. These metrics are often collected using tools like Metricbeat, which sends the data to Elasticsearch.

- Data Indexing: Elasticsearch indexes the logs and metrics, making them searchable and enabling efficient analysis.

- Visualization and Analysis: Kibana is used to create dashboards and visualizations that provide a comprehensive view of application performance. These dashboards can display key performance indicators (KPIs), such as response times, error rates, and transaction volumes.

- Alerting: Alerts can be configured to notify teams when performance thresholds are exceeded or when critical errors occur. This allows for proactive problem resolution.

- Troubleshooting: When an issue is detected, the ELK Stack provides the tools to quickly identify the root cause. By searching and analyzing logs, teams can pinpoint the specific events that led to the problem. For instance, a sudden increase in error logs can be traced back to a recent code deployment.

For example, an e-commerce company can use the ELK Stack to monitor the performance of its website. If users experience slow loading times or frequent errors, the ELK Stack can help identify the cause. This might involve analyzing logs to identify database bottlenecks, network issues, or code errors. By quickly identifying and resolving these issues, the company can improve the user experience and prevent lost revenue.

The ELK Stack allows for real-time monitoring, enabling the identification of performance degradation, which, if left unaddressed, could lead to significant revenue loss. For example, a website experiencing a 10% increase in load times could translate to a noticeable decrease in sales, highlighting the critical importance of effective monitoring.
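To make the KPIs mentioned above concrete, here is a minimal sketch of how an error rate and a latency percentile could be computed from parsed access-log records. It runs locally on plain lists and is only meant to show the arithmetic; in practice Elasticsearch aggregations would compute these values. The function names are hypothetical.

```python
import math
from typing import Sequence


def error_rate(status_codes: Sequence[int]) -> float:
    """Fraction of requests that ended in a 5xx status code."""
    if not status_codes:
        return 0.0
    errors = sum(1 for code in status_codes if 500 <= code <= 599)
    return errors / len(status_codes)


def percentile(values: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile (pct between 0 and 100) of a non-empty sequence."""
    if not values:
        raise ValueError("values must not be empty")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


if __name__ == "__main__":
    codes = [200, 200, 500, 503]
    latencies_ms = [100, 200, 300, 400, 500, 600, 700, 800, 900, 1000]
    print(error_rate(codes), percentile(latencies_ms, 95))
```

Tracking a high percentile alongside the average is a common choice because a small share of very slow requests can hide behind a healthy-looking mean.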

Architecture and Deployment

The ELK Stack’s power lies not only in its individual components but also in how they are architected and deployed. Understanding the different architectural options and deployment strategies is crucial for building a scalable, reliable, and performant log management solution. This section explores the various deployment architectures, scaling considerations, and the steps involved in setting up a basic ELK Stack.

Different Deployment Architectures for the ELK Stack

The architecture of your ELK Stack deployment significantly impacts its performance, scalability, and resilience. Several architectural patterns cater to different needs and scales.

- Single-Node Deployment: This is the simplest architecture, suitable for development, testing, or small-scale environments. All components (Elasticsearch, Logstash, and Kibana) run on a single server. While easy to set up, it lacks scalability and high availability. This architecture is ideal for learning and experimenting with the ELK Stack.

- Multi-Node Deployment: This architecture distributes the components across multiple servers, providing better scalability and fault tolerance.

  - Dedicated Nodes: Each component runs on its own dedicated server. For example, one server for Elasticsearch, another for Logstash, and a third for Kibana. This offers the best performance and isolation.

  - Combined Nodes: Components can be combined on the same server, such as running Logstash and Kibana on one server while Elasticsearch runs on another. This approach can be cost-effective but might impact performance if resources are constrained.

- Clustered Deployment: This architecture focuses on Elasticsearch clustering to achieve high availability and scalability. Multiple Elasticsearch nodes form a cluster, and data is replicated across the nodes. Logstash can be deployed as a centralized aggregator or distributed across multiple nodes. Kibana typically runs on one or more nodes and connects to the Elasticsearch cluster. This architecture is designed for production environments that require high uptime and handle large volumes of data.

- Containerized Deployment (e.g., Docker, Kubernetes): Containerization offers a flexible and scalable way to deploy the ELK Stack. Docker containers can package each component, simplifying deployment and management. Kubernetes provides orchestration capabilities, automating scaling, rolling updates, and self-healing. This approach is beneficial for cloud-native environments and allows for efficient resource utilization.

- Cloud-Based Deployment (e.g., Elasticsearch Service on AWS, Azure, GCP): Cloud providers offer managed ELK Stack services. These services handle infrastructure management, scaling, and maintenance, simplifying deployment and reducing operational overhead. These services typically offer features like automated backups, monitoring, and security enhancements. They are a good option for organizations that want to focus on log analysis rather than infrastructure management.

Considerations for Scaling an ELK Stack Deployment

Scaling an ELK Stack deployment involves adjusting the resources allocated to each component to handle increasing data volumes and user load. Several factors influence the scalability of an ELK Stack deployment.

- Horizontal Scaling: This involves adding more nodes to the Elasticsearch cluster to increase storage capacity and processing power. Elasticsearch is designed to scale horizontally, making it a key factor in scaling the ELK Stack.

- Vertical Scaling: This involves increasing the resources (CPU, RAM, disk) of individual nodes. While less common than horizontal scaling, it can be useful for improving performance on individual components.

- Data Storage: Adequate storage capacity is essential for handling the growing volume of log data. Consider using SSDs for faster read/write speeds and choosing a storage strategy that balances performance and cost.

- Indexing Performance: Optimize Elasticsearch indexing to ensure efficient data ingestion. This includes choosing the right data types, defining mappings, and using appropriate analyzers.

- Resource Allocation: Carefully allocate resources (CPU, RAM, disk I/O) to each component based on its workload. Monitoring resource utilization is crucial for identifying bottlenecks.

- Sharding and Replication: Configure Elasticsearch shards and replicas to distribute data across nodes and ensure high availability. Sharding divides an index into smaller, manageable units, while replication creates copies of shards for fault tolerance.

- Logstash Configuration: Optimize Logstash pipelines to handle high data ingestion rates. This may involve tuning the number of worker threads, buffering data, and using efficient filter plugins.

- Kibana Performance: Optimize Kibana’s performance by caching dashboards, using efficient visualizations, and tuning the Kibana server configuration.

- Monitoring and Alerting: Implement comprehensive monitoring and alerting to proactively identify and address performance issues. Use tools like Elasticsearch’s built-in monitoring features or third-party monitoring solutions.
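The sharding and replication point lends itself to a small worked example. The sketch below builds an index settings body using the `number_of_shards` and `number_of_replicas` settings and does the related arithmetic; the helper names are hypothetical, and suitable shard counts depend on your data volume and hardware.

```python
def index_settings(primary_shards: int, replicas: int) -> dict:
    """Settings body for creating an index with the given shard layout."""
    if primary_shards < 1 or replicas < 0:
        raise ValueError("need at least one primary shard and zero or more replicas")
    return {
        "settings": {
            "index": {
                "number_of_shards": primary_shards,
                "number_of_replicas": replicas,
            }
        }
    }


def total_shard_copies(primary_shards: int, replicas: int) -> int:
    """Every primary shard is stored once plus once per replica."""
    return primary_shards * (1 + replicas)


def min_nodes_for_full_allocation(replicas: int) -> int:
    """A replica is never placed on the same node as its primary,
    so each shard needs `replicas + 1` distinct nodes."""
    return replicas + 1


if __name__ == "__main__":
    print(index_settings(3, 1))
    print(total_shard_copies(3, 1), min_nodes_for_full_allocation(1))
```

This also explains why a single-node cluster with the default of one replica reports a yellow status: the replica copies have nowhere to go.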

Steps Involved in Deploying a Basic ELK Stack Setup

Deploying a basic ELK Stack setup involves installing and configuring each component and then integrating them to ingest and visualize log data. Here’s a simplified set of steps.

- Install Java (if required): Elasticsearch and Logstash run on the Java Virtual Machine. Recent releases ship with a bundled JDK, so a separate Java installation is usually unnecessary. If you run an older release or supply your own JVM, make sure its version is compatible with the version of Elasticsearch being installed.

- Install Elasticsearch: Download and install Elasticsearch. Configure the Elasticsearch node by editing the `elasticsearch.yml` configuration file. Define the cluster name, node name, and other settings like memory allocation.

- Install Logstash: Download and install Logstash. Configure the Logstash pipeline by creating a configuration file (`.conf`) that defines input, filter, and output stages. The input stage specifies how to receive log data, the filter stage processes and transforms the data, and the output stage sends the data to Elasticsearch.

- Install Kibana: Download and install Kibana. Configure Kibana to connect to the Elasticsearch cluster by editing the `kibana.yml` configuration file.

- Start the ELK Stack Components: Start Elasticsearch first, then Kibana and Logstash, since both depend on a reachable Elasticsearch instance. Check the logs of each component to ensure they are running without errors.

- Configure Logstash Input: Configure Logstash to receive log data from your log sources. This might involve the `beats` input plugin (for data shipped by Filebeat), the `syslog` input plugin, or other input plugins.

- Create an Index Pattern in Kibana: In Kibana, create an index pattern (called a data view in recent Kibana versions) that matches the index name in Elasticsearch where your logs are stored. This allows Kibana to access and visualize your data.

- Create Visualizations and Dashboards: Build visualizations and dashboards in Kibana to analyze your log data. This involves creating charts, graphs, and other visual representations of your data.

- Test and Monitor: Test your ELK Stack setup by sending log data and verifying that it is ingested and visualized correctly. Monitor the performance and resource utilization of each component to ensure optimal performance.
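For the final verification step, one quick check is Elasticsearch's cluster health API (`GET _cluster/health`). The sketch below fetches it and turns the response into a one-line summary. The URL `http://localhost:9200` is an assumption: recent Elasticsearch versions enable TLS and authentication by default, in which case the request needs credentials and an `https` URL. The function names are hypothetical.

```python
import json
import urllib.request


def fetch_cluster_health(base_url: str = "http://localhost:9200") -> dict:
    """Call the cluster health API (assumes an unsecured local node)."""
    with urllib.request.urlopen(f"{base_url}/_cluster/health", timeout=5) as resp:
        return json.load(resp)


def summarize_health(health: dict) -> str:
    """Turn a cluster health response into a short status line."""
    status = health.get("status", "unknown")
    nodes = health.get("number_of_nodes", 0)
    unassigned = health.get("unassigned_shards", 0)
    if status == "green":
        return f"OK: {nodes} node(s), all shards assigned"
    if status == "yellow":
        # Yellow means all primaries are allocated but some replicas are not.
        return f"WARN: {unassigned} replica shard(s) unassigned"
    if status == "red":
        # Red means at least one primary shard is unassigned.
        return f"CRITICAL: {unassigned} shard(s) unassigned, some data unavailable"
    return "UNKNOWN: no status in response"


if __name__ == "__main__":
    print(summarize_health(fetch_cluster_health()))
```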

Data Sources and Log Collection

Integrating diverse data sources and efficiently collecting logs is crucial for the ELK Stack’s effectiveness in log management. This section explores various data sources compatible with the ELK Stack and provides practical examples of log collection methods from different systems. Understanding these aspects allows for a comprehensive and centralized logging solution.

Data Sources Integrated with the ELK Stack

The ELK Stack supports integration with a wide array of data sources, enabling centralized logging and analysis across various systems and applications. This versatility is a key strength, allowing organizations to gather and analyze logs from a diverse infrastructure.

- Servers: Logs from operating systems (e.g., Linux, Windows) and server applications (e.g., web servers, database servers) are commonly collected.

- Applications: Application logs provide insights into application behavior, including errors, warnings, and informational messages.

- Network Devices: Network devices (e.g., routers, switches, firewalls) generate logs related to network traffic, security events, and performance metrics.

- Cloud Services: Logs from cloud platforms (e.g., AWS, Azure, GCP) provide information on resource usage, security events, and application performance within the cloud environment.

- Databases: Database logs contain information about database operations, queries, and errors, which are critical for performance monitoring and troubleshooting.

- Containers and Orchestration Platforms: Logs from containerized applications (e.g., Docker) and orchestration platforms (e.g., Kubernetes) offer visibility into container health, application performance, and resource utilization.

- Security Information and Event Management (SIEM) Systems: Integration with SIEM systems allows the ELK Stack to ingest security-related logs and correlate them with other data sources for threat detection and incident response.

Examples of Log Collection from Different Systems

Collecting logs effectively requires configuring appropriate agents and settings to ensure data is accurately captured and transmitted to the ELK Stack. Here are examples demonstrating how to collect logs from various systems.

- Collecting Logs from Linux Servers: Using Filebeat, a lightweight log shipper, to collect logs from `/var/log/syslog`, `/var/log/auth.log`, and application-specific log files. Filebeat monitors these files, ships new log lines to Logstash or Elasticsearch, and can also perform initial parsing. For instance, a configuration might specify the paths to log files and the Elasticsearch host and port.

- Collecting Logs from Windows Servers: Utilizing Winlogbeat to collect Windows Event Logs. Winlogbeat reads events from the Windows Event Log and sends them to the ELK Stack. Configuration includes specifying the event log channels to monitor (e.g., Application, System, Security) and the Elasticsearch connection details.

- Collecting Logs from Web Servers (e.g., Apache, Nginx): Configuring Filebeat to collect access and error logs. The configuration specifies the log file paths for the web server (e.g., `/var/log/apache2/access.log`, `/var/log/nginx/error.log`) and defines parsing rules to structure the logs into meaningful fields.

- Collecting Logs from Applications: Implementing logging libraries within applications (e.g., using Log4j in Java applications, or Python’s `logging` module). The application logs messages to files or standard output, which are then collected by Filebeat or Logstash. These logs are often structured using JSON or other formats for easier parsing.

- Collecting Logs from Cloud Services (e.g., AWS CloudTrail): AWS CloudTrail delivers its logs to an S3 bucket. From there they can be ingested into Elasticsearch via Logstash (for example with its `s3` input plugin) or an alternative ingestion mechanism, enabling analysis of API calls and user activity.
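As an illustration of the application-logging approach above, the sketch below configures Python's `logging` module to emit one JSON object per line, which Filebeat or Logstash can then parse without custom patterns. The class name `JsonFormatter`, the helper `build_logger`, and the chosen field names are assumptions for this example, not a required schema.

```python
import json
import logging
from datetime import datetime, timezone


class JsonFormatter(logging.Formatter):
    """Render each log record as a single-line JSON object."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "@timestamp": datetime.fromtimestamp(
                record.created, tz=timezone.utc
            ).isoformat(),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        if record.exc_info:
            # Keep the traceback in its own field so it stays on one line.
            payload["exception"] = self.formatException(record.exc_info)
        return json.dumps(payload)


def build_logger(name: str = "app") -> logging.Logger:
    """Create a logger that writes JSON lines to standard error."""
    logger = logging.getLogger(name)
    handler = logging.StreamHandler()
    handler.setFormatter(JsonFormatter())
    logger.addHandler(handler)
    logger.setLevel(logging.INFO)
    return logger


if __name__ == "__main__":
    build_logger().info("order %s placed", "A-1001")
```

Swapping `StreamHandler` for `logging.FileHandler` writes the same lines to a file that a shipper can tail.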

Common Log Formats and Logstash Handling

Logstash plays a critical role in parsing and processing logs from various formats. It uses a combination of input, filter, and output plugins to transform raw log data into structured, searchable documents.

- Plain Text: The simplest format, often used for application logs. Logstash uses regular expressions (regex) in its `grok` filter to parse plain text logs.

- JSON: A structured format that is easily parsed by Logstash. The `json` filter plugin is used to parse JSON-formatted logs. This format is preferred for its readability and ease of processing.

- CSV (Comma-Separated Values): Commonly used for tabular data. Logstash’s `csv` filter plugin is employed to parse CSV logs, specifying delimiters and field names.

- Syslog: A standard protocol for sending log messages. Logstash can receive Syslog messages with the `syslog` input plugin, or parse syslog-formatted lines from other inputs using grok patterns such as `SYSLOGLINE`.

- Apache Common Log Format (CLF): A standard format for web server access logs. Logstash can parse CLF logs using predefined grok patterns.

- Apache Combined Log Format: An extension of CLF that includes the referrer and user agent. Logstash uses specific grok patterns to parse this format.

- Windows Event Log Format: The format used by Windows Event Logs. Winlogbeat is typically used to collect these logs and ships them as structured events, so Logstash usually needs only light filtering or enrichment rather than full parsing.

For example, to parse a plain text log, you might use a grok filter like this:

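    filter {
      grok {
        # Each %{PATTERN:field} pair names a predefined grok pattern and the field that receives the match.
        match => { "message" => "%{TIMESTAMP_ISO8601:timestamp} %{LOGLEVEL:level} %{DATA:process}: %{GREEDYDATA:message}" }
        # Replace the original line with the captured text instead of appending to it.
        overwrite => [ "message" ]
      }
    }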

The `grok` filter uses regular expressions to extract fields like `timestamp`, `level`, `process`, and `message` from the log line.
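To see what such a pattern does outside Logstash, the following sketch applies a hand-written regular expression with the same four named fields to a sample line. The regular expression is a simplified approximation of the grok patterns, not their exact definitions, and the sample log line and function name are made up for illustration.

```python
import re
from typing import Optional

# Simplified stand-ins for TIMESTAMP_ISO8601, LOGLEVEL, DATA and GREEDYDATA.
LINE_RE = re.compile(
    r"^(?P<timestamp>\d{4}-\d{2}-\d{2}[T ]\d{2}:\d{2}:\d{2}"
    r"(?:[.,]\d+)?(?:Z|[+-]\d{2}:?\d{2})?)"
    r" (?P<level>[A-Za-z]+)"
    r" (?P<process>.*?):"
    r" (?P<message>.*)$"
)


def parse_line(line: str) -> Optional[dict]:
    """Return the extracted fields, or None when the line does not match."""
    match = LINE_RE.match(line)
    return match.groupdict() if match else None


if __name__ == "__main__":
    sample = "2024-05-01T12:30:45Z ERROR payment-service: card declined for order 42"
    print(parse_line(sample))
```

Lines that do not match return `None` here; Logstash handles the same situation by tagging the event with `_grokparsefailure`, which is worth monitoring when rolling out a new pattern.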

Advanced ELK Stack Features

The ELK Stack’s capabilities extend far beyond basic log aggregation and visualization. It offers advanced features that enable sophisticated analysis, proactive monitoring, and automated responses to critical events. These features leverage the power of machine learning and robust alerting mechanisms to transform raw log data into actionable insights.

Machine Learning and Alerting Capabilities

Two of these capabilities are covered here: alerting rules configured in Kibana, and anomaly detection based on Elasticsearch’s machine learning features.

Configuring Alerts in Kibana

Kibana’s alerting features enable users to define rules that trigger notifications based on specific conditions within the log data. This allows for proactive identification of issues, enabling rapid response and minimizing downtime.

To configure alerts in Kibana, follow these general steps:

- Access the Alerting Section: Navigate to the “Stack Management” or “Management” section within Kibana, and then select “Alerts” or “Alerting” (labeled “Rules” in recent Kibana versions).

- Create a New Alert: Click the “Create alert” button (or “Create rule”, depending on the version) to begin defining a new alert.

- Choose Alert Type: Select the appropriate alert type based on the data source and desired trigger condition. Common alert types include:

  - Threshold Alerts: Trigger when a metric crosses a defined threshold (e.g., CPU usage exceeds 90%).

  - Anomaly Detection Alerts: Trigger when Elasticsearch’s machine learning features identify unusual patterns or anomalies in the data.

  - Metric Alerts: Trigger when a metric changes based on predefined conditions (e.g., a sudden spike in error logs).

  - Log Alerts: Trigger when specific log events match a defined query.

- Define Conditions: Specify the criteria that must be met to trigger the alert. This involves defining queries, thresholds, and other relevant parameters.

- Set Actions: Configure the actions to be taken when the alert is triggered. Actions can include:

  - Sending email notifications.

  - Posting messages to Slack or other communication platforms.

  - Triggering webhooks to integrate with external systems.

  - Creating Jira tickets.

- Configure Schedule and Frequency: Set the frequency at which the alert is checked and the schedule for notifications.

- Save and Activate: Save the alert configuration and activate it to begin monitoring the data.

For example, to create a threshold alert for high CPU usage:

- Select the “Threshold” alert type.

- Specify the index pattern containing the system metrics.

- Define a query to filter the relevant data (e.g., CPU utilization metrics).

- Set the threshold condition (e.g., “When cpu.usage.percent is above 90”).

- Configure an email action to notify the system administrators.
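The condition in this example can be read as a simple rule over a window of samples. The sketch below shows that rule in isolation; it is an illustration of the logic only, not Kibana's implementation, and the function name and the choice of averaging over the window are assumptions.

```python
from statistics import mean
from typing import Sequence


def threshold_breached(samples: Sequence[float], threshold: float = 90.0) -> bool:
    """True when the average of the samples in the window is above the threshold.

    An empty window never fires, so missing data does not raise an alert.
    """
    return bool(samples) and mean(samples) > threshold


if __name__ == "__main__":
    cpu_last_five_minutes = [95.0, 92.0, 91.0]
    if threshold_breached(cpu_last_five_minutes):
        print("ALERT: average CPU usage above 90%")
```

Averaging over a window rather than reacting to a single sample is one way to avoid notifications for brief spikes; whether missing data should alert is a separate decision that depends on the environment.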

Using Machine Learning Features for Anomaly Detection

Elasticsearch’s machine learning capabilities provide powerful tools for automatically identifying anomalies within log data. This allows for proactive detection of unusual events, such as performance degradations, security breaches, or unexpected application behavior.

Anomaly detection in Elasticsearch is organized around “jobs”, each of which analyzes time-series data and identifies patterns that deviate from the norm.

Here’s how to use machine learning features for anomaly detection:

- Create a Machine Learning Job: Within Kibana, navigate to the Machine Learning app. Select “Create job” and choose the appropriate job type (e.g., “Single metric job” for detecting anomalies in a single metric, or “Population job” for comparing data across different groups).

- Define Data Source: Specify the index pattern containing the data to be analyzed (e.g., application logs, system metrics).

- Configure Analysis: Select the fields to analyze and the desired analysis method (e.g., “mean,” “sum,” “count”).

- Set Anomaly Detection Parameters: Adjust parameters such as the “bucket span” (time interval for analysis) and the “influencer fields” (fields that might influence the anomaly score).

- Start the Job: Once the job is configured, start it to begin analyzing the data and identifying anomalies.

- Visualize Anomalies: Kibana provides visualizations to display the detected anomalies, including charts and tables highlighting unusual events.

- Configure Alerts (Optional): Integrate machine learning results with Kibana’s alerting features to automatically trigger notifications when anomalies are detected.
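The same job can also be described as a JSON configuration and created through Elasticsearch's anomaly detection job API instead of the Kibana wizard. The sketch below builds such a body with the `analysis_config` and `data_description` sections; the helper name, the default bucket span, and the field names in the usage example are assumptions.

```python
from typing import Optional, Sequence


def anomaly_job_config(
    function: str = "mean",
    field: Optional[str] = None,
    bucket_span: str = "15m",
    influencers: Sequence[str] = (),
    time_field: str = "@timestamp",
) -> dict:
    """Build a request body for an anomaly detection job with one detector."""
    detector = {"function": function}
    if field is not None:
        # Functions such as `count` analyze event rates and take no field.
        detector["field_name"] = field
    return {
        "analysis_config": {
            "bucket_span": bucket_span,
            "detectors": [detector],
            "influencers": list(influencers),
        },
        "data_description": {"time_field": time_field},
    }


if __name__ == "__main__":
    import json

    body = anomaly_job_config("mean", "response_time", influencers=["host.name"])
    print(json.dumps(body, indent=2))
```

A job created this way still needs a datafeed that tells it which indices to read before it starts producing results.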

For instance, consider a scenario where an e-commerce website experiences a sudden increase in latency. Using machine learning, you could create a job to monitor the “response_time” field in your web server logs. The job would analyze the historical response times and identify any deviations from the typical patterns. If the response time suddenly spikes, the machine learning job would flag it as an anomaly.

This anomaly could then trigger an alert, notifying the operations team to investigate the issue immediately.

Another real-world case involves security monitoring. A machine learning job can be set up to analyze network traffic logs, identifying unusual patterns like a surge in failed login attempts or an unusual number of connections from a specific IP address. Such anomalies might indicate a potential security breach, allowing security teams to take immediate action to mitigate the threat.
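To build intuition for what "deviating from the typical pattern" means, here is a deliberately simple z-score check over a list of response times. This is a swapped-in textbook technique, not the model that Elasticsearch's machine learning uses, which is considerably more sophisticated; the function name and sample values are made up.

```python
from statistics import mean, pstdev
from typing import List, Sequence


def zscore_anomalies(values: Sequence[float], threshold: float = 3.0) -> List[int]:
    """Return indices of values more than `threshold` standard deviations from the mean."""
    if len(values) < 2:
        return []
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        return []  # all values identical, nothing stands out
    return [i for i, v in enumerate(values) if abs(v - mu) / sigma > threshold]


if __name__ == "__main__":
    response_times_ms = [120.0] * 20 + [900.0]
    print(zscore_anomalies(response_times_ms))
```

A fixed z-score like this ignores daily or weekly seasonality, which is one reason a learned baseline tends to produce fewer false alarms on real traffic.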

Final Wrap-Up

In conclusion, the ELK Stack stands as a versatile and indispensable tool for effective log management. By mastering its components and understanding its capabilities, organizations can unlock the full potential of their log data, transforming it into a valuable asset for informed decision-making and proactive problem-solving. Whether you’re focused on security, application monitoring, or operational efficiency, the ELK Stack offers a powerful and adaptable solution to meet your needs.

Embracing the ELK Stack empowers businesses to gain deeper insights, optimize performance, and safeguard their digital assets.

Quick FAQs

What is the ELK Stack?

The ELK Stack is a collection of open-source tools: Elasticsearch (search and analytics engine), Logstash (data ingestion and processing pipeline), and Kibana (data visualization and exploration tool), used for log management, monitoring, and analysis.

Why is the ELK Stack used for log management?

The ELK Stack is used for log management because it offers a centralized platform for collecting, storing, searching, analyzing, and visualizing logs from various sources, enabling faster troubleshooting, security analysis, and performance monitoring.

What are the key benefits of using the ELK Stack?

Key benefits include centralized logging, real-time monitoring, efficient search and analysis, scalable data storage, customizable dashboards, and proactive alerting for improved operational efficiency and security.

Is the ELK Stack suitable for all sizes of organizations?

Yes, the ELK Stack is scalable and can be adapted to meet the needs of organizations of all sizes, from small businesses to large enterprises. Its flexible architecture allows for customization and growth.

How does the ELK Stack handle large volumes of log data?

Elasticsearch is designed to handle large volumes of data through its distributed architecture and indexing capabilities, ensuring fast search and analysis even with massive log datasets. Logstash helps to ingest the data efficiently.